Projection of Turn Completion in Incremental Spoken Dialogue Systems

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Projection of Turn Completion in Incremental Spoken Dialogue Systems

Erik Ekstedt Gabriel Skantze

KTH Speech, Music and Hearing KTH Speech, Music and Hearing

Stockholm, Sweden Stockholm, Sweden

erikekst@kth.se skantze@kth.se

Abstract SDSs typically have response delays of around 700-

1000ms. The reason for this is that they typically

The ability to take turns in a fluent way (i.e.,

without long response delays or frequent inter- rely solely on this silence to determine when to

ruptions) is a fundamental aspect of any spo- take the turn, whereas humans also use other cues,

ken dialog system. However, practical speech such as prosody, gaze and syntactic completeness

recognition services typically induce a long re- (Skantze, 2021). Many studies have investigated

sponse delay, as it takes time before the pro- how to include such features in turn-taking mod-

cessing of the user’s utterance is complete. els for SDSs (Ferrer et al., 2002; Sato et al., 2002;

There is a considerable amount of research

Schlangen, 2006; Raux and Eskenazi, 2008; Meena

indicating that humans achieve fast response

times by projecting what the interlocutor will

et al., 2013; Maier et al., 2017; Lala et al., 2019).

say and estimating upcoming turn completions. Another difference between human turn-taking

In this work, we implement this mechanism in and SDSs is that humans do not only react to turn-

an incremental spoken dialog system, by us- yielding cues from the interlocutor. If they were

ing a language model that generates possible simply waiting for a cue and only then started to

futures to project upcoming completion points. formulate a response, psycholinguistic research has

In theory, this could make the system more re- estimated that the response time would be around

sponsive, while still having access to semantic

600-1500ms (Levinson and Torreira, 2015), which

information not yet processed by the speech

recognizer. We conduct a small study which is substantially slower than the observed response

indicates that this is a viable approach for prac- times. This indicates that humans also project turn

tical dialog systems, and that this is a promis- completions in advance, before the turn is complete

ing direction for future research. (Sacks et al., 1974; Levinson and Torreira, 2015;

Garrod and Pickering, 2015).

1 Introduction In this paper, we investigate whether the human

One of the most fundamental conversational be- ability to project future turn completions could be a

haviour of any spoken dialog system (SDS) is that viable option for conversational systems to achieve

of turn-taking, i.e., to take turns without long re- more fluent turn-taking. We constrain our approach

sponse delays or frequent interruptions (Skantze, to the textual domain using a pre-trained conversa-

2021). To achieve this, the system must be able to tional language model to project future words and

correctly identify when the user is yielding the turn, turn-completions.

and it is appropriate to make a response, and when The projection of turn-completions in SDSs can

the user is simply making a mid-utterance pause. have a number of applications. For example, the

In their seminal work, Sacks et al. (1974) de- system could initiate a turn just before the end of

scribe general properties of human-human con- the user’s utterance to minimize response time, or

versation in which they observe that, overwhelm- even take the turn with a small overlap. It could

ingly, one speaker talk at a time and the time be- also give the system more time to generate a re-

tween consecutive turns (response time) is mini- sponse, or be used to address the problem of pro-

mal. For the English language, a typical response cessing delays. For example, SDSs rely heavily on

time is around 200ms and similar response pat- Automatic Speech Recognition (ASR) to extract

terns seem to be consistent across different cul- the text from the user’s speech. Most ASR services

tures (Stivers et al., 2009). Contrary to this, current are associated with a certain latency (Baumann

431

Proceedings of the 22nd Annual Meeting of the Special Interest Group on Discourse and Dialogue, pages 431–437

July 29–31, 2021. ©2021 Association for Computational Linguisticset al., 2017; Addlesee et al., 2020). For turn-taking, the ongoing utterance if less than 80% has been

this means that even if the system has detected that sent. The interrupted utterance is then repeated for

the user has stopped speaking, it is hard to deter- the system’s next response. If the agent completed

mine whether the turn is yielded or not, since the an utterance and the user is inactive for 5 seconds,

final ASR result is not complete yet. a fallback is triggered and the agent continues the

There has been some previous research on pre- conversation by producing a new utterance.

dicting upcoming activity in dialog, such as recog- For the simplicity of our experiment, the dialog

nizing NLU intents on incomplete user speech (De- manager is defined by a set of predetermined ques-

Vault et al., 2009), projecting prosodic informa- tions, where the only possible deviation occurs if

tion and timing (Ward et al., 2010; Baumann and the user provides a too short utterance. If such a

Schlangen, 2011) as well as estimating future voice short utterance is recognized, the system randomly

activity (Skantze, 2017; Roddy et al., 2018; Ward chooses from a set of paraphrased responses that

et al., 2018). However, we are not aware of any encourages the user to elaborate.

previous studies of how a SDS could predict up- In this study, we implement two different turn-

coming words in the user’s speech, and use this for taking policies: the baseline and the projection

managing turn-taking. model. The baseline defines a user turn as complete

once the VAD module is inactive and the ASR has

2 Conversational agent produced its final hypothesis.

For our study, we implemented a SDS that per-

3 Turn-completion projection model

forms an interview with a user, talking about past

travel memories, similar to Johansson et al. (2016). To make projections, we utilize the TurnGPT model

The reason we chose this domain is that the dialog by Ekstedt and Skantze (2020), which is a pre-

manager can be implemented in a very simple way, trained GPT-2 (Radford et al., 2019) language

while the turn-taking can be challenging, as pauses model (LM) fine-tuned on conversational data. The

within the user’s turn might be more frequent than model was trained on data from seven publicly

in, for example, a Q/A system. An example dialog available dialog datasets listed in Appendix A.2.

can be found in Appendix A.1. The model trained until the validation loss reached

A general first step for modelling responsive a minimum, resulting in an average validation per-

turn-taking is to use an incremental dialog archi- plexity of 17.6.

tecture, where the user’s speech is processed in- The model includes special tokens that encode

crementally, so that decisions can be made in a speaker shifts, which we will refer to as turn-

more continuous fashion (Schlangen and Skantze, completions. As shown by Ekstedt and Skantze

2009). For this study, we build upon the recent (2020), the model does not only consider the on-

Retico (Michael, 2020) framework (implemented going user turn, but also benefits from taking the

in Python1 ), which implements the general, ab- larger dialog context into account (i.e., previous

stract model of incremental dialog processing pro- turns by the system and the user).

posed by Schlangen and Skantze (2009). Given the currently recognized user words (and

The system processes incoming user speech and the dialog context), a set of N possible continua-

outputs audio. The incoming incremental audio tions (of length M ) are generated (using a temper-

chunks are processed by a local voice activity de- ature τ and topk sampling). The number of those

tection (VAD) component and streamed to a re- that include turn-completions are counted, which

mote incremental ASR service (Google). The VAD gives a ratio. This ratio then approximates the prob-

triggers on silences of 200ms which defines inter- ability of an “actual” turn-completion point in the

pausal units (IPU). near future. If the ratio is larger than a threshold R,

A user turn is started when both the VAD detects the turn is predicted to be complete.

ongoing speech and the ASR has provided its first In this setup we strive towards simplicity and

hypothesis. If the VAD module activates during only trigger a projection at the end of each user IPU.

an ongoing agent utterance, an interruption compo- However, if new ASR hypotheses are received after

nent is triggered. This module checks how much of this, new projections are made until the system de-

the planned audio has been transmitted and stops cides to take the turn. The projection model uses a

1 maximum silence threshold T as a fallback, which

https://github.com/Uhlo/retico

432triggers a response regardless of the projections. both interactions, the participants were asked to

These different parameters can potentially be annotate the recorded dialogues by labeling mo-

fine-tuned for the specific application (or user). ments where they felt they had been interrupted by

This was not done in our study, and we selected the system. To do this, they were provided with a

values we found reasonable in preliminary tests, graphical tool where they could see the waveforms

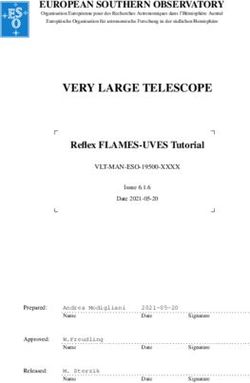

which are shown in Table 1. of the dialogs and play them, as well as inserting

An example taken from one of the interactions labels.

is illustrated in Figure 1 The agent interacted directly over Zoom by con-

necting its microphone to the zoom speakers and

Parameter Value vice versa. All audio was recorded directly on the

IPU 0.2 s agent side, in the same way as in a live setup.

Turn-completion ratio (R) 0.4

Fallback threshold (T ) 1.25 s 5 Results

Sampling

10 subjects interacted with the system, resulting

Continuations (N ) 10

in a total of 20 interactions, with an average dura-

Length (M ) 3

tion of 3 minutes and 43 seconds. The number of

topk 5

questions varied by the amount of triggered elabo-

Temperature (τ ) 1.0

ration requests. The baseline agent asked the users

max context 70

to elaborate 33 times, almost double the amount

Table 1: The parameters for the model. of 17 for the projection model. A transcript of an

interaction is shown in Appendix A.1.

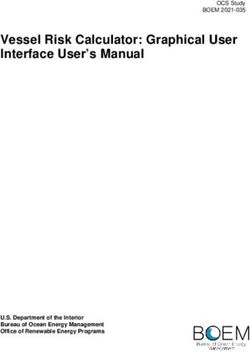

The total number of agent shifts (transitions be-

tween the user and the agent) was 220 for the base-

line and 210 for the projection model. The duration

of these (i.e., response times) are shown in the his-

togram in Figure 2. The average response times

were 1.03 and 0.80 seconds for the baseline and

projection agent, respectively. While this differ-

ence is not very large, it should be noted that the

prediction model has a bimodal distribution (as

seen in Figure 2), representing early predicted turn

shifts and fallbacks. Thus, the model is able to take

the turn quickly at some points, while allowing for

more time at others.

The users annotated 18 of the agent shifts as

Figure 1: Illustration of language projection. The blue interruptions for the baseline, and 28 for the pro-

box represents the agent and the green boxes the recog- jection model. The estimated average cut-in rate,

nized user words at two projection moments. The red

boxes show a subset of projections made by the LM.

defined as the annotated interruptions divided by

the number of agent shifts, was 0.08 for the base-

line and 0.13 for the projection model.

4 Experiment When evaluating the performance of a turn-

taking model, both response time and cut-in rate

To evaluate the model, we conducted an experi- should be taken into account (i.e., both should be

ment over Zoom2 where ten participants had two minimized) (Raux and Eskenazi, 2008). However,

conversations each with the agent (testing the two there is typically also a trade-off between these two

turn-taking policies) about two distinct travel mem- factors. Since both these values were different be-

ories. The participants were asked to choose a tween the baseline and prediction model, they are

memory prior to each agent interaction. We used difficult to compare directly.

two sets of paraphrased questions, assigned ran- One way of doing that is to perform an analysis

domly between the two policies. After completing of what would happen if we reduce the maximum

2

https://zoom.us/ allowed response time (for the prediction model

433els that are specifically trained with data from the

target domain. Contrary to this, we have used a

generic LM (TurnGPT) with a set of basic param-

eters that were not fine-tuned using domain data.

If the LM and the parameters would be fine-tuned,

we could expect further improvements. An anal-

ysis of the perplexity of the LM on the recorded

data shows a rather high perplexity (ppl ≈ 80).

Another obvious improvement would be to also

include prosodic features.

An important question we have not addressed

here is how good the projections are in terms of

Figure 2: A histogram over the response times for each

agent. predicting the last words more exactly (i.e., not just

how well the system predicts whether there will be

a turn completion). Depending on the domain of

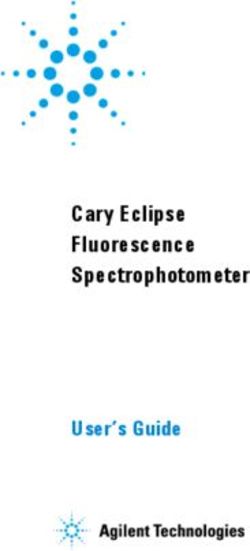

this is the parameter T ). As we do this, the average the system, this might be more or less important.

response time will also be reduced, while the cut-in In this respect, the comparison of the baseline and

rate will increase, since silences in between user prediction models (presented in Figure 3), is some-

IPUs longer than T become both additional cut- what unfair to the prediction model, since we could

ins and agent shifts. The result of this analysis is not reduce the response time of the baseline model

shown in Figure 3. without also truncating the ASR result.

The proposed model make turn-completion deci-

sions exclusively in the textual domain, restricted

by the latency of the ASR, at the end of user IPUs.

In practice, this means that we are more likely to

”project” the already spoken words currently being

processed by the ASR, as opposed to the actual

future activity of the user. This could be mitigated

by using a more reactive IPU trigger, increasing the

projection events during a user utterance, and to use

a longer continuation length, surpassing the latency

of the ASR. If so, the system could potentially also

start to respond before the user has stopped speak-

ing (i.e., producing overlapping speech).

Figure 3: Cut-in rate vs response time. The points rep- Another important aspect is that the interactions

resent the aggregate values over the interactions and the

were all conducted over Zoom which introduces

lines the estimated performance given varying values of

T.

added latencies. This also makes the probability of

cut-ins even greater than it would have been in a

This analysis enables a direct comparison of the live setup.

agents over values where both lines are defined. 7 Conclusion

The figure shows that the prediction agent is more

responsive and produces less interruptions by the In conversation, humans project future turn-

fact that the green line is strictly below the red. The completion points in order to achieve faster re-

greatest difference occurs at around 0.48s on the sponse times. In this paper, we have investigated

x-axis, with a cut-in rate difference of 0.1, given whether it is possible to implement this ability

threshold values of 0.5 and 0.6 seconds for the in a SDS. The projections are done in the tex-

baseline and projection agents, respectively. tual domain by generating future dialog continu-

ations with a conversational LM (TurnGPT). We

6 Discussion conducted a small study and show, as a proof-of-

To our knowledge, all previous work on end-of- concept, that this approach is viable. We note that

utterance-detection in SDSs have relied on mod- there is room for improvements, such as optimizing

434the hyperparameters, train and use a task specific Erik Ekstedt and Gabriel Skantze. 2020. TurnGPT:

LM, project turn-completion at finer increments, a transformer-based language model for predicting

turn-taking in spoken dialog. In Findings of the As-

and add prosodic features. However, the idea to

sociation for Computational Linguistics: EMNLP

use a text-based LM to project turn-completions, 2020, pages 2981–2990, Online. Association for

as a way to improve the turn-taking abilities of a Computational Linguistics.

SDS, is something we believe will be common and

Mihail Eric, Rahul Goel, Shachi Paul, Abhishek Sethi,

useful for the future of conversational systems. Sanchit Agarwal, Shuyang Gao, and Dilek Hakkani-

Tür. 2019. Multiwoz 2.1: Multi-domain dialogue

Acknowledgements state corrections and state tracking baselines. CoRR,

abs/1907.01669.

This work is supported by the Swedish research

council (VR) project ”Prediction and Coordination L. Ferrer, E. Shriberg, and A. Stolcke. 2002. Is the

speaker done yet? faster and more accurate end-

for Conversational AI” (2020-03812) and the Bank

of-utterance detection using prosody. pages 2061–

of Sweden Tercentenary Foundation (RJ) project 2064. Cited By 56.

”Understanding predictive models of turn-taking in

Simon Garrod and Martin J. Pickering. 2015. The

spoken interaction” (P20-0484).

use of content and timing to predict turn transitions.

Frontiers in Psychology, 6:751.

References Martin Johansson, Tatsuro Hori, Gabriel Skantze, Anja

Höthker, and Joakim Gustafson. 2016. Making turn-

Angus Addlesee, Yanchao Yu, and Arash Eshghi. 2020. taking decisions for an active listening robot for

A comprehensive evaluation of incremental speech memory training. In Proceedings of the Interna-

recognition and diarization for conversational AI. In tional Conference on Social Robotics, volume 9979

Proceedings of the 28th International Conference LNAI, pages 940–949.

on Computational Linguistics, pages 3492–3503,

Barcelona, Spain (Online). International Committee Divesh Lala, Koji Inoue, and Tatsuya Kawahara. 2019.

on Computational Linguistics. Smooth turn-taking by a robot using an online con-

tinuous model to generate turn-taking cues. In

Timo Baumann, Casey Kennington, Julian Hough, and 2019 International Conference on Multimodal Inter-

David Schlangen. 2017. Recognising Conversa- action, ICMI ’19, page 226–234, New York, NY,

tional Speech: What an Incremental ASR Should Do USA. Association for Computing Machinery.

for a Dialogue System and How to Get There, pages

421–432. Springer Singapore, Singapore. Sungjin Lee, Hannes Schulz, Adam Atkinson, Jianfeng

Gao, Kaheer Suleman, Layla El Asri, Mahmoud

Timo Baumann and David Schlangen. 2011. Predict- Adada, Minlie Huang, Shikhar Sharma, Wendy Tay,

ing the micro-timing of user input for an incremen- and Xiujun Li. 2019. Multi-domain task-completion

tal spoken dialogue system that completes a user’s dialog challenge. In Dialog System Technology

ongoing turn. In Proceedings of the SIGDIAL 2011 Challenges 8.

Conference, pages 120–129, Portland, Oregon. As-

sociation for Computational Linguistics. Stephen C. Levinson and Francisco Torreira. 2015.

Timing in turn-taking and its implications for pro-

Paweł Budzianowski, Tsung-Hsien Wen, Bo-Hsiang cessing models of language. Frontiers in Psychol-

Tseng, Iñigo Casanueva, Stefan Ultes, Osman Ra- ogy, 6:731.

madan, and Milica Gašić. 2018. MultiWOZ - a

large-scale multi-domain wizard-of-Oz dataset for Yanran Li, Hui Su, Xiaoyu Shen, Wenjie Li, Ziqiang

task-oriented dialogue modelling. In Proceedings of Cao, and Shuzi Niu. 2017. DailyDialog: A manu-

the 2018 Conference on Empirical Methods in Nat- ally labelled multi-turn dialogue dataset. In Proceed-

ural Language Processing, pages 5016–5026, Brus- ings of the Eighth International Joint Conference on

sels, Belgium. Association for Computational Lin- Natural Language Processing (Volume 1: Long Pa-

guistics. pers), pages 986–995, Taipei, Taiwan. Asian Federa-

tion of Natural Language Processing.

Bill Byrne, Karthik Krishnamoorthi, Chinnadhurai

Sankar, Arvind Neelakantan, Daniel Duckworth, Angelika Maier, Julian Hough, and David Schlangen.

Semih Yavuz, Ben Goodrich, Amit Dubey, Kyu- 2017. Towards deep end-of-turn prediction for situ-

Young Kim, and Andy Cedilnik. 2019. Taskmaster- ated spoken dialogue systems. In Proc. Interspeech

1: Toward a realistic and diverse dialog dataset. 2017, pages 1676–1680.

David DeVault, Kenji Sagae, and David Traum. 2009. Raveesh Meena, Gabriel Skantze, and Joakim

Can I finish? learning when to respond to incre- Gustafson. 2013. A data-driven model for tim-

mental interpretation results in interactive dialogue. ing feedback in a map task dialogue system. In

In Proceedings of the SIGDIAL 2009 Conference, Proceedings of the SIGDIAL 2013 Conference,

pages 11–20, London, UK. Association for Compu- pages 375–383, Metz, France. Association for

tational Linguistics. Computational Linguistics.

435Thilo Michael. 2020. Retico: An incremental frame- Gabriel Skantze. 2021. Turn-taking in Conversational

work for spoken dialogue systems. In Proceedings Systems and Human-Robot Interaction : A Review.

of the 21th Annual Meeting of the Special Interest Computer Speech & Language, 67:101178.

Group on Discourse and Dialogue, pages 49–52, 1st

virtual meeting. Association for Computational Lin- Tanya Stivers, N. J. Enfield, Penelope Brown, Christina

guistics. Englert, Makoto Hayashi, Trine Heinemann, Gertie

Hoymann, Federico Rossano, Jan Peter de Ruiter,

Alec Radford, Jeffrey Wu, Rewon Child, David Luan, Kyung-Eun Yoon, and Stephen C. Levinson. 2009.

Dario Amodei, and Ilya Sutskever. 2019. Language Universals and cultural variation in turn-taking in

models are unsupervised multitask learners. OpenAI conversation. Proceedings of the National Academy

Blog, 1(8):9. of Sciences, 106(26):10587–10592.

Filip Radlinski, Krisztian Balog, Bill Byrne, and N. G. Ward, D. Aguirre, G. Cervantes, and O. Fuentes.

Karthik Krishnamoorthi. 2019. Coached conversa- 2018. Turn-taking predictions across languages and

tional preference elicitation: A case study in un- genres using an lstm recurrent neural network. In

derstanding movie preferences. In Proceedings of 2018 IEEE Spoken Language Technology Workshop

the Annual SIGdial Meeting on Discourse and Dia- (SLT), pages 831–837.

logue.

Nigel G. Ward, Olac Fuentes, and Alejandro Vega.

Hannah Rashkin, Eric Michael Smith, Margaret Li, and 2010. Dialog prediction for a general model of

Y-Lan Boureau. 2019. Towards empathetic open- turn-taking. pages 2662–2665. International Speech

domain conversation models: a new benchmark and Communication Association.

dataset. In ACL.

Saizheng Zhang, Emily Dinan, Jack Urbanek, Arthur

Antoine Raux and Maxine Eskenazi. 2008. Optimiz- Szlam, Douwe Kiela, and Jason Weston. 2018. Per-

ing endpointing thresholds using dialogue features sonalizing dialogue agents: I have a dog, do you

in a spoken dialogue system. In Proceedings of the have pets too? In Proceedings of the 56th An-

9th SIGdial Workshop on Discourse and Dialogue, nual Meeting of the Association for Computational

pages 1–10, Columbus, Ohio. Association for Com- Linguistics (Volume 1: Long Papers), pages 2204–

putational Linguistics. 2213, Melbourne, Australia. Association for Com-

putational Linguistics.

Matthew Roddy, Gabriel Skantze, and Naomi Harte.

2018. Investigating Speech Features for Continuous

Turn-Taking Prediction Using LSTMs. In Proceed-

ings of Interspeech, Hyderabad, India.

H Sacks, Emanuel Schegloff, and G Jefferson. 1974.

A simplest systematics for the organization of turn-

taking for conversation. Language, 50:696–735.

Ryo Sato, Ryuichiro Higashinaka, Masafumi Tamoto,

Mikio Nakano, and Kiyoaki Aikawa. 2002. Learn-

ing decision trees to determine turn-taking by spo-

ken dialogue systems. In 7th International Confer-

ence on Spoken Language Processing, ICSLP2002

- INTERSPEECH 2002, Denver, Colorado, USA,

September 16-20, 2002. ISCA.

David Schlangen. 2006. From reaction to predic-

tion: experiments with computational models of

turn-taking. In Proceedings of Interspeech 2006,

Pittsburgh, PA, USA, 2010-2013.

David Schlangen and Gabriel Skantze. 2009. A gen-

eral, abstract model of incremental dialogue process-

ing. In Proceedings of the 12th Conference of the

European Chapter of the ACL (EACL 2009), pages

710–718, Athens, Greece. Association for Computa-

tional Linguistics.

Gabriel Skantze. 2017. Towards a general, continu-

ous model of turn-taking in spoken dialogue using

lstm recurrent neural networks. In Proceedings of

the 18th Annual SIGdial Meeting on Discourse and

Dialogue, pages 220–230, Saarbrücken, Germany.

Association for Computational Linguistics.

436A Appendix User: absolutely[H]

Agent: Please, tell me more about that. [elaboration re-

The dialog in A.1 shows a transcript of a user in- quest]

teraction with the projection agent. We highlight a User: well because America is[H] such[H] a big coun-

negative turn-completion projection by [H] (hold) try it’s a lot of things to see[H] something[H] that

suits[S] all yeah[H]

and a positive with [S] (shift). Note that these

Agent: Let’s [interrupted by ”yeah”]

transcriptions and highlights are shown from the

Agent: Let’s see, do you think that such a trip is suitable

agent’s perspective (i.e., based on what the ASR for families?

has reported), and not on what was actually said. User: yeah[H] I[H] think[H] so[S]

Given a continuation length of M =3, a [S] indi- Agent: Did you do anything that you have not done before?

cates that the model estimates that there is less than User: yeah we were hiking in the mountains near Grand

3 tokens left in the turn. Canyon I hadn’t done[S] that before

The blue text shows words that are recognized Agent: Do you think you are going to revisit any time soon?

by the ASR after the decision to take the turn was User: yes[H] I think so[H]

made. That is, blue text to the right of a [S] had Agent: So, was it a vacation or work related trip?

been spoken by the user at the time of projection, User: it was a[H] vacation[H]

but was not accessible to the model. The orange Agent: For how long were you away?

[...] are used to comment on specific events. User: we were away for three weeks three weeks[H] I[H]

think I almost[S] 4 weeks

A.1 Example dialog Agent: How was the journey home?

Agent: Hi there, in this session I want to talk about one of User: it was a long journey home[H] and it was good[S]

your past travels. Where did you travel? Agent: Thank you for answering my questions. This session

User: I travel to the United States[H] of[H] America[H] is over. Goodbye.

Agent: What mode of transportation did you use to get to

your travel destination? A.2 Datasets

User: we went there by[S] airplane We trained TurnGPT on seven publicly avail-

Agent: Would you say that it was a summer or winter trip? able dialog datasets namely, Taskmaster (Byrne

User: it was a summer[S] trip et al., 2019), MetaLWOZ (Lee et al., 2019), Multi-

Agent: Let’s see, did you try any new foods that you could woz (Budzianowski et al., 2018; Eric et al., 2019),

tell me about? The Coached Conversational Preference Elicita-

User: and yeah we tried a lot of foods then like[H] a[H] tion (Radlinski et al., 2019), Persona (Zhang et al.,

burritos and[H] vegan pizza[H]

2018), DailyDialog (Li et al., 2017), and Empa-

Agent: What is your best memory from the trip?

thetic dialogs (Rashkin et al., 2019).

User: the best memory from the Westland and me and my

husband went to Central Park and it was a sunny

day and we sat there and ate some[H] noodles[S]

Agent: So, did you visit any tourist attractions?

User: yeah we[H] did a lot of tourist attractions like the

World Trade Center[H]

Agent: Can you tell me [interrupted by user cough]

Agent: Can you tell me anything interesting about your

trip?

User: yes it was very interesting to go by car to the[H]

different states we went to with a car[H] from from

Denver in Colorado all the way to Los Angeles

in[H] California[S]

Agent: Do you have any recommendations for people trav-

eling there?

User: yeah I would definitely definitely recommend them

to go to New York and[H] the Grand Canyon[H]

Agent: Let’s see, how was the weather during your trip?

User: it was very warm[H] it was in the summer[H]

Agent: So, could you talk about who you traveled with?

User: yeah I traveled with my husband[H] Rasmus[S]

Agent: Yeah, would you recommend others to go there?

437You can also read