Differential Privacy at Risk: Bridging Randomness and Privacy Budget

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Proceedings on Privacy Enhancing Technologies ; 2021 (1):64–84

Ashish Dandekar*, Debabrota Basu*, and Stéphane Bressan

Differential Privacy at Risk: Bridging

Randomness and Privacy Budget

Abstract: The calibration of noise for a privacy-

preserving mechanism depends on the sensitivity of the

1 Introduction

query and the prescribed privacy level. A data steward

Dwork et al. [12] quantify the privacy level ε in ε-

must make the non-trivial choice of a privacy level that

differential privacy (or ε-DP) as an upper bound on the

balances the requirements of users and the monetary

worst-case privacy loss incurred by a privacy-preserving

constraints of the business entity.

mechanism. Generally, a privacy-preserving mechanism

Firstly, we analyse roles of the sources of randomness,

perturbs the results by adding the calibrated amount of

namely the explicit randomness induced by the noise

random noise to them. The calibration of noise depends

distribution and the implicit randomness induced by

on the sensitivity of the query and the specified pri-

the data-generation distribution, that are involved in

vacy level. In a real-world setting, a data steward must

the design of a privacy-preserving mechanism. The finer

specify a privacy level that balances the requirements

analysis enables us to provide stronger privacy guaran-

of the users and monetary constraints of the business

tees with quantifiable risks. Thus, we propose privacy

entity. For example, Garfinkel et al. [14] report on is-

at risk that is a probabilistic calibration of privacy-

sues encountered when deploying differential privacy as

preserving mechanisms. We provide a composition the-

the privacy definition by the US census bureau. They

orem that leverages privacy at risk. We instantiate the

highlight the lack of analytical methods to choose the

probabilistic calibration for the Laplace mechanism by

privacy level. They also report empirical studies that

providing analytical results.

show the loss in utility due to the application of privacy-

Secondly, we propose a cost model that bridges the gap

preserving mechanisms.

between the privacy level and the compensation budget

We address the dilemma of a data steward in two

estimated by a GDPR compliant business entity. The

ways. Firstly, we propose a probabilistic quantification

convexity of the proposed cost model leads to a unique

of privacy levels. Probabilistic quantification of privacy

fine-tuning of privacy level that minimises the compen-

levels provides a data steward with a way to take quan-

sation budget. We show its effectiveness by illustrat-

tified risks under the desired utility of the data. We refer

ing a realistic scenario that avoids overestimation of the

to the probabilistic quantification as privacy at risk. We

compensation budget by using privacy at risk for the

also derive a composition theorem that leverages privacy

Laplace mechanism. We quantitatively show that com-

at risk. Secondly, we propose a cost model that links the

position using the cost optimal privacy at risk provides

privacy level to a monetary budget. This cost model

stronger privacy guarantee than the classical advanced

helps the data steward to choose the privacy level con-

composition. Although the illustration is specific to the

strained on the estimated budget and vice versa. Con-

chosen cost model, it naturally extends to any convex

vexity of the proposed cost model ensures the existence

cost model. We also provide realistic illustrations of how

of a unique privacy at risk that would minimise the bud-

a data steward uses privacy at risk to balance the trade-

get. We show that the composition with an optimal pri-

off between utility and privacy.

vacy at risk provides stronger privacy guarantees than

Keywords: Differential privacy, cost model, Laplace the traditional advanced composition [12]. In the end,

mechanism we illustrate a realistic scenario that exemplifies how the

DOI 10.2478/popets-2021-0005

Received 2020-05-31; revised 2020-09-15; accepted 2020-09-16.

*Corresponding Author: Debabrota Basu: Dept. of

Computer Sci. and Engg., Chalmers University of Technology,

Göteborg, Sweden, E-mail: basud@chalmers.se

*Corresponding Author: Ashish Dandekar: DI ENS, Stéphane Bressan: National University of Singapore, Singa-

ENS, CNRS, PSL University & Inria, Paris, France, E-mail: pore, E-mail: steph@nus.edu.sg

adandekar@ens.frDifferential Privacy at Risk 65

data steward can avoid overestimation of the budget by the optimal privacy at risk, which is estimated using the

using the proposed cost model by using privacy at risk. cost model, with traditional composition mechanisms –

The probabilistic quantification of privacy levels de- basic and advanced mechanisms [12]. We observe that

pends on two sources of randomness: the explicit ran- it gives stronger privacy guarantees than the ones ob-

domness induced by the noise distribution and the im- tained by the advanced composition without sacrificing

plicit randomness induced by the data-generation distri- on the utility of the mechanism.

bution. Often, these two sources are coupled with each In conclusion, benefits of the probabilistic quantifi-

other. We require analytical forms of both sources of cation i.e., of the privacy at risk are twofold. It not

randomness as well as an analytical representation of only quantifies the privacy level for a given privacy-

the query to derive a privacy guarantee. Computing the preserving mechanism but also facilitates decision-

probabilistic quantification of different sources of ran- making in problems that focus on the privacy-utility

domness is generally a challenging task. Although we trade-off and the compensation budget minimisation.

find multiple probabilistic privacy definitions in the lit-

erature [16, 27] 1 , we miss an analytical quantification

bridging the randomness and privacy level of a privacy-

preserving mechanism. We propose a probabilistic quan-

2 Background

tification, namely privacy at risk, that further leads to

We consider a universe of datasets D. We explicitly men-

analytical relation between privacy and randomness. We

tion when we consider that the datasets are sampled

derive a composition theorem with privacy at risk for

from a data-generation distribution G with support D.

mechanisms with the same as well as varying privacy

Two datasets of equal cardinality x and y are said to be

levels. It is an extension of the advanced composition

neighbouring datasets if they differ in one data point. A

theorem [12] that deals with a sequential and adaptive

pair of neighbouring datasets is denoted by x ∼ y. In

use of privacy-preserving mechanisms. We also prove

this work, we focus on a specific class of queries called

that privacy at risk satisfies convexity over privacy levels

numeric queries. A numeric query f is a function that

and a weak relaxation of the post-processing property.

maps a dataset into a real-valued vector, i.e. f : D → Rk .

To the best of our knowledge, we are the first to ana-

For instance, a sum query returns the sum of the values

lytically derive the proposed probabilistic quantification

in a dataset.

for the widely used Laplace mechanism [10].

In order to achieve a privacy guarantee, researchers

The privacy level proposed by the differential pri-

use a privacy-preserving mechanism, or mechanism in

vacy framework is too abstract a quantity to be inte-

short, which is a randomised algorithm that adds noise

grated in a business setting. We propose a cost model

to the query from a given family of distributions.

that maps the privacy level to a monetary budget. The

Thus, a privacy-preserving mechanism of a given fam-

proposed model is a convex function of the privacy level,

ily, M(f, Θ), for the query f and the set of parame-

which further leads to a convex cost model for privacy

ters Θ of the given noise distribution, is a function i.e.

at risk. Hence, it has a unique probabilistic privacy level

M(f, Θ) : D → R. In the case of numerical queries, R is

that minimises the cost. We illustrate this using a real-

Rk . We denote a privacy-preserving mechanism as M,

istic scenario in a GDPR-compliant business entity that

when the query and the parameters are clear from the

needs an estimation of the compensation budget that it

context.

needs to pay to stakeholders in the unfortunate event

of a personal data breach. The illustration, which uses Definition 1 (Differential Privacy [12]). A privacy-

the proposed convex cost model, shows that the use of preserving mechanism M, equipped with a query f and

probabilistic privacy levels avoids overestimation of the with parameters Θ, is (ε, δ)-differentially private if for

compensation budget without sacrificing utility. The il- all Z ⊆ Range(M) and x, y ∈ D such that x ∼ y:

lustration naturally extends to any convex cost model.

In this work, we comparatively evaluate the privacy P(M(f, Θ)(x) ∈ Z) ≤ eε × P(M(f, Θ)(y) ∈ Z) + δ.

guarantees using privacy at risk of the Laplace mecha-

nism. We quantitatively compare the composition under An (ε, 0)-differentially private mechanism is also simply

said to be ε-differentially private. Often, ε-differential

privacy is referred to as pure differential privacy whereas

1 A widely-used (ε, δ)-differential privacy is not a probabilistic

(ε, δ)-differential privacy is referred as approximate dif-

relaxation of differential privacy [29]. ferential privacy.Differential Privacy at Risk 66

A privacy-preserving mechanism provides perfect pri-

vacy if it yields indistinguishable outputs for all neigh-

3 Privacy at Risk: A Probabilistic

bouring input datasets. The privacy level ε quantifies Quantification of Randomness

the privacy guarantee provided by ε-differential privacy.

For a given query, the smaller the value of the ε, the The parameters of a privacy-preserving mechanism are

qualitatively higher the privacy. A randomised algo- calibrated using the privacy level and the sensitivity of

rithm that is ε-differentially private is also ε0 -differential the query. A data steward needs to choose an appro-

private for any ε0 > ε. priate privacy level for practical implementation. Lee

In order to satisfy ε-differential privacy, the param- et al. [25] show that the choice of an actual privacy

eters of a privacy-preserving mechanism requires a cal- level by a data steward in regard to her business re-

culated calibration. The amount of noise required to quirements is a non-trivial task. Recall that the privacy

achieve a specified privacy level depends on the query. level in the definition of differential privacy corresponds

If the output of the query does not change drastically to the worst case privacy loss. Business users are how-

for two neighbouring datasets, then a small amount of ever used to taking and managing risks, if the risks can

noise is required to achieve a given privacy level. The be quantified. For instance, Jorion [21] defines Value at

measure of such fluctuations is called the sensitivity of Risk that is used by risk analysts to quantify the loss in

the query. The parameters of a privacy-preserving mech- investments for a given portfolio and an acceptable con-

anism are calibrated using the sensitivity of the query fidence bound. Motivated by the formulation of Value

that quantifies the smoothness of a numeric query. at Risk, we propose to use the use of probabilistic pri-

vacy level. It provides us with a finer tuning of an ε0 -

Definition 2 (Sensitivity). The sensitivity of a query differentially private privacy-preserving mechanism for

f : D → Rk is defined as a specified risk γ.

∆f , max kf (x) − f (y)k1 . Definition 5 (Privacy at Risk). For a given data gen-

x,y∈D

x∼y

erating distribution G, a privacy-preserving mecha-

The Laplace mechanism is a privacy-preserving mecha- nism M, equipped with a query f and with parame-

nism that adds scaled noise sampled from a calibrated ters Θ, satisfies ε-differential privacy with a privacy at

Laplace distribution to the numeric query. risk 0 ≤ γ ≤ 1 if, for all Z ⊆ Range(M) and x, y sam-

pled from G such that x ∼ y:

Definition 3 ([35]). The Laplace distribution with

P(M(f, Θ)(x) ∈ Z)

mean zero and scale b > 0 is a probability distribution P ln > ε ≤ γ, (1)

P(M(f, Θ)(y) ∈ Z)

with probability density function

where the outer probability is calculated with respect to

1 |x|

Lap(b) , exp − , the probability space Range(M ◦ G) obtained by apply-

2b b

ing the privacy-preserving mechanism M on the data-

where x ∈ R. We write Lap(b) to denote a random vari- generation distribution G.

able X ∼ Lap(b)

If a privacy-preserving mechanism is ε0 -differentially

Definition 4 (Laplace Mechanism [10]). Given any private for a given query f and parameters Θ, for

function f : D → Rk and any x ∈ D, the Laplace any privacy level ε ≥ ε0 , the privacy at risk is 0. We

Mechanism is defined as are interested in quantifying the risk γ with which an

ε0 -differentially private privacy-preserving mechanism

∆f ∆f also satisfies a stronger ε-differential privacy, i.e., with

Lε (x) , M f, (x) = f (x) + (L1 , ..., Lk ),

ε ε < ε0 .

∆

where Li is drawn from Lap εf and added to the ith

component of f (x). Unifying Probabilistic and Random DP

∆ Interestingly, Equation (1) unifies the notions of proba-

Theorem 1 ([10]). The Laplace mechanism, Lε0f , is

bilistic differential privacy and random differential pri-

ε0 -differentially private.

vacy by accounting for both sources of randomness in

a privacy-preserving mechanism. Machanavajjhala etDifferential Privacy at Risk 67

al. [27] define probabilistic differential privacy that in- over multiple evaluations with a square root dependence

corporates the explicit randomness of the noise distribu- on the number of evaluations. In this section, we provide

tion of the privacy-preserving mechanism, whereas Hall the composition theorem for privacy at risk.

et al. [16] define random differential privacy that incor-

porates the implicit randomness of the data-generation Definition 6 (Privacy loss random variable). For a

distribution. In probabilistic differential privacy, the privacy-preserving mechanism M : D → R, any two

outer probability is computed over the sample space of neighbouring datasets x, y ∈ D and an output r ∈ R, the

Range(M) and all datasets are equally probable. value of the privacy loss random variable C is defined

as:

P(M(x) = r)

C(r) , ln .

P(M(y) = r)

Connection with Approximate DP

Despite a resemblance with probabilistic relaxations of Lemma 1. If a privacy-preserving mechanism M sat-

differential privacy [13, 16, 27] due to the added param- isfies ε0 -differential privacy, then

eter δ, (ε, δ)-differential privacy (Definition 1) is a non-

probabilistic variant [29] of regular ε-differential privacy. P[|C| ≤ ε0 ] = 1.

Indeed, unlike the auxiliary parameters in probabilis-

Theorem 3. For all ε0 , ε, γ, δ > 0, the class of ε0 -

tic relaxations, such as γ in privacy at risk (ref. Def-

differentially private mechanisms, which satisfy (ε, γ)-

inition 5), the parameter δ of approximate differential

privacy at risk under a uniform data-generation distri-

privacy is an absolute slack that is independent of the

bution, are (ε0 , δ)-differential privacy under n-fold com-

sources of randomness. For a specified choice of ε and

position where

δ, one can analytically compute a matching value of δ

for a new value of ε2 . Therefore, as other probabilistic

r

0 1

relaxations, privacy at risk cannot be directly related ε = ε0 2n ln + nµ,

δ

to approximate differential privacy. An alternative is to

find out a privacy at risk level γ for a given privacy level where µ = 12 [γε2 + (1 − γ)ε20 ].

(ε, δ) while the original noise satisfies (ε0 , δ).

Proof. Let, M1...n : D → R1 × R2 × ... × Rn denote the

Theorem 2. If a privacy preserving mechanism satis- n-fold composition of privacy-preserving mechanisms

{Mi : D → Ri }n i=1 . Each ε0 -differentially private M

i

fies (ε, γ) privacy at risk, it also satisfies (ε, γ) approxi-

mate differential privacy. also satisfies (ε, γ)-privacy at risk for some ε ≤ ε0 and

appropriately computed γ. Consider any two neighbour-

We obtain this reduction as the probability measure ing datasets x, y ∈ D. Let,

induced by the privacy preserving mechanism and ( n

)

^ P(Mi (x) = ri )

data generating distribution on any output set Z ⊆ B = (r1 , ..., rn ) > eε

P(Mi (y) = ri )

Range(M) is additive. 3 The proof of the theorem is i=1

in Appendix A. Using the technique in [12, Theorem 3.20], it suffices to

show that P(M1...n (x) ∈ B) ≤ δ.

Consider

3.1 Composition Theorem

P(M1...n (x) = (r1 , ..., rn ))

ln

The application of ε-differential privacy to many real- P(M1...n (y) = (r1 , ..., rn ))

n

world problem suffers from the degradation of privacy Y P(Mi (x) = ri )

= ln

guarantee, i.e., privacy level, over the composition. The P(Mi (y) = ri )

i=1

basic composition theorem [12] dictates that the pri- n n

vacy guarantee degrades linearly in the number of eval-

X P(Mi (x) = ri ) X

= ln , Ci (2)

uations of the mechanism. The advanced composition P(Mi (y) = ri )

i=1 i=1

theorem [12] provides a finer analysis of the privacy loss

where C i in the last line denotes the privacy loss random

variable related to Mi .

2 For any 0 < ε0 ≤ ε, any (ε, δ)-differentially private mechanism Consider an ε-differentially private mechanism Mε

0

also satisfies (ε0 , (eε − eε + δ))-differential privacy. and ε0 -differentially private mechanism Mε0 . Let Mε0

3 The converse is not true as explained before. satisfy (ε, γ)-privacy at risk for ε ≤ ε0 and appropriatelyDifferential Privacy at Risk 68

computed γ. Each Mi can be simulated as the mech- A detailed discussion and analysis of proving such het-

anism Mε with probability γ and the mechanism Mε0 erogeneous composition theorems is available in [22,

otherwise. Therefore, the privacy loss random variable Section 3.3].

for each mechanism Mi can be written as In fact, if we consider both sources of randomness,

the expected value of the loss function must be com-

C i = γCεi + (1 − γ)Cεi 0 puted by using the law of total expectation.

where Cεi denotes the privacy loss random variable as- E[C] = Ex,y∼G [E[C]|x, y]

sociated with the mechanism Mε and Cεi 0 denotes the Therefore, the exact computation of privacy guaran-

privacy loss random variable associated with the mech- tees after the composition requires access to the data-

anism Mε0 . Using [5, Remark 3.4], we can bound the generation distribution. We assume a uniform data-

mean of every privacy loss random variable as: generation distribution while proving Theorem 3. We

1 2 can obtain better and finer privacy guarantees account-

µ , E[C i ] ≤ [γε + (1 − γ)ε20 ].

2 ing for data-generation distribution, which we keep as a

future work.

We have a collection of n independent privacy random

variables C i ’s such that P |C i | ≤ ε0 = 1. Using Hoeffd-

ing’s bound [18] on the sample mean for any β > 0,

" #

3.2 Convexity and Post-Processing

nβ 2

1X i

P i

C ≥ E[C ] + β ≤ exp − 2 . We show that privacy at risk satisfies the convexity

n 2ε0

i property and does not satisfy the post-processing prop-

Rearranging the inequality by renaming the upper erty.

bound on the probability as δ, we get:

Lemma 2 (Convexity). For a given ε0 -differentially

" #

private privacy-preserving mechanism, privacy at risk

r

X

i 1

P C ≥ nµ + ε0 2n ln ≤ δ. satisfies the convexity property.

δ

i

Proof. Let M be a mechanism that satisfies ε0 -

differential privacy. By the definition of the privacy at

Theorem 3 is an analogue, in the privacy at risk setting, risk, it also satisfies (ε1 , γ1 )-privacy at risk as well as

of the advanced composition of differential privacy [12, (ε2 , γ2 )-privacy at risk for some ε1 , ε2 ≤ ε0 and appro-

Theorem 3.20] under a constraint of independent evalu- priately computed values of γ1 and γ2 . Let M1 and

ations. Note that if one takes γ = 0, then we obtain the M2 denote the hypothetical mechanisms that satisfy

exact same formula as in [12, Theorem 3.20]. It provides (ε1 , γ1 )-privacy at risk and (ε2 , γ2 )-privacy at risk re-

a sanity check for the consistency of composition using spectively. We can write privacy loss random variables

privacy at risk. as follows:

Corollary 1 (Heterogeneous Composition). For all C 1 ≤ γ1 ε1 + (1 − γ1 )ε0

εl , ε, γl , δ > 0 and l ∈ {1, . . . , n}, the composition of C 2 ≤ γ2 ε2 + (1 − γ2 )ε0

{εl }nl=1 -differentially private mechanisms, which satisfy where C 1 and C 2 denote privacy loss random variables

(ε, γl )-privacy at risk under a uniform data-generation

for M1 and M2 .

distribution, also satisfies (ε0 , δ)-differential privacy

Let us consider a privacy-preserving mechanism M

where v

u n

! that uses M1 with a probability p and M2 with a prob-

0

u X

2 1 ability (1−p) for some p ∈ [0, 1]. By using the techniques

ε = 2t εl ln + µ,

δ in the proof of Theorem 3, the privacy loss random vari-

l=1

able C for M can be written as:

where µ = 21 [ε2 ( − γl )ε2l ].

Pn Pn

l=1 γl ) + l=1 (1

C = pC 1 + (1 − p)C 2

Proof. The proof follows from the same argument as ≤ γ 0 ε0 + (1 − γ 0 )ε0

that of Theorem 3 of bounding the loss random variable

where

at step l using γl Cεl + (1 − γl )Cεl l and then applying the

pγ1 ε1 + (1 − p)γ2 ε2

concentration inequality. ε0 =

pγ1 + (1 − p)γ2Differential Privacy at Risk 69

γ 0 = (1 − pγ1 − (1 − p)γ2 ) at risk. Therefore, we keep privacy at risk for Gaussian

mechanism as the future work.

Thus, M satisfies (ε0 , γ 0 )-privacy at risk. This proves In this section, we instantiate privacy at risk for the

that privacy at risk satisfies convexity [23, Axiom 2.1.2]. Laplace mechanism in three cases: two cases involving

two sources of randomness and a third case involving the

Meiser [29] proved that a relaxation of differential pri- coupled effect. These three different cases correspond to

vacy that provides probabilistic bounds on the privacy three different interpretations of the confidence level,

loss random variable does not satisfy post-processing represented by the parameter γ, corresponding to three

property of differential privacy. Privacy at risk is indeed interpretations of the support of the outer probability

such a probabilistic relaxation. in Definition 5. In order to highlight this nuance, we

denote the confidence levels corresponding to the three

Corollary 2 (Post-processing). Privacy at risk does cases and their three sources of randomness as γ1 , γ2 ,

not satisfy the post-processing property for every pos- and γ3 , respectively.

sible mapping of the output.

Though privacy at risk is not preserved after post- 4.1 The Case of Explicit Randomness

processing, it yields a weaker guarantee in terms of ap-

proximate differential privacy after post-processing. The In this section, we study the effect of the explicit ran-

proof involves reduction of privacy at risk to approxi- domness induced by the noise sampled from Laplace

mate differential privacy and preservation of approxi- distribution. We provide a probabilistic quantification

mate differential privacy under post-processing. for fine tuning for the Laplace mechanism. We fine-tune

the privacy level for a specified risk under by assuming

Lemma 3 (Weak Post-processing). Let M : D → R ⊆ that the sensitivity of the query is known a priori.

Rk be a mechanism that satisfy (ε, γ)-privacy at risk and ∆

For a Laplace mechanism Lε0f calibrated with sensi-

f : R → R0 be any arbitrary data independent map- tivity ∆f and privacy level ε0 , we present the analytical

ping. Then, f ◦ M : D → R0 would also satisfy (ε, γ)- formula relating privacy level ε and the risk γ1 in The-

approximate differential privacy. orem 4. The proof is available in Appendix B.

Proof. Let us fix a pair of neighbouring datasets x and Theorem 4. The risk γ1 ∈ [0, 1] with which a Laplace

y, and also an event Z 0 ⊆ R0 . Let us define pre-image ∆

Mechanism Lε0f , for a numeric query f : D → Rk sat-

of Z 0 as Z , {r ∈ R : f (r) ∈ Z}. Now, we get isfies a privacy level ε ≥ 0 is given by

P(f ◦ M(x) ∈ Z 0 ) = P(M(x) ∈ Z) P(T ≤ ε)

γ1 = , (3)

ε

≤ e P(M(y) ∈ Z) + γ P(T ≤ ε0 )

(a)

where T is a random variable that follows a distribution

= eε P(f ◦ M(y) ∈ Z 0 ) + δ

with the following density function.

(a) is a direct consequence of Theorem 2.

21−k tk− 2 Kk− 1 (t)ε0

1

PT (t) = √ 2

2πΓ(k)∆f

4 Privacy at Risk for Laplace where Kn− 1 is the Bessel function of second kind.

2

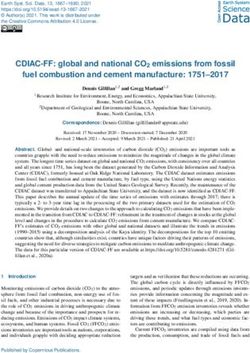

Mechanism Figure 1a shows the plot of the privacy level against

risk for different values of k and for a Laplace mecha-

The Laplace and Gaussian mechanisms are widely used nism L1.0

1.0 . As the value of k increases, the amount of

privacy-preserving mechanisms in the literature. The noise added in the output of numeric query increases.

Laplace mechanism satisfies pure ε-differential privacy Therefore, for a specified privacy level, the privacy at

whereas the Gaussian mechanism satisfies approximate risk level increases with the value of k.

(ε, δ)-differential privacy. As previously discussed, it is The analytical formula representing γ1 as a func-

not straightforward to establish a connection between tion of ε is bijective. We need to invert it to obtain the

the non-probabilistic parameter δ of approximate differ- privacy level ε for a privacy at risk γ1 . However the an-

ential privacy and the probabilistic bound γ of privacy alytical closed form for such an inverse function is notDifferential Privacy at Risk 70

∆S

explicit. We use a numerical approach to compute pri- For the Laplace mechanism Lε f calibrated with

vacy level for a given privacy at risk from the analytical sampled sensitivity ∆Sf and privacy level ε, we evalu-

formula of Theorem 4. ate the empirical risk γˆ2 . We present the result in The-

Result for a Real-valued Query. For the case orem 5. The proof is available in Appendix C.

k = 1, the analytical derivation is fairly straightfor-

ward. In this case, we obtain an invertible closed-form Theorem 5. Analytical bound on the empirical risk,

∆S

of a privacy level for a specified risk. It is presented in γˆ2 , for Laplace mechanism Lε f with privacy level ε

Equation 4. and sampled sensitivity ∆Sf for a query f : D → Rk is

1 2

ε = ln (4) γˆ2 ≥ γ2 (1 − 2e−2ρ n ) (5)

1 − γ1 (1 − e−ε0 )

where n is the number of samples used for estimation of

Remarks on ε0 . For k = 1, Figure 1b shows the

the sampled sensitivity and ρ is the accuracy parameter.

plot of privacy at risk level ε versus privacy at risk γ1

γ2 denotes the specified absolute risk.

for the Laplace mechanism L1.0 ε0 . As the value of ε0 in-

creases, the probability of Laplace mechanism generat-

The error parameter ρ controls the closeness between

ing higher value of noise reduces. Therefore, for a fixed

the empirical cumulative distribution of the sensitivity

privacy level, privacy at risk increases with the value of

to the true cumulative distribution of the sensitivity.

ε0 . The same observation is made for k > 1.

Lower the value of the error, closer is the empirical cu-

mulative distribution to the true cumulative distribu-

tion. Mathematically,

4.2 The Case of Implicit Randomness

ρ ≥ sup |FSn (∆) − FS (∆)|,

∆

In this section, we study the effect of the implicit ran-

domness induced by the data-generation distribution to where FSn is the empirical cumulative distribution of

provide a fine tuning for the Laplace mechanism. We sensitivity after n samples and FS is the actual cumu-

fine-tune the risk for a specified privacy level without lative distribution of sensitivity.

assuming that the sensitivity of the query. Figure 2 shows the plot of number of samples as a

If one takes into account randomness induced by function of the privacy at risk and the error parameter.

the data-generation distribution, all pairs of neighbour- Naturally, we require higher number of samples in order

ing datasets are not equally probable. This leads to es- to have lower error rate. The number of samples reduces

timation of sensitivity of a query for a specified data- as the privacy at risk increases. The lower risk demands

generation distribution. If we have access to an ana- precision in the estimated sampled sensitivity, which in

lytical form of the data-generation distribution and to turn requires larger number of samples.

the query, we could analytically derive the sensitivity If the analytical form of the data-generation distri-

distribution for the query. In general, we have access bution is not known a priori, the empirical distribution

to the datasets, but not the data-generation distribu- of sensitivity can be estimated in two ways. The first

tion that generates them. We, therefore, statistically way is to fit a known distribution on the available data

estimate sensitivity by constructing an empirical dis- and later use it to build an empirical distribution of the

tribution. We call the sensitivity value obtained for a sensitivities. The second way is to sub-sample from a

specified risk from the empirical cumulative distribu- large dataset in order to build an empirical distribution

tion of sensitivity the sampled sensitivity (Definition 7). of the sensitivities. In both of these ways, the empirical

However, the value of sampled sensitivity is simply an distribution of sensitivities captures the inherent ran-

estimate of the sensitivity for a specified risk. In or- domness in the data-generation distribution. The first

der to capture this additional uncertainty introduced way suffers from the goodness of the fit of the known

by the estimation from the empirical sensitivity distri- distribution to the available data. An ill-fit distribution

bution rather than the true unknown distribution, we does not reflect the true data-generation distribution

compute a lower bound on the accuracy of this esti- and hence introduces errors in the sensitivity estima-

mation. This lower bound yields a probabilistic lower tion. Since the second way involves subsampling, it is

bound on the specified risk. We refer to it as empirical immune to this problem. The quality of sensitivity es-

risk. For a specified absolute risk γ2 , we denote by γˆ2 timates obtained by sub-sampling the datasets depend

corresponding empirical risk. on the availability of large population.Differential Privacy at Risk 71

1.0 k =1 1.0 ǫ0 =1.0 ρ = 0.0001

k =2 ǫ0 =1.5 108 ρ = 0.0010

k =3 ǫ0 =2.0 ρ = 0.0100

ǫ0 =2.5

0.8 0.8 ρ = 0.1000

107

106

Privacy level (ǫ)

Privacy level (ǫ)

0.6 0.6

Sample size (n)

105

0.4 0.4

104

0.2 0.2 103

102

0.0 0.0

0.0 0.2 0.4 0.6 0.8 1.0 0.0 0.2 0.4 0.6 0.8 1.0

Privacy at Risk (γ1 ) Privacy at Risk (γ1 ) 0.0 0.2 0.4 0.6 0.8 1.0

Privacy at Risk (γ2 )

(a) (b)

Fig. 1. Privacy level ε for varying privacy at risk γ1 for Laplace mechanism L1.0

ε0 . In Fig. 2. Number of samples n for varying pri-

Figure 1a, we use ε0 = 1.0 and different values of k. In Figure 1b, for k = 1 and vacy at risk γ2 for different error parameter

different values of ε0 . ρ.

Let, G denotes the data-generation distribution, ei- 4.3 The Case of Explicit and Implicit

ther known apriori or constructed by subsampling the Randomness

available data. We adopt the procedure of [38] to sam-

ple two neighbouring datasets with p data points each. In this section, we study the combined effect of both

We sample p − 1 data points from G that are common to explicit randomness induced by the noise distribution

both of these datasets and later two more data points, and implicit randomness in the data-generation distri-

independently. From those two points, we allot one data bution respectively. We do not assume the knowledge of

point to each of the two datasets. the sensitivity of the query.

Let, Sf = kf (x) − f (y)k1 denotes the sensitivity We estimate sensitivity using the empirical cumula-

random variable for a given query f , where x and y tive distribution of sensitivity. We construct the empiri-

are two neighbouring datasets sampled from G. Using cal distribution over the sensitivities using the sampling

n pairs of neighbouring datasets sampled from G, we technique presented in the earlier case. Since we use

construct the empirical cumulative distribution, Fn , for the sampled sensitivity (Definition 7) to calibrate the

the sensitivity random variable. Laplace mechanism, we estimate the empirical risk γˆ3 .

∆S

For Laplace mechanism Lε0 f calibrated with sam-

Definition 7. For a given query f and for a specified

pled sensitivity ∆Sf and privacy level ε0 , we present

risk γ2 , sampled sensitivity, ∆Sf , is defined as the value

the analytical bound on the empirical sensitivity γˆ3 in

of sensitivity random variable that is estimated using

Theorem 6 with proof in the Appendix D.

its empirical cumulative distribution function, Fn , con-

structed using n pairs of neighbouring datasets sampled Theorem 6. Analytical bound on the empirical risk

from the data-generation distribution G. γˆ3 ∈ [0, 1] to achieve a privacy level ε > 0 for Laplace

∆S

∆Sf , Fn−1 (γ2 ) mechanism Lε0 f with sampled sensitivity ∆Sf of a

query f : D → Rk is

2

γˆ3 ≥ γ3 (1 − 2e−2ρ n ) (6)

If we knew analytical form of the data generation dis-

where n is the number of samples used for estimating

tribution, we could analytically derive the cumulative

the sensitivity, ρ is the accuracy parameter. γ3 denotes

distribution function of the sensitivity, F , and find the

the specified absolute risk defined as:

sensitivity of the query as ∆f = F −1 (1). Therefore, in

order to have the sampled sensitivity close to the sensi- P(T ≤ ε)

γ3 = · γ2

tivity of the query, we require the empirical cumulative P(T ≤ ηε0 )

distributions to be close to the cumulative distribution Here, η is of the order of the ratio of the true sensitivity

of the sensitivity. We use this insight to derive the ana- of the query to its sampled sensitivity.

lytical bound in the Theorem 5.

The error parameter ρ controls the closeness between

the empirical cumulative distribution of the sensitivity

to the true cumulative distribution of the sensitivity.

Figure 3 shows the dependence of the error parameterDifferential Privacy at Risk 72

1.0 ρ=0.010 1.0 n=10000

ρ=0.012 n=15000

ρ=0.015 n=20000

ρ=0.020 n=25000

0.8 0.8

Privacy level (ǫ)

Privacy level (ǫ)

0.6 0.6

0.4 0.4

0.2 0.2

0.0 0.0

0.2 0.4 0.6 0.8 1.0 0.2 0.4 0.6 0.8 1.0

Privacy at Risk (γ3 ) Privacy at Risk (γ3 )

(a) (b)

∆S

Fig. 3. Dependence of error and number of samples on the privacy at risk for Laplace mechanism L1.0 f . For the figure on the left

hand side, we fix the number of samples to 10000. For the Figure 3b we fix the error parameter to 0.01.

on the number of samples. In Figure 3a, we observe that

for a fixed number of samples and a privacy level, the

5 Minimising Compensation

privacy at risk decreases with the value of error param- Budget for Privacy at Risk

eter. For a fixed number of samples, smaller values of

the error parameter reduce the probability of similarity Many service providers collect users’ data to enhance

between the empirical cumulative distribution of sensi- user experience. In order to avoid misuse of this data,

tivity and the true cumulative distribution. Therefore, we require a legal framework that not only limits the

we observe the reduction in the risk for a fixed privacy use of the collected data but also proposes reparative

level. In Figure 3b, we observe that for a fixed value of measures in case of a data leak. General Data Protection

error parameter and a fixed level of privacy level, the Regulation (GDPR)4 is such a legal framework.

risk increases with the number of samples. For a fixed Section 82 in GDPR states that any person who suf-

value of the error parameter, larger values of the sam- fers from material or non-material damage as a result of

ple size increase the probability of similarity between a personal data breach has the right to demand compen-

the empirical cumulative distribution of sensitivity and sation from the data processor. Therefore, every GDPR

the true cumulative distribution. Therefore, we observe compliant business entity that either holds or processes

the increase in the risk for a fixed privacy level. personal data needs to secure a certain budget in the

Effect of the consideration of implicit and explicit scenario of the personal data breach. In order to re-

randomness is evident in the analytical expression for duce the risk of such an unfortunate event, the business

γ3 in Equation 7. Proof is available in Appendix D. The entity may use privacy-preserving mechanisms that pro-

privacy at risk is composed of two factors whereas the vide provable privacy guarantees while publishing their

second term is a privacy at risk that accounts for inher- results. In order to calculate the compensation budget

ent randomness. The first term takes into account the for a business entity, we devise a cost model that maps

implicit randomness of the Laplace distribution along the privacy guarantees provided by differential privacy

with a coupling coefficient η. We define η as the ratio and privacy at risk to monetary costs. The discussions

of the true sensitivity of the query to its sampled sen- demonstrate the usefulness of probabilistic quantifica-

sitivity. We provide an approximation to estimate η in tion of differential privacy in a business setting.

the absence of knowledge of the true sensitivity. It can

be found in Appendix D.

P(T ≤ ε)

γ3 , · γ2 (7)

P(T ≤ ηε0 )

4 https://gdpr-info.eu/Differential Privacy at Risk 73

5.1 Cost Model for Differential Privacy Equation 9.

Let E be the compensation budget that a business en- Eεpar

0

(ε, γ) , γEεdp + (1 − γ)Eεdp

0

(9)

tity has to pay to every stakeholder in case of a per- Note that the analysis in this section is specific to

sonal data breach when the data is processed without the cost model in Equation 8. It naturally extends to

any provable privacy guarantees. Let Eεdp be the com- any choice of convex cost model.

pensation budget that a business entity has to pay to

every stakeholder in case of a personal data breach when

the data is processed with privacy guarantees in terms 5.2.1 Existence of Minimum Compensation Budget

of ε-differential privacy.

Privacy level, ε, in ε-differential privacy is the quan- We want to find the privacy level, say εmin , that yields

tifier of indistinguishability of the outputs of a privacy- the lowest compensation budget. We do that by min-

preserving mechanism when two neighbouring datasets imising Equation 9 with respect to ε.

are provided as inputs. When the privacy level is zero,

the privacy-preserving mechanism outputs all results Lemma 4. For the choice of cost model in Equation 8,

with equal probability. The indistinguishability reduces Eεpar

0 (ε, γ) is a convex function of ε.

with increase in the privacy level. Thus, privacy level of

zero bears the lowest risk of personal data breach and By Lemma 4, there exists a unique εmin that minimises

the risk increases with the privacy level. Eεdp needs to the compensation budget for a specified parametrisa-

be commensurate to such a risk and, therefore, it needs tion, say ε0 . Since the risk γ in Equation 9 is itself

to satisfy the following constraints. a function of privacy level ε, analytical calculation of

1. For all ε ∈ R≥0 , Eεdp ≤ E. εmin is not possible in the most general case. When the

2. Eεdp is a monotonically increasing function of ε. output of the query is a real number, i. e. k = 1, we de-

3. As ε → 0, Eεdp → Emin where Emin is the unavoid- rive the analytic form (Equation 4) to compute the risk

able cost that business entity might need to pay in under the consideration of explicit randomness. In such

case of personal data breach even after the privacy a case, εmin is calculated by differentiating Equation 9

measures are employed. with respect to ε and equating it to zero. It gives us

4. As ε → ∞, Eεdp → E. Equation 10 that we solve using any root finding tech-

nique such as Newton-Raphson method [37] to compute

There are various functions that satisfy these con- εmin .

straints. In absence of any further constraints, we model

1 − eε

1 1

Eεdp as defined in Equation (8). − ln 1 − = (10)

ε ε2 ε0

c

Eεdp , Emin + Ee− ε . (8)

Eεdp has two parameters, namely c > 0 and Emin ≥ 0. 5.2.2 Fine-tuning Privacy at Risk

c controls the rate of change in the cost as the privacy

level changes and Emin is a privacy level independent For a fixed budget, say B, re-arrangement of Equation 9

bias. For this study, we use a simplified model with c = 1 gives us an upper bound on the privacy level ε. We use

and Emin = 0. the cost model with c = 1 and Emin = 0 to derive the

upper bound. If we have a maximum permissible ex-

pected mean absolute error T , we use Equation 12 to

5.2 Cost Model for Privacy at Risk obtain a lower bound on the privacy at risk level. Equa-

tion 11 illustrates the upper and lower bounds that dic-

Let, Eεpar

0 (ε, γ) be the compensation that a business en- tate the permissible range of ε that a data publisher can

tity has to pay to every stakeholder in case of a per- promise depending on the budget and the permissible

sonal data breach when the data is processed with an error constraints.

ε0 -differentially private privacy-preserving mechanism −1

1 γE

along with a probabilistic quantification of privacy level. ≤ ε ≤ ln (11)

Use of such a quantification allows us to provide a

T B − (1 − γ)Eεdp

0

stronger privacy guarantee viz. ε < ε0 for a specified Thus, the privacy level is constrained by the ef-

privacy at risk at most γ. Thus, we calculate Eεpar

0 using fectiveness requirement from below and by the mone-Differential Privacy at Risk 74

sure of effectiveness for the Laplace mechanism.

1

120000

E |L1ε (x) − f (x)| = (12)

ε

100000 Equation 12 makes use of the fact that the sensitivity of

Bpar (in dollars)

the count query is one. Suppose that the health centre

80000 requires the expected mean absolute error of at most

two in order to maintain the quality of the published

statistics. In this case, the privacy level has to be at

60000

least 0.5.

ǫ0 =0.7

ǫ0 =0.6 In order to compute the budget, the health cen-

40000 ǫ0 =0.5

tre requires an estimate of E. Moriarty et al. [30] show

0.0 0.1 0.2 0.3 0.4

Privacy level (ǫ)

0.5 0.6 0.7

that the incremental cost of premiums for the health

insurance with morbid obesity ranges between $5467 to

Fig. 4. Variation in the budget for Laplace mechanism L1ε0 under $5530. With reference to this research, the health cen-

privacy at risk considering explicit randomness in the Laplace tre takes $5500 as an estimate of E. For the staff size

mechanism for the illustration in Section 5.3. of 100 and the privacy level 0.5, the health centre uses

tary budget from above. [19] calculate upper and lower Equation 8 in its simplified setting to compute the total

bound on the privacy level in the differential privacy. budget of $74434.40.

They use a different cost model owing to the scenario Is it possible to reduce this budget without degrad-

of research study that compensates its participants for ing the effectiveness of the Laplace mechanism? We

their data and releases the results in a differentially show that it is possible by fine-tuning the Laplace mech-

private manner. Their cost model is different than our anism. Under the consideration of the explicit random-

GDPR inspired modelling. ness introduced by the Laplace noise distribution, we

show that ε0 -differentially private Laplace mechanism

also satisfies ε-differential privacy with risk γ, which is

5.3 Illustration computed using the formula in Theorem 4. Fine-tuning

allows us to get a stronger privacy guarantee, ε < ε0

Suppose that the health centre in a university that com- that requires a smaller budget. In Figure 4, we plot the

plies to GDPR publishes statistics of its staff health budget for various privacy levels. We observe that the

checkup, such as obesity statistics, twice in a year. In privacy level 0.274, which is same as εmin computed

January 2018, the health centre publishes that 34 out of by solving Equation 10, yields the lowest compensation

99 faculty members suffer from obesity. In July 2018, the budget of $37805.86. Thus, by using privacy at risk, the

health centre publishes that 35 out of 100 faculty mem- health centre is able to save $36628.532 without sacri-

bers suffer from obesity. An intruder, perhaps an analyst ficing the quality of the published results.

working for an insurance company, checks the staff list-

ings in January 2018 and July 2018, which are publicly

available on website of the university. The intruder does 5.4 Cost Model and the Composition of

not find any change other than the recruitment of John Laplace Mechanisms

Doe in April 2018. Thus, with high probability, the in-

truder deduces that John Doe suffers from obesity. In Convexity of the proposed cost function enables us to es-

order to avoid such a privacy breach, the health centre timate the optimal value of the privacy at risk level. We

decides to publish the results using the Laplace mecha- use the optimal privacy value to provide tighter bounds

nism. In this case, the Laplace mechanism operates on on the composition of Laplace mechanism. In Figure 5,

the count query. we compare the privacy guarantees obtained by using

In order to control the amount of noise, the health basic composition theorem [12], advanced composition

centre needs to appropriately set the privacy level. Sup- theorem [12] and the composition theorem for privacy

pose that the health centre decides to use the expected at risk. We comparatively evaluate them for composi-

mean absolute error, defined in Equation 12, as the mea- tion of Laplace mechanisms with privacy levels 0.1, 0.5

and 1.0. We compute the privacy level after composition

by setting δ to 10−5 .Differential Privacy at Risk 75

Advanced composition for ǫ0 = 0.10, δ = 10−5 Advanced composition for ǫ0 = 0.50, δ = 10−5 Advanced composition for ǫ0 = 1.00, δ = 10−5

350

30 Basic Composition[10] Basic Composition[10] Basic Composition[10]

Advanced Composition[10] 140 Advanced Composition[10] Advanced Composition[10]

Composition with Privacy at Risk Composition with Privacy at Risk 300 Composition with Privacy at Risk

25

120

Privacy level after composition (ǫ′ )

Privacy level after composition (ǫ′ )

Privacy level after composition (ǫ′ )

250

20 100

200

80

15

150

60

10

100

40

5

20 50

0 0 0

0 50 100 150 200 250 300

0 50 100 150 200 250 300 0 50 100 150 200 250 300

Number of compositions (n)

Number of compositions (n) Number of compositions (n)

(a) L10.1 satisfies (0.08, 0.80)-privacy at risk. (b) L10.5 satisfies (0.27, 0.61)-privacy at risk. (c) L11.0 satisfies (0.42, 0.54)-privacy at risk.

Fig. 5. Comparing the privacy guarantee obtained by basic composition and advanced composition [12] with the composition obtained

using optimal privacy at risk that minimises the cost of Laplace mechanism L1ε0 . For the evaluation, we set δ = 10−5 .

We observe that the use of optimal privacy at risk which optimally satisfies (0.08, 0.8)-privacy at risk. We

provided significantly stronger privacy guarantees as list the calculated privacy guarantees in Table 1. The re-

compared to the conventional composition theorems. ported privacy guarantee is the mean privacy guarantee

Advanced composition theorem is known to provide over 30 experiments.

stronger privacy guarantees for mechanism with smaller

εs. As we observe in Figure 5c and Figure 5b, the compo-

sition provides strictly stronger privacy guarantees than

basic composition, in the cases where the advanced com-

6 Balancing Utility and Privacy

position fails.

In this section, we empirically illustrate and discuss the

steps that a data steward needs to take and the issues

that she needs to consider in order to realise a required

Comparison with the Moment Accountant

privacy at risk level ε for a confidence level γ when seek-

ing to disclose the result of a query.

Papernot et al. [33, 34] empirically showed that the

We consider a query that returns the parameter

privacy guarantees provided by the advanced compo-

of a ridge regression [31] for an input dataset. It is a

sition theorem are quantitatively worse than the ones

basic and widely used statistical analysis tool. We use

achieved by the state-of-the-art moment accountant [1].

the privacy-preserving mechanism presented by Ligett

The moment accountant evaluates the privacy guaran-

et al. [26] for ridge regression. It is a Laplace mech-

tee by keeping track of various moments of privacy loss

anism that induces noise in the output parameters of

random variables. The computation of the moments is

the ridge regression. The authors provide a theoretical

performed by using numerical methods on the specified

upper bound on the sensitivity of the ridge regression,

dataset. Therefore, despite the quantitative strength of

which we refer as sensitivity, in the experiments.

privacy guarantee provided by the moment accountant,

it is qualitatively weaker, in a sense that it is specific to

the dataset used for evaluation, in constrast to advanced

6.1 Dataset and Experimental Setup.

composition.

Papernot et al. [33] introduced the PATE frame-

We conduct experiments on a subset of the 2000 US

work that uses the Laplace mechanism to provide pri-

census dataset provided by Minnesota Population Cen-

vacy guarantees for a machine learning model trained

ter in its Integrated Public Use Microdata Series [39].

in an ensemble manner. We comparatively evaluate the

The census dataset consists of 1% sample of the original

privacy guarantees provided by their moment accoun-

census data. It spans over 1.23 million households with

tant on MNIST dataset with the privacy guarantees ob-

records of 2.8 million people. The value of several at-

tained using privacy at risk. We do so by using privacy

tributes is not necessarily available for every household.

at risk while computing a data dependent bound [33,

We have therefore selected 212, 605 records, correspond-

Theorem 3]. Under the identical experimental setup,

ing to the household heads, and 6 attributes, namely,

we use a 0.1-differentially private Laplace mechanism,Differential Privacy at Risk 76

Privacy level for moment accountant(ε)

δ #Queries

with differential privacy [33] with privacy at risk

10−5 100 2.04 1.81

10−5 1000 8.03 5.95

Table 1. Comparative analysis of privacy levels computed using three composition theorems when applied to 0.1-differentially private

Laplace mechanism, which optimally satisfies (0.08, 0.8)-privacy at risk. The observations for the moment accountant on MNIST

datasets are taken from [33].

Age, Gender, Race, Marital Status, Education, Income, at risk level ε, the confidence level γ1 and the privacy

whose values are available for the 212, 605 records. level of noise ε0 . Specifically, for given ε and γ1 , she

In order to satisfy the constraint in the derivation of computes ε0 by solving the equation:

the sensitivity of ridge regression [26], we, without loss

of generality, normalise the dataset in the following way. γ1 P(T ≤ ε0 ) − P(T ≤ ε) = 0.

We normalise Income attribute such that the values lie Since the equation does not give an analytical formula

in [0, 1]. We normalise other attributes such that l2 norm for ε0 , the data steward uses a root finding algorithm

of each data point is unity. such as Newton-Raphson method [37] to solve the above

All experiments are run on Linux machine with equation. For instance, if she needs to achieve a privacy

12-core 3.60GHz Intel® Core i7™processor with 64GB at risk level ε = 0.4 with confidence level γ1 = 0.6,

memory. Python® 2.7.6 is used as the scripting lan- she can substitute these values in the above equation

guage. and solve the equation to get the privacy level of noise

ε0 = 0.8.

Figure 6 shows the variation of privacy at risk level

6.2 Result Analysis ε and confidence level γ1 . It also depicts the variation of

utility loss for different privacy at risk levels in Figure 6.

We train ridge regression model to predict Income using In accordance to the data steward’s problem, if she

other attributes as predictors. We split the dataset into needs to achieve a privacy at risk level ε = 0.4 with

the training dataset (80%) and testing dataset (20%). confidence level γ1 = 0.6, she obtains the privacy level

We compute the root mean squared error (RMSE) of of noise to be ε0 = 0.8. Additionally, we observe that

ridge regression, trained on the training data with regu- the choice of privacy level 0.8 instead of 0.4 to calibrate

larisation parameter set to 0.01, on the testing dataset. the Laplace mechanism gives lower utility loss for the

We use it as the metric of utility loss. Smaller the value data steward. This is the benefit drawn from the risk

of RMSE, smaller the loss in utility. For a given value taken under the control of privacy at risk.

of privacy at risk level, we compute 50 runs of an ex- Thus, she uses privacy level ε0 and the sensitivity

periment of a differentially private ridge regression and of the function to calibrate Laplace mechanism.

report the means over the 50 runs of the experiment. The Case of Implicit Randomness (cf. Sec-

Let us now provide illustrative experiments under tion 4.2). In this scenario, the data steward does not

the three different cases. In every scenario, the data know the sensitivity of ridge regression. She assesses

steward is given a privacy at risk level ε and the con- that she can afford to sample at most n times from the

fidence level γ and wants to disclose the parameters of population dataset. She understands the effect of the

a ridge regression model that she trains on the census uncertainty introduced by the statistical estimation of

dataset. She needs to calibrate the Laplace mechanism the sensitivity. Therefore, she uses the confidence level

by estimating either its privacy level ε0 (Case 1) or sen- for empirical privacy at risk γˆ2 .

sitivity (Case 2) or both (Case 3) to achieve the privacy Given the value of n, she chooses the value of the

at risk required the ridge regression query. accuracy parameter using Figure 2. For instance, if the

The Case of Explicit Randomness (cf. Sec- number of samples that she can draw is 104 , she chooses

tion 4.1). In this scenario, the data steward knows the the value of the accuracy parameter ρ = 0.01. Next, she

sensitivity for the ridge regression. She needs to compute uses Equation 13 to determine the value of probabilistic

the privacy level, ε0 , to calibrate the Laplace mecha- tolerance, α, for the sample size n. For instance, if the

nism. She uses Equation 3 that links the desired privacy data steward is not allowed to access more than 15, 000Differential Privacy at Risk 77

Fig. 6. Utility, measured by RMSE (right y-axis), and privacy at Fig. 7. Empirical cumulative distribution of the sensitivities of ridge

risk level ε for Laplace mechanism (left y-axis) for varying confi- regression queries constructed using 15000 samples of neighboring

dence levels γ1 . datasets.

samples, for the accuracy of 0.01 the probabilistic toler- Equation 25 and Equation 23, she calculates:

ance is 0.9.

γˆ3 P(T ≤ ηε0 ) − αγ2 P(T ≤ ε) = 0

α = 1 − 2e(−2ρ n)

2

(13)

She solves such an equation for ε0 using the root find-

She constructs an empirical cumulative distribution over

ing technique such as Newton-Raphson method [37]. For

the sensitivities as described in Section 4.2. Such an

instance, if she needs to achieve a privacy at risk level

empirical cumulative distribution is shown in Figure 7.

ε = 0.4 with confidence levels γˆ3 = 0.9 and γ2 = 0.9, she

Using the computed probabilistic tolerance and desired

can substitute these values and the values of tolerance

confidence level γˆ2 , she uses equation in Theorem 5 to

parameter and sampled sensitivity, as used in the pre-

determine γ2 . She computes the sampled sensitivity us-

vious experiments, in the above equation. Then, solving

ing the empirical distribution function and the confi-

the equation leads to the privacy level of noise ε0 = 0.8.

dence level for privacy ∆Sf at risk γ2 . For instance,

Thus, she re-calibrates the Laplace mechanism with

using the empirical cumulative distribution in Figure 7

privacy level ε0 , sets the number of samples to be n and

she calculates the value of the sampled sensitivity to

sampled sensitivity ∆Sf .

be approximately 0.001 for γ2 = 0.4 and approximately

0.01 for γ2 = 0.85

Thus, she uses privacy level ε, sets the number of

samples to be n and computes the sampled sensitivity 7 Related Work

∆Sf to calibrate the Laplace mechanism.

The Case of Explicit and Implicit Random- Calibration of Mechanisms. Researchers have pro-

ness (cf. Section 4.3). In this scenario, the data stew- posed different privacy-preserving mechanisms to make

ard does not know the sensitivity of ridge regression. She different queries differentially private. These mecha-

is not allowed to sample more than n times from a pop- nisms can be broadly classified into two categories. In

ulation dataset. For a given confidence level γ2 and the one category, the mechanisms explicitly add calibrated

privacy at risk ε, she calibrates the Laplace mechanism noise, such as Laplace noise in the work of [11] or Gaus-

using illustration for Section 4.3. The privacy level in sian noise in the work of [12], to the outputs of the query.

this calibration yields utility loss that is more than her In the other category, [2, 6, 17, 41] propose mechanisms

requirement. Therefore, she wants to re-calibrate the that alter the query function so that the modified func-

Laplace mechanism in order to reduce utility loss. tion satisfies differentially privacy. Privacy-preserving

For the re-calibration, the data steward uses pri- mechanisms in both of these categories perturb the orig-

vacy level of the pre-calibrated Laplace mechanism, i.e. inal output of the query and make it difficult for a ma-

ε, as the privacy at risk level and she provides a new licious data analyst to recover the original output of

confidence level for empirical privacy at risk γˆ3 . Using the query. These mechanisms induce randomness us-You can also read