
16th Euromicro Conference on Parallel, Distributed and Network-Based Processing

Runtime Locality Optimizations of Distributed Java Applications

Christian Hütter, Thomas Moschny

University of Karlsruhe

{huetter, moschny}@ipd.uni-karlsruhe.de

Abstract

In distributed Java environments, locality of objects and threads is crucial for the performance of parallel applications. We introduce dynamic locality optimizations in the context of JavaParty, a programming and runtime environment for parallel Java applications. Until now, an optimal distribution of the individual objects of an application had to be found manually, which has several drawbacks. Based on a former static approach, we develop a dynamic methodology for automatic locality optimizations. By measuring processing and communication times of remote method calls at runtime, a placement strategy can be computed that maps each object of the distributed system to its optimal virtual machine. Objects are then migrated between the processing nodes in order to realize this placement strategy. We evaluate our approach by comparing the performance of two benchmark applications with manually distributed versions. It is shown that our approach is particularly suitable for dynamic applications where the optimal object distribution varies at runtime.

1. Introduction

Java enables developers to express concurrency and to create parallel applications by means of threads. Performance gains over a sequential solution can only be expected if the virtual machine is executed on a system with several processors. JavaParty [10] extends Java by a distributed runtime environment that consists of several Java virtual machines. The virtual machines are executed on the nodes of a cluster of workstations. Each virtual machine has its own address space, but can perform remote method invocations on other virtual machines. Thus, JavaParty allows for performance gains through parallelism in a distributed environment.

Merely distributing objects and threads over virtual machines is not sufficient for achieving performance gains. Since the placement of an object determines the processor that executes its methods, only methods of objects that reside on different machines can actually be executed in parallel. So we have two conflicting goals: On the one hand, groups of objects with frequent and expensive communication should be placed on the same node. On the other hand, objects should be distributed over the available processors to enable parallelism.

Until now, JavaParty has provided a mechanism to create remote objects on specific nodes of a cluster environment. The developer is responsible for distributing the individual objects and thus for distributing the activities to the processing nodes. Such a manual approach has several disadvantages. First, the object distribution depends on the specific topology for which the program is compiled, so the distribution strategy must be adapted to each target platform. Second, manually specifying the location of every single object creation is tedious. Third, the optimal placement of objects often cannot be determined statically for dynamic applications where the optimal location of objects changes at runtime.

The work at hand focuses on the automatic generation of a distribution strategy for remote objects. The generation is based on runtime information of the distributed system. Thus, the programmer does not have to worry about a proper object distribution and can focus on the solution of the problem. Even if the initial object distribution generated by JavaParty is not optimal, the locality of the application is optimized at runtime.

In Chapter 2 we give a brief overview of JavaParty. Chapter 3 discusses related work in the field of distributed Java applications. In Chapter 4 we describe the design of our approach and explain some basic concepts that are necessary for further understanding.

0-7695-3089-3/08 $25.00 © 2008 IEEE 149

DOI 10.1109/PDP.2008.76

Authorized licensed use limited to: National Kaohsiung University of Applied Sciences. Downloaded on May 08,2010 at 14:53:01 UTC from IEEE Xplore. Restrictions apply.

Chapter 5 presents the implementation and discusses the problems we encountered. In Chapter 6 we evaluate the effectiveness and efficiency of our work using two benchmark applications. Finally, Chapter 7 concludes this paper.

2. JavaParty

JavaParty extends Java by a pre-processor and a runtime environment for distributed parallel programming in workstation clusters. It transparently adds remote objects to Java whose methods can be invoked from remote virtual machines. Programmers can use the keyword remote to indicate that a class should be remotely accessible. Instances of remote classes are called remote objects, regardless of which virtual machine they reside on. The runtime system offers a mechanism to migrate remote objects between machines.

Java Remote Method Invocation (RMI) [14] permits the creation of classes whose instances can be accessed remotely from other JVMs. JavaParty uses RMI as its target and thus inherits some of its advantages, e.g. distributed garbage collection. It uses a special pre-processor to generate pure Java source code that is consistent with the RMI requirements. This approach hides the increased program complexity due to RMI constraints as well as the additional code for creation and access of remote objects.

JavaParty code is transformed into regular Java code plus RMI hooks. The resulting RMI portions are fed into the RMI compiler to generate stubs and skeletons. Since existing code might be using the original classes, handle objects are introduced that hide the RMI classes from the user. This approach maintains the Java object semantics such that the programmer can use remote objects just like normal Java objects.

3. Related work

This section gives an overview of existing systems for distributed execution of Java applications. The goal of these systems is to gain increased computational power while preserving Java's parallel programming paradigm. In [3], distributed runtime systems are categorized into cluster-aware VMs, compiler-based DSM systems, and systems using standard JVMs.

The first category consists of systems that use a non-standard JVM on each node to execute distributed applications. The most important examples of such systems are cJVM [2] and JESSICA2 [16]. Both approaches provide a complete single system image of a standard JVM. The advantage of using non-standard JVMs is increased efficiency due to the ability to access machine resources directly rather than through the JVM. A weakness of such systems is their lack of cross-platform compatibility.

cJVM aims at virtualizing a cluster and at obtaining high performance for regular Java applications. A number of optimization techniques are used to address caching, locality of execution and object placement. The smart proxy mechanism of cJVM can be used as a framework to implement different locality protocols. Currently, cJVM is unable to use a standard JIT compiler and does not implement a custom one.

JESSICA2 applies transparent Java thread migration to multi-threaded Java applications. The migration mechanism allows distributing threads among cluster nodes at runtime. To support shared object access, a global object space has been implemented. The system includes some important features, e.g. load balancing through thread migration, an adaptive home-migration protocol, and a custom JIT compiler.

Other systems compile the source or class files of a Java application into native machine code. Both Hyperion [1] and Jackal [15] support standard Java and do not change its programming paradigm. The usage of a custom source or byte code compiler has the disadvantage that such a compiler must continually be adapted to changes of the Java language specification. The advantage of compiler-based systems is their increased performance because of compiler optimizations and direct access to system resources.

Hyperion offers an infrastructure for heterogeneous clusters providing the illusion of a single JVM. The original Java threads are mapped onto native system threads which are spread across the processing nodes to provide load balancing. The Java memory model is implemented by a DSM protocol, so the original semantics of the Java language is kept unchanged. To achieve portability, the Hyperion platform has been built on top of a portable runtime environment which supports various networks and communication interfaces.

Jackal is a DSM system for Java which consists of an optimizing compiler and a runtime system. In combination with compiler optimizations, Jackal applies various runtime optimizations to increase locality and manage large data structures. The runtime system includes a distributed garbage collector and provides thread and object location transparency.

While most systems use standard JVMs, only a few of them preserve the standard Java programming paradigm. Examples of such systems are JavaSymphony [4] and ADAJ [5]. Using standard
JVMs has the advantage that such systems can use heterogeneous nodes which locally optimize their performance using a JIT compiler. The main disadvantage of such systems is their relatively slow access to system resources.

JavaSymphony is a programming environment for distributed and parallel computing that exploits heterogeneous resources. In order to use JavaSymphony efficiently, the programmer has to explicitly control data locality and load balancing. The structure of the computing resources has to be defined manually. Since all objects must be created, mapped, and freed explicitly, the handling of remote objects can be quite cumbersome. JavaSymphony does not offer assistance for those manual steps, so the semi-automatic distribution is likely to be error-prone.

ADAJ is an environment for the development and execution of distributed Java applications. ADAJ is designed on top of JavaParty and is therefore most closely related to our work. The ADAJ project deals with placement and migration of Java objects. It automatically deploys parallel Java applications on a cluster of workstations by monitoring the application behavior. ADAJ contains a load-balancing mechanism that considers changes in the evolution of the application. While the focus of ADAJ is to balance the load between the individual JVMs, we concentrate on optimizing the locality of the distributed application.

4. Design

4.1. Locality optimizations

Philippsen and Haumacher proposed locality optimizations in JavaParty by means of static type analysis [11]. They classify approaches to deal with locality in parallel object-oriented languages in three categories: (i) explicit placement and migration specified by the programmer by means of annotations, (ii) static object distribution where the compiler tries to predict the best node for a new object, and (iii) dynamic object distribution based on a runtime system that keeps track of the call graph. JavaParty already provides mechanisms for manual object placement and migration, so we focus on static and dynamic object distribution in the following.

4.1.1. Static object distribution. Although a Java thread cannot migrate, the control flow (called activity in the following) can: when a method of a remote object is invoked, the activity conceptually leaves the JVM of the caller and is continued at the callee's JVM, where it competes with other activities. Due to time-slicing and blocking, competing activities on one JVM decrease the total parallelism. Additional costs are introduced by the remote method invocation itself because of communication latency and bandwidth limitations. Thus, the general distribution strategy must be activity-centered: different activities should be placed onto different JVMs. Objects should be co-located with activities such that method invocation is local. Local method invocation avoids network communication and competing activities.

Haumacher proposes an iterative procedure [6] to assign objects to activities and then activities to virtual machines. Based on a static type analysis, estimates for two values are derived: work(t, a) describes the computing time that activity t spends on methods of object a, and cost(t, a) describes the communication time that would be necessary if t and a were not located in the same address space. Object a should be placed such that the computing time of the activity t in whose address space a is created is maximized. At the same time, the sum of the communication costs incurred by those activities t_i that are assigned to remote virtual machines should be minimized.

We assume an initial setting where all objects are located in a single address space with a single processor such that all method calls are local. In order to distribute objects to activities, we suppose that each activity is running in a different address space with its own processor. By placing object a in the address space of activity t, method calls of a by t can be executed in parallel with other activities. Thus, work(t, a) indicates the time that is gained by placing a within the address space of t. This placement pays off if work(t, a) is greater than the sum of the communication costs cost(t_i, a) that the other activities t_i spend to access methods of a. So each object a can be mapped to the activity t in whose address space it should be placed:

$$\mathrm{activity}(a) = t \iff t \text{ maximizes } \Big( \mathrm{work}(t, a) - \sum_{t_i \neq t} \mathrm{cost}(t_i, a) \Big)$$

Since usually more activities are used than virtual machines are available, several activities must share a virtual machine. Thus, it is necessary to identify groups of activities that should be executed on a shared virtual machine. The parallelization win of each activity can be estimated by mapping each object to its optimal activity. The parallelization win is computed as the sum of work(t, a) for objects a which reside in the address space of activity t minus the sum of cost(t, b) for objects b that are placed remotely:

$$\mathrm{win}(t) = \sum_{\{a \,\mid\, \mathrm{activity}(a) = t\}} \mathrm{work}(t, a) \;-\; \sum_{\{b \,\mid\, \mathrm{activity}(b) \neq t\}} \mathrm{cost}(t, b)$$
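
To make the two formulas concrete, here is a minimal Java sketch. It assumes, purely for illustration, that work(t, a) and cost(t, a) are already available as dense matrices indexed [activity][object]; the class PlacementSketch and its methods are hypothetical and not part of JavaParty. It computes activity(a) for every object, evaluates win(t), and orders the activities by decreasing win, which is the order in which activities are assigned to virtual machines.

```java
import java.util.Arrays;

/**
 * Hypothetical sketch of the placement rule of Section 4.1.1.
 * Assumption: work[t][a] and cost[t][a] are dense matrices indexed by
 * activity t and object a; they are not JavaParty data structures.
 */
final class PlacementSketch {

    /** activity(a): the t that maximizes work(t, a) - sum over t_i != t of cost(t_i, a). */
    static int activityOf(double[][] work, double[][] cost, int a) {
        // Total communication cost of all activities on object a.
        double totalCost = 0.0;
        for (double[] row : cost) {
            totalCost += row[a];
        }
        int best = 0;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (int t = 0; t < work.length; t++) {
            // The sum over t_i != t equals the total minus t's own cost.
            double score = work[t][a] - (totalCost - cost[t][a]);
            if (score > bestScore) { // ties keep the lower activity index
                bestScore = score;
                best = t;
            }
        }
        return best;
    }

    /** Maps every object to its optimal activity. */
    static int[] assignObjects(double[][] work, double[][] cost) {
        int[] activity = new int[work[0].length];
        for (int a = 0; a < activity.length; a++) {
            activity[a] = activityOf(work, cost, a);
        }
        return activity;
    }

    /** win(t): work on objects mapped to t minus cost(t, b) for objects b mapped elsewhere. */
    static double win(double[][] work, double[][] cost, int[] activity, int t) {
        double total = 0.0;
        for (int a = 0; a < activity.length; a++) {
            if (activity[a] == t) {
                total += work[t][a];
            } else {
                total -= cost[t][a];
            }
        }
        return total;
    }

    /** Activity indices sorted by decreasing parallelization win. */
    static Integer[] byDecreasingWin(double[][] work, double[][] cost, int[] activity) {
        Integer[] order = new Integer[work.length];
        for (int t = 0; t < order.length; t++) {
            order[t] = t;
        }
        Arrays.sort(order, (x, y) -> Double.compare(
                win(work, cost, activity, y), win(work, cost, activity, x)));
        return order;
    }
}
```

For example, with the made-up values work = {{10, 1}, {2, 8}} and cost = {{0, 3}, {4, 0}} (two activities, two objects), object 0 is mapped to activity 0 (score 10 - 4 = 6 versus 2 - 0 = 2) and object 1 to activity 1 (score 8 - 3 = 5 versus 1 - 0 = 1), giving win(0) = 10 - 3 = 7 and win(1) = 8 - 4 = 4. Computing the total cost once per object keeps activityOf linear in the number of activities instead of quadratic.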

The sum of work(t, a) represents the computing time that activity t spends in its own address space. This work is done in parallel to other activities if no synchronization mechanisms are used. The time that is spent for communication with other address spaces is represented by the sum of cost(t, b) for all objects b that are not assigned to activity t. Note that we charge the cost of a remote call to the activity that invoked the remote method, not to the activity that actually executes the method call.

Activities are assigned to the available virtual machines in decreasing order of their parallelization wins until a single activity has been scheduled to each virtual machine. For each remaining activity, a new parallelization win is computed that accounts for the potential co-location with other activities. The activity is assigned to the group of activities with the highest combined parallelization win. This process is repeated until all activities are scheduled to their optimal virtual machine.

The result of the distribution analysis is a mapping of each remote object to the virtual machine on which it should be placed.

4.1.2. Dynamic object distribution. While Philippsen and Haumacher focus on static object distribution through type analysis, we rely on dynamic object distribution to improve locality. This approach is reported to have two disadvantages: First, there is no knowledge about future call graphs or invocation frequencies. Second, the creation of objects that cannot migrate often results in a broad redistribution of other objects. The first problem is inherent to dynamic approaches, but can be softened by using heuristics to predict future behavior. The second problem is not really an issue in homogeneous cluster environments and can be handled by avoiding cyclic redistributions of remote objects.

Besides these problems, the dynamic approach has the essential advantage that, instead of being estimated, the values of work and cost can be measured: we take work as the actual execution time of a method call and cost as the communication time of a remote method invocation. As detailed later, we have to estimate the cost of remote calls that are actually executed locally because the called object resides on the same node. We adapt Haumacher's approach and use an iterative procedure to distribute objects to activities and then assign activities to virtual machines. Objects are migrated to the virtual machine to which their optimal activity is assigned.

4.2. Time measurements

Having developed a placement methodology for remote objects, we now focus on how to measure the time values required for the distribution algorithm. Beginning with the Pentium processor, Intel allows the programmer to access a time-stamp counter [8]. This counter keeps an accurate count of every cycle that occurs on the processor since it is incremented every clock cycle, starting with zero. To access the counter, programmers can use the RDTSC (read time-stamp counter) instruction. We use the counter to get a time estimate for the duration of method invocations.

Note that the time-stamp counter measures cycles, not time. Thus, comparing cycle counts only makes sense on processors of the same speed, as in a homogeneous cluster environment. To compare processors of different speeds, the cycle counts should be converted into time units. While the unit of time returned by System.currentTimeMillis() is a millisecond, the granularity of the value depends on the underlying OS and may be larger. Thus, the time-stamp counter also allows much finer measurements.

To avoid measurement errors because of concurrency, we assume that the workstations of the cluster are used exclusively for JavaParty. In the presence of background jobs, cycle counting does not always reflect the real execution time of an application. But in the long run, the interruptions caused by background jobs are approximately the same for all workstations of a homogeneous cluster. Thus, we assume that those interruptions balance over time such that cycle counting reflects the average execution time.

4.3. Remote Method Invocation

RMI uses a standard mechanism for communicating with remote objects: stubs and skeletons. A stub for a remote object acts as a local representative or proxy for the remote object. The stub hides the serialization of parameters and the network communication, whereas the skeleton is responsible for dispatching the call to the actual remote object implementation. We want to measure work(t, a) and cost(t, a) in order to apply the distribution algorithm. In the context of stubs and skeletons, work corresponds to the time that the actual method implementation takes, and cost corresponds to the time that is required for carrying out the remote call, i.e. marshaling and transmitting parameters and result.

For a remote object r, a stub is instantiated on each node, while only one skeleton is instantiated on the node where the implementation of r resides. That is,
there are n stubs (one per node) and one skeleton for each remote object. Basically, our approach is to measure the communication time of a remote call in the stub and the execution time of the implementation in the skeleton by using the RDTSC instruction. We store aggregated work and cost values in the skeleton.

5. Implementation

5.1. Time measurements

Our framework for performance measurement wraps the RDTSC instruction described in the previous chapter using the Java Native Interface [13]. As detailed in Table 1, accessing the system time is roughly 60 times (almost two orders of magnitude) more expensive than using the RDTSC instruction. Times were measured on a Pentium III 800 MHz system.

Table 1. Cost of System.currentTimeMillis()

| Call | Cycles | Time |
|---|---|---|
| RDTSC.readccounter() | 613 | 0.77 µs |
| System.currentTimeMillis() | 36941 | 46.18 µs |

5.2. KaRMI

KaRMI [12] is a fast replacement for Java RMI. It is based on an efficient object serialization mechanism that replaces regular Java serialization. Since the remote method invocation protocol is different from Java RMI, the format of stubs and skeletons is different, too. The KaRMI compiler generates stub and skeleton classes from compiled remote classes. We modified the generation of stubs and skeletons to include code that measures the execution times of remote calls. The measured times are processed by the distribution task to compute an optimal object distribution.

More precisely, we modified the generation of stubs to measure the total execution time of remote calls. Once a remote call returns, the stub sends the total time to the skeleton, which has measured the execution time of the actual implementation (i.e. work). Using both values, we compute cost as the difference between the total time and work.

In order to transmit the total time from stub to skeleton, we added methods to send and receive the measured times to the client and server side of the connection. These methods are called after a remote method invocation has been completed and the result has been marshaled back to the caller. Finally, the work and cost values are stored in the skeleton using a special data structure described later.

5.3. Estimation of cost

An important optimization carried out by JavaParty is that a call is only executed remotely if the called object actually resides on another node. Otherwise, the call is executed locally. Recall that cost(t, a) estimates the communication time that would be necessary if activity t were not located on the same node as object a. While we are able to measure the actual communication time of remote calls, we have to estimate the cost of local calls as if they were remote. Thus, we have to develop a model to estimate the communication cost based on the measured cost of a local call.

Whenever the client and server objects are in the same address space, arguments and result are cloned to preserve the copy semantics of a remote call. JavaParty produces a deep clone with all referenced objects also being cloned. In the generated stubs, the instrumented version of the local shortcut measures the cost of cloning arguments and return value.

The measurement can be divided into three parts: cloning of the arguments, local method invocation, and cloning of the result. Based on the measured local cost of cloning arguments and result, we estimate the communication cost that the call would incur if it were remote. For this purpose we analyzed the results of a benchmark suite that measures the execution times of local and remote method calls for a representative set of parameter types.

Given the duration of a local call, we estimate how long a remote call takes. While the absolute values are likely to vary on different machines, the relation between local and remote calls should be approximately the same. For simplicity, we assume a linear model with offset a and gradient b:

$$\text{remote cost} = a + b \cdot (\text{local cost})$$

We applied a nonlinear least-squares algorithm to the results of the benchmark suite in order to fit the estimate function and determine the values of a and b.

5.4. Smoothing and storing time values

We use a hash map to store time values, mapping activities to work and cost values. JavaParty assigns a globally unique thread id to activities that issue remote calls. If a new measurement is to be stored, the given thread id is mapped to a pair of work and cost values. We store these values directly with the skeleton, so the addressed object is implicit. Since work and cost
Authorized licensed use limited to: National Kaohsiung University of Applied Sciences. Downloaded on May 08,2010 at 14:53:01 UTC from IEEE Xplore. Restrictions apply.indicate the computing and communication times an The first application is a numerical algorithm that

activity spends on all methods of an object, we have to has a static structure. We started with a sub-optimal

aggregate the values of the individual methods in a distribution and optimized its locality during runtime.

reasonable way. The second application is an n-body simulation with an

We use an exponential moving average which has inherently dynamic structure. We started with an

the following advantages over simply adding up the optimal distribution and adapted the locality as the

time values: First, the weighting for each data point structure of the application changed.

decreases exponentially, giving more importance to All measurements in this chapter have been

recent observations while still not discarding older conducted on our Carla cluster, using the Java Server

observations. Second, the weighting makes our VM 1.4.2_13-b06. This cluster consists of 16 nodes

measurement more robust against outliers, e.g. delayed equipped with two Pentium III 800 MHz processors

execution because of distributed garbage-collection. and 1 GB RAM each.

Third, the exponential moving average is easy to

compute and thus a relatively cheap operation. 6.2. Successive over-relaxation

5.6. Application monitoring Successive over-relaxation is a numerical algorithm

for solving Laplace equations on a grid. The sequential

JavaParty offers an interface that allows plugging in implementation involves an outer loop for the

additional classes that can be used for monitoring the iterations and two inner loops, each looping over the

distributed environment. In our case, the monitor grid. During an iteration, the new value of each point

interface is implemented as an invisible task that of the grid is determined by calculating the average

collects runtime data based on instrumentation. This value of the four neighbor points. The algorithm

data is used to analyze the distribution of remote terminates if no point of the grid has changed more

objects over the virtual machines. than a certain threshold.

In JavaParty, references to remote objects are stored The parallel implementation [9] provided by

in a distributed fashion. Thus, we have to iterate over Maassen is based on a red-black ordering mechanism.

all virtual machines to obtain references to the remote The grid is partitioned among the available processors,

objects. These references are used to collect the each processor receiving a number of adjacent rows.

measured times. Before a processor starts to update the points of a

The monitor also serves as front end for the certain color, it exchanges the border rows of the

distribution task which can either be scheduled for opposite color with its neighbors.

repeated fixed-delay execution or invoked manually

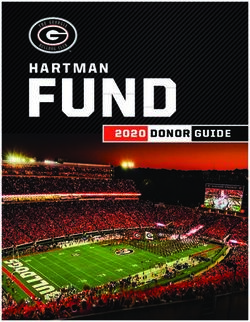

via a library call. Basically, our distribution task 1000x1000 grid, 300 iterations

fetches the measured times and runs the distribution

120

algorithm discussed in section 4.1.

The distribution algorithm sorts the application 100

threads according to their parallelization wins. Each 80

time [ms]

manual

activity is assigned to a group of activities which are 60 optimized

optimally placed on the same virtual machine. Finally, random

40

each object is assigned to its optimal JVM and possibly

20

migrated there. The migration succeeds only for

objects that are not declared to be resident. If nothing 0

2 4 8 16

was changed during the migration, the distribution task

# machines

is canceled.

Figure 1. Results of the SOR benchmark

6. Evaluation

The SOR benchmark performs 300 iterations of

In order to evaluate the effectiveness and efficiency successive over-relaxation on a 1000x1000 grid of

of our work, we examined two applications that have double values. The performance was measured on 2, 4,

potential for locality optimizations. If a program was 8, and 16 nodes and is reported in milliseconds per

already distributed optimally at compile time and its iteration. In order to evaluate our approach, we created

locality did not change during run time, there would be three versions of the benchmark: (i) a manual version

nothing to optimize. that creates all remote objects at their optimal location,

(ii) a random version where the location of the remote

154

Authorized licensed use limited to: National Kaohsiung University of Applied Sciences. Downloaded on May 08,2010 at 14:53:01 UTC from IEEE Xplore. Restrictions apply.objects is determined randomly, and (iii) an optimized The benchmark performs 10 iterations of n-body

version which invokes the locality optimizations after simulation with 1000 particles. The performance was

the first iteration based on the random object measured on 2, 4, 8, and 16 nodes and is reported in

distribution. seconds per iteration. Again, we created three versions

The results of the SOR benchmark are shown in of the benchmark: (i) a manual version with explicit

Figure 1. As expected, the manual version performs placement annotations, (ii) a random version where the

best with a constant speedup as the number of location of the remote objects is determined randomly,

machines increases. The random version performs and (iii) an optimized version which invokes the

worst and does not scale with additional machines. locality optimizations after the first iteration based on

Finally, the optimized version of the benchmark the random object distribution.

performs considerably better than the random version,

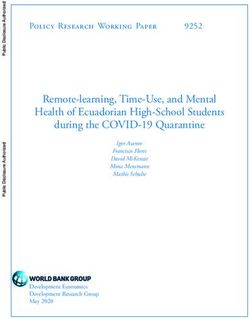

improving its performance towards the optimal Figure 2 shows the results of the n-body

version. If more iterations were performed, the benchmark. Because of the dynamic structure of the

optimized version would do even better since the cost benchmark, an optimal distribution of the remote

of the locality optimizations would bear less weight. objects is hard to predict and depends on the spatial

Figure 1 might give the impression that the distribution of the particles. As the initial coordinates

optimized version does not scale with additional of the particles are determined randomly and thus are

machines. This is not exactly true since the cost of the not known a priori, the manual version of the

locality optimizations is proportional to the number of benchmark performs only slightly better than the

nodes, too. Table 2 details the cost of the procedure for random version. Since the locality of the application is

the SOR benchmark. Polling the remote objects clearly adapted to the actual location of the particles, the

dominates the overall cost. In spite of its square optimized version of the benchmark performs best.

complexity, the cost of the distribution algorithm is The cost of the locality optimizations can easily be

relatively small. Again, if the number of iterations was covered by the savings achieved during the following

increased or a benchmark with longer processing times iterations.

was used, the cost would decrease.

Table 2. Cost of the locality optimizations 1000 particles, 10 iterations

polling computing cost of overall

300

remote locality migrating cost

objects algorithm objects [ms] 250

2 929 43 235 1206,87 200

manual

time [s]

4 1799 137 249 2185,82 150 optimized

8 4044 332 588 4963,73 random

100

16 7000 652 1068 8720,30

50

0

2 4 8 16

6.3. N-body simulation # machines

The n-body simulation approximates the movement Figure 2. Results of the n-body benchmark

of n particles in a two-dimensional space based on

mutual gravitation. The simulation is discretized into

time steps where the gravity between each of the The n-body benchmark is a good example for the

n particles must be computed for each time step. effectiveness of our approach. In dynamic settings

Afterwards, acceleration and change in velocity and such as the n-body simulation, it is hard and sometimes

location are determined for each particle. In order to impossible to determine a good initial distribution of

avoid the square complexity of computing forces, the the remote objects. Even if an optimal distribution can

present implementation uses an approximation be determined, the performance of the initial

proposed by Barnes and Hut. Through hierarchical distribution will decrease since the locality of the

grouping and generation of substitute masses for application changes. Only a dynamic approach that

distant space regions, the computation complexity is optimizes the locality at runtime can guarantee

reduced to O(n log(n)) operations per time step. We consistently high performance throughout the whole

refer to [7] for a detailed description of the benchmark. life cycle of the application.
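The Barnes-Hut approximation described in Section 6.3 can be illustrated with a small quadtree sketch. This is a generic version of the technique, not the benchmark code from [7]. The class and method names, the opening criterion (a cell is used as a whole when its width is smaller than `theta` times the distance to its center of mass), the unit gravitational constant `G`, and the softening term `EPS2` are all assumptions chosen for illustration. Each cell stores the total mass and center of mass of its particles as a substitute mass.

```java
import java.util.Random;

/** Minimal 2D Barnes-Hut sketch; illustrative only, not the benchmark code from [7]. */
public class BarnesHutSketch {
    static final double G = 1.0;     // assumed unit gravitational constant
    static final double EPS2 = 1e-6; // assumed softening term, avoids division by zero

    /** Square quadtree cell centered at (cx, cy) with half-width h. */
    static final class Cell {
        final double cx, cy, h;
        double mass, comX, comY; // total mass and center of mass: the "substitute mass"
        int count;               // number of bodies inside this cell
        int body = -1;           // body index while this cell is a leaf holding one body
        Cell[] kids;             // null while this cell is a leaf

        Cell(double cx, double cy, double h) { this.cx = cx; this.cy = cy; this.h = h; }

        Cell child(double px, double py) { return kids[(px < cx ? 0 : 1) + (py < cy ? 0 : 2)]; }

        /** Inserts body i; assumes distinct positions inside this cell. */
        void insert(int i, double[] x, double[] y, double[] m) {
            if (count == 0) {
                body = i;                                 // empty leaf: store the body
            } else {
                if (kids == null) {                       // occupied leaf: split into quadrants
                    double q = h / 2;
                    kids = new Cell[] {
                        new Cell(cx - q, cy - q, q), new Cell(cx + q, cy - q, q),
                        new Cell(cx - q, cy + q, q), new Cell(cx + q, cy + q, q) };
                    child(x[body], y[body]).insert(body, x, y, m);
                    body = -1;
                }
                child(x[i], y[i]).insert(i, x, y, m);
            }
            double total = mass + m[i];                   // update the substitute mass
            comX = (comX * mass + x[i] * m[i]) / total;
            comY = (comY * mass + y[i] * m[i]) / total;
            mass = total;
            count++;
        }

        /** Adds the acceleration this cell exerts on body i; theta = 0 opens every cell. */
        void accumulate(int i, double[] x, double[] y, double theta, double[] acc) {
            if (count == 0 || body == i) return;          // empty cell, or body i itself
            double dx = comX - x[i], dy = comY - y[i];
            double d = Math.sqrt(dx * dx + dy * dy);
            if (kids == null || 2 * h < theta * d) {      // single body, or small and far away
                addForce(dx, dy, mass, acc);
            } else {
                for (Cell k : kids) k.accumulate(i, x, y, theta, acc);
            }
        }
    }

    /** Softened acceleration G*m*r/(|r|^2+EPS2)^(3/2) along the vector r = (dx, dy). */
    static void addForce(double dx, double dy, double m, double[] acc) {
        double r2 = dx * dx + dy * dy + EPS2;
        double f = G * m / (r2 * Math.sqrt(r2));
        acc[0] += f * dx;
        acc[1] += f * dy;
    }

    /** Builds a quadtree over the unit square and returns each body's acceleration. */
    static double[][] accelerations(double[] x, double[] y, double[] m, double theta) {
        Cell root = new Cell(0.5, 0.5, 0.5);
        for (int i = 0; i < x.length; i++) root.insert(i, x, y, m);
        double[][] acc = new double[x.length][2];
        for (int i = 0; i < x.length; i++) root.accumulate(i, x, y, theta, acc[i]);
        return acc;
    }

    /** Direct O(n^2) summation over all pairs, for comparison. */
    static double[][] direct(double[] x, double[] y, double[] m) {
        double[][] acc = new double[x.length][2];
        for (int i = 0; i < x.length; i++)
            for (int j = 0; j < x.length; j++)
                if (i != j) addForce(x[j] - x[i], y[j] - y[i], m[j], acc[i]);
        return acc;
    }

    /** Relative L2 error of approx with respect to exact, over all bodies. */
    static double relativeError(double[][] approx, double[][] exact) {
        double num = 0, den = 0;
        for (int i = 0; i < exact.length; i++)
            for (int k = 0; k < 2; k++) {
                double e = approx[i][k] - exact[i][k];
                num += e * e;
                den += exact[i][k] * exact[i][k];
            }
        return Math.sqrt(num / den);
    }

    public static void main(String[] args) {
        int n = 1000; // same particle count as in Figure 2
        Random rnd = new Random(42);
        double[] x = new double[n], y = new double[n], m = new double[n];
        for (int i = 0; i < n; i++) { x[i] = rnd.nextDouble(); y[i] = rnd.nextDouble(); m[i] = 1.0 / n; }
        double[][] exact = direct(x, y, m);
        System.out.printf("theta=0.0: relative error %.2e%n", relativeError(accelerations(x, y, m, 0.0), exact));
        System.out.printf("theta=0.5: relative error %.2e%n", relativeError(accelerations(x, y, m, 0.5), exact));
    }
}
```

With `theta = 0` every cell is opened down to single particles, so the sketch reproduces the direct O(n²) sum; larger values trade accuracy for fewer interactions. Because a particle and the center of mass of any cell containing it are both inside that cell, such a cell is never approximated as long as `theta` stays below about 0.7; the sketch also assumes distinct particle positions inside the unit square.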

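Table 2 splits one optimization pass into three phases: polling the remote objects, computing the locality algorithm, and migrating objects, and Section 7 describes the optimizations as a task that runs periodically or on demand. The sketch below shows one possible way to structure such a driver with `java.util.concurrent` and to time each phase as Table 2 does. The `LocalityPhases` interface, its three methods, and the `PassCost` and `Optimizer` classes are hypothetical placeholders, not JavaParty APIs, and wall-clock timing with `System.nanoTime()` is an assumption rather than the paper's measurement method.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

/** Sketch of a periodic and on-demand locality optimization driver (hypothetical names). */
public class LocalityTaskSketch {

    /** Hypothetical hooks for the three phases listed in Table 2. */
    interface LocalityPhases<M, P> {
        M pollRemoteObjects();              // collect the locally stored time values
        P computePlacement(M measurements); // run the distribution algorithm
        void migrate(P placement);          // move objects to their assigned machines
    }

    /** Wall-clock cost of each phase of one pass, in milliseconds. */
    static final class PassCost {
        final double pollMs, computeMs, migrateMs;
        PassCost(double pollMs, double computeMs, double migrateMs) {
            this.pollMs = pollMs; this.computeMs = computeMs; this.migrateMs = migrateMs;
        }
        double overallMs() { return pollMs + computeMs + migrateMs; }
    }

    /** Runs one optimization pass and times each phase with System.nanoTime(). */
    static <M, P> PassCost runPass(LocalityPhases<M, P> phases) {
        long t0 = System.nanoTime();
        M measurements = phases.pollRemoteObjects();
        long t1 = System.nanoTime();
        P placement = phases.computePlacement(measurements);
        long t2 = System.nanoTime();
        phases.migrate(placement);
        long t3 = System.nanoTime();
        return new PassCost((t1 - t0) / 1e6, (t2 - t1) / 1e6, (t3 - t2) / 1e6);
    }

    /** Schedules passes with a fixed delay; runNow() triggers an extra pass on demand. */
    static final class Optimizer<M, P> implements AutoCloseable {
        // one worker thread, so periodic and on-demand passes never overlap
        private final ScheduledExecutorService exec = Executors.newSingleThreadScheduledExecutor();
        private final LocalityPhases<M, P> phases;

        Optimizer(LocalityPhases<M, P> phases, long periodMs) {
            this.phases = phases;
            exec.scheduleWithFixedDelay(() -> runPass(phases), periodMs, periodMs, TimeUnit.MILLISECONDS);
        }

        /** Runs one pass on the worker thread and waits for its result. */
        PassCost runNow() {
            try {
                return exec.submit(() -> runPass(phases)).get();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            } catch (ExecutionException e) {
                throw new IllegalStateException(e.getCause());
            }
        }

        @Override
        public void close() { exec.shutdownNow(); }
    }

    public static void main(String[] args) {
        // Toy phases: measurements are per-object call counts, placement maps object -> node.
        LocalityPhases<int[], int[]> toy = new LocalityPhases<int[], int[]>() {
            public int[] pollRemoteObjects() { return new int[] {5, 1, 7, 2}; }
            public int[] computePlacement(int[] calls) {
                int[] node = new int[calls.length];
                for (int i = 0; i < calls.length; i++) node[i] = i % 2; // placeholder rule
                return node;
            }
            public void migrate(int[] placement) { /* no-op in this sketch */ }
        };
        try (Optimizer<int[], int[]> opt = new Optimizer<>(toy, 1000)) {
            PassCost c = opt.runNow();
            System.out.printf("poll %.3f ms, compute %.3f ms, migrate %.3f ms, overall %.3f ms%n",
                    c.pollMs, c.computeMs, c.migrateMs, c.overallMs());
        }
    }
}
```

A single-threaded executor is a deliberate choice in this sketch: periodic and on-demand passes can never overlap, so two migrations of the same object cannot race. Note that `scheduleWithFixedDelay` suppresses further runs of a task whose execution throws an exception, so a real driver would need to handle failures inside the pass.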

7. Conclusion and future work

In this work, we presented runtime locality optimizations of distributed Java applications. Based on a static approach, we developed a dynamic methodology to automatically generate a distribution strategy for the objects of a distributed system. We instrumented stubs and skeletons to measure the execution time and communication cost of remote calls. The measured time values are stored locally to avoid communication overhead. The locality optimizations are implemented as a task that runs periodically or can be started on demand. This task collects the measured time values and computes an optimal distribution strategy. To realize this strategy, objects are migrated between machines.

We evaluated the effectiveness and efficiency of our work by optimizing two benchmark applications. The first benchmark is a typical example of a numerical algorithm with a static structure, so we created a random initial distribution of the objects and optimized their locality at runtime. The second benchmark has a dynamic structure, so the performance of any initial object distribution, even an optimal one, deteriorates at runtime. We have shown that our approach is particularly suitable for such dynamic settings.

In future work, we will focus on automatically adapting the period of the distribution task so that it reflects the processing time of the application. If the structure of the application does not change, we might even switch off the measurements completely. For large clusters with thousands of processors, or for applications with a great number of objects, an algorithm with quadratic complexity may become too expensive. We could imagine a distributed algorithm that works with exact time values for only a few local nodes and extrapolates the values for remote nodes.

References

[1] G. Antoniu, L. Bouge, P. Hatcher, M. MacBeth, K. McGuigan, and R. Namyst, “The Hyperion system: Compiling multithreaded Java bytecode for distributed execution”, Parallel Computing, 2001.

[2] Y. Aridor, M. Factor, and A. Teperman, “cJVM: a single system image of a JVM on a cluster”, Parallel Processing, 1999, pp. 4-11.

[3] M. Factor, A. Schuster, and K. Shagin, “A distributed runtime for Java: yesterday and today”, Parallel and Distributed Processing Symposium, 2004.

[4] T. Fahringer, “JavaSymphony: a system for development of locality-oriented distributed and parallel Java applications”, Cluster Computing, 2000.

[5] V. Felea, R. Olejnik, and B. Toursel, “ADAJ: a Java Distributed Environment for Easy Programming Design and Efficient Execution”, Shedae Informaticae, UJ Press, Krakow, 2004, pp. 9-36.

[6] B. Haumacher, “Lokalitätsoptimierung durch statische Typanalyse in JavaParty” [Locality optimization by static type analysis in JavaParty], Diploma thesis, Institute for Program Structures and Data Organization, University of Karlsruhe, January 1998.

[7] B. Haumacher, “Plattformunabhängige Umgebung für verteilt paralleles Rechnen mit Rechnerbündeln” [Platform-independent environment for distributed parallel computing on computer clusters], PhD thesis, Institute for Program Structures and Data Organization, University of Karlsruhe, October 2005.

[8] Intel Corp., “Using the RDTSC Instruction for Performance Monitoring”, 1997. http://developer.intel.com/drg/pentiumII/appnotes/RDTSCPM1.HTM

[9] J. Maassen and R.V. Nieuwpoort, “Fast parallel Java”, Master's thesis, Dept. of Computer Science, Vrije Universiteit, Amsterdam, August 1998.

[10] M. Philippsen and M. Zenger, “JavaParty - Transparent Remote Objects in Java”, Concurrency: Practice and Experience, November 1997.

[11] M. Philippsen and B. Haumacher, “Locality optimization in JavaParty by means of static type analysis”, Proc. Workshop on Java for High Performance Network Computing at EuroPar '98, Southampton, September 1998.

[12] M. Philippsen, B. Haumacher, and C. Nester, “More Efficient Serialization and RMI for Java”, Concurrency: Practice and Experience, John Wiley & Sons, Chichester, West Sussex, May 2000, pp. 495-518.

[13] Sun Microsystems, “Java Native Interface”, 2003. http://java.sun.com/j2se/1.4.2/docs/guide/jni/

[14] Sun Microsystems, “Java Remote Method Invocation Specification”, 2003. http://java.sun.com/j2se/1.4.2/docs/guide/rmi/spec/rmiTOC.html

[15] R. Veldema, R. A. F. Bhoedjang, and H. E. Bal, “Jackal, a compiler based implementation of Java for clusters of workstations”, Proceedings of PPoPP, 2001.

[16] W. Zhu, C.-L. Wang, and F. C. M. Lau, “JESSICA2: A Distributed Java Virtual Machine with Transparent Thread Migration Support”, IEEE Fourth International Conference on Cluster Computing, Chicago, USA, September 2002.
