Ginseng: Market-Driven Memory Allocation

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Ginseng: Market-Driven Memory Allocation

Orna Agmon Ben-Yehuda1 Eyal Posener 1 Muli Ben-Yehuda1 Assaf Schuster1 Ahuva Mu’alem1,2

1 2

Technion – Israel Institute of Technology Ort Braude

{ladypine, posener, muli, assaf, ahumu}@cs.technion.ac.il

Abstract addition to CPU and I/O usage. In general, today’s cloud

Physical memory is the scarcest resource in today’s cloud computing clients buy a supposedly-fixed amount of physi-

computing platforms. Cloud providers would like to max- cal memory for the lifetime of their guests.

imize their clients’ satisfaction by renting precious phys- Providers can greatly reduce their expenses by using

ical memory to those clients who value it the most. But less memory to run more client guest virtual machines on

real-world cloud clients are selfish: they will only tell their the same physical hosts. This can be done transparently by

providers the truth about how much they value memory means of memory overcommitment [8, 34, 55]. When mem-

when it is in their own best interest to do so. How can real- ory is overcommited, the clients have no way to discern how

world cloud providers allocate memory efficiently to those much physical memory they are actually getting. Due to the

(selfish) clients who value it the most? lack of transparency and difficulties with providing a given

We present Ginseng, the first market-driven cloud sys- level of quality of service when overcommitting memory,

tem that allocates memory efficiently to selfish cloud clients. some providers refrain from memory overcommitment and

Ginseng incentivizes selfish clients to bid their true value for let their hardware go underutilized. Others simply reduce

the memory they need when they need it. Ginseng contin- their clients’ quality of service.

uously collects client bids, finds an efficient memory allo- Clients would much prefer to have full visibility and

cation, and re-allocates physical memory to the clients that control over the resources they receive [3, 42]. They would

value it the most. Ginseng achieves a 6.2×–15.8× improve- like to pay only for the physical memory they need, when

ment, which is 83%–100% of the optimum, in aggregate they need it [1, 5, 18]. By granting clients this flexibility,

client satisfaction when compared with state-of-the-art ap- providers can increase client satisfaction: clients interested

proaches for cloud memory allocation. in high quality of service (QoS) will be able to choose a

non-overcommited machine, while budget-conscious clients

Categories and Subject Descriptors D.4.2 Operating Sys- will be able to enjoy the cloud at low prices when demand is

tems [Storage Management]: Main memory low. Finding an efficient allocation of physical memory on

General Terms Virtualization, Economics, Performance each cloud host—an allocation that gives each guest virtual

machine precisely the amount of memory it needs, when it

Keywords Virtual Machines, Operating Systems, KVM, needs it, at the price it is willing to pay—yields benefits for

Memory Overcommit, Oversubscription both clients, whose satisfaction is improved, and providers,

whose hardware utilization is improved.

1. Introduction Previous physical memory allocation schemes assumed

Infrastructure-as-a-Service (IaaS) cloud computing providers that guest virtual machines are run by fully cooperative

rent computing resources to their clients. As competition be- clients, who let the host know precisely what each guest is

tween providers gets tougher and prices decrease, providers doing, how much benefit additional memory would bring to

will need to continuously and ruthlessly reduce expenses, it, and the importance of the workload to the client [19, 25,

primarily by improving their hardware utilization. Physi- 27, 41]. Gingko, for example, assumed that guests are coop-

cal memory is the most constrained and thus precious re- erative and that the host knows the benefit additional mem-

source in use in cloud computing platforms today [19, 25, ory would bring each guest [19, 27]. Heo et al. [25] solved a

27, 36, 41, 55]. Google, for example, had to begin charging control problem over performance level objectives, by mea-

for memory usage in addition to CPU usage: not charg- suring guest performance inside the guests, again assuming

ing for memory made the scaling of applications that use guest cooperation. Nathuji et al. [41] sold performance in-

a lot of memory and little CPU time “cost-prohibitive to stead of resources, again by measuring guest performance

Google.” [12]. Other platforms (such as Amazon EC2) of- inside the guests. All these systems allocated memory effi-

fered virtual machines with varying amounts of memory to ciently and improved the overall system’s performance, but

begin with, thereby charging clients for memory usage in were unsuitable for real-world commercial clouds, because

Ginseng: Market-Driven Memory Allocation 1 2013/11/11the assumption that the host has full, accurate information plementation of a single-resource Resource-as-a-Service

on all aspects of guest performance is unrealistic. (RaaS) cloud [3]. Ginseng is the first cloud platform to opti-

As recent commercial cloud trends of price dynamicity mize overall client satisfaction for black box guests. We also

and fine-grained resource granularity [3] indicate, real-world build a strategic agent for the MPSP auction.

cloud clients act rationally and selfishly. They are black Ginseng supports static-memory applications—legacy

boxes with private information such as their performance applications that require some fixed quantity of memory and

statistics, how much memory they need at the moment, and do not perform better with more memory, but is tailored for

what it is worth to them. Rational, selfish black-boxes will elastic-memory applications—applications that can improve

not share this information with their provider unless it is in their performance when given more memory on-the-fly over

their own best interest to do so. a large range of memory quantities and can return mem-

When white-box models are applied to selfish guests, the ory to the system when needed. Many applications in use

guests have an incentive to manipulate the host into granting today are static-memory applications, but elastic-memory

them more memory than their fair share. For example, if the applications are starting to become more common. It is easy

host gives memory to those guests that will benefit more to convert Java- or database-dependent static-memory ap-

from it, each guest will say it benefits from memory more plications to being dynamic using environments such as

than any other guest. If the host gives memory to those Salomie et al.’s database [44] or a Java runtime with bal-

guests that perform poorly with their current allocation, each loons [19, 27, 44] or CRAMM [58]. Other examples of

guest will say it performs poorly. dynamic-memory applications include dynamic heap ad-

The host can allocate memory on the basis of pas- justment for garbage-collected environments [21, 26, 59],

sive black-box or grey-box measurements [29, 34, 36, 55, applications making use of the Linux mempressure control

60, 61]. For example, Jones, Arpaci-Dusseau, and Arpaci- group [40, 63] and CloudSigma’s Burst Pricing [1]. Our

Dusseau [29] monitored I/O and inferred major page faults, third and final contribution is two elastic-memory bench-

and Zhao and Wang [61] monitored use of physical pages mark applications: an elastic-memory version of Mem-

to balance the guests’ need for physical memory. However, cached, a widely-used key-value storage cloud application,

in such cases the guests have an incentive to bias the mea- and MemoryConsumer, an elastic memory benchmark we

surement results, e.g., by inducing unnecessary page faults developed.

or accessing unnecessary memory. Furthermore, black-box Ginseng achieves a 6.2× improvement in aggregate client

methods compare the guests only by technical qualities such satisfaction for MemoryConsumer and 15.8× improvement

as throughput and latency, which are valued differently by for Memcached, when compared with state-of-the-art ap-

different guests under different circumstances. proaches for cloud memory allocation. Overall, it achieves

In this work we address the cloud provider’s fundamental 83%–100% of the optimal aggregate client satisfaction.

memory allocation problem: How should it divide the phys-

ical memory on each cloud host among selfish black-box

2. System Architecture

guests? A reasonable meta-approach would be to give more

memory to guests who would benefit more from it. But how Ginseng is a market-driven cloud system that allocates mem-

can the host compare the benefits of additional memory for ory to guests using guest bids for memory. It is imple-

each guest? mented for cloud hosts running the KVM hypervisor [31]

We make the following three contributions. Our first with Litke’s Memory Overcommit Manager MOM [34]. It

contribution is the Memory Progressive Second Price controls the exact amount of physical memory allocated to

(MPSP) auction, a game-theoretic market-driven mecha- each guest via the libvirt abstraction using balloon drivers [8,

nism which induces auction participants to bid (and thus 34, 55].

express their willingness to pay) for memory according to The balloon driver, first presented by Waldspurger [55],

their true economic valuations (how they perceive the bene- is installed in the guest operating system. The host’s Balloon

fit they get from the memory, stated in monetary terms). In Controller controls the balloon driver, inflating or deflating

Ginseng, the host periodically auctions memory using the it. When inflating, the balloon driver allocates memory from

MPSP auction. Guests bid for the memory they need as they the guest OS and pins it, so that the guest OS won’t attempt

need it; the host then uses these bids to compare the benefit to swap it out; the balloon driver then transfers this memory

that different guests obtain from physical memory, and to to the host. When deflating, the balloon driver frees memory

allocate it to those guests which benefit from it the most. back to its OS, in effect giving the OS more memory from

The host is not manipulated by guests and does not require the host. Ginseng does not specifically depend on any bal-

unreliable black-box measurements. loon implementation; it only requires that the host supports

Our second contribution is Ginseng itself, a market- some underlying mechanism for balancing physical memory

driven cloud system for allocating memory efficiently to between guests.

selfish black-box virtual machines. It is the first full im- Ginseng’s system architecture is depicted in Figure 1.

Ginseng has a host component and a guest component. The

Ginseng: Market-Driven Memory Allocation 2 2013/11/11tical reasons, bidders in these auctions do not communicate

their valuation for the full range of auctioned goods. One

of these VCG-like auctions is Lazar and Semret’s Progres-

sive Second Price (PSP) auction [32]. None of the auctions

proposed so far for divisible goods, including the PSP auc-

tion, are suitable for auctioning memory, because memory

has two characteristics that set it apart from other divisible

resources: first, the participants’ valuation functions may be

non-concave; second, transferring memory too quickly be-

tween two participants leads to waste. The memory val-

uation function, which describes how much the guest is

willing to pay for different memory quantities, is a func-

Figure 1: Ginseng system architecture tion of the load the guest is under, the performance gain or

loss it expects from less or more memory given that load,

host component includes the Auctioneer, which runs the and the value of (less or more) performance to the guest.

MPSP auction that is presented in Section 3 and described Formally, V (mem, load) = Vp (perf(mem, load)), where

in detail in Section 5. The auctioneer and the guests asyn- Vp (perf) refers to the valuation of performance as described

chronously communicate information for the purpose of the below, and perf(mem, load) describes the performance the

auction using their respective Communicators, according to guest can achieve given a certain load and a certain memory

the protocol specified in Section 4. The host’s communica- quantity.

tor is also responsible for instructing the Balloon Controller Performance might be measured in page hits per second

how to allocate memory between different guests. The Bal- for a webserver, “get” hits per second for a caching service,

loon controller inflates and deflates the balloon drivers inside transactions per second for a database, trades per second for

the guests. In the guest, the Strategy Adviser is the brains of a high-frequency-trading system, or any other guest-specific

the guest’s economic learning agent, which acts on behalf metric. Performance can be mapped offline as function of

of the client. The client is free to choose its logic, provided memory and load, and done by Hines et al. [27] and Gor-

it speaks the MPSP protocol. Our implementation of an ad- don et al. [19]. As we demonstrate in Section 8.2, the perfor-

viser is described in Section 6. mance predictions made in this paper according to the offline

measurements were accurate enough. However, real-world

performance may depend on many variable conditions such

3. Memory Auctions

as program phase and bottlenecks in resources other than

Ginseng allocates memory efficiently because its guests bid memory. To this end, performance can be measured online as

for the memory they want in a specially-designed auction. several works demonstrate [60–62]. An important feature of

We begin by describing how Ginseng auctions memory. the MPSP auction is that it does not require the guest to have

In Ginseng, each guest has a different, changing, private its performance defined for any memory value. Hence, the

(secret) valuation for memory. Simply put, this valuation guest can keep a moving window of its latest performance

reflects how much additional memory is worth to each guest. measurements, which reflect best the current conditions in

We define the aggregate benefit of a memory allocation to which it operates.

all guests—their satisfaction from auction results—using the Vp (perf), the guest’s owner’s (i.e., the client’s) valuation

game-theoretic measure of social welfare. The social welfare of performance function, describes the value the client de-

of an allocation is defined as the sum of all the guests’ rives from a given level of performance from a given guest.

valuations of the memory they receive in this allocation. An This function is different for each client and is private infor-

efficient memory auction allocates the memory to the guests mation of that client. It is computed mostly on the basis of

such that social welfare—guest satisfaction—is maximized. economic considerations and business logic.

VCG [11, 20, 53] auctions optimize social welfare by For example, an e-commerce website that typically makes

incentivizing even selfish participants with conflicting eco- $100 sales and needs to present 10, 000 Web pages on aver-

nomic interests to inform the auctioneer of their true valua- age to generate a single sale might measure its performance

tion of the auctioned items. VCG auctions do so by charging in displayed pages per second, and value each presented

page ·perf. Another

each participant for the damage it inflicts on other partic- page at $0.01. For this client, Vp (perf) = $0.01

ipants’ social welfare, rather than directly for the items it client might require the same average number of presented

wins. VCG auctions are used in various settings, including pages to make a sale, but its typical sale would be $10 only.

page · perf. Both clients will

Facebook’s repeated auctions [24, 35]. For this client, Vp (perf) = $0.001

Various auction mechanisms, some of which resemble need to know perf(mem, load): how many pages they can

the VCG family, have been proposed for divisible resources, present per second when given various amounts of memory

in particular for bandwidth sharing [30, 32, 37]. For prac-

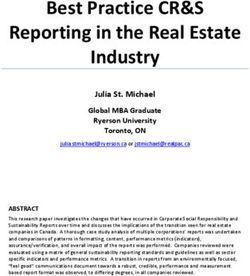

Ginseng: Market-Driven Memory Allocation 3 2013/11/11and under the current conditions (e.g., load). The guests do Figure 2: Application performance (“get” hits per second

not need to measure the perf(·) function for the full range for Memcached, hits per second for MemoryConsumer) as a

of possible memory values. It is enough that these guests function of guest physical memory, for different load values.

measure it only for the working points that they would like The load is defined as the number of concurrent requests

to consider bidding for. made to the application.

If either of these functions is non-concave or not mono-

tonically rising, the composed function may be non-concave (a) Elastic Memcached, (b) Memcached, 500MB

or not monotonically rising as well. The PSP auction op- experimental system internal cache, default system

timally allocates a divisible resource if and only if all the 5 3

Performance [Khits/s]

Performance [Khits/s]

load: 10

valuation functions are monotonically rising and concave. 4

load: 8

load: 6

Other bandwidth auctions also rely on the monotonically ris- 2 load: 4

3 load: 2

ing concave property of the valuation functions. load: 10

load: 1

Guest performance perf(mem, load), however, is not nec- 2 load: 8

1

load: 6

essarily a concave, monotonically rising function of phys- 1 load: 4

load: 2

ical memory. For example, the performance graph of off- load: 1

0 0

the-shelf memcached in our experimental environment is 0.6 1.0 1.4 1.8 2.2 0.4 0.5 0.6 0.7 0.8

Memory [GB] Memory [GB]

monotonically rising, but not concave (Figure 2). This non- (c) Elastic Memcached, (d) MemoryConsumer,

concave function resembles a step function, and is typical default system experimental system

of the operating system’s efforts to handle memory pressure

6 9

Performance [10 hits/s]

through swapping. Non-concavity may also result from dif-

Performance [Khits/s]

load: 10 load: 4 load: 10

load: 8 load: 2

8 load: 8

5

ferences in the size and frequency of use of various work- load: 6 load: 1 7 load: 6

load: 4

ing sets, swapping policies, or garbage collection opera- 4 6

load: 2

5 load: 1

tions [49]. An application that knows how much memory 3

4

it can use and can change its heap size on-the-fly will have 2 3

2

a more concave graph. We modified memcached so that it 1

1

can be instructed to change its heap size on-the-fly by re- 0 0

0.6 1.0 1.4 1.8 2.2 2.6 0.6 1.0 1.4 1.8 2.2

leasing and allocating some of the memory used for its in- Memory [GB] Memory [GB]

ternal cache. Our elastic memcached has a concave, mono-

tonically rising performance graph in the same experimen-

non-monotonic valuation functions by specifying forbidden

tal environment (Figure 2). However, in a default system

ranges. These are forbidden memory-quantity ranges for a

configuration, its performance graph is neither concave nor

single price bid. The guest can use forbidden ranges to cover

monotonically rising (Figure 2), due to a network bottle-

domains in which its average valuation per memory unit is

neck that was prevented in the experimental environment.

lower than its bid price. By definition, MPSP will not allo-

But a real production system guest cannot fine-tune its setup

cate the guest a memory quantity within its forbidden ranges.

parameters and re-design its software on-the-fly; it has to

Rather, it will optimize the allocation given the constraints.

make the best of the performance graphs it measures for the

The guest can thus avoid getting certain memory quantities

current setup, which might look like Figure 2. To do that,

in advance while still expressing a variety of desired quanti-

we designed Ginseng to allow valuation-of-memory graphs

ties. The forbidden ranges are designed to efficiently convey

V (mem, load) such as Figure 2 that are neither concave nor

information about functions which are concave, monoton-

monotonically rising.

ically rising in separate ranges. However, the terminology

Auction protocols that assume monotonically rising con-

does not restrict the guest valuation functions in any way. In

cave valuation functions either interpret a bid of unit price

particular, the guest can bid for a specific desired point (p, q)

and quantity (p, q) as willingness to buy exactly q units for

by setting the open range (0, q) as a forbidden range.

unit price p or as willingness to buy up to q units at price

p. In the first case, the bidding language is limited to ex-

act quantities. In the second case, if the valuation function

4. MPSP: Repeated Auction Protocol

is non-concave, the guest may get a quantity that is smaller In Ginseng, each guest has some permanent base memory.

than the one it bid for, and pay for it a unit price it is not Guests pay a constant hourly fee for their base memory, and

willing to pay. If the function is not, at the very least, mono- it is theirs to keep as long as they run. In each auction round,

tonically rising, it may even get a quantity it would be better each guest can bid for extra memory. Ginseng calculates a

off without. new memory allocation after every auction round and guests

MPSP supports monotonically rising concave memory rent the extra memory they won until the next auction round,

valuation functions in the same way that the PSP auction when the same memory will be put up for auction again.

supports them. In addition, it supports non-concave and The constant fees for base memory are designed to pro-

vide the lion’s share of the host’s revenue from memory,

Ginseng: Market-Driven Memory Allocation 4 2013/11/11such that the host can afford not to make more profit off described in Section 5. For each guest i, it computes how

the extra memory rental, and use this mechanism solely to much memory it won (denoted by q 0 i ) and at what unit price

optimize social welfare, thereby attracting more guests. The (denoted by p0 i ). The payment rule guarantees that the price

price of base memory is not affected by the prices paid for the guest will pay is less or equal to the unit price it bid.

extra memory. The guest’s account is charged accordingly. In the example,

Ultra high-end guests with hard QoS requirements are guest 1 loses (p01 = 0, q10 = 0), and guest 2 wins all of the

expected to pre-pay for all the memory they need in advance, free memory (p02 = 1 GBs $

, q20 = 0.4GB).

to ensure that they always get the resources they need. Ultra Informing Guests. The host informs each guest i of its

low-end budget-clients are expected to pre-pay only for as personal results p0i , qi0 . The host also announces borderline

much memory as they need to operate the guest OS and bids: the lowest accepted bid’s unit-price and the highest

$ $

limit their bids, so that they can temporarily rent additional rejected bid’s unit-price (5 GBs and 1 GBs in the example,

resources later while staying within their budget. The clients respectively). This is information that guests can work out

spanning the range between those extremities are expected on their own; having the host supply it makes for a more

to choose their flexible deal according to their needs. efficient system. The guests use this information in on-line

Here we describe one MPSP auction round, accompanied algorithms that decide how much to bid in future rounds, as

by a numeric example. described in Section 6.3.

Initialization. Each guest i is set up with its base mem- Adjusting and Moving Memory. After an adjustment

ory as it enters the system. For example, guest 1 runs mem- period following the announcement, the host actually takes

cached and pre-pays for 1.4GB, while guest 2 runs Memo- memory from those who lost it and gives it to those who

ryConsumer and pre-pays for 0.6GB. won, by inflating and deflating their balloons as necessary.

Auction Announcement. The host computes the free The purpose of the adjustment period is to allow each guest’s

memory—the maximal amount of memory each guest can agent to notify its applications of the upcoming memory

bid for—as the excess physical memory beyond the amount changes, and then allow the applications time to gracefully

of memory in use by the host and the sum of base memo- reduce their memory consumption, if necessary. The appli-

ries. It then informs each guest of the free memory and the cations are free to choose when to start reducing their mem-

auction’s closing time, after which bids are ignored. In the ory consumption, according to their memory-release agility.

example, the machine has 4GB. The host uses 1.6GB, and This early notification approach makes it possible for the

the guests pre-paid for 2GB, so the host announces an auc- guest operating systems to gracefully tolerate sudden large

tion for 0.4MB. memory changes and spares applications the need to monitor

Bidding. Interested guests bid for memory. Agent i’s bid second-hand information on memory pressure [63]. Which

is composed of a unit price pi —memory price per MB per applications to notify and when to notify them is left to the

hour (billing is still done per second according to exact rental guest’s agent. In the absence of elastic applications, it is left

duration) and a list of desired ranges: mutually exclusive, to the guest kernel to deal with memory pressure, e.g., by

closed ranges of desired memory quantities, sorted in as- shrinking internal caches.

cending order. We denote the desired ranges by [rj , qj ] for

j = 1 . . . mi . The bid means that the guest is willing to rent 5. MPSP: Auction Rules

any memory quantity within the desired range list for a unit

price pi . Every auction has an allocation rule—who gets the goods?—

In the example, both guests experience a load of 10 con- and a payment rule—how much do they pay? To decide

current requests. Guest 1 values its performance at $1 per who gets the goods, the MPSP auction determines the opti-

Khit/second, and bids $1 per GB of memory per second mal allocation of memory. This is the allocation that max-

(p = 1 GBs $

) for any amount of memory between 0 and imizes social welfare—client satisfaction—as described in

0.4GB (r1 = 0, q1 = 0.4GB), on the basis of Figure 2. Section 3. To determine the optimal allocation, the MPSP

Guest 2 values its performance at $0.1 per hit/second, and auction solves a constrained divisible good allocation prob-

bids $5 per GB of memory per second for the same amount lem, as detailed in Section 5.1. To determine how much they

of memory (p = 5 GBs $

, r1 = 0, q1 = 0.4GB), on the basis pay, the MPSP auction takes into account the damage they

of Figure 2. inflict on other guests, as detailed in Section 5.2. After ex-

Bid Collection. The host asynchronously collects guest plaining the rules we discuss their run-time complexity and

bids. It considers the most recent bid from each guest, dis- provide an example for executing them. A correctness proof

missing bids received before the auction round was an- is also available but has been omitted for the sake of brevity.

nounced. Guests that did not bid lose the auction automati-

5.1 Allocation Rule

cally, and are left with their base memory.

Allocation and Payments. The host computes the alloca- Ginseng finds the optimal allocation using a constrained

tion and payments according to the MPSP auction protocol divisible-good allocation algorithm, which works in stages

as described below. In each stage, Ginseng attempts a divis-

Ginseng: Market-Driven Memory Allocation 5 2013/11/11ible good allocation by sorting the guests lexically by three Nevertheless, for real life performance functions, a few

qualities: first by their bid unit-price, second (to break ties) forbidden ranges should be enough to cover the non-concave

by their current holdings [61] and last by a random shuffle. regions. We observed one forbidden range for off-the-shelf

Ginseng then allocates each guest its maximal desired quan- memcached and zero forbidden ranges for elastic-memory

tities according to this order. applications. Given the relatively small number of guests on

If there are a guest g and a forbidden range R such a physical machine, the algorithm’s run-time is reasonable:

that g ends up with a memory quantity inside R, then the we observed less than one second using a single hardware

allocation is invalid. This can happen if g is the last guest thread, even in experiments with 23 guests.

allocated some memory and there is not enough memory left

to fulfill all of g’s requested quantity. Ginseng examines the 5.4 Single Round Example

social welfare of such invalid allocations. If such an invalid Consider a system with 6 GB of physical memory and two

allocation gives a higher social welfare than the highest guests. The first guest bids a unit price of 2 for between

social welfare seen to date in a valid allocation, then Ginseng 3GBs and 4GBs of memory (p = 2, r1 = 3, q1 = 4) and

considers two constrained allocations instead of the invalid the second guest bids a unit price of 1 for between 3GBs and

allocation. In the first one, guest g gets a memory quantity 5GBs of memory (p = 1, r1 = 3, q1 = 5). In the first stage,

that is large enough to cover all of R. In the second one, g we sort the guests by price, and try to allocate 4GB to guest

gets a memory quantity that is small enough such that it does 1 and 2GB to guest 2. This is an invalid allocation, because

not get any memory within R. The social welfare of the valid the second guest gets a quantity that falls in its forbidden

allocations is compared to find the optimal allocation. range (anything less than 3GBs). We therefore examine two

5.2 Payment Rule constrained systems instead. (1) The second guest gets no

more than the minimum of the forbidden range, which is

The payments follow the exclusion compensation principle, 0. In this case, the overall allocation is 4GB to the first

as formulated by Lazar and Semret [32]. Let q 00 k denote the guest, with a social welfare of 8. (2) The second guest is

memory that would have been allocated to guest k in an allocated at least as much as the maximum of its forbidden

auction in which guest i does not participate and the rest of range, i.e., at least 3GBs. Then the rest of the free memory

the guests bid as they bid in the current auction. Then guest is allocated by the order of prices, so the first guest gets the

i is charged a unit price p0i , which is computed as follows: other remaining 3GBs. The social welfare in this case is 9,

1 X and this is the chosen allocation.

p0i = 0 pk (qk00 − qk0 ) . (1)

qi According to the payment rule given in Equation 1, the

k6=i

guests pay p01 = 13 (1 [5 − 3]) = 32 and p02 = 13 (2 [4 − 3]) =

According to this payment rule, when guest i is charged 2

3 , because in each other’s absence they each would have

p0i qi0 , it actually pays for the damage that its bid made to the gotten all of the memory they wanted.

benefit of the rest of the guests. We note that to compute the

payment for a guest that gets allocated some memory, the

constrained divisible good allocation algorithm needs to be

6. Guest Strategy

computed again without this guest. In total, the allocation So far, we discussed Ginseng system’s architecture, and the

procedure needs to be called one time more than the number MPSP memory auction from the auctioneer’s point of view.

of winning guests. But what should guests who participate in MPSP auctions

do? How much memory should they bid for and how much

5.3 Complexity should they offer to pay for it? In an exact VCG auction,

The problem that the MPSP algorithm solves—finding the guests would be expected to inform the host about their

the memory allocation that maximizes the social welfare valuation for different memory quantities. However, the re-

function—is defined over the domain of memory quantities duced MPSP bidding language lightens the computational

that guests agree to rent. This domain is not convex because burden off the host and leaves the choice of memory quan-

the forbidden ranges create “holes” in it. Maximizing a func- tity with the guest. Multi-bid auctions are further discussed

tion over a non-convex domain is at least as hard as the knap- in Section 10.

sack problem, and therefore NP-hard. In the worst case the In this section we present an example bidding strategy

algorithm needs to compute the social welfare which results we developed. It is used by the guests in the performance

from each forbidden range being completely allocated or evaluation in Section 8. Our guest wishes to maximize the

completely denied: 2M different divisible allocations, where utility it estimates it will derive from the next auction. This

M is the number of all the forbidden ranges in all the bids. is a natural class of bidding strategies in ad auctions [9].

Each such allocation takes O(N ) to compute, where N is Our guest needs to decide how much memory to bid for,

the number of bids, and each payment rule requires O(N ) and at what price. We show in Section 6.1 that for any

allocations to be computed. Hence, the time complexity of memory quantity, the best strategy for the guest would be

MPSP is O(N 2 · 2M ). to bid its true valuation for that quantity. To choose the

Ginseng: Market-Driven Memory Allocation 6 2013/11/11maximal quantity it wants to bid for, the guest compares its Figure 3: Strategies for choice of unit price for two maxi-

estimated utility from bidding for the different quantities, as mal quantities, using the same valuation function. Figure 3

described in Section 6.2, with the help of on-line algorithms demonstrates a single desired range strategy for a concave

(Section 6.3). monotonically rising part of the valuation function. Figure 3

demonstrates a multiple desired range strategy for a non-

concave, not even monotonically rising part of the valuation

6.1 Choosing a Bid Price function.

In this subsection we assume the guest has decided how (a) Single range (b) Multiple range

much memory (qm , or q for short) it wants to bid for and

show how much it should bid for it (p(q)). For the sim-

ple case of an exact desired memory quantity (m = 1,

rm = qm = q), for any value q, bidding the mean unit

valuation of the desired quantity p(q) = V (base+q)−V q

(base)

is the best strategy, no matter what the other guests do. By

bidding lower than p(q), the guest risks losing the auction;

by bidding higher it risks operating at a loss (paying more

than what it thinks the memory is worth).

For less simple cases when the guest bids for a range of

memory quantities up to q, if the valuation function is (at When the auction has reached a steady state—when a

least locally, in the range up to q) concave monotonically ris- guest’s won goods and payment turn out the same in sub-

ing, bidding p(q) is still the best strategy for q regardless of sequent auctions in response to the same strategy—the guest

other guests’ bids: p(q) is the guest’s minimal valuation for already knows how much memory it can get for any bid it

the range because the unit valuation drops with the quantity. makes. The guest is incentivized to raise its bid price to a

See, for example, Figure 3, where the valuation function is maximum, thus increasing the exclusion compensation that

above the line connecting the valuation of 1200 MB with the other guests pay and making them more considerate. Hence,

base (400 MB) valuation. Since the connecting line’s slope our guests always bid p(qm ). In our experiments, a steady

is the mean unit valuation of 1200MB, any point above the state is typically reached after 3 auction rounds.

line is a point whose mean unit valuation is higher than that

of 1200MB. 6.2 Choosing a Maximal Memory Quantity qm

In the rest of the cases, the valuation function is non- To maximize the guest’s estimated utility from the next auc-

concave or not even monotonically rising. In such func- tion, the guest chooses qm . Our guest assumes it is in a

tions, the mean unit-valuation p(q) may rise locally with steady state, and estimates its utility using past auction re-

quantity: For example, in Figure 3, p(2200M B) is higher sults. The guest assesses its utility from the next auction by

p(1800M B). This means that simply bidding for 2200MB estimating the quantity of memory it will get qest , which is

with a unit-price of p(2200M B) may result in getting a estimated for simplicity as qm if p > pmin , and as 0 other-

memory quantity for which the guest is not willing to pay wise. pmin is the lowest price the guest can offer and still

as much. The guest can avoid getting quantities for which have a chance of getting any memory at all. The guest’s es-

the mean unit valuation is lower than its bid price by ex- timation of pmin is discussed in Section 6.3.

cluding those quantities from its bid using the forbidden The utility estimation also requires an estimation of the

ranges mechanism. In this example, the guest uses a for- unit price to be paid for the allotted memory amount, pest .

bidden range to exclude the quantities [1700, 2000] MBs of The guest’s utility is its valuation of the memory it gets

memory from its range, since it is not economical to bid for minus the charge. The guest estimates its utility from bidding

them with a unit price of p(2200M B). (p, qm ) by dividing it to two components: (1) its estimate

The forbidden ranges mechanism allows the guest to bid of the valuation improvement from winning the memory

p(q) without a risk of operating at a loss. However, the guest it expects to win and (2) its estimate for the charge. For

may have something to gain by bidding with a unit-price concave valuation functions V (·), the estimated utility is

that is less than p(q). If the guest does not get the maximal maximized when p(qm ) = pmin . In such cases, the guest

memory quantity it bid for, it can try exploring its strategy needs only estimate and predict pmin to bid optimally. For

space. It can retain q, lower the bid price, and decrease the other (non-concave) functions, to find the memory quantity

forbidden ranges. Thus the guest enables the host to give that maximizes the estimated utility the guest must evaluate

it a partial allocation in more cases, when the alternative the estimated charge. To this end it assesses the estimated

might be not getting any memory at all. In Figure 3, the memory quantity it will get qest as described above and the

lowest bid-price worth exploring is labeled as “Low Slope”: estimated unit price it will pay according to Section 6.3.

it eliminates any need of forbidden ranges. If several values of qm maximize the estimated utility, the

Ginseng: Market-Driven Memory Allocation 7 2013/11/11guest prefers to bid with higher p values, which improve its memcached benchmarking utility. To test a large number

chances of winning the auction. of guests quickly, we configured memslap such that mem-

cached’s performance graphs saturated at 2GB. To this end

6.3 Predicting Guest Utility we ran memslap with a key size of 249 bytes, a value size

In this subsection we describe the learning algorithms used of 1024 bytes, a window size of 100K, and a get/set ratio of

by the guest to predict its utility. The guest evaluates pmin 30:70, for 200 seconds each time. The application’s perfor-

for the current round on the basis of ten recent borderline mance is defined as the “get” hits per second. 1

bids that are announced by the host. The price to be paid, MemoryConsumer is an elastic memory benchmark. It

p0 , depends mainly on losing bids. To predict p0 , the guest tries to write to a random 1MB-sized cell from a predefined

maintains a historical table of (p0 , q 0 ) pairs, and uses it as a range. If the address is within the range of memory currently

basic estimate for pest . The pest estimate is further bounded available to the program, then 1MB of data is actually writ-

from above by the highest losing bid price in the last auction ten to the memory address and it is considered a hit. Af-

round. ter each attempt, whether a hit or a miss, it sleeps for 0.1

seconds, so that misses cost time. The application’s perfor-

mance is defined as the hits per second. This application is

7. Experimental Setup

tailored as a pure memory overcommitment benchmark, in

In this section we describe the experimental setup in which order to create clean tests, unhindered by bottlenecks in re-

we evaluate Ginseng. sources other than memory. As with memcached, we chose

Alternative Memory Allocation Methods. We com- a range of 1950 cells, so that performance graphs would sat-

pared Ginseng with memory overcommitment and allocation urate at 2GB.

methods that are available to commercial IaaS providers: We profiled the performance of each workload with vary-

static, host-swapping and MOM. In the Static method, ing amounts of memory to create its perf(mem, load) func-

each guest is allocated a fixed amount of memory without tion. We measured performance under different loads for

any overcommitment. This is a common method in public four concurrent guests without memory overcommitment,

clouds. When relying on host-swapping, each guest gets a as also done by Hines et al. [27]. We gradually increased

fixed memory quantity regardless of the number of guests, and decreased the physical memory in small steps, wait-

and the host is allowed to swap guest memory to balance ing in each step for the performance to stabilize. For mem-

memory between guests as it sees fit. This method is the cached we waited and measured the performance for 200

fallback of many overcommitment methods. The Memory seconds, and for MemoryConsumer for 60 seconds. The

Overcommitment Manager (MOM) [34] collects data from perf(mem, load) graphs can be seen in Figure 2 for the elas-

its guests to learn about their memory pressure and continu- tic Memcached and Figure 2 for MemoryConsumer.

ously adjusts their balloon sizes to make the guests feel the Load. We defined “load” for memcached and Memo-

same memory pressure as the host. This is a state-of-the-art ryConsumer as the number of concurrent requests being

overcommitment method that is freely available, but it is not made. We used coordinated dynamic loads, where each

a black-box method: it relies on probes inside the guests, and pair of guests exchange their loads every Tload . The load-

it can be easily circumvented by a malicious guest. exchange timing is not coordinated among the different

Workloads. To experiment with overcommitment trade- guest pairs in the experiments. Loads are in the range [2, 10].

offs, we used benchmarks of elastic memory applications: The total load is always the number of guests ×6, so that

applications that can improve their performance when given the aggregate hits per second of different experiments will

more memory on-the-fly over a large range of memory quan- be comparable. The load values and their exchange tim-

tities, and can return memory to the system when needed. We ing were chosen to increase the diversity among the guests,

experimented with a modified elastic memcached and with as expected in a real system, where guests’ loads change

MemoryConsumer, a dedicated dynamic memory bench- independently of other guests. Guests with different loads

mark. Both applications interacted with the Ginseng guest also have different memory valuation functions, and are thus

agent to dynamically adjust their heap sizes when they won more diverse, as in a real system.

or lost memory: the Ginseng agent informed the applica- Machine Setup. We used a cloud host with 12GB of

tion of the upcoming change and the application reacted by RAM and two Intel(R) Xeon(R) E5620 CPUs @ 2.40GHz

reducing its working-set size accordingly, so that when the with 12MB LLC. Each CPU has 4 cores with hyper-threading

balloon is inflated, the system would not run out of memory. enabled, for a total of 16 hardware threads. The host ran

Elastic memcached is a version of memcached that can Linux with kernel 2.6.35-31-server-#62-Ubuntu, and

change its heap size on-the-fly to respond to guest memory the guests ran 3.2.0-29-generic-#46-Ubuntu. To re-

changes. When ordered, memcached can free some internal- duce measurement noise, we disabled EIST, NUMA, and

cache slabs (the less-needed ones, according to its internal C-STATE in the BIOS and Kernel Samepage Merging

statistics), or alternatively increase its internal cache size.

Memcached was driven by a memslap client, a standard 1 Elastic-memcached is available from ANON. INFO..

Ginseng: Market-Driven Memory Allocation 8 2013/11/11Method/Memory (GB) Initial Maximal tings make more sense for a production VM than the default

Ginseng 0.6 10 settings (vm.overcommit memory=0) that are intended for

Static 11.25/N 11.25/N a bare-metal OS. In a VM with the default settings, an ap-

Host-swapping 10 10 plication which needs 600MB would have required on our

MOM 0.6 10 system 300MB more, which it could not make use of. These

Hinted host-swapping 2 2 300MB would only be available for use by other virtual ma-

Hinted MOM base 2 chines.

Time Scales. Three time scales define the usability of

Table 1: Guest configuration: initial and maximal memory memory borrowing and therefore the limits to the exper-

values for each overcommitment method. N denotes the iments we conducted: the typical time that passes before

number of guests. the change in physical memory begins to affect perfor-

mance (e.g., cache-warming time—time for the cache to

be filled with relevant data), Tmemory ; the time between

(KSM) [4] in the host kernel. To prevent networking bot- auction rounds, Tauction ; a typical time scale in which con-

tlenecks, we increased the network buffers. We dedicated ditions (e.g., load) change, Tload . Useful memory borrowing

hardware thread 0 to the host and pinned the guests to hard- requires Tload >> Tmemory . This condition is also neces-

ware threads 1 . . . N . When the host also drove the load for sary for on-line learning of the performance resulting from

memcached, memslap processes were randomly (uniformly) different memory quantities. To evaluate Tmemory , we per-

pinned to threads (N + 1) . . . 15. formed large step tests, making abrupt sizable changes in the

Memory Division. 0.75GB were dedicated to the host. To physical memory and measuring the time it took the perfor-

allow guests to both grow and shrink their memory alloca- mance to stabilize. We empirically determined good values

tions, we configured all guests with a high maximal memory for Tload on the basis of step tests results: 1000 seconds for

of 10GB, most of which was occupied by balloons, leaving memcached experiments, whereas for MemoryConsumer

each guest with a smaller initial memory. However, when 200 seconds are enough. We also used those step tests to

using host-swapping and MOM, extensive host-swapping verify that guest major faults were insignificant (indicating

caused the host to freeze when the maximal guest memory guest thrashing hardly happened), and to verify that the per-

was set to 10GB. Hence we also created a hinted (white-box) formance measurement method was getting enough time to

version of each of these methods to compare against: we in- evaluate the performance. For example, memslap required

formed the host that the applications actually cannot benefit 200 seconds to start experiencing cache misses.

from the full 10GB, and that a rational guest would only In realistic setups providers should set Tauctionstatic hinted-host-swapping Upper Bound

Figure 4: Valuation functions for different loads ginseng hinted-mom Ginseng Simulation

4.5

(a) MemoryConsumer (b) Elastic Memcached 4.0

3.5

Social Welfare [$/s]

8 9

load: 10 3.0

7 load: 8

8

2.5

Valuation [$/s]

Valuation [$/s]

6 load: 6 7

load: 4

6 2.0

5 load: 2

load: 1 5 1.5

4 load: 10

4 load: 8

1.0

3 3 load: 6 0.5

2 2 load: 4

load: 2 0.0

1 1 5 6 7 8 9 10 11 12 13

load: 1

Number of VMs

0 0

0.6 1.0 1.4 1.8 2.2 0.6 1.0 1.4 1.8 2.2

Memory [GB] Memory [GB] (a) MemoryConsumer, valuation is a square of perfor-

mance

static hinted-host-swapping Upper Bound

periments. Ginseng guests use the strategy described in Sec- ginseng hinted-mom Ginseng Simulation

tion 6. 3.5

In both benchmarks, perf(mem) is a concave function. To 3.0

evaluate Ginseng’s abilities over non-concave functions, we

Social Welfare [$/s]

2.5

used performance valuation functions Vp (perf) that make 2.0

the resulting composed valuation function V (mem) non-

1.5

concave.

1.0

In the first experiment set (MemoryConsumer), each

guest i’s valuation function is defined as Vi (mem) = fi · 0.5

2 0.0

(perf(mem)) , where the fi values were drawn from the 6 7 8 9 10 11 12 13

Number of VMs

Pareto distribution, a widely used model for income and as-

set distributions [50]. We used a Pareto index of 1.1, which is (b) Memcached, first guest valuation is piecewise linear

reasonable for income distributions [51], and a lower bound

of 10−4 Khit$

. Figure 5: Social welfare (mean and standard deviation) un-

The “square of performance” valuation function is char- der different allocation schemes as a function of the number

acteristic of on-line games and social networks, where the of guests. The dashed lines indicate simulation-based upper

memory requirements are proportional to the number of bounds on Ginseng’s social welfare.

users, and the income is proportional to user interactions,

which are proportional to the square of the number of users. their predicted performance (neglecting cache warmup, for

The composed valuation function is illustrated in Figure 4. example). The tighter bound results from a simulation of

In the second experiment set (elastic memcached), each Ginseng itself. The looser bound results from a white-box

guest i’s valuation function is defined as V(mem) = fi · on-line simulation, that results in the theoretically optimal

perf(mem), where the fi values were distributed according to allocations given full offline information. The MOM and

a Pareto distribution with a Pareto index of 1.36 (according host-swapping methods yield negligible social welfare val-

to Levy and Solomon’s wealth distribution [33]), bounded in ues for these experiments, and are not presented.

the range [10−4 , 100] Khit

$

. The bounding represents the fact As can be seen in Figure 5, Ginseng achieves much bet-

that on-line trading does not span the whole range of human ter social welfare than any other allocation method for both

transactions: some are too cheap or too expensive to be made workloads. It improves social welfare by up to 15.8× for

on-line. The highest coefficient was set as: memcached and up to 6.2× for MemoryConsumer, com-

$ pared with both black-box approaches (static) and white-

0.1 Khit perf(mem) < 3.4 Khit

s

f1 = $ (2) box approaches (hinted-mom). As the number of guests in-

1.8 Khit otherwise.

creases, so does the potential for increased social welfare,

This sort of piecewise-linear valuation functions character- because more individual utilities are aggregated to compose

izes service level agreements that distinguish usage levels by the social welfare. However, each guest is allocated a fixed

unit price. The valuation function for the first guest is shown amount of memory (base) on startup, reducing our host’s

in Figure 4. free memory, which is available for auction; hence the rela-

We calculated the social welfare for each experiment us- tive peak in social welfare for 7 guests (MemoryConsumer).

ing each VM’s measured performance that VM’s valuation In the Memcached experiment the relative peak is flat be-

function. The social welfare of the different experiments is cause the first guest’s valuation is much higher than the rest.

compared in Figure 5. The figures contain two upper bounds In both experiment sets, Ginseng achieves 83%–100% of the

for the social welfare, achieved by simulating Ginseng’s auc- optimal social welfare. The sharp decline in Ginseng’s social

tion and assuming the guests perform exactly according to welfare for 13 guests comes when Ginseng no longer has

Ginseng: Market-Driven Memory Allocation 10 2013/11/11static hinted-host-swapping Upper Bound

ginseng hinted-mom Ginseng Simulation

and memory quantities, and using Figure 2) with perfor-

3.0 mance values measured during Ginseng experiments for the

2.5 same loads and memory quantities. The experimental values

Performance [102 hits]

2.0

were collected after the memory usage stabilized (more than

Tmemory after a memory change). The comparison shows

1.5

that the profiled data is accurate enough, as can be seen when

1.0

comparing Ginseng’s results in the full experiments to its re-

0.5 sults with simulated guests in Figure 5.

0.0

5 6 7 8 9 10 11 12 13

Number of VMs

9. Discussion: Host Revenue and Collusion

(a) MemoryConsumer, valuation is a square of perfor-

mance. Performance is in terms of hits per second. Ginseng does not attempt to maximize host revenue directly.

static hinted-host-swapping Upper Bound Instead, it assumes that the host charges an admittance fee

ginseng hinted-mom Ginseng Simulation

for the seed virtual machine and maximizes the aggregate

2.5

client satisfaction (the social welfare). Maximizing social

2.0 welfare improves host revenues indirectly because better-

Performance [104 hits]

satisfied guests are willing to pay higher admittance fees.

1.5

Likewise, improving each cloud host’s hardware (memory)

1.0 utilization should allow the provider to run more guests on

each host.

0.5 The guests we implemented reach a steady state using

indirect negotiations that result from their on-line strategy

0.0

6 7 8 9

Number of VMs

10 11 12 13

(in Section 6.3). More sophisticated guests may directly col-

lude and negotiate to ease their way into a steady state of

(b) Memcached, first guest valuation is piecewise linear. Perfor-

their choice [6]. They can complete the deal among them-

mance is in terms of “get” hits per second.

selves by subletting memory to each other or by making

Figure 6: Performance (mean and standard deviation) under side-payments. Such guests might bid differently than their

different allocation schemes as a function of the number of true valuation of the memory, or coordinate the memory

guests. The dashed lines indicate the performance according quantities they bid for such that they only bid together for

to the simulation from which the upper bounds on Ginseng’s as much as the host can offer, thus reducing and even elimi-

social welfare were derived. nating all charges.

The bright side of collusion is that it shortens the time it

takes the system to converge to a steady state. In particular,

enough free memory to answer even the needs of the most when the colluding guests only change their desired quan-

valuable guest. tities and keep bidding a true unit-price, the allocation still

As we saw above, Ginseng provides much better so- optimizes the social welfare.

cial welfare than alternative memory allocation method. But If the host cares about its revenue from the extra memory

does it do so at the cost of reducing overall aggregate per- rental, e.g., due to power-related operational costs, it can set

formance? As can be seen in Figure 6, Ginseng provides a minimum (reserve) price for the extra memory. This can

aggregate performance that is roughly equivalent to the per- easily be implemented by adding a dummy bidder that bids

formance of the better methods, namely hinted-MOM and on behalf of the host for all the memory with a unit-price that

static division, while providing an order of magnitude better equals the operational costs. The dummy bid will prevent the

social welfare. host from renting the extra memory at a loss, and will also

limit the gain that guests can achieve at the expense of other

8.2 Impact of Off-Line Profiling guests.

In our experiments we used performance graphs that were The dark side of collusion is that if guests do not bid

measured in advance in a controlled environment. In real with their true valuations, the allocation will not necessar-

life, artificial intelligence methods should be used to col- ily be optimal. However, bidding with a unit-price which is

lect such data on-the-fly, considering both data accumula- not the true valuation carries the risks of losing the auction

tion and data freshness in view of changing environment or working at a loss, as described in Section 6.1. Colluding

conditions. Since the accuracy of the best on-the-fly meth- to bid unit prices which are not true valuations is beneficial

ods is bounded by the accuracy of hindsight, we can bound when the other guest’s bids and the auctionable memory are

the impact of refraining from on-the-fly evaluation on the known in advance. In a cloud platform guests may join the

performance graphs. In Figure 7 we compare our bench- auction between rounds, or the valuation of existing guests

marks’ predicted performance (according to measured load might change, and a new bid might be made below a ficti-

Ginseng: Market-Driven Memory Allocation 11 2013/11/116 10 contents. Gong, Gu and Wilkes [18] and Shen et al. [48] used

Theoretical Theoretical

Actual [0.1 hits/s]

5 8 learning algorithms to predict guest resource requirements.

Actual [khits/s]

4

6

Sekar and Maniatis [47] argued that all resource use must

3 be accurately attributed to the guests who use it so that it can

4

2 be billed. In contrast, Ginseng lays the burden of metering

1 2 on the client.

0 0 Guest Hint Techniques. Schwidefsky et al. [46] used

0 1 2 3 4 5 6 0 2 4 6 8 10

Predicted [khits/s] Predicted [0.1 hits/s] guest hints to improve host swapping. Miłoś et al. [39] incen-

(a) memcached (b) MemoryConsumer tivized guests to supply sharing hints by counting a shared

page as a fraction of a non-shared page. Like Ginseng, their

Figure 7: Comparison of predicted performance values (ac- method can be applied to non-cooperative guests.

cording to the profile graphs, given load and memory alloca- Resource Allocation with Funny Money Funny money

tion) with measured performance. was used in shared systems to control resource allocation.

However, when using funny money, the problem is attach-

ing real value to it. For example, Waldspurger [54] used a

tious high bid, forcing the colluding guest to pay more than

proportionally fair allocation using tickets, which stood for

the memory is worth to it. The host can further increase the

shares. Tickets had to be allocated by a centralized know-all

risk involved in such bids by introducing uncertainty to the

control. In a cloud there is no know-all control that can al-

supply, for example, by randomly limiting the auctionable

locate tickets to separate economic entities. However, such a

memory [2]. This will usually not greatly hinder the opera-

control is not needed in a cloud which already charges clients

tion of non-colluding guests, but it will make the planning of

real money, which has intrinsic value.

collusion much harder.

General Resource Allocation For Monotonically Ris-

ing, Concave Valuations. Kelly [30] used a proportionally

10. Related Work

fair allocation: clients bid prices, pay them, and get band-

White-Box Memory Overcommitment. Heo et al. [25] bal- width in proportion to their prices. His allocation is optimal

anced memory allocations according to desired performance for price taking clients (who do not anticipate their influence

levels. Under memory pressure they divided the memory ac- on the price they pay). Popa et al. [43] traded off proportional

cording to a fair share policy. Nathuji, Kansal and Ghaf- fairness with starvation prevention. Johari and Tsitsiklis [28]

farkhah [41]’s guests specified several performance and pay- computed the price of anarchy of Kelly’s auction, and Sang-

ment levels and the host chose which level to fulfill. This ap- havi and Hajek [45] improved the auction in this respect.

proach guarantees the host demand for any excess produc- Maillé and Tuffin [37] extended the PSP to multi-bids,

tion power it has. Our approach is guest oriented, leaving thus saving the auction rounds needed to reach equilibrium.

the designation of the current required resource amount in Their guests disclosed a sampling of their resource valuation

the hands of the guest. In Ginkgo, Hines et al. [27] and Gor- function to the host, which computed the optimal allocation

don et al. [19] used optimization with constraint satisfaction according to these approximated valuation functions. One

to optimize a general social welfare function of the guests’ such single auction has the complexity of a single PSP auc-

performance. These works assume guest cooperation, while tion, times the number of sampling points. Non-concave or

we analyze the guest as a non-cooperative, selfish agent. Our non-monotonically rising functions require more sampling

work is the first work on memory allocation which assumes points to express them with the same accuracy, thus increas-

non-cooperative guests. ing the multi-bid auction’s complexity. Though a multi-bid

Grey-Box and Black-Box Techniques. Magenheimer [36] auction is more efficient for static problems, it loses its ap-

used the guests’ own performance statistics to guide over- peal in dynamic problems which require repeated auction

commitment. Jones, Arpaci-Dusseau, and Arpaci-Dusseau [29] rounds anyhow. Other drawbacks of the multi-bid auction

inferred information about the unified buffer cache and vir- are that the guest needs to know the memory valuation func-

tual memory by monitoring I/O and inferring major page tion for the full range; that frequent guest updates pose a

faults. Zhao and Wang [61] monitored use of physical pages, burden to the host; and that the guest cannot directly explore

and Zhao et al. [60] balanced memory between VMS on working points which currently seem less than optimal. (It

the basis of on-the-fly intermittently-built miss-rate curves. can do so indirectly by faking its valuation function.) In con-

Waldspurger [55] randomly sampled pages to estimate the trast, the MPSP auction leaves the control over the currently

quantity of unused guest memory, to guide page reclaim. desired resource allocation to the guest, who best knows its

These methods can be circumvented by a selfish guest, and own current and future needs. Maillé and Tuffin also showed

like white-box methods, ignore the client’s valuation of per- that the PSP’s social welfare converges to theirs [38].

formance. Gupta et al. [22] did not require any guest cooper-

ation for their content based page sharing. Wood et al. [57]

allocated guests to physical hosts according to their memory

Ginseng: Market-Driven Memory Allocation 12 2013/11/11You can also read