Fisher Information in Noisy Intermediate-Scale Quantum Applications


Johannes Jakob Meyer1,2

1 Dahlem Center for Complex Quantum Systems, Freie Universität Berlin, 14195 Berlin, Germany

2 QMATH, Department of Mathematical Sciences, Københavns Universitet, 2100 København Ø, Denmark

26-06-2021

arXiv:2103.15191v3 [quant-ph] 6 Sep 2021

The recent advent of noisy intermediate-scale quantum devices, especially near-term quantum computers, has sparked extensive research efforts concerned with their possible applications. At the forefront of the considered approaches are variational methods that use parametrized quantum circuits. The classical and quantum Fisher information are firmly rooted in the field of quantum sensing and have proven to be versatile tools to study such parametrized quantum systems. Their utility in the study of other applications of noisy intermediate-scale quantum devices, however, has only been discovered recently. Hoping to stimulate more such applications, this article aims to further popularize classical and quantum Fisher information as useful tools for near-term applications beyond quantum sensing. We start with a tutorial that builds an intuitive understanding of classical and quantum Fisher information and outlines how both quantities can be calculated on near-term devices. We also elucidate their relationship and how they are influenced by noise processes. Next, we give an overview of the core results of the quantum sensing literature and proceed to a comprehensive review of recent applications in variational quantum algorithms and quantum machine learning.

Progress in science and engineering as well as considerable investments have increased our capabilities to precisely control quantum systems, leading to the development of quantum devices that are capable of impressive feats. One prominent example of this new generation of devices are quantum computers. They have low numbers of qubits and are still plagued by noise and relatively low coherence times and cannot yet fulfill the promises of fault-tolerant quantum computation, but it was recently shown that they can outperform classical computers [1] – however only in certain contrived tasks with unknown practical relevance. Yet, given these positive results and the speed of improvement of these devices, it is no surprise that the search for practically relevant applications of these noisy intermediate-scale quantum (NISQ) [2] computers has become a very active field of research in quantum information science. Particularly prominent candidates to make use of near-term quantum computers are variational quantum algorithms [3, 4] where parametrized quantum states are combined with a classical computer into a hybrid quantum-classical algorithm [5]. Another much researched direction is to use these quantum devices as quantum machine learning models [6], again employing parametrized quantum states.

Along with the development of these new techniques, there is a need for a better understanding of parametrized quantum systems and consequently the approaches that employ them. In the field of quantum sensing, a particular type of parametrized quantum system – namely parametrized by the parameters that are sensed – has long been studied. The principal tools employed in this regard are the classical and quantum Fisher information.

Intuitively, the quantum Fisher information is a measure of how much a parametrized quantum state changes under a change of a parameter. This parameter could be the underlying magnetic field that a quantum system is designed to sense, but it can also be a control knob turned by an experimenter or the angle of a rotation gate that is changed by a quantum computer programmer. The classical Fisher information in turn captures how much a change of the underlying parameter affects the probabilities with which different outcomes of a specific measurement that is performed on the parametrized quantum state are observed.

As parametrized quantum states appear in many approaches in NISQ applications beyond quantum sensing, it is no surprise that the first works have explored the use of classical and quantum Fisher information to study them. We do, however, believe that there are many more fruitful applications waiting to be discovered. This article thus intends to further popularize classical and quantum Fisher information as versatile tools to understand NISQ applications.

While much has been written about the Fisher information, in classical and quantum settings alike, the barriers to penetrate the literature can be quite substantial. This article aims to complement the excellent reviews provided in Refs. [7–9] with a tutorial

that provides a very gentle and intuitive introduction to both the classical and quantum Fisher information and requires only few prerequisites. Since many of the results related to these quantities were developed in the context of quantum sensing, we will also review the results from this field that are most relevant to understanding applications of Fisher information. However, we will not explore specific applications, as it is not the goal of this article to provide a comprehensive introduction to quantum sensing. We conclude this work with a comprehensive review of the different uses of Fisher information that have emerged in quantum machine learning and variational quantum algorithms.

The principal target audience of this paper is people who are interested in learning about Fisher information and its use in the context of NISQ applications. It thus only requires familiarity with the absolute basics of NISQ applications. The intuitive approach to the subject should also be beneficial to a wider class of readers, e.g. people who are taking their first steps in quantum sensing. After reading this paper, the reader will have an intuitive understanding of the origins and applications of both classical and quantum Fisher information and will know how they are related to each other. We will discuss how these quantities can be calculated in the context of NISQ applications, how the ever-present device noise enters the picture and how they are applied in quantum machine learning and optimization of variational quantum algorithms. Along the way, we will review important results from quantum sensing and try to demystify technical terms usually encountered when reading about Fisher information like “metric” or “pullback” and quantum-specific jargon like “Heisenberg scaling”.

This work is organized as follows: In Sec. 1, we will build intuition about parametrized quantum states and how we can properly assign distances to pairs of parameters. Sec. 2 outlines how we can extract information about parametrized quantum systems in the form of information matrices. We follow up on this with the introduction of the classical Fisher information matrix in Sec. 3 and the quantum Fisher information matrix in Sec. 4. We will put a particular focus on how we can actually compute these quantities in a NISQ context. The subsequent Sec. 5 elucidates the relationship between the quantum and the classical Fisher information, whereas Sec. 6 treats the role of noise, a very important impediment and namesake of NISQ devices. In Sec. 7 we will highlight the different areas in which the classical and quantum Fisher information have been applied in the context of NISQ devices, rounding up with a short outlook on fascinating applications beyond the scope of NISQ devices in Sec. 8. The work concludes with a look to the future in Sec. 9. To make this work as self-contained and explanatory as possible, most derivations are found in the Appendix, alongside proofs of the properties of classical and quantum Fisher information. In the course of this work we will give recommendations on literature for further study.

Accepted in Quantum 2021-09-06, click title to verify. Published under CC-BY 4.0.

1 Parametrized Quantum States

The study of NISQ devices places a huge emphasis on the study of parametrized quantum states, i.e. quantum states that depend continuously on a vector of parameters $\theta \in \mathbb{R}^d$. We will denote them with $|\psi(\theta)\rangle$ for pure states and $\rho(\theta)$ for mixed states. Parametrized quantum states arise in many settings: In NISQ computing, parametrized quantum circuits are used as an ansatz for variational quantum algorithms [3]. In quantum optimal control, the parameters of the radio frequency pulses determine the executed gate or the created quantum state. In quantum metrology, a quantity that needs to be measured, for example a magnetic field, is imprinted on a quantum state via an interaction, thus parametrizing it.

It is of course of tremendous interest to understand what happens if the parameters $\theta$ are changed. We can attempt to quantify this by measuring the distance between the parameters themselves – which can be done via the regular Euclidean distance. But only in the rarest cases do all parameters have an equal influence on the underlying state. So a smarter way to measure distances between parameters would actually be to measure the distance of the associated states. If we are given a distance measure $d$ between quantum states, we can define – by a slight abuse of notation – a new distance measure between the associated parameters:

$$d(\theta, \theta') = d(\rho(\theta), \rho(\theta')). \tag{1}$$

This strategy of measuring the distance in the space of quantum states instead of the parameter space is known as a pullback, because we pull back the distance measure to the space of parameters.

In the following we will be concerned with distance measures that are monotonic. This means that the distance between two quantum states cannot increase if both are subject to the same quantum operation. A quantum operation can take many forms, including unitary evolution, noisy evolution or even measurements – but we will formalize this more later on. Monotonicity is a very desirable property for a distance measure. Performing a quantum operation cannot add additional information, so it should not be easier to distinguish two states after such an operation is performed. Moreover, if the operation is noisy, it will actually destroy information, which should be echoed in a decrease of the associated distance as adding noise cannot make two states more distinguishable [10].

We have already argued that it is more sensible to measure distances between parameters of a quantum

Accepted in Quantum 2021-09-06, click title to verify. Published under CC-BY 4.0. 2has to satisfy the axiomatic definition of a divergence

as employed in statistics, which is less strict than the

definition of distance encountered in other areas of

mathematics.

In summary, we have now set the arena: the pa-

rameters θ define a quantum state ρ(θ) which then

undergoes the measurement M. We can measure dis-

tances between parameters by going to the space of

quantum states or to the space of probability distri-

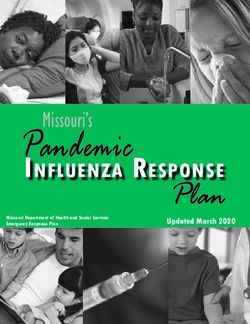

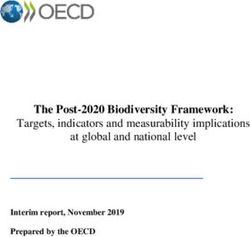

Figure 1: Large distances between parameters θ and θ 0 need

not correspond to large distances of the corresponding quan-

butions over the measurement outcomes, dependent

tum states ρ(θ) and ρ(θ 0 ). Equally, large distances between on the question we seek to answer. Fig. 1 illustrates

quantum states need not correspond to large distances be- these relations.

tween the output probability distributions after the measure-

ment, pM (θ) and pM (θ 0 ). Measuring the distance between

two parameters by measuring the distance between the corre- 2 Information Matrices

sponding quantum states or output probability distributions

is therefore a sensible approach known as a pullback. We are often confronted with scenarios where a quan-

tum system is in a state associated to a particular

parameter θ but where it is important to understand

state by measuring the distances in the space of quan- how much a change of the parameter θ in a partic-

tum states. But as we are mere classical observers, ular direction results in a change of the underlying

any NISQ application must involve a measurement of quantum state or the output probability distribution.

the underlying quantum state. A measurement will To gain that understanding, we will look how a

inevitably collapse the quantum state into a classi- slight perturbation of the parameter θ + δ reflects in

cal probability distribution over the possible measure- the chosen distance by analyzing d(θ, θ+δ). If the dis-

ment outcomes. We can formalize this by defining a tance measure d is differentiable, we can develop this

measurement M = {Πl }, where the operator Πl iden- into a Taylor series around δ = 0. Because d is a dis-

tifies the l-th outcome of the experiment [10]. In NISQ tance measure, we can assume that it is both positive

applications, the operators {Πl } are usually just the and vanishes for identical parameters, i.e. d(θ, θ) = 0

projectors onto the basis states. The probabilities of is a minimum. But we know that the first order con-

observing the different outcomes are then given by tributions vanish around minima and that the second

order is therefore the first contribution of the Taylor

pl (θ) = Tr{ρ(θ)Πl }. (2)

series that does not vanish. To write down the Taylor

We will use pM (θ) to refer to the full output distri- expansion, we first define the matrix M with entries

bution for a specific measurement M. We will drop

∂2

the subscript M if we talk about generic probability M (θ)ij = d(θ, θ + δ) . (4)

distributions. ∂δi ∂δj δ=0

If we fix a certain measurement M, we also fix

In more mathematical terms, this is the matrix of

a certain way of collapsing a parametrized quantum

second order derivatives – also known as the Hessian

state into a probability distribution which is now

– of the function

also dependent on the parameter θ. We thus have

a parametrized probability distribution. But now the gθ (δ) = d(θ, θ + δ), (5)

same argument that we used to motivate measuring

the distance between parameters in the space of quan- at δ = 0. With this we can express the Taylor expan-

tum states applies here, too. A large change in the sion as

underlying quantum state might not correspond to

a large change in the output probability distribution d

1 X

that is observed. If we fix a measurement, we should d(θ, θ + δ) = δi δj M (θ)ij + O(kδk3 ) (6)

2 i,j=1

therefore consider the pullback of a distance d between

two probability distributions 1 T

= δ M (θ)δ + O(kδk3 ). (7)

2

0 0

dM (θ, θ ) = d(pM (θ), pM (θ )). (3)

The matrix M (θ) therefore captures all we need to

In the following, we require some very natural prop- know about the local vicinity of θ in parameter space,

erties from the distance measures between quantum but measured by the distance d in the underlying

states or probability distributions we employ. First, space of either quantum states or output probability

that it is always positive d(θ, θ 0 ) ≥ 0 and second, distributions! Intuitively, large entries of M (θ) indi-

that the distance between identical objects is zero cate that a change in the corresponding parameters

d(θ, θ) = 0. This means that the distance measure results in a large change of the underlying object. In

the following, we will not denote the dependence on $\theta$ explicitly and simply use the notation $M$.

The matrix $M$ is an example of a metric. To elucidate the meaning of this, we first need to remind ourselves how measuring distances and angles in Euclidean space works through the standard scalar product. It is defined as

$$\langle \delta, \delta' \rangle = \delta^T \delta' = \sum_{i=1}^{d} \delta_i \delta'_i. \tag{8}$$

The standard scalar product acts as an “umbrella” of sorts as it allows us to measure lengths

$$\|\delta\| = \sqrt{\langle \delta, \delta \rangle}, \tag{9}$$

distances

$$d(\delta, \delta') = \sqrt{\langle \delta - \delta', \delta - \delta' \rangle}, \tag{10}$$

and angles between vectors

$$\angle(\delta, \delta') = \arccos \frac{\langle \delta, \delta' \rangle}{\sqrt{\langle \delta, \delta \rangle \langle \delta', \delta' \rangle}}. \tag{11}$$

It is quite suggestive that $\delta^T \delta' = \delta^T \mathbb{I} \delta'$ resembles the expression in Eq. (7) with $M$ replaced by the identity matrix $\mathbb{I}$. And indeed, we can use the matrix $M$ to define a new scalar product

$$\langle \delta, \delta' \rangle_M = \delta^T M \delta' \tag{12}$$

that now includes information about the local environment of $\rho(\theta)$ or $p_{\mathcal{M}}(\theta)$! And this is what a metric does in a nutshell – it allows us to measure distances and angles between vectors in parameter space, but with the local structure of the underlying quantum states or probability distributions parametrized by those vectors taken into account.

Because these matrices contain information about the underlying quantum states and probability distributions – which are by nature objects that have an information theoretic meaning – we will call them information matrices.

3 The Classical Fisher Information

After having kept the derivations general, we now turn our attention to the Fisher information itself and begin with the classical case. As the name suggests, the classical Fisher information is defined for parametrized probability distributions. In the context of NISQ devices, this means the probability distributions of measurement outcomes. To make use of the machinery we developed in the last section, we need a distance measure between probability distributions. There exist numerous ways to measure distances between probability distributions, but one of the most popular is certainly the Kullback-Leibler (KL) divergence, also known as the relative entropy. It is defined as¹

$$d_{\mathrm{KL}}(p_{\mathcal{M}}(\theta), p_{\mathcal{M}}(\theta')) = \sum_{l \in \mathcal{M}} p_l(\theta) \log \frac{p_l(\theta)}{p_l(\theta')}. \tag{13}$$

¹ Note that there are edge cases where the KL divergence is not properly defined, for example if there exists a $p_l(\theta') = 0$ where at the same time $p_l(\theta) \neq 0$. We will exclude these cases in this work.

The intuition behind the KL divergence is not obvious at first sight, but Nielsen and Chuang give some accounts in Ref. [10]. Imagine we are given an unknown probability distribution that can be either $p_{\mathcal{M}}(\theta)$ or $p_{\mathcal{M}}(\theta')$ and we are tasked with deciding which of the two distributions it is. Then, the KL divergence essentially captures how fast the “false negative” error decreases with the number of repetitions we are allowed when there is a constraint on the “false positive” probability.

Now, we need to perform the second order expansion to get a formula for the corresponding information matrix. For conciseness of the main text, you find the complete derivation in App. A. The result of the calculation is the following formula for the information matrix associated with the KL divergence:

$$[M_{\mathrm{KL}}]_{ij} = -\sum_{l \in \mathcal{M}} p_l(\theta) \frac{\partial^2}{\partial \theta_i \partial \theta_j} \log p_l(\theta) \tag{14}$$

$$= \sum_{l \in \mathcal{M}} \frac{1}{p_l(\theta)} \frac{\partial p_l(\theta)}{\partial \theta_i} \frac{\partial p_l(\theta)}{\partial \theta_j}. \tag{15}$$

The two lines agree because the probabilities sum to one for every $\theta$, so that $\sum_l \partial_i \partial_j p_l(\theta) = 0$.

The matrix $M_{\mathrm{KL}}$ is nothing else than the (classical) Fisher information matrix (CFIM) and we will in the following denote it as $I = M_{\mathrm{KL}}$. A resource with substantial information about it is the textbook by Lehmann and Casella [11]. For the reader’s convenience, we list and prove the most important properties of the classical Fisher information matrix in App. B.

Uniqueness. We derived the classical Fisher information from the Kullback-Leibler divergence. But what happens if we repeat the same procedure for another distance measure? It turns out that the derivation will always yield a constant multiple of the classical Fisher information if the distance measure is monotonic.

We briefly introduced the idea of monotonicity in Sec. 1, but we will formalize it now. To this end, we first need another concept, namely that of a stochastic map. A stochastic map is a linear operation that takes in probability distributions and always outputs probability distributions. This is a very broad definition and includes every admissible thing we can do with probability distributions. We formally call a distance measure between two probability distributions $p$ and $q$ monotonic if the distance cannot increase under any

stochastic map $T$:

$$d(T[p], T[q]) \leq d(p, q). \tag{16}$$

The fact that we always end up with a constant multiple of the Fisher information if we start from a monotonic distance measure is known as the uniqueness of the classical Fisher information, which is a celebrated result due to Morozova and Chentsov [12]. A particularly important and widely applied monotonic distance measure between probability distributions is given by the total variation distance

$$d_{\mathrm{TV}}(p, q) = \frac{1}{2} \sum_l |p_l - q_l|. \tag{17}$$

It measures the maximum difference in probability that $p$ and $q$ can assign to the same event. It would be tempting to ask which information matrix is related to this distance measure. But we have to remember that we required the distance measure to be differentiable in order to define an information matrix – and as the total variation distance includes an absolute value function, it is not differentiable at $p = q$ as would be required for our construction. The total variation distance therefore does not induce an information matrix.

Calculation. To work with the classical Fisher information matrix in a NISQ context we need to actually calculate it. If we take a closer look at Eq. (14), we see that we need two ingredients to do so: First, the probabilities of the different measurement outcomes $p_l(\theta)$ and second their derivatives with respect to the parameters $\theta$, $\partial p_l(\theta)/\partial \theta_i$.

First, let us talk about the output probabilities. Each run of a NISQ device with a fixed measurement setting will add a data point, and with sufficiently many data points, the output probabilities $p_l(\theta)$ can be estimated. This is also how one approaches the estimation of expectation values: a unitary transformation changes the measurement basis to the eigenbasis of the desired operator $H = \sum_l h_l |h_l\rangle\langle h_l|$. We then perform multiple repetitions of the experiment, estimate the output probabilities $p_l(\theta)$ and then calculate the expectation value as

$$\langle H(\theta) \rangle = \sum_l p_l(\theta) h_l. \tag{18}$$

This means that an estimation of the output probabilities is actually a prerequisite for the calculation of expectation values, the most ubiquitous primitive in NISQ settings. Note however that the output probability distribution contains more information than the expectation value – which means that a faithful estimate of the probability distribution will usually require more runs of the experiment than an estimation of an expectation value.

In fact, in the worst case the number of samples required to estimate the probability distribution is proportional to the number of different measurement outcomes, which usually is exponential in the number of qubits [13]. This problem is ameliorated if many of the output probabilities are very small – in this case we need fewer samples to guarantee a good estimate. This can be understood intuitively: If we do not observe a particular measurement outcome $l$, we estimate $p_l = 0$. But as we know that $p_l$ is small, this is already a good estimate.

As the estimation of the output probability distribution is a very important task in NISQ settings, there also exist more sophisticated methods than just estimating the output probabilities from the number of times they were observed in a limited number of test runs. One possibility is a Bayesian approach, where a prior estimate of the output distribution is updated using new samples. Techniques based on machine learning have also been shown to be very effective tools to capture information about quantum states and therefore also the associated output probability distributions [14].

We now turn to the derivatives. In many cases, gradient-based schemes are deployed to optimize variational quantum algorithms. We will now argue that these schemes already entail the calculation of the derivatives of the output probabilities necessary for the calculation of the Fisher information matrix. These methods use either finite differences or the parameter-shift rule [15, 16]. In all cases, the derivatives of expectation values are calculated by evaluating expectation values at different parameter settings. We illustrate this by considering two-sided finite differences, where the derivative is approximated via a small perturbation $\epsilon$:

$$\frac{\partial}{\partial \theta_i} \langle H(\theta) \rangle \approx \frac{\langle H(\theta + \epsilon e_i) \rangle - \langle H(\theta - \epsilon e_i) \rangle}{2\epsilon}, \tag{19}$$

where $e_i$ denotes the $i$-th unit vector, or, equivalently, that we only perturb $\theta_i$. The following argument, however, equally works for other means of estimating derivatives.

In order to perform the finite-difference approximation, we have to compute the expectation values $\langle H(\theta \pm \epsilon e_i) \rangle$, which proceeds via the estimation of the output probability distribution as explained above. But this means that we actually compute the derivative of the output probability distribution:

$$\frac{\partial}{\partial \theta_i} \langle H(\theta) \rangle \approx \frac{\sum_l p_l(\theta + \epsilon e_i) h_l - \sum_l p_l(\theta - \epsilon e_i) h_l}{2\epsilon} \tag{20}$$

$$= \sum_l \frac{p_l(\theta + \epsilon e_i) - p_l(\theta - \epsilon e_i)}{2\epsilon} h_l \tag{21}$$

$$\approx \sum_l \frac{\partial p_l(\theta)}{\partial \theta_i} h_l. \tag{22}$$

This means that the same process we use to estimate derivatives of expectation values will also yield the

derivatives of the output probabilities we need to calculate the classical Fisher information matrix. Again a word of caution is advised, as the faithful estimation of the derivatives of the whole output probability distribution will usually require more runs of the experiment than used for estimating the derivative of an expectation value.

In summary, we learned that the processes that are already used to compute expectation values and their gradients implicitly give us the data necessary to estimate both the output probabilities $p_l(\theta)$ and their derivatives $\partial p_l(\theta)/\partial \theta_i$ and thus the whole classical Fisher information matrix. This brings us to the conclusion that the calculation of the Fisher information matrix is not harder than the calculation of expectation values and their derivatives, which are routine tasks in the context of NISQ computing.

We should also note that – especially for large quantum systems – one usually does not have sufficiently many samples to accurately estimate the whole distribution, which means that many elements of the probability distribution $p_l$ are estimated as zero. In this case, the corresponding terms in the formula for the classical Fisher information of Eq. (14) would diverge. This is usually mitigated by the fact that the associated derivatives are also zero, in which case we can just remove the corresponding terms from the sum. At points where $p_l$ vanishes but a derivative $\partial p_l(\theta)/\partial \theta_i$ does not, we actually have a discontinuity of the Fisher information [17], which means that it is not properly defined at these points, a property that is inherited from the Kullback-Leibler divergence. Another way around this problem is to use a Bayesian approach for the estimation of the output probability distribution with a prior that does not contain zero probabilities.

4 The Quantum Fisher Information

We always strive to find quantum generalizations of classical concepts, and the Fisher information is no exception. We know that any classical probability distribution can be expressed by some quantum state with a diagonal density matrix, which means that classical probability distributions are actually a subset of all quantum states. Because of the uniqueness of the classical Fisher information, any “quantum Fisher information” should reduce to the classical Fisher information when restricted to classical states.

We have furthermore learned that the classical Fisher information is associated with monotonic distance measures. To properly define monotonicity in the quantum setting we first need to introduce the quantum generalization of stochastic maps, the quantum channels. Stochastic maps are linear operations that map probability distributions to probability distributions. Likewise, quantum channels are defined as linear operations that take density matrices in and output density matrices, even when ancillary systems are included. Like stochastic maps, quantum channels are a very broad concept, including not only unitary and noisy evolution but also measurements! This can actually be seen quite easily: measurements turn a quantum state into a classical probability distribution over the measurement outcomes. But as we just learned, these are also a subset of all quantum states, which means that measurements match the requirements of a quantum channel. A distance measure $d$ between quantum states is monotonic if it cannot increase under any quantum channel $\Phi$:

$$d(\Phi[\rho], \Phi[\sigma]) \leq d(\rho, \sigma). \tag{23}$$

As quantum channels encompass basically everything we can do in quantum information processing, they are heavily studied. You can find introductions to quantum channels in the excellent book by Wilde [18], the classic book by Nielsen and Chuang [10] and the more mathematical lecture notes by Wolf [19].

To find a quantum generalization of the classical Fisher information, we will again use the machinery developed in Sec. 2. We will limit ourselves to pure quantum states in this section to keep the developments simple, but return to the general case in Sec. 6.

To start the machinery, we need to choose an appropriate distance measure between quantum states. From all possible contenders, the fidelity stands out due to its beautiful operational interpretation. The fidelity between two pure quantum states is given by

$$f(|\psi(\theta)\rangle, |\psi(\theta')\rangle) = |\langle \psi(\theta) | \psi(\theta') \rangle|^2. \tag{24}$$

The fidelity is so important because the probability with which we can distinguish the two states $|\psi(\theta)\rangle$ and $|\psi(\theta')\rangle$ when using the optimal measurement is

$$d_f(|\psi(\theta)\rangle, |\psi(\theta')\rangle) = 1 - f(|\psi(\theta)\rangle, |\psi(\theta')\rangle). \tag{25}$$

As we need a measure of distance that is 0 for indistinguishable states, we will use $d_f$ in our calculations.²

² We could also have used a different convention for our calculations, where the square root of the fidelity is used instead of the fidelity. App. C contains an explanation why this will only incur a constant prefactor.

We will again leave the complete derivation to App. D and directly go to the formula for the information matrix associated with the fidelity:

$$[M_f]_{ij} = 2 \operatorname{Re}\left[ \langle \partial_i \psi(\theta) | \partial_j \psi(\theta) \rangle - \langle \partial_i \psi(\theta) | \psi(\theta) \rangle \langle \psi(\theta) | \partial_j \psi(\theta) \rangle \right]. \tag{26}$$

In this formula, we have used the shorthand $\partial_i = \partial/\partial \theta_i$. If one checks the consistency of this information matrix with the classical Fisher information, however, one realizes that the prefactor is wrong. As this is only an artifact of how we defined our distance, we can simply correct this by multiplying with a constant. Doing so, we obtain the formula for the quantum Fisher information matrix (QFIM), which is associated with the distance $2 d_f$:

$$\mathcal{F}_{ij} = 4 \operatorname{Re}\left[ \langle \partial_i \psi(\theta) | \partial_j \psi(\theta) \rangle - \langle \partial_i \psi(\theta) | \psi(\theta) \rangle \langle \psi(\theta) | \partial_j \psi(\theta) \rangle \right]. \tag{27}$$

A great review about the quantum Fisher information matrix, various ways to calculate it and many applications was recently written by Liu et al. [7]. For the reader’s convenience, however, the important properties of the quantum Fisher information matrix are summarized and proven in App. E.

Non-Uniqueness. In the classical case, it does not matter from which monotonic distance measure between probability distributions we start our derivation; we will always end up with a constant multiple of the classical Fisher information. But this is actually not the case in the quantum setting! Indeed, as was proven by Pétz [20], there are infinitely many monotonic metrics in the quantum setting, and multiple ones have found application in quantum information theory. To distinguish the quantum Fisher information that arises from the fidelity from the other ones, it is also often referred to as SLD quantum Fisher information. Here, SLD stands for symmetric logarithmic derivative. This is related to a different way in which we can define the (SLD) quantum Fisher information. It is

$$\mathcal{F}_{ij} = \frac{1}{2} \operatorname{Tr}\{\rho (L_i L_j + L_j L_i)\} \tag{28}$$

where $L_i$ is the symmetric logarithmic derivative

Calculation. The quantum Fisher information is a much more peculiar object to work with than its classical counterpart. We will now outline the techniques that have been developed to tackle the calculation of the quantum Fisher information. We will keep our focus on the pure state case and come back to the practically important noisy case later.

In many NISQ applications, especially in NISQ computing, the parameters of the quantum state are usually rotation angles of gates with a certain generator, e.g. $U(\theta_i) = e^{-i\theta_i G_i}$. In this case, our job will be a lot easier. If we execute the circuit that prepares our state until the point where the gate in question is applied and call the state before the gate happens $|\psi_0\rangle$, we can express the derivative in terms of the generator:

$$|\partial_i \psi(\theta)\rangle = \partial_i e^{-i\theta_i G_i} |\psi_0\rangle = -i G_i |\psi(\theta)\rangle. \tag{30}$$

Putting this into the formula for the quantum Fisher information of Eq. (27), we get a simple formula for the diagonal elements:

$$\mathcal{F}_{ii} = 4 \left[ \langle \psi_0 | G_i^2 | \psi_0 \rangle - \langle \psi_0 | G_i | \psi_0 \rangle^2 \right]. \tag{31}$$

This is nothing but the fourfold variance of the generator $G_i$ with respect to the state $|\psi_0\rangle$. Note that we dropped the real part from the formula as the variance is already a real number. This means that we can get the diagonal elements of the quantum Fisher information matrix by executing the circuit in question until our gate happens and then evaluating the expectation values of the two observables $G_i^2$ and $G_i$.

(SLD) operator corresponding to the coordinate θi . If we have multiple gates happening in parallel, we

It is implicitly defined by can also use the same approach to compute the off-

diagonal elements of the quantum Fisher information

∂ρ 1

= (Li ρ + ρLi ). (29) matrix related to these gates. If we evaluate the for-

∂θi 2 mula for the quantum Fisher information in this case,

You can think of this operator as a way to rewrite we get

derivatives of the quantum state ρ. We chose to not {Gi , Gj }

introduce the quantum Fisher information via this Fij = 4 hψ0 | |ψ0 i

approach because it is not only unintuitive but also 2 (32)

somewhat unwieldy. This, however, is the way in − hψ0 |Gi |ψ0 ihψ0 |Gj |ψ0 i ,

which the first results on the quantum Fisher infor-

mation were obtained [21]. where {Gi , Gj }/2 = (Gi Gj + Gj Gi )/2 can be under-

Pétz also showed that the (SLD) quantum Fisher stood as the “real part” of the product Gi Gj . The

information that we just derived from the fidelity quantity above is nothing else but the fourfold co-

between quantum states is special because it is the variance of the generators Gi and Gj with respect to

smallest monotone metric in a certain sense [20]. One the state |ψ0 i. This means that we can evaluate all

can furthermore make a case that it is the “most natu- “blocks” of the quantum Fisher information matrix

ral” in certain respects. If your are interested in this, corresponding to gates executed in parallel by eval-

have a look at Ref. [22] which contains a pedagogi- uating the aforementioned observables on the state

cal exposition relating it to the “natural” geometry of right before the layer of parallel gates is executed,

the Hilbert space of quantum states. Another useful |ψ0 i [23].

property of the SLD quantum Fisher information that Elements of the quantum Fisher information ma-

sets it apart from the competition is that it is actually trix that correspond to gates that are not executed in

defined for pure states – many other possible gener- parallel are harder to deal with, because the observ-

alizations of the classical Fisher information become ables that need to be evaluated now also depend on

infinite in this case. the intermediary circuit elements between the gates.
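As a small numerical illustration of the variance and covariance formulas of Eqs. (31) and (32), the following sketch evaluates the block of the quantum Fisher information matrix for a layer of parallel gates directly from a state vector. The function name `qfi_block`, the choice of a Bell state for $|\psi_0\rangle$ and the generators $Z/2$ on each qubit are assumptions made for this example only; on hardware the expectation values would be estimated from measurements instead.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def qfi_block(psi0, generators):
    """Fourfold covariance matrix of Hermitian generators in the state psi0."""
    means = [np.vdot(psi0, G @ psi0).real for G in generators]
    n = len(generators)
    F = np.zeros((n, n))
    for i, Gi in enumerate(generators):
        for j, Gj in enumerate(generators):
            # Symmetrized product {G_i, G_j}/2, the "real part" of G_i G_j.
            anti = (Gi @ Gj + Gj @ Gi) / 2
            F[i, j] = 4 * (np.vdot(psi0, anti @ psi0).real - means[i] * means[j])
    return F

# Assumed example: Bell state right before a layer of two parallel
# Z-rotations exp(-i theta_k Z_k / 2), one on each qubit.
psi0 = np.array([1, 0, 0, 1], dtype=complex) / np.sqrt(2)
G1 = np.kron(Z, I2) / 2
G2 = np.kron(I2, Z) / 2
F = qfi_block(psi0, [G1, G2])
print(F)
```

For this entangled input the off-diagonal elements are as large as the diagonal ones, whereas a product state such as $|{+}\rangle|{+}\rangle$ would give the identity matrix.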

But we can still evaluate the elements of the quantum Fisher information in this case using more sophisticated techniques.

If the quantum gates that shall be differentiated support a parameter-shift rule, we can do this across layers. A parameter-shift rule [16] states that the expectation value of any operator $O$ evaluated on a state $|\psi(\boldsymbol{\theta})\rangle$, $\langle O(\boldsymbol{\theta}) \rangle = \langle \psi(\boldsymbol{\theta}) | O | \psi(\boldsymbol{\theta}) \rangle$, can be differentiated as

$$\frac{\partial}{\partial \theta_i} \langle O(\boldsymbol{\theta}) \rangle = r \left[ \langle O(\boldsymbol{\theta} + \tfrac{\pi}{4r} \boldsymbol{e}_i) \rangle - \langle O(\boldsymbol{\theta} - \tfrac{\pi}{4r} \boldsymbol{e}_i) \rangle \right], \tag{33}$$

where the constant $r$ depends on the nature of the gate in question. We see that the derivative can be evaluated by running the same circuit but "shifting" the parameter in question in both directions. Luckily, most quantum gates available on near-term quantum devices support such a parameter-shift rule.

Using the parameter-shift rule and the fact that the quantum Fisher information matrix can be expressed via the second derivatives of the fidelity, the authors of Ref. [24] derived a formula for the quantum Fisher information that reads

$$\begin{aligned} F_{ij} = -\frac{1}{2} \Big[ & |\langle \psi(\boldsymbol{\theta}) | \psi(\boldsymbol{\theta} + (\boldsymbol{e}_i + \boldsymbol{e}_j) \tfrac{\pi}{2}) \rangle|^2 - |\langle \psi(\boldsymbol{\theta}) | \psi(\boldsymbol{\theta} + (\boldsymbol{e}_i - \boldsymbol{e}_j) \tfrac{\pi}{2}) \rangle|^2 \\ & - |\langle \psi(\boldsymbol{\theta}) | \psi(\boldsymbol{\theta} - (\boldsymbol{e}_i - \boldsymbol{e}_j) \tfrac{\pi}{2}) \rangle|^2 + |\langle \psi(\boldsymbol{\theta}) | \psi(\boldsymbol{\theta} - (\boldsymbol{e}_i + \boldsymbol{e}_j) \tfrac{\pi}{2}) \rangle|^2 \Big]. \end{aligned} \tag{34}$$

This formula contains the fidelities between different parametrizations of the same state. To evaluate those via quantum circuits, we have two principal ways: if we denote the circuit preparing the quantum state $|\psi(\boldsymbol{\theta})\rangle$ as $U(\boldsymbol{\theta})$, then the overlap $|\langle \psi(\boldsymbol{\theta}) | \psi(\boldsymbol{\theta}') \rangle|^2$ can be evaluated by first applying the unitary $U(\boldsymbol{\theta})$ and then the inverse of $U(\boldsymbol{\theta}')$. After this circuit, the overlap is exactly the probability of observing the outcome 0 [25]:

[Circuit diagram: a register initialized in $|0\rangle$, followed by $U(\boldsymbol{\theta})$ and $U^\dagger(\boldsymbol{\theta}')$, then a measurement in which the outcome 0 is recorded.]

Alternatively, we can make use of the SWAP test, which achieves the same thing:

[Circuit diagram (SWAP test): an ancilla initialized in $|0\rangle$ with Hadamard gates $H$ before and after the controlled operation, on which $\langle Z \rangle$ is measured; two registers initialized in $|0\rangle$, labeled $U(\boldsymbol{\theta})$ and $U^\dagger(\boldsymbol{\theta}')$ in the original figure.]

Both approaches have their downsides. The compute-and-reverse approach has twice the depth of the original state preparation, and the SWAP test requires twice as many qubits, an additional ancilla and controlled SWAP operations but retains the same depth up to some constant number of gates. We should also not forget that these approaches only work for pure states. As soon as the operations preparing the state become noisy, the quantities calculated via these approaches do not coincide with the fidelity but instead with the state overlap, which does not give rise to the quantum Fisher information.

The property that the quantum Fisher information is associated with the second derivatives of the fidelity can also be used to get an approximation to the quantum Fisher information matrix via a finite differences approach. We have introduced the quantum Fisher information matrix as the second derivative of twice the fidelity distance. With finite differences, we can approximate the second derivative of any function $g(t)$ as

$$\partial_t^2 g(t) \approx \frac{g(t + \epsilon) - 2 g(t) + g(t - \epsilon)}{\epsilon^2}. \tag{35}$$

If we now set

$$g(t) = 2 d_f(\boldsymbol{\theta}, \boldsymbol{\theta} + t \boldsymbol{v}) \tag{36}$$

for some arbitrary unit vector $\boldsymbol{v}$, we see that $g(0) = 0$ because $d_f$ is a distance and that $g(t + \epsilon) = g(t - \epsilon)$ at $t = 0$ for small $\epsilon$, because the second order is the first non-vanishing order of the Taylor expansion of $d_f$. Putting all of this together allows us to compute the projection of the quantum Fisher information matrix in a particular direction:

$$\boldsymbol{v}^T F \boldsymbol{v} \approx \frac{4 d_f(\boldsymbol{\theta}, \boldsymbol{\theta} + \epsilon \boldsymbol{v})}{\epsilon^2}, \tag{37}$$

where $\boldsymbol{v}$ is an arbitrary vector of unit length and $\epsilon$ is small. We can therefore use quantum circuits that calculate the overlap between two pure states, along with small perturbations, to approximate the quantum Fisher information matrix. Note that you might also find other formulas that contain the square root of the fidelity. As argued in App. C, these formulas are equally valid and stem from a different convention for the fidelity distance.

Recently, Ref. [26] followed this spirit of approximation to generalize the simultaneous perturbation stochastic approximation (SPSA) method for the stochastic approximation of gradients to the calculation of the quantum Fisher information matrix. The original SPSA method computes estimates of the gradient of a function $f(\boldsymbol{\theta})$ using the finite differences approximation with small random perturbations of the parameters. The same strategy can be applied to the finite differences expression for the second derivative, where two distinct random perturbations are used to obtain an estimate of the Hessian matrix. Applied to the estimation of the quantum Fisher information matrix, the technique proceeds by first selecting two

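random vectors of unit length, $\boldsymbol{v}_1$ and $\boldsymbol{v}_2$. Then, for a small $\epsilon$, the following quantity is computed:

$$\delta F = f_{\boldsymbol{\theta}}(\epsilon \boldsymbol{v}_1 + \epsilon \boldsymbol{v}_2) - f_{\boldsymbol{\theta}}(-\epsilon \boldsymbol{v}_1) - f_{\boldsymbol{\theta}}(-\epsilon \boldsymbol{v}_1 + \epsilon \boldsymbol{v}_2) + f_{\boldsymbol{\theta}}(+\epsilon \boldsymbol{v}_1). \tag{38}$$

The shorthand $f_{\boldsymbol{\theta}}(\boldsymbol{\delta})$ denotes the fidelity of the system state with the state whose parameters are perturbed by $\boldsymbol{\delta}$,

$$f_{\boldsymbol{\theta}}(\boldsymbol{\delta}) = |\langle \psi(\boldsymbol{\theta}) | \psi(\boldsymbol{\theta} + \boldsymbol{\delta}) \rangle|^2. \tag{39}$$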
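To get a feeling for the size and sign of $\delta F$, the following sketch evaluates Eqs. (38) and (39) for a toy state. The two-qubit product state built from $R_Y$ rotations, the helper names and the value of $\epsilon$ are assumptions made for this illustration. For this particular state the quantum Fisher information matrix is the identity (each generator $Y/2$ has variance $1/4$ in $|0\rangle$ and the qubits are uncorrelated), so with the convention $2 d_f \approx \frac{1}{2} \boldsymbol{\delta}^T F \boldsymbol{\delta}$ one expects $\delta F \approx -\epsilon^2\, \boldsymbol{v}_1^T \boldsymbol{v}_2$ to leading order.

```python
import numpy as np

def state(theta):
    """Toy state (assumed example): RY(theta[0])|0> tensor RY(theta[1])|0>."""
    def ry_on_zero(t):
        return np.array([np.cos(t / 2), np.sin(t / 2)])
    return np.kron(ry_on_zero(theta[0]), ry_on_zero(theta[1]))

def fidelity(theta, delta):
    """f_theta(delta) = |<psi(theta)|psi(theta + delta)>|^2, Eq. (39)."""
    return abs(np.vdot(state(theta), state(theta + delta))) ** 2

def delta_F(theta, v1, v2, eps):
    """Combination of four fidelities from Eq. (38)."""
    return (fidelity(theta, eps * v1 + eps * v2)
            - fidelity(theta, -eps * v1)
            - fidelity(theta, -eps * v1 + eps * v2)
            + fidelity(theta, eps * v1))

rng = np.random.default_rng(0)
theta = np.array([0.3, 1.1])
v1 = rng.normal(size=2)
v1 /= np.linalg.norm(v1)  # random direction of unit length
v2 = rng.normal(size=2)
v2 /= np.linalg.norm(v2)
eps = 1e-2

dF = delta_F(theta, v1, v2, eps)
expected = -eps**2 * (v1 @ v2)  # leading order for F = identity
print(dF, expected)
```

The two printed numbers agree up to corrections of order $\epsilon^4$. On a device each fidelity would itself be a sampled estimate, so $\epsilon$ cannot be made arbitrarily small in practice.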

From this quantity, we can use the outer products of the perturbation vectors to generate an approximation of the quantum Fisher information matrix as

$$\hat{F} = -\frac{\delta F}{2 \epsilon^2} (\boldsymbol{v}_1 \boldsymbol{v}_2^T + \boldsymbol{v}_2 \boldsymbol{v}_1^T). \tag{40}$$

Note that the authors of Ref. [26] use a different convention for the quantum Fisher information matrix that causes a difference in the prefactor. This approximation is not enough to faithfully estimate the whole quantum Fisher information matrix because it has a maximal rank of 2. But we can average multiple such estimates to get an approximation of the quantum Fisher information matrix with increasing quality.

5 Relation of Classical and Quantum Fisher Information

To clarify the relation between classical and quantum Fisher information, we first return to the notion of monotonicity that we required for the distance measures we look at. Intuitively, we would expect that the associated information measure also "decreases" if a quantum channel is applied to the underlying quantum system.

And indeed, the monotonicity of the distance measure carries over to the associated information matrix. To elucidate how this happens, we will now use the notation of quantum channels and quantum states, because it includes the case of stochastic maps on classical probability distributions. The following reasoning is therefore valid for both the classical and the quantum Fisher information matrix. Recall that the information matrix arises at the second order approximation when we perturb the parameters of a quantum state slightly:

$$d(\rho(\boldsymbol{\theta}), \rho(\boldsymbol{\theta} + \boldsymbol{\delta})) = \frac{1}{2} \boldsymbol{\delta}^T M[\rho(\boldsymbol{\theta})] \boldsymbol{\delta} + O(\|\boldsymbol{\delta}\|^3). \tag{41}$$

The condition of monotonicity implies that the distance measure decreases if we apply some sort of quantum channel $\Phi$, which can represent a variety of different operations:

$$d(\Phi[\rho(\boldsymbol{\theta})], \Phi[\rho(\boldsymbol{\theta} + \boldsymbol{\delta})]) \leq d(\rho(\boldsymbol{\theta}), \rho(\boldsymbol{\theta} + \boldsymbol{\delta})). \tag{42}$$

But this also must hold in the limit $\|\boldsymbol{\delta}\| \to 0$, where we can drop higher order terms and only use the second order approximation of the distance. This means that

$$\frac{1}{2} \boldsymbol{\delta}^T M[\Phi[\rho(\boldsymbol{\theta})]] \boldsymbol{\delta} \leq \frac{1}{2} \boldsymbol{\delta}^T M[\rho(\boldsymbol{\theta})] \boldsymbol{\delta}. \tag{43}$$

We assumed that $\boldsymbol{\delta}$ was a very short vector to derive this inequality, but now we can also rescale it again to arbitrary length. This means that the above inequality holds for any $\boldsymbol{\delta}$ and that it implies the matrix inequality

$$M[\Phi[\rho(\boldsymbol{\theta})]] \leq M[\rho(\boldsymbol{\theta})]. \tag{44}$$

You might be a bit confused, as we are looking at matrices here and not numbers. The matrix inequality $A \geq B$ means that the matrix $A - B$ has only non-negative eigenvalues, a statement that is equivalent to $\boldsymbol{\delta}^T A \boldsymbol{\delta} \geq \boldsymbol{\delta}^T B \boldsymbol{\delta}$ for any vector $\boldsymbol{\delta}$. A more visual explanation of this is given in Fig. 2.

Figure 2: A visual explanation of the matrix inequality $A \geq B$ in the case of $2 \times 2$ matrices. To every positive matrix, we can assign an ellipse by considering its action on the unit sphere, which in the 2D case is the unit circle. The axes of the ellipse are then given by the eigenvectors scaled to the length of the associated eigenvalue. The relation $A \geq B$ means that the ellipse of $B$ lies inside the ellipse of $A$. If the ellipses do not touch, we have the stronger relation $A > B$.

We have shown that the monotonicity of the distance measure means that the associated information matrix also has a monotonicity property, as it can only decrease under quantum operations. This fact helps us to shed light on the relation between the quantum Fisher information and the classical Fisher information. Remember that we already learned that we can also model measurements as quantum channels. Together with the monotonicity of information matrices we just derived, we know that applying a measurement $\mathcal{M}$ can only make the information matrix associated with any monotonic distance smaller:

$$M[\mathcal{M}[\rho(\boldsymbol{\theta})]] \leq M[\rho(\boldsymbol{\theta})]. \tag{45}$$

But the outcome of a measurement will always be a classical probability distribution over the measurement outcomes, ergo $\mathcal{M}[\rho(\boldsymbol{\theta})]$ is a classical probability

distribution. And due to the uniqueness of the classical Fisher information we therefore know that no matter what kind of distance we used to derive $M$, after the measurement it will be a constant multiple of the classical Fisher information matrix associated with the probability distribution after the measurement:

$$M[\mathcal{M}[\rho(\boldsymbol{\theta})]] = \alpha I[\mathcal{M}[\rho(\boldsymbol{\theta})]]. \tag{46}$$

As we are free to rescale the distance measure we use, and therefore also the associated information matrix, we can always make it consistent with the classical Fisher information when evaluated on classical probability distributions. This means that we can choose $\alpha = 1$, which we will also assume in the following. In particular, the relation holds for the quantum and the classical Fisher information, where we have that

$$I[\mathcal{M}[\rho(\boldsymbol{\theta})]] \leq F[\rho(\boldsymbol{\theta})] \quad \text{for all } \mathcal{M}. \tag{47}$$

Let us quickly take a step back and marvel at the feat we just accomplished: We have used the simple requirement of monotonicity of the distance measure to show that any quantum information matrix is an upper bound to the classical Fisher information matrix associated with any probability distribution that results from a measurement of the state. We have initially motivated the derivation of this quantity via a geometric intuition, and it also pops up here: Indeed, a quantum information measure like the quantum Fisher information will measure how much the underlying quantum state $\rho(\boldsymbol{\theta})$ will change if we change the parameters of the state slightly. The relation we just derived tells us now that the change of the underlying quantum state directly gives us an upper bound on how much of this change we can make visible when performing a measurement.

This also gives us a hint on what kinds of applications these two quantities enable. The quantum Fisher information really captures information about the underlying quantum state and therefore also phenomena that are quantum mechanical in nature. But the importance of the classical Fisher information should not be discounted, as we are always forced to perform measurements to extract information about the quantum system, which means that at the end of the day the classical Fisher information will always be the measure that quantifies the objects we can actually observe. This means that both quantities are very important for near-term applications, as the classical Fisher information captures the outputs of our experiments, but the quantum Fisher information can inform us about the quantum phenomena happening and also quantifies the ultimate limits of our approaches.

One might wonder if there always exists a measurement that achieves equality of the classical and the quantum Fisher information matrix. In the single-parameter case this is actually always possible [27], and consequently we can always find a measurement that achieves the quantum Fisher information for every individual parameter $\theta_i$. The optimal measurement can be found by computing the SLD operator $L_i$ that realizes the derivative of the underlying quantum state with respect to the parameter $\theta_i$ as in Eq. (29) [7] and can depend on the actual value of $\boldsymbol{\theta}$ [28]. But for multiple parameters we cannot necessarily find a measurement that achieves equality of classical and quantum Fisher information matrix, as the optimal measurements for the individual parameters need not be compatible with each other. A detailed discussion of the question of optimal measurements is found in Refs. [29, 30]. More detailed studies quantifying the (in)compatibility of different measurements can be found in Refs. [31–33].

6 The Role of Noise

As is already evident from the name noisy intermediate-scale quantum devices, noise is one of the principal impediments and a defining factor for NISQ devices. It is therefore imperative for us to understand how noise influences the classical and quantum Fisher information. We already got to know the concept of a quantum channel and learned that it can also be used to model any noisy quantum evolution. But up to now, we have only treated the quantum Fisher information for pure quantum states, resting on the particularly simple formula for the fidelity for pure states. If we wish to extend it to mixed quantum states, we have to find a quantity that reproduces the known fidelity formula for pure states but also stays valid for mixed quantum states. This generalization is given by the Bures fidelity, also known as Uhlmann's fidelity (see footnote 3),

$$f_B(\rho, \sigma) = \operatorname{Tr}\{ (\rho^{1/2} \sigma \rho^{1/2})^{1/2} \}^2. \tag{48}$$

The notation $\rho^{1/2}$ denotes the unique positive matrix square root of $\rho$, which is the only positive semidefinite matrix that fulfills $(\rho^{1/2})^2 = \rho$. If we look at the eigendecomposition of $\rho$,

$$\rho = \sum_i \lambda_i |\lambda_i\rangle\langle\lambda_i|, \tag{49}$$

we can easily see that it is given by

$$\rho^{1/2} = \sum_i \sqrt{\lambda_i}\, |\lambda_i\rangle\langle\lambda_i|. \tag{50}$$

Constructions of the form $\rho^{1/2} \sigma \rho^{1/2}$ often appear in the context of quantum information theory because this is a way to multiply $\rho$ and $\sigma$ that, contrary to just using $\rho\sigma$, yields a Hermitian matrix.

Footnote 3: Again, there exist different conventions on whether the square should be included or not.
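For small systems whose density matrices are known explicitly, Eqs. (48) to (50) can be evaluated directly on a classical computer. The sketch below is one way to do so with NumPy; the function names `sqrtm_psd` and `bures_fidelity` and the example states are assumptions made for this illustration, and the approach is only meant for matrices small enough to diagonalize.

```python
import numpy as np

def sqrtm_psd(rho):
    """Positive square root via the eigendecomposition, Eqs. (49) and (50)."""
    vals, vecs = np.linalg.eigh(rho)
    vals = np.clip(vals, 0.0, None)  # remove tiny negative round-off values
    return (vecs * np.sqrt(vals)) @ vecs.conj().T

def bures_fidelity(rho, sigma):
    """f_B = Tr{(rho^(1/2) sigma rho^(1/2))^(1/2)}^2, Eq. (48)."""
    s = sqrtm_psd(rho)
    inner = s @ sigma @ s
    inner = (inner + inner.conj().T) / 2  # enforce Hermiticity against round-off
    return np.trace(sqrtm_psd(inner)).real ** 2

# Assumed example states: |0><0|, |+><+| and a diagonal mixed state.
zero = np.array([[1, 0], [0, 0]], dtype=complex)
plus = np.array([[0.5, 0.5], [0.5, 0.5]], dtype=complex)
mixed = np.array([[0.75, 0], [0, 0.25]], dtype=complex)

print(bures_fidelity(zero, plus))    # pure states: reduces to |<0|+>|^2
print(bures_fidelity(mixed, mixed))  # identical states

# The plain product rho sigma is in general not Hermitian,
# while rho^(1/2) sigma rho^(1/2) is.
prod = mixed @ plus
sandwich = sqrtm_psd(mixed) @ plus @ sqrtm_psd(mixed)
print(np.allclose(prod, prod.conj().T), np.allclose(sandwich, sandwich.conj().T))
```

For two pure states the function reproduces the overlap $|\langle \psi | \phi \rangle|^2$, which is the consistency requirement stated above.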

As in the pure case, we need to transform the fidelity to get the proper distance measure associated with it, namely the Bures distance

$$d_B(\rho, \sigma) = 2 - 2 f_B(\rho, \sigma). \tag{51}$$

The prefactor 2 ensures that the associated information matrix is consistent with the classical Fisher information matrix, as in Sec. 4.

We won't reproduce the whole derivation of the quantum Fisher information matrix for the Bures distance, as it is quite laborious. The interested reader can refer to Ref. [34] for a detailed derivation. The resulting formula is:

$$F_{ij} = \sum_{\substack{k,l \\ \lambda_k + \lambda_l \neq 0}} \frac{2 \operatorname{Re}(\langle \lambda_k | \partial_i \rho | \lambda_l \rangle \langle \lambda_l | \partial_j \rho | \lambda_k \rangle)}{\lambda_k + \lambda_l} \tag{52}$$

$$= \sum_{\substack{k \\ \lambda_k \neq 0}} \left[ \frac{(\partial_i \lambda_k)(\partial_j \lambda_k)}{\lambda_k} + 4 \lambda_k \operatorname{Re}(\langle \partial_i \lambda_k | \partial_j \lambda_k \rangle) \right] - \sum_{\substack{k,l \\ \lambda_k, \lambda_l \neq 0}} \frac{8 \lambda_k \lambda_l}{\lambda_k + \lambda_l} \operatorname{Re}(\langle \partial_i \lambda_k | \lambda_l \rangle \langle \lambda_l | \partial_j \lambda_k \rangle). \tag{53}$$

Let us analyze this behemoth. First, we see that the equations contain a division by $\lambda_k + \lambda_l$. As $\rho$ is a positive semidefinite matrix, the eigenvalues $\lambda_k$ cannot be negative, which means that the case excluded in the sum can only occur if both $\lambda_k$ and $\lambda_l$ are zero. It is an advantage of the second equation that the sums now only run over $\lambda_k$ and $\lambda_l$ that are non-zero.

Now let's go over the two parts of the second equation. The first term,

$$\sum_{\substack{k \\ \lambda_k \neq 0}} \frac{(\partial_i \lambda_k)(\partial_j \lambda_k)}{\lambda_k}, \tag{54}$$

looks familiar. Recall that in the eigenbasis, $\rho$ is diagonal and therefore represents a classical probability distribution over the basis states with probabilities $\lambda_k$. This means that the term above is the "classical" part of the quantum Fisher information and quantifies how the eigenvalues themselves change. Note that here we only sum over $\lambda_k \neq 0$, which means that we effectively exclude the possibility of a $\lambda_k$ being zero but $\partial_i \lambda_k$ being nonzero. In such a case, the rank of the density matrix would change, which causes the quantum Fisher information to be undefined [17, 35]. This, however, is more of a technicality, as there always exists a full-rank state arbitrarily close to any state we could be interested in.

The next term now captures how much the eigenstates themselves change under the parameters $\theta_i$ and $\theta_j$:

$$\sum_{\substack{k \\ \lambda_k \neq 0}} 4 \lambda_k \operatorname{Re}(\langle \partial_i \lambda_k | \partial_j \lambda_k \rangle) - \sum_{\substack{k,l \\ \lambda_k, \lambda_l \neq 0}} \frac{8 \lambda_k \lambda_l}{\lambda_k + \lambda_l} \operatorname{Re}(\langle \partial_i \lambda_k | \lambda_l \rangle \langle \lambda_l | \partial_j \lambda_k \rangle). \tag{55}$$

This constitutes the quantum part of the quantum Fisher information. The changing of the eigenvectors is a non-classical phenomenon, as for classical states they are always fixed and identified with the different measurement outcomes.

Calculation. We have seen that the noisy quantum Fisher information is much more complicated than the quantum Fisher information for pure states. To evaluate it exactly one usually has to perform a full tomography of the underlying state, an operation that is too costly for near-term applications because the number of samples is exponential in the number of qubits [36]. For quantum states that are nearly pure, approximations can be used, but they also break down at certain noise levels [37, 38].

Recently, variational approaches for the computation of the Bures fidelity $f_B$ have been suggested. They can be used to compute the quantum Fisher information for noisy states in conjunction with the perturbation techniques described in Sec. 4. Ref. [39] proposes to alleviate the resource requirements for tomography through the use of a variational quantum autoencoder. A variational autoencoder compresses an $N$-qubit input state into $K$ qubits [40]. The authors of Ref. [39] show that the compressed state of a perfectly trained autoencoder has the same spectrum as the original state. They propose to estimate the Bures fidelity between two states $\rho$ and $\sigma$ by first performing tomography of the compressed state of $\rho$ and then running separate SWAP tests that involve the eigenstates of $\rho$ and the state $\sigma$. The fidelity is then computed in classical post-processing, and guarantees on the estimation precision are given based on how well the autoencoder was trained. The authors provide numerical evidence that the proposed strategy works well for low-rank states if the variational circuit for the autoencoder is suitably chosen.

Ref. [41], on the other hand, proposed to exploit Uhlmann's theorem, which states that the Bures fidelity of two states $\rho$ and $\sigma$ is equal to the maximum fidelity over all possible purifications $|\psi_\rho\rangle$ and $|\psi_\sigma\rangle$:

$$f_B(\rho, \sigma) = \max_{|\psi_\rho\rangle, |\psi_\sigma\rangle} f(|\psi_\rho\rangle, |\psi_\sigma\rangle). \tag{56}$$

The authors suggest using variational circuits to learn purifications of the input states $\rho$ and $\sigma$ and then performing another optimization to extract the maximum in Eq. (56). Another approach for the calculation of the Bures fidelity was put forward in Ref. [42]. It is based on a different subroutine, purity minimization, that can be used to calculate the matrix square roots in Eq. (48). The two latter approaches require multiple copies of the input states and therefore incur an overhead that is large for NISQ applications. They furthermore rely on the success of variational subroutines, which is closely tied to the successful selection of a circuit ansatz. These approaches are therefore only
