Solving Constrained Trajectory Planning Problems Using Biased Particle Swarm Optimization


Runqi Chai, Member, IEEE, Antonios Tsourdos, Al Savvaris, Senchun Chai, Senior Member, IEEE, and Yuanqing Xia, Senior Member, IEEE

R. Chai, A. Tsourdos and A. Savvaris are with the School of Aerospace, Transport and Manufacturing, Cranfield University, UK, e-mail: (r.chai@cranfield.ac.uk), (a.tsourdos@cranfield.ac.uk), and (a.savvaris@cranfield.ac.uk).
S. Chai and Y. Xia are with the School of Automation, Beijing Institute of Technology, Beijing, China, e-mail: (chaisc97@163.com), (xia_yuanqing@bit.edu.cn).

Abstract—Constrained trajectory optimization has been a critical component in the development of advanced guidance and control systems. An improperly planned reference trajectory can be a main cause of poor online control performance. Due to the existence of various mission-related constraints, the feasible solution space of a trajectory optimization model may be restricted to a relatively narrow corridor, thereby easily resulting in local minimum or infeasible solution detection. In this work, we are interested in making an attempt to handle the constrained trajectory design problem using a biased particle swarm optimization approach. The proposed approach reformulates the original problem to an unconstrained multi-criterion version by introducing an additional normalized objective reflecting the total amount of constraint violation. Besides, to enhance the progress during the evolutionary process, the algorithm is equipped with a local exploration operation, a novel ε-bias selection method, and an evolution restart strategy. Numerical simulation experiments, obtained from a constrained atmospheric entry trajectory optimization example, are provided to verify the effectiveness of the proposed optimization strategy. Main advantages associated with the proposed method are also highlighted by executing a number of comparative case studies.

Index Terms—Trajectory optimization, particle swarm optimization, local exploration, bias selection, restart strategy.

I. INTRODUCTION

CONSTRAINED trajectory planning problems widely exist in the aerospace industry and considerable attention has been given to researching advanced trajectory optimization algorithms during the last decade. Although this step is often performed offline in practical applications, an improperly planned reference trajectory may significantly damage the online control performance or even result in a failure of the mission. Therefore, to gain enhanced guidance and control performance, a proper design of the optimal maneuver trajectory is highly demanded. It should be noted that approaches to address this kind of problem mainly fall into two categories: indirect methods and direct methods [1], [2]. An indirect approach uses an “optimize then discretize” strategy, where first-order optimality conditions for the differential algebraic equations (DAE)-constrained systems are directly derived and solved. Many important contributions have been made on applying this type of method [3]–[5]. For example, Yang and Hexi [3] applied an indirect method in order to produce the energy-optimal trajectory for an irregular asteroid landing mission. Pontani and Conway developed an indirect heuristic approach in [4] and successfully implemented this method to address a low-thrust orbital transfer problem. Furthermore, in their later work [5], this indirect approach was extended to solve more complex trajectory design problems with enhanced numerical accuracy.

While the results from an indirect method can be treated as the theoretically optimal solutions, the application of indirect methods is usually limited to relatively low-dimensional problems and it tends to be ineffective with respect to problems containing complex path constraints. On the other hand, a direct approach applies a “discretize then optimize” strategy, where state and/or control variables are firstly discretized such that the original problem is reformulated to a nonlinear programming problem (NLP) or more precisely, a static parameter optimization problem containing a finite number of decision variables [6], [7]. A primary advantage of applying this kind of method is that it can be easily combined with well-developed parameter optimization solvers in order to address the resulting NLP. In addition, different types of system constraints are represented in a relatively straightforward manner. As a result, we pay more attention to the implementation of “discretize then optimize” approaches.

In recent years, extensive applications of direct methods can be found in the context of flight vehicle trajectory optimization and robotic motion planning problems in difficult environments [8]–[10]. For instance, a direct collocation method was adopted in [8] in order to calculate the optimal flight trajectory for an entry vehicle during the Mars entry phase. Path constraints were imposed such that the vehicle can fly along a restricted corridor. The authors in [9] implemented a direct shooting method to plan the motion of a manipulator. In their work, both the higher order dynamics and the contact/friction force constraints were taken into account, thereby making the optimization model much more complex. In addition, a direct trajectory optimization framework for general manipulation platforms was established in [10], wherein multiple mission-related constraints such as the environmental constraints, collision avoidance constraints, and some geometric constraints were involved in the problem formulation and considered during the optimization.

Apart from direct methods, the design and test of heuristic methods such as particle swarm optimization (PSO), genetic algorithm (GA) and differential evolution (DE) have also received significant attention for solving the constrained trajectory planning problems. The main reason for using these particular optimization algorithms is that the numerical gradient-dependent optimization algorithms such as the interior-point method (IPM) [11], sequential quadratic programming (SQP) [12], [13], and other modified versions [14], [15] only guarantee convergence toward local optima, whereas heuristic methods are more likely to locate the globally optimal result. This advantage has been highlighted by a number of relevant works and because of this, various heuristic approaches have been proposed to address constrained space vehicle trajectory optimization problems [16]–[19]. For example, an improved PSO algorithm was reported in [17] to solve a reusable launch vehicle reentry trajectory design problem. In this approach, a modified mutation mechanism was designed to facilitate the evolutionary process. A parallel optimization framework incorporating PSO, GA, and DE was suggested by the authors of [18], wherein an Earth-to-Mars interplanetary problem, together with a multiple-impulse rendezvous mission, was addressed. Although the two problems were successfully addressed by this hybrid method, simulation results also revealed that the performance of the proposed method tends to be problem dependent. Furthermore, in [19], by defining the number of switches as the optimization parameters, a segmented PSO method was advocated to explore the optimal control sequence with a bang-bang structure for a time-optimal slew maneuver task. However, if a maneuver planning problem contains singular arcs or the optimal control sequence does not hold a bang-bang structure, this PSO-based trajectory planner may not be able to generate promising results or may even fail to find feasible solutions.

Commonly, each particle among the swarm represents a candidate solution to an optimization problem. Based on the reported experimental results, most of the researchers or engineers concluded that with a proper selection of algorithm parameters, the PSO has the capability of getting rid of local minima for different trajectory design problems [16], [20]. This can be attributed to the fact that in the swarm update formula, both the experience of the group of particles (e.g., the so-called social component) and the experience of each individual (e.g., the so-called cognitive component) are taken into account. Moreover, compared with other evolutionary optimization algorithms such as the GA and DE, the PSO can benefit from a reduced number of function evaluations, thus making it more efficient. This key finding was validated by the investigations presented in [21] and [22]. Benefiting from the key features discussed above, the implementation of PSO and its enhanced versions to various engineering optimization problems can be appreciated and encouraged.

When applying bio-inspired optimization methods to handle trajectory planning problems, the constraint handling strategy usually plays a key role and it can significantly affect the performance of the optimization process. It is worth noting that in most PSO-based trajectory optimization solvers, a penalty function (PF) approach is commonly applied to deal with various parameter constraints adhered to the optimization model [17], [23]–[25]. That is, an additional term (e.g., the so-called penalty term) reflecting the constraint violation is augmented in the fitness function, and the particle with smaller fitness value will be considered as a better individual compared to the others among the current swarm. This method is fairly straightforward to understand and easy to implement. However, difficulties may occur in balancing the emphasis between the mission objective and penalty terms.

In order to avoid the assignment of penalty functions and additional penalty factors, we apply a locally enhanced multi-objective PSO (MOPSO) method to tackle the constrained trajectory planning problems. This method is performed by firstly defining the total amount of constraint violation as an additional objective, thereby reformulating the constrained optimization problem as an unconstrained multi-criterion version. Then the classical nondominated sorting process is used to rank all the candidate solutions. Note that the use of a PSO algorithm in constrained problems using a multi-objective approach has been reported in some important works [26], [27] and applications of this strategy to constrained engineering optimization problems have attracted significant attention (e.g., a detailed review can be found in [28]). However, most reported works addressed the problem by purely relying on the Pareto dominance. Based on our previous experiments [23], [24], it was found that a direct implementation of MOPSO and Pareto dominance rules to the transformed multi-objective trajectory optimization problem may lack search bias in terms of the mission constraints. Therefore, a biased search toward the feasible region should be introduced, otherwise the algorithm performance might be degraded significantly.

The main contribution of this paper lies in the following four aspects:

1) We present an attempt to address the insufficient bias issue for the standard MOPSO algorithm by introducing a constraint violation (CV)-based bias selection strategy. Then, this strategy is extended to a more general form, named the ε-bias selection strategy, such that it can become more flexible to optimize the objective function and reduce the solution infeasibility at the same time.
2) An evolution restart strategy is designed and embedded in the biased MOPSO algorithm such that it can acquire an enhanced capability to avoid getting stuck in local infeasible regions.
3) The proposed approach is applied to solve a constrained atmospheric entry trajectory design problem which is similar to the one investigated in [23] except that more constraints are modeled and included in the optimization model. Case studies, along with detailed analysis, are provided to emphasize the importance of the proposed bias selection process as well as the evolution restart strategy.
4) The proposed method is compared to other evolutionary algorithms and an off-the-shelf numerical optimal control solver (named CASADI). Comparative results not only characterize the key feature but also highlight the main advantage of applying the designed approach.

To the best of the authors’ knowledge, the extended bias selection method and the evolution restart strategy are firstly combined in the locally enhanced-MOPSO in this paper for addressing the constrained reentry trajectory optimization

problem.

The rest of this article is organized as follows. In Section II, the locally enhanced-MOPSO, along with the designed ε-bias selection method and the evolution restart strategy, is introduced. Section III presents the optimal control formulation of the constrained atmospheric entry trajectory design problem in detail. Numerical simulation experiments as well as a number of comparative studies are demonstrated in Section IV. Finally, this article is concluded in Section V.

II. BIASED PARTICLE SWARM OPTIMIZATION APPROACH

A. Constrained Optimal Control Problem

In a constrained optimal control problem, a dynamical system is commonly adhered, which has the form of

$$\dot{x}(t) = f(x(t), u(t)) \tag{1}$$

where $x(t) \in \mathbb{R}^{n_x}$ and $u(t) \in \mathbb{R}^{n_u}$ represent, respectively, the system state and control variables defined on the time interval $t \in [t_0, t_f]$. $n_x$ and $n_u$ are the dimensions of the state and control. Variable path constraints and boundary conditions are frequently considered for a number of practical missions. They can be expressed by:

$$\begin{aligned} g(x(t), u(t)) &\le 0 \\ x(t_0) &= x_0 \\ x(t_f) &= x_f \end{aligned} \tag{2}$$

The objective function is used to evaluate the system performance for a specific mission profile. A general form of it can be written as:

$$\min_{u(t)} J_1 = \int_{t_0}^{t_f} L(x(t), u(t))\,dt + \Phi(x(t_f), t_f) \tag{3}$$

where $L(x(t), u(t))$ and $\Phi(x(t_f), t_f)$ are, respectively, the process and terminal performance indicators. As a result, the overall constrained optimal control problem can be modeled as:

$$\begin{aligned} \min_{u(t)} \quad & J_1 = \int_{t_0}^{t_f} L(x(t), u(t))\,dt + \Phi(x(t_f), t_f) \\ \text{s.t.} \quad & \forall t \in [t_0, t_f] \\ & \dot{x}(t) = f(x(t), u(t)) \\ & g(x(t), u(t)) \le 0 \\ & x(t_0) = x_0 \\ & x(t_f) = x_f \end{aligned} \tag{4}$$

B. Unconstrained Multi-Objective Optimal Control Problem

A direct transcription method is adopted to solve the constrained optimal control problem given by Eq.(4). That is, the control variable is parameterized over a finite set of temporal nodes $\{t_i\}_{i=0}^{N_k-1}$, in which $N_k$ stands for the size of the temporal set. The discretized control sequence is then denoted as $u = (u_0, ..., u_{N_k-1})$. After the control discretization and numerical integration for ordinary differential equations, the static version of the problem can be written as:

$$\begin{aligned} \min_{u(t_i)} \quad & J_1 = \sum_{i=0}^{N_k-1} L(x(t_i), u(t_i)) + \Phi(x(t_{N_k}), u(t_{N_k})) \\ \text{s.t.} \quad & \forall t_i,\ i \in \{0, 1, ..., N_k\} \\ & x(t_{i+1}) = x(t_i) + \Delta h \sum_{j=1}^{s} b_j f(x_j, u_{ij}) \\ & x_j = x(t_i) + \Delta h \sum_{m=1}^{s} a_{jm} f(x_m, u_{im}) \\ & g(x(t_i), u(t_i)) \le 0 \\ & x(t_{N_k}) = x_f \end{aligned} \tag{5}$$

where $x_m$ and $u_{im}$ stand for the intermediate state and control values defined on $[t_i, t_{i+1}]$. $\Delta h$ is the step length, while $b_j$ and $a_{jm}$ are discretization coefficients determined by the applied numerical integration method. Taking the fourth order Runge-Kutta method as an example, $(b_1, b_2, b_3, b_4)$ can be set to $(\frac{1}{6}, \frac{1}{3}, \frac{1}{3}, \frac{1}{6})$, whereas the non-zero elements of $a_{jm}$ can be assigned as $(a_{21}, a_{32}, a_{43}) = (\frac{1}{2}, \frac{1}{2}, 1)$, respectively.

Instead of directly addressing the constrained optimization problem (5), a slight modification of the problem formulation may also be effective. For example, the standard interior-point method (as well as its enhanced versions) applies the barrier function and solves the scalarized version of the problem. In this subsection, we introduce an additional normalized objective function so as to transform problem (5) to an unconstrained multi-objective version, which will then be optimized by the MOPSO algorithm introduced in the following subsections.

If the optimization problem contains $m$ inequality constraints and $n$ terminal constraints, the constraint violation value of a candidate solution $(x, u)$ for the $i$-th inequality constraint and $j$-th terminal constraint can be written as:

$$\mu_g^i = \begin{cases} 0, & g^i(x, u) \le 0; \\ \dfrac{g^i(x, u)}{\bar{g}^i}, & 0 \le g^i(x, u) \le \bar{g}^i; \\ 1, & g^i(x, u) \ge \bar{g}^i. \end{cases} \tag{6}$$

$$\mu_{x_f}^j = \begin{cases} 1, & x^j(t_{N_k}) \ge \bar{x}_f^j; \\ \dfrac{x^j(t_{N_k}) - x_f^j}{\bar{x}_f^j - x_f^j}, & x_f^j \le x^j(t_{N_k}) \le \bar{x}_f^j; \\ 0, & x^j(t_{N_k}) = x_f^j; \\ \dfrac{x_f^j - x^j(t_{N_k})}{x_f^j - \underline{x}_f^j}, & \underline{x}_f^j \le x^j(t_{N_k}) \le x_f^j; \\ 1, & \underline{x}_f^j \ge x^j(t_{N_k}). \end{cases} \tag{7}$$

In Eq.(6) and Eq.(7), $\bar{g}^i = \max(g^i(x, u))$ is the maximum violation value of the $i$-th inequality constraint in the current searching space. The terms $\bar{x}_f^j$ and $\underline{x}_f^j$ can be defined analogously. $x_f^j$ stands for the $j$-th targeted terminal state value. For simplicity, constraint functions defined in Eq.(6) and Eq.(7) assume scalar values of the constraints. If the constraint functions of a candidate solution become a vector, to execute the division operation, each element in the vector should be divided by $\bar{g}^i$, $\bar{x}_f^j$ or $\underline{x}_f^j$, correspondingly.

Note that $\bar{g}^i$, $\bar{x}_f^j$ and $\underline{x}_f^j$ are mainly applied to normalize each constraint violation. From the definition of Eq.(6) and

Eq.(7), it is obvious that the values of $\mu_g^i, \mu_{x_f}^j \in [0, 1]$ are able to reflect the magnitude of constraint violation for the constraints given by (2). As a result, based on Eq.(6) and Eq.(7), the normalized constraint violation functions can be added together, thereby resulting in a scalar constraint violation $J_2 \in [0, 1]$ which has the form of:

$$J_2 = \frac{1}{m} \sum_{i=1}^{m} \mu_g^i + \frac{1}{n} \sum_{j=1}^{n} \mu_{x_f}^j \tag{8}$$

The scalar constraint violation is considered as a separate objective function to be minimized.

By minimizing the objective functions given by Eq.(3) and Eq.(8), the original problem formulation has been transformed to an unconstrained bi-objective version. A compact form of this unconstrained bi-objective optimal control model is written as:

$$\begin{aligned} \min_{u(t)} \quad & J_1 = \int_{t_0}^{t_f} L(x(t), u(t))\,dt + \Phi(x(t_f), t_f) \\ \min_{u(t)} \quad & J_2 = \frac{1}{m} \sum_{i=1}^{m} \mu_g^i + \frac{1}{n} \sum_{j=1}^{n} \mu_{x_f}^j \end{aligned} \tag{9}$$

C. MOPSO Algorithm

To find a control sequence such that the two mission objectives considered in Eq.(9) are optimized, certain parameter optimization algorithms should be adopted. In this paper, we focus on the design and test of a modified MOPSO algorithm. MOPSO is a typical bio-inspired multi-objective optimization algorithm [29]. For the considered problem, each particle among the swarm represents a potential control sequence consisting of a position vector z and a velocity vector v:

$$\begin{aligned} z(s) &= [u_1(s), u_2(s), ..., u_{N_j}(s)] \\ v(s) &= [v_1(s), v_2(s), ..., v_{N_j}(s)] \end{aligned} \tag{10}$$

In Eq.(10), $N_j$ and $s = 1, 2, ..., N_s$ are, respectively, the size of the swarm and the index of the current iteration. For convenience, we denote $z_j$, $j = 1, 2, ..., N_j$ as the $j$-th component of $z(s)$ in the rest of the paper. During the optimization iteration, the particle explores the searching area by introducing a recurrence relation:

$$z(s+1) = z(s) + v(s+1) \tag{11}$$

where $v(s+1)$ is given by:

$$\begin{aligned} v(s+1) = {} & \omega \cdot v(s) \\ & + c_1 r_1 \cdot (p(s) - z(s)) \\ & + c_2 r_2 \cdot (g(s) - z(s)) \end{aligned} \tag{12}$$

Variables appearing in Eq.(12) are defined below:

$\omega$: The inertia weight factor;
$p(s)$: The personal best position in the $s$-th iteration;
$g(s)$: The global best position in the $s$-th iteration;
$c_1, c_2$: Factors reflecting strength of attraction;
$r_1, r_2$: Two random constants on (0, 1].

In terms of the personal best position of the $j$-th particle $p_j(s)$, this vector should be updated via

$$p_j(s) = \begin{cases} \text{rand}\{p_j(s-1), z_j(s)\} & \text{if } z_j(s) \nprec\succ p_j(s-1) \\ p_j(s-1) & \text{if } z_j(s) \prec p_j(s-1) \\ z_j(s) & \text{if } z_j(s) \succ p_j(s-1) \end{cases} \tag{13}$$

where $p_j(s-1)$ is the personal best position of the $j$-th particle at the $(s-1)$-th iteration. Here, the notation $\prec$ is the dominance relation determined by the concept of Pareto optimality, and $z_1 \prec z_2$ means $z_1$ is dominated by $z_2$. In Eq.(13), the character $\nprec\succ$ denotes the mutually nondominated relation, that is, neither vector dominates the other. In this case, the algorithm randomly selects one of these two vectors.

The nondominated solutions are then collected to form an external archive $A(s) = [z_1(s), z_2(s), ..., z_{N_a}(s)]$, where $|A(s)| = N_a$ stands for the number of nondominated solutions in the current archive and this number will be changed during the evolutionary process. Note that $N_a \le N_A$, in which $N_A$ represents the maximum size of the archive specified by the designer. To update $A(s)$, the following algorithm (e.g., Algorithm 1) is performed. Note that in Algorithm 1, $|\cdot|$ stands for the size of a set. After performing $A(s) = A(s) \cup A(s-1)$ in Algorithm 1, if the size of $A(s)$ is greater than $N_A$, then we delete the most infeasible one or the less optimal one according to the value of $J_1$ or $J_2$ until the size reaches $N_A$.

Algorithm 1 Archive update process
Input: $A(s-1)$ and $p(s)$;
Output: $A(s)$;
/*Main update process*/
for $j := 1, 2, ..., |p(s)|$ do
    for $m := 1, 2, ..., |A(s-1)|$ do
        if $z_m \prec p_j$ then
            Remove $z_m$ from $A(s-1)$
            Set an indicator flag to 1
        else
            Break
        end if
    end for
    if the indicator flag is not 1 then  /*No individual in $A(s-1)$ dominates $p_j$*/
        Add $p_j$ to $A(s)$
    end if
end for
Perform $A(s) = A(s) \cup A(s-1)$
Output $A(s)$
/*End archive update process*/

D. ε-Bias Selection

As investigated in the previous work [23], [24], the performance of applying a heuristic algorithm to trajectory optimization problems might be significantly degraded if certain actions are not taken to put emphasis on the searching direction toward the feasible region. Consequently, we design a bias selection strategy, named ε-bias selection, to further update the external archive.

Prior to introducing the ε-bias selection strategy in detail, a reduced version of this strategy is firstly presented to illustrate the concept of bias selection. Subsequently, this reduced version will be extended to a more general one.
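As a point of reference before the bias rules are introduced, the plain (unbiased) building blocks described in Section II.C can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the function names, the `objectives` callable returning the pair (J1, J2), the default values of ω, c1 and c2, and the archive truncation rule (sort by J2, then J1) are all assumptions made here for illustration.

```python
import random


def dominates(fa, fb):
    """True if objective vector fa Pareto-dominates fb (both minimized)."""
    no_worse = all(a <= b for a, b in zip(fa, fb))
    better = any(a < b for a, b in zip(fa, fb))
    return no_worse and better


def update_personal_best(p_prev, z_new, objectives, rng=random):
    """Eq.(13): keep the dominating vector, choose randomly on a tie."""
    f_prev, f_new = objectives(p_prev), objectives(z_new)
    if dominates(f_new, f_prev):
        return z_new
    if dominates(f_prev, f_new):
        return p_prev
    return rng.choice([p_prev, z_new])  # mutually nondominated case


def pso_step(z, v, p_best, g_best, omega=0.7, c1=1.5, c2=1.5, rng=random):
    """Eqs.(11)-(12): one velocity and position update of a single particle."""
    # 1 - random() lies in (0, 1], matching the interval stated in the text.
    r1, r2 = 1.0 - rng.random(), 1.0 - rng.random()
    v_next = [omega * vi + c1 * r1 * (pi - zi) + c2 * r2 * (gi - zi)
              for vi, zi, pi, gi in zip(v, z, p_best, g_best)]
    z_next = [zi + vi for zi, vi in zip(z, v_next)]
    return z_next, v_next


def update_archive(archive, candidates, objectives, max_size):
    """Merge old archive and new candidates, keep nondominated vectors only.

    Assumed truncation rule: if the archive exceeds max_size, the entries
    with the largest J2 (then largest J1) are dropped first.
    """
    pool = archive + candidates
    scores = [objectives(z) for z in pool]
    kept = [z for z, fz in zip(pool, scores)
            if not any(dominates(fo, fz) for fo in scores)]
    kept.sort(key=lambda z: (objectives(z)[1], objectives(z)[0]))
    return kept[:max_size]
```

In this sketch a particle is a plain list of control values, and `objectives(z)` would wrap the numerical integration of the dynamics and the evaluation of Eq.(9); any such wrapper is left to the reader.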

Let us consider two candidate particles z1 and z2 among the reported results, it was verified that such a local update

the set A( ). Apparently, compared to 1 , the total degree of strategy has the capability of improving the quality of the final

constraint violation 2 has a higher priority and should be solution. Therefore, we introduce this approach to update the

biased. If we denote the value of 1 and 2 for z1 and z2 as elements in the archive, thus making more progresses during

1 (z1 ), 1 (z2 ), 2 (z1 ), and 2 (z2 ), then a constraint violation the iteration.

(CV)-based bias selection strategy can be designed. That is, Let us denote the directional derivative of the two objec-

the particle z1 is considered superior with respect to z2 if and tives along em as

only if the following CV-dominance conditions are triggered: {︂

1 (zm + ∆ · em ) − 1 (zm )

}︂

1) ( 1 (z1 ) < 1 (z2 )) ∧ ( 2 (z1 ) = 2 (z2 ) = 0); ∇em 1 (zm ) = lim

∆→0 {︂ ∆ }︂ (15)

2) 0 < 2 (z1 ) < 2 (z2 ); 2 (zm + ∆ · em ) − 2 (zm )

3) ( 2 (z1 ) = 0) ∧ ( 2 (z2 ) > 0). ∇em 2 (zm ) = lim

∆→0 ∆

The CV-based selection rules suggest that the comparison

between particles should be made strictly according to the in which = 1, ..., a , and zm ∈ A( ). ∆ stands for the step

biased objective (e.g., 2 ). In this way, the nondominated length. It was shown in [31] that the above two directional

feasible candidate can always be preserved until a more derivatives can be further written as:

optimal candidate is obtained. In the following, we extend ∇em 1 (zm ) = (∇ 1 (zm ))T · em

(16)

the CV-based bias selection strategy to a more general -bias ∇em 2 (zm ) = (∇ 2 (zm ))T · em

selection method. Specifically, in this strategy, the particle z1

In Eq.(16), ∇ (zm ) stands for the gradient of with respect

is considered superior to another candidate z2 if the following

to zm . A direction vector em which descends both 1 and 2

-dominance conditions are triggered:

can be obtained via

1) (z2 ≺ z1 ) ∧ ( 2 (z1 ) ≤ ) ∧ ( 2 (z2 ) ≤ ); (︁ )︁

∇J1 (zm ) ∇J2 (zm )

2) < 2 (z1 ) < 2 (z2 ); em = − 1 ‖∇J 1 (zm )‖

+ 2 ‖∇J2 (zm )‖ (17)

3) ( 2 (z1 ) ≤ ) ∧ ( 2 (z2 ) > ).

where the two weight coefficients hold 1 + 2 = 1, and 1 <

According to Eq.(9), it is obvious that 2 ∈ [0, 1]. If = 0, 2 . Subsequently, the elements among the current archive are

then the -dominance conditions reduce to the CV-dominance updated via

conditions. On the contrary, if = 1, then the -dominance ẑm = zm + ∆ · em (18)

conditions are equivalent to the standard Pareto-dominance

rules ≺ (no bias case) which are widely applied in the multi- It is important to remark that by viewing the definition of

objective approaches (see e.g., [26] and [27]). The value of g and xf , it is obvious that the resulting objective function

∈ [0, 1] can be viewed as a balancing parameter able to 2 may not be differentiable at some points. Hence, we

adjust the degree between these two extreme cases. Here we replace their equations by a piecewise smooth form in practical

present a simple adaptive formula in order to set : applications. More precisely, the repair of g is achieved by

Eq.(19), where and are positive constants. Note that the

= 2max − 2min (14) repair of xf (e.g., ( xf , , )) can be obtained analogically.

where 2max and 2minrepresent, respectively, the maximum In this study, the gradient update process is performed in every

and minimum 2 values in the archive. At the beginning of generation.

the evolution, when all the particles are relatively far from the

feasible border, tends to be small according to Eq.(14) and F. Evolution Restart Strategy

more emphasis/attention can be paid to the constraint violation. For some practical constrained optimization problems,

On the other hand, when some of the particles are close to complex constraints might be involved in the problem formu-

the feasible border or they are already in the feasible region, lation. Due to the strong nonconvexity or nonlinearity of these

tends to be larger such that 1 and 2 can be considered at the constraints, the feasible searching space can be significantly

same time. Compared to the CV-based bias selection strategy, restricted and the PSO algorithm is likely to stagnate in one

the extended -bias selection strategy offers more flexibility to of the local infeasible regions. In order to tackle this problem,

simultaneously optimize the objective function and reduce the we propose an evolution restart strategy.

solution infeasibility. Note that the -bias selection strategy The key component of this restart strategy is to determine

will be applied to update the external archive A( ) at the end whether the current archive has already got stuck in an

of each iteration. infeasible region. Actually, this can be reflected by analyzing

2 value for all the particles. If the difference of 2 value

E. Local Exploration between particles is small, then the current swarm is highly

Early works on developing MOPSO suggested that this likely to converge to an infeasible region. More precisely, the

algorithm has a strong global exploration ability [26], [28]. To following two conditions can be applied as an indicator of

also emphasize the local exploration of the searching process, getting stuck in infeasible regions:

a gradient-assisted operation can be introduced. It is worth 1) ∀zm ∈ A( ), 2 (zm ) ̸= 0,

noting that the combination of an evolutionary algorithm with 2) The variance of 2 (zm ) is less than a restart threshold .

a gradient-based method to improve the local search can be If these conditions are triggered, the evolution restart strategy

found in a number of previous works [30], [31]. Based on will be executed. That is, all the particles among the swarm6

⎧

⎪ 1, g > 1 + ;

⎨ (− 2g + 2(1 + ) g − ( − 1)2 )/4 ,

⎪

1 − ≤ g ≤ 1 + ;

⎪

⎪

( g , , ) = g , < g < 1 − ; (19)

( + )2 /4 , − ≤ g ≤ ;

⎪

⎩ g

⎪

⎪

⎪

0, g < − .

are randomly re-generated on their searching space. Although Algorithm 3 General steps for the optimization process

evolution histories may contain valuable information and can Input: The algorithm parameters , 1 , 2 , 1 , 2 , 1 , 2 ,

potentially provide feedback so as to guide the optimization, ∆, j , = 1, and s ;

determining whether these historical data are promising is Output: The final archive A( );

/*Main optimization iteration*/

still a challenging issue. In addition, a large amount of space Step 1: Randomly initialize the position and velocity vectors

should be pre-allocated to store these historical data. Hence, of the particles;

we decide to simply discard these data and restart the evolution Step 2: Obtain the state trajectory using numerical

by randomly re-initializing all the particles in the swarm. integration;

Step 3: Calculate the two objective values for all particles

among the current swarm;

G. Overall Algorithm Framework Step 4: Apply the nondominant sorting and Algorithm 1

to construct and update the archive A( );

For the proposed algorithm, the global best particle g( ) Step 5: Update A( ) via the gradient-assisted local

is selected from the updated archive A( ). Note that in the exploration;

transformed problem formulation, 1 is the primary mission Step 6: Perform the -bias selection process to

update A( );

objective to be optimized, while 2 reflects the constraint

Step 7: Search the global best particle g( ) from A( )

violation of the solution. Therefore, g( ) can be selected by via Algorithm 2;

following the procedures specified in Algorithm 2. Step 8: Update the velocity and position vectors of the

particles;

Algorithm 2 g( ) selection process Step 9: Check whether the termination condition is

triggered?

Input: A( ); if not, set = + 1 and return back to Step 2.

Output: g( ); Step 10: Terminate the optimization and output the final

/*Main process*/ archive A( );

Initialize F( ) = {} and IF( ) = {} /*End optimization iteration*/

for := 1, 2, ..., |A( )| do

if 2 (zm ) > 0 then

IF( ) = IF( ) ∪ zm

else III. C ONSTRAINED ATMOSPHERIC E NTRY P ROBLEM

F( ) = F( ) ∪ zm

end if In this section, an optimal control formulation of the

end for constrained atmospheric entry trajectory design problem is

if F( ) = ∅ then detailed. Specifically, the system dynamics used to describe the

g( ) = arg minzm ∈IF(s) 2 (zm ) motion of the spacecraft are formulated in Section III.A. Fol-

else

lowing that, a number of entry boundary and path constraints

g( ) = arg minzm ∈F(s) 1 (zm )

end if are constructed in Section III.B. Finally, the mission objectives

Output g( ) selected to assess the performance of the entry maneuver are

/*End the process*/ introduced in Section III.C.

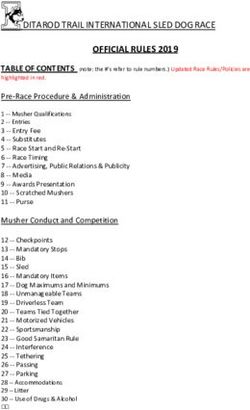

In summary, the conceptual block diagram of the pro- A. System Model

posed MOPSO-based trajectory optimization algorithm is vi-

The following set of first order differential equations can

sualized in Fig. 1. In order to clearly present how the opti-

be used for describing the motion of the entry vehicle:

mization process is executed, the general steps are summarised ⎡ ⎤

in the pseudocode (see Algorithm 3). Note that in Step 9 sin ⎡

0

⎤

V sin ψ cos γ

of Algorithm 3, the evolutionary process is terminated when ⎢

⎢ r cos φ

⎥

⎥ ⎢ 0 ⎥

either of the following two rules can be triggered: ⎢ V cos ψ cos γ ⎥ ⎢

⎢ 0 ⎥

⎥

⎢ r ⎥

D

⎢ ⎥

1) ≥ s ; ˙ = ⎢

⎢

−m − sin ⎥ + ⎢

⎥

⎢ 0 ⎥

⎥

2

2) ∀zm ∈ A( ), 2 (zm ) = 0 and the difference of E( 2 ) ⎢

⎢ L cos σ V −gr

+ ( rV ) cos

⎥

⎥ ⎢ 0 ⎥

⎢ ⎥

between two consecutive iterations (e.g., the -th and the ⎢ L sinmVσ V 0 ⎦

⎥

⎣ mV cos γ + r sin cos tan ⎦ ⎣

( − 1)-th iteration) is less than a tolerance value . σ − σ

In the second rule, E(·) outputs the expectation value and this (20)

rule indicates no further improvement can be made among where the system state variables are defined as =

feasible solutions. [ p , a , ]T ∈ R7 . Here, p = [ℎ, , ]T ∈ R3 determines the7

[Figure 1: block diagram. Phase 1, problem formulation and transformation: the constrained trajectory optimization model (objective function, temporal set, system dynamics, boundary conditions, path constraints) passes through constraints reformulation to give an unconstrained multi-objective trajectory optimization model, which is solved via the proposed MOPSO. Phase 2, problem solving: generate the initial swarm, compute objective values, execute non-dominant sorting, perform BS-based archive update, local search, RS, update of the particle velocity and position vectors, termination check, and output of the final archive.]

Fig. 1: Conceptual block diagram of the proposed algorithm

3-D position of the entry vehicle, consisting of the altitude $h$, longitude $\theta$ and latitude $\phi$, respectively. The radial distance $r$ can be obtained via $r = h + R_e$, where $R_e$ denotes the radius of the Earth. The components of $a = [V, \gamma, \psi]^T \in \mathbb{R}^3$ stand for the velocity, flight path angle (FPA), and the heading angle of the entry vehicle, respectively. $[\sigma, \sigma_c]$ denotes the actual and demanded bank angle profiles, and the control variable is assigned as $u = \sigma_c$. The physical meaning of the other variables/parameters appearing in Eq.(20), together with their values or calculation equations, can be found in Table I.

TABLE I: Variable Definitions

| Variables | Calculation/values |
|---|---|
| $g$: gravity | $g = \mu / r^2$ |
| $r$: radial distance | $r = h + R_e$ |
| $\rho$: atmospheric density | $\rho = \rho_0 \exp(-h/H)$ |
| $D$: drag force | $D = \frac{1}{2} \rho C_D S V^2$ |
| $L$: lift force | $L = \frac{1}{2} \rho C_L S V^2$ |
| $C_D$: drag coefficient | $C_D = C_{D0} + C_{D1} \alpha + C_{D2} \alpha^2$ |
| $C_L$: lift coefficient | $C_L = C_{L0} + C_{L1} \alpha$ |
| $S$: reference area | $S = 250$ m$^2$ |
| $R_e$: radius of the Earth | $R_e = 6371.2$ km |
| $\rho_0$: sea-level air density | $\rho_0 = 1.2256$ kg/m$^3$ |
| $H$: density scale height | $H = 7.25$ km |
| $m$: mass | $m = 92073$ kg |
| $\mu$: gravitational parameter | $\mu = 398603.2$ km$^3$/s$^2$ |

B. Entry Phase Constraints

During the planetary entry flight, the following four types of constraints are required to be satisfied:

1) Safety corridor constraints;

2) Variable terminal boundary constraints;

3) State and control path constraints;

4) Angular rate constraints.

1) Safety corridor constraints: To protect the structure of the entry vehicle, the aerodynamic heat transfer rate $\dot{Q}$, the dynamic pressure $P_d$, and the load factor $n_L$ must be restricted to certain safety corridors during the entire flight. This can be expressed by:

$$0 \leq \dot{Q}(r, V, \alpha) \leq \bar{\dot{Q}} \tag{21}$$

$$0 \leq P_d(r, V) \leq \bar{P}_d \tag{22}$$

$$0 \leq n_L(r, V) \leq \bar{n}_L \tag{23}$$

In Eqs.(21)-(23), the permissible peak values of $(\bar{\dot{Q}}, \bar{P}_d, \bar{n}_L)$ are set to (125, 280, 2.5). The heat transfer rate is a function of radial distance $r$, velocity $V$ and angle of attack (AOA) $\alpha$, whereas the dynamic pressure $P_d$ and load factor $n_L$ are mainly determined by the radial distance $r$ and the velocity $V$. The value of $\alpha$ (in degrees) can be computed via the following equation [32]:

$$
\alpha =
\begin{cases}
40 - 0.20705\,(V - \hat{V})^2 / 340^2, & \text{if } V < \hat{V}; \\
40, & \text{if } V \geq \hat{V}.
\end{cases}
\tag{24}
$$

in which $\hat{V} = 4570$ m/s; the coefficient of the quadratic term is equal to 0.20705.

It is worth noting that in Eq.(21), the heat transfer rate consists of two major components:

$$\dot{Q}(r, V, \alpha) = \dot{Q}_r(\alpha) \cdot \dot{Q}_d(r, V) \tag{25}$$

in which $\dot{Q}_r(\alpha)$ stands for the aerodynamic heat flux and is calculated via Eq.(26), whereas $\dot{Q}_d(r, V)$ represents the radiation heat transfer and is given by Eq.(27).

$$\dot{Q}_r(\alpha) = c_0 + c_1 \alpha + c_2 \alpha^2 + c_3 \alpha^3 \tag{26}$$

$$\dot{Q}_d(r, V) = K_Q \rho^{0.5} V^{3.07} \tag{27}$$

In Eq.(26), $(c_0, c_1, c_2, c_3) = (1.067, -1.101, 0.6988, -0.1903)$. Furthermore, the dynamic pressure and load factor can be calculated via Eq.(28) and Eq.(29), respectively.

$$P_d(r, V) = \frac{1}{2} \rho V^2 \tag{28}$$

$$n_L(r, V) = \frac{\sqrt{L^2 + D^2}}{mg} \tag{29}$$

2) Boundary constraints: The terminal boundary constraints are imposed such that the flight states can reach specific values at $t_f$ in order to start the terminal area energy management phase [11], [16]. Specifically, the altitude and flight path angle are required to satisfy

$$
\begin{aligned}
|h(t_f) - h_f| &\leq \epsilon_{h_f} \\
|\gamma(t_f) - \gamma_f| &\leq \epsilon_{\gamma_f}
\end{aligned}
\tag{30}
$$

where $h_f = 30$ km and $\gamma_f = -5^\circ$ are the targeted terminal altitude and flight path angle values, respectively. $\epsilon_{h_f} = 500$ m and $\epsilon_{\gamma_f} = 0.1^\circ$ stand for the permissible errors. Moreover, the terminal velocity is required to satisfy

$$V_f^{\min} \leq V(t_f) \leq V_f^{\max}$$

where $V_f^{\min}$ and $V_f^{\max}$ are set to 900 m/s and 1100 m/s, resulting in $V(t_f) \in [900, 1100]$ m/s.

3) State and control path constraints: The state and control path constraints are imposed such that the system state and control variables remain within tolerable regions during the entire entry flight (e.g., $\forall t \in [0, t_f]$). These constraints can be written as:

$$
\begin{aligned}
\underline{h} \leq h(t) \leq \bar{h}, &\qquad \underline{\theta} \leq \theta(t) \leq \bar{\theta} \\
\underline{\phi} \leq \phi(t) \leq \bar{\phi}, &\qquad \underline{V} \leq V(t) \leq \bar{V} \\
\underline{\gamma} \leq \gamma(t) \leq \bar{\gamma}, &\qquad \underline{\psi} \leq \psi(t) \leq \bar{\psi} \\
\underline{\sigma} \leq \sigma(t) \leq \bar{\sigma}, &\qquad \underline{\sigma}_c \leq \sigma_c(t) \leq \bar{\sigma}_c
\end{aligned}
\tag{31}
$$

where $\underline{x} = [\underline{h}, \underline{\theta}, \underline{\phi}, \underline{V}, \underline{\gamma}, \underline{\psi}, \underline{\sigma}]$ and $\underline{u} = \underline{\sigma}_c$ denote the lower bounds of $x$ and $u$, while $\bar{x} = [\bar{h}, \bar{\theta}, \bar{\phi}, \bar{V}, \bar{\gamma}, \bar{\psi}, \bar{\sigma}]$ and $\bar{u} = \bar{\sigma}_c$ represent the upper bounds with respect to $x$ and $u$, respectively.

4) Angular rate constraints: Early studies suggested that, compared to the position and velocity profiles, more oscillations can be found in the angular variable trajectories, which is usually not desirable [11], [16]. Therefore, different from some existing research works [11], [16], [17], [25], the angular rate constraints are also considered in this work such that the evolution of the corresponding angular variables can become smoother. Specifically, these constraints can be modeled as:

$$
\begin{aligned}
\underline{\dot{\gamma}} &\leq \dot{\gamma}(t) \leq \bar{\dot{\gamma}} \\
\underline{\dot{\psi}} &\leq \dot{\psi}(t) \leq \bar{\dot{\psi}} \\
\underline{\dot{\sigma}} &\leq \dot{\sigma}(t) \leq \bar{\dot{\sigma}}
\end{aligned}
\tag{32}
$$

in which the values of $[\underline{\dot{\gamma}}, \underline{\dot{\psi}}, \underline{\dot{\sigma}}]$ and $[\bar{\dot{\gamma}}, \bar{\dot{\psi}}, \bar{\dot{\sigma}}]$ are assigned as $[-0.5^\circ, -0.5^\circ, -0.5^\circ]$/s and $[0.5^\circ, 0.5^\circ, 0.5^\circ]$/s, respectively. Imposing these constraints might be helpful for some particular uses of the entry vehicle such as regional reconnaissance [14] and payload delivery [33]. Since the angular trajectories are less likely to have instantaneous variations, the information gathering of inaccessible areas or high-precision payload delivery tends to be much easier.

C. Objectives

The atmospheric entry mission is established as an optimization problem. Different performance indices reflecting the quality of the entry flight are formulated as objective functions. For example:

∙ An efficiency-related measure can be designed by minimizing the flight time duration (e.g., the terminal time instant $t_f$). That is,

$$\mathrm{Obj}_1 = \min t_f \tag{33}$$

∙ A safety-related measure can be selected by minimizing the total amount of aerodynamic heat. That is,

$$\mathrm{Obj}_2 = \min \int_{t_0}^{t_f} \dot{Q}\, dt \tag{34}$$

∙ An entry capability-related measure can be designed by maximizing the cross range (e.g., the terminal $\phi(t_f)$). That is,

$$\mathrm{Obj}_3 = \max \phi(t_f) \tag{35}$$

∙ An energy-related measure can be designed by minimizing the terminal kinetic energy, which is written as

$$\mathrm{Obj}_4 = \min V(t_f) \tag{36}$$

In the later simulation result section, all the above objectives will be considered separately.

IV. TEST RESULTS AND ANALYSIS

A. Test Case Specification

To carry out the simulations, algorithm-related parameters are first assigned. Specifically, $r_1$ and $r_2$ are randomly generated on the interval $[0, 1]$. $[c_1, c_2]$ is set to $[1.49445, 1.49445]$, while $\omega$ is calculated via $\omega = (1 + r_1)/2$. Two further parameters, indexed 1 and 2, are set to 0.3 and 0.7, respectively. $[N_j, N_s, N_k]$ is assigned as $[40, 2000, 100]$, and $\epsilon = 10^{-6}$. The demanded bank angle is randomly initialized within the region $\sigma_c \in [-90, 1]^\circ$. The proposed algorithm is run on a PC with an Intel Quad-Core i7-4790 CPU (8GB RAM).

For the considered atmospheric entry problem, four test cases are investigated. Case $i$ stands for minimizing $J_1 = \mathrm{Obj}_i$, $i \in \{1, 2, 3, 4\}$, while simultaneously satisfying all types of constraints. By carrying out the reformulation process shown in Fig.1, the total amount of constraint violation is considered as an additional objective function $J_2$ for each mission case, thereby resulting in four unconstrained bi-objective formulations.

To highlight the advantage of using the proposed design, comparative studies were performed between the biased MOPSO approach and other well-developed trajectory optimization algorithms. For example, a PSO-based trajectory optimization method suggested in [16], [17], together with an artificial bee colony-based trajectory planning algorithm reported in [25], is chosen for the comparative study. It is worth noting that for these two evolutionary methods, the penalty function strategy is applied to deal with constraints existing in the optimization model. In addition, the evolution restart strategy introduced in Section II.F is also applied in these methods. We abbreviate these two methods as PFPSO and PFABC, respectively.

B. Performance of Different Methods

Optimal results calculated by applying different heuristic trajectory optimization algorithms for a single trial are first presented and analyzed in this subsection. The optimized state/control evolutions, along with the corresponding path constraint history, are visualized in Figs.2-5 for the four entry mission cases.

[Figures 2-4: each figure contains six panels (altitude versus time, latitude versus longitude, FPA versus time, velocity versus time, bank angle versus time, and heating versus time) comparing the Proposed, PFABC, PFPSO, and CASADI solutions.]

Fig. 2: State/control/constraint evolutions: Case 1

Fig. 3: State/control/constraint evolutions: Case 2

Fig. 4: State/control/constraint evolutions: Case 3

As can be observed from Figs.2-5, the pre-specified entry terminal boundary conditions and safety-related path constraints can be satisfied for all the mission cases, thereby confirming the validity of the investigated heuristic methods. That is, both the penalty function-based and the multi-objective transformation-based constraint handling strategies are able to guide the searching direction toward the feasible region. In terms of the flight trajectories, the same trend can be observed from the solutions generated by different heuristic algorithms for all the considered mission cases. Moreover, for the third mission case, the three evolutionary methods can produce almost identical solutions. In addition, by viewing the system state and control profiles, it is clear that the obtained trajectories are relatively smooth. This can be attributed to the differential equation imposed on the actual bank angle variable $\sigma$. This equation can also be understood as a first-order filter and it indirectly restricts the rate of the actual bank angle.

A comparison is also made between the proposed method and another numerical optimal control solver, named CASADI [34], for solving the constrained atmospheric entry problem (the interior point solver IPOPT [35] is applied in CASADI). This solver has become increasingly popular and it has been applied in the literature to address a number of motion planning or trajectory optimization problems [36], [37]. The four mission cases are solved using CASADI with $\epsilon = 10^{-6}$ as the optimization tolerance. The optimized trajectories are visualized in Figs.2-5.

As can be viewed from Figs.2-5, the trajectories produced by the proposed method and CASADI are comparable and generally follow the same trend. However, CASADI has its unique features. For example, compared to the developed approach, CASADI is able to produce much smoother bank

angle profiles for all the mission cases. This is apparent from Figs.2-5, where more oscillations can be identified on the bank angle trajectories generated using the proposed method and other heuristic methods. This is mainly due to the randomness of the evolutionary process.

[Figure 5: six panels (altitude versus time, latitude versus longitude, FPA versus time, velocity versus time, bank angle versus time, and heating versus time) comparing the Proposed, PFABC, PFPSO, and CASADI solutions.]

Fig. 5: State/control/constraint evolutions: Case 4

To provide a clear demonstration of the performance achieved via different optimization methods, quantitative results for cases 1-4 are tabulated in Table II. It should be noted that comparing $J_1$ alone is not meaningful in cases where solutions can be infeasible, since the objective function value can trivially be improved by an infeasible solution. Hence, in the comparison shown in Table II, $J_1$ and $J_2$ are pairwise compared between different methods (e.g., a $J_1$ value is associated with a $J_2$ value, and it is relevant to compare this pair with other pairs).

TABLE II: Optimal results obtained via different methods

| Case No. | PFPSO $J_1$ | PFPSO $J_2$ | PFABC $J_1$ | PFABC $J_2$ | Proposed $J_1$ | Proposed $J_2$ | CASADI $J_1$ | CASADI $J_2$ |
|---|---|---|---|---|---|---|---|---|
| Case.1 | 977.85 | 0 | 945.32 | 0 | 908.23 | 0 | 1061.15 | 0 |
| Case.2 | 40212 | 0 | 40613 | 0 | 39591 | 0 | 40018 | 0 |
| Case.3 | 16.066 | 0 | 16.060 | 0 | 16.068 | 0 | 15.839 | 0 |
| Case.4 | 947.50 | 0 | 964.88 | 0 | 936.16 | 0 | 1086.81 | 0 |

From the solution pairs displayed in Table II, although the relative differences are in general not large, it can be seen that the proposed approach achieves better objective values for all the considered mission cases. Note that for mission case 3, the aim is to maximize the final latitude value. Hence, a larger objective value is desired for this mission case. According to the reported solution pairs and trajectory profiles, no constraint defined in Section III.B is violated, thus confirming the effectiveness of both CASADI and the proposed method. More importantly, based on these results, one can rule out the possibility that the better $J_1$ values are the result of infeasible solutions.

In addition, multiple trials were executed to compare the convergence ability and robustness of the proposed approach and CASADI. Specifically, 100 independent trials were performed using the proposed method with randomly initialized swarms. Similarly, 100 trials were performed using CASADI by specifying different initial guess values. The resulting solution pairs for these two methods are collected to generate the histograms (as displayed in Fig. 6 and Fig. 7) such that the relative difference between these two methods in terms of $J_1$ and $J_2$ can be clearly shown.

Fig. 6: Histograms of $J_1$ for the two methods

In Fig. 6, the lower and upper outlier boundaries for the proposed approach and CASADI are indicated by the blue and red vertical lines, respectively. From the results presented in Fig. 7, it is obvious that the proposed approach is able to drive the candidate solution to the feasible region for all the trials. As for CASADI, on the other hand, outliers can be found in the obtained $J_2$ histograms, indicating that CASADI converges to a local infeasible solution multiple times. In addition, by viewing the corresponding $J_1$ histograms, a number of outliers can also be detected. These can be attributed to infeasible solutions. Hence, for the considered problem, CASADI tends to be sensitive with respect to the initial guess values and has a greater possibility of converging to local infeasible solutions. From this point of view, benefiting from the evolution restart strategy developed in Section II.F, the proposed approach is more robust than its counterpart.
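The pairwise treatment of $(J_1, J_2)$ over repeated trials can be illustrated with a small sketch. It assumes each trial is stored as a `(J1, J2)` tuple and that a trial counts as feasible when its `J2` does not exceed a tolerance; the function name `summarize_trials`, the dictionary keys, and the tolerance `tol` are hypothetical and are not taken from the paper.

```python
from statistics import mean
from typing import Dict, Optional, Sequence, Tuple


def summarize_trials(
    pairs: Sequence[Tuple[float, float]],
    tol: float = 0.0,
) -> Dict[str, Optional[float]]:
    """Summarize repeated trials given as (J1, J2) pairs.

    A trial is treated as feasible when J2 <= tol (an assumed rule).
    J1 is averaged over feasible trials only, so that an infeasible
    trial with an attractive J1 cannot distort the comparison.
    """
    if not pairs:
        raise ValueError("at least one trial is required")

    feasible_j1 = [j1 for j1, j2 in pairs if j2 <= tol]
    failures = len(pairs) - len(feasible_j1)

    return {
        "failures": failures,
        "success_rate": 1 - failures / len(pairs),
        "mean_j1_feasible": mean(feasible_j1) if feasible_j1 else None,
    }


# Hypothetical data: the third trial has the lowest J1 but violates constraints.
example = summarize_trials([(10.0, 0.0), (12.0, 0.0), (8.0, 0.3), (11.0, 0.0)])
```

In this made-up example the infeasible trial is counted as a failure and excluded from the `J1` average, which mirrors the reason for comparing $J_1$ and $J_2$ as a pair rather than $J_1$ alone.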

Fig. 7: Histograms of $J_2$ for the two methods

C. Convergence Analysis for Evolutionary Methods

In this subsection, we focus on the analysis of convergence performance of the different evolutionary trajectory optimization methods investigated in this paper. Specifically, attention is given to the evolution histories of $J_1$ as well as $J_2$ for the considered four mission cases. Firstly, the average value of $J_2$ for each optimization iteration is presented in Fig. 8.

[Figure 8: four panels (Cases 1-4) plotting the average $J_2$ value against iteration for the Proposed, PFABC, and PFPSO methods.]

Fig. 8: $J_2$ evolutions: Cases 1-4

As can be seen from Fig.8, the proposed multi-objective approach tends to result in faster $J_2$ convergence histories for the four mission cases in comparison to the PFABC and the PFPSO algorithms. Specifically, by applying the proposed method, the number of optimization iterations required to steer the average value of $J_2$ to zero is less than or equal to 10 for all mission cases, whereas for the other methods this number almost doubles. The $J_2$ evolution trajectories highlight the fact that the proposed approach is able to quickly locate the feasible solution and drive the current swarm/population toward the feasible region. Next, the average value of $J_1$ for each optimization iteration is presented in Fig. 9.

[Figure 9: four panels (Cases 1-4) plotting the average $J_1$ value against iteration for the Proposed, PFABC, and PFPSO methods.]

Fig. 9: $J_1$ evolutions: Cases 1-4

Similar to the results presented in Fig. 8, the proposed multi-objective approach has the capability of producing faster $J_1$ convergence histories for all the considered mission cases in comparison to its counterparts. More precisely, the average $J_1$ cost value achieved via the proposed algorithm converges to a more optimal steady value in fewer optimization iterations. This average $J_1$ value then remains almost the same and does not decrease significantly in later optimization iterations. To clearly illustrate this behaviour, convergence results for mission case 4 are partly extracted and presented. For instance, by limiting the maximum number of iterations to 60, the history of the average $J_1$ value is shown in the last subfigure of Fig. 9. From this subfigure, it is apparent that enhanced convergence performance can be obtained by applying the proposed algorithm for solving the constrained atmospheric entry trajectory optimization problem.

D. Computational Performance of Different Methods

Apart from the results compared in terms of algorithm iterations, performance comparison between the new algorithm and other methods should also be done in terms of the computational cost. To achieve this, attention is first given to the computational times required by CASADI and the proposed method. Note that an important parameter which can have an impact on the resulting computational times is the optimization tolerance $\epsilon$. By specifying different $\epsilon$ levels, mission case 1 to case 4 are re-performed and the average computational results of 50 successful runs are tabulated in Table III.

According to the data shown in Table III, it is obvious that the computational times required by the proposed method are generally less than those of CASADI except for mission case 1 and mission case 3 when $\epsilon$ is set to $10^{-6}$. In addition,

the computational performance of CASADI is more sensitive with respect to $\epsilon$ in comparison with the proposed approach. That is, the computation times required by CASADI tend to increase considerably as $\epsilon$ becomes tighter. By contrast, only a slight increase of the computation time can be seen from the reported results for the proposed global exploration-based approach.

TABLE III: Computational performance of CASADI and the proposed method (in seconds)

| Case No. | Proposed, $\epsilon = 10^{-6}$ | Proposed, $\epsilon = 10^{-7}$ | Proposed, $\epsilon = 10^{-8}$ | CASADI, $\epsilon = 10^{-6}$ | CASADI, $\epsilon = 10^{-7}$ | CASADI, $\epsilon = 10^{-8}$ |
|---|---|---|---|---|---|---|
| Case.1 | 102.24 | 108.37 | 113.15 | 87.86 | 144.34 | 208.87 |
| Case.2 | 32.38 | 34.33 | 36.72 | 39.98 | 79.98 | 114.52 |
| Case.3 | 50.14 | 54.56 | 58.25 | 41.62 | 82.63 | 125.56 |
| Case.4 | 19.42 | 21.21 | 23.08 | 25.47 | 53.86 | 82.35 |

As for the different evolutionary algorithms studied in this paper, we design the comparative experiments by introducing three indicators. These indicators can reflect the computational cost required by the evolutionary algorithms from different aspects:

∙ $T_1$: The average computation time required for different evolutionary algorithms to find the first feasible solution.

∙ $T_2$: The average computation time required for different evolutionary algorithms to drive the entire population to the feasible region.

∙ $T_3$: The average computation time required for different evolutionary algorithms to drive the average $J_1$ value of all feasible solutions to reach a certain level $\hat{J}_1$.

For mission cases 1-4, we assign the $\hat{J}_1$ values as (1000, 41000, 16, 1000). 50 independent runs were executed for all the four mission cases and the average results are tabulated in Table IV.

In fact, due to the implementation of the local exploration process, bias selection method, and evolution restart strategy, the proposed method performs additional steps and tends to be more costly at each iteration. However, certain benefits can be obtained by performing these additional steps. For example, as can be observed in Table IV, the proposed approach tends to be less time-consuming than its counterparts in terms of finding the first feasible solution and driving the entire population to the feasible region for all the mission cases. Moreover, compared to other algorithms, the proposed approach can rapidly drive the candidate solution set to achieve a desirable level. As such, the effectiveness and advantages of performing these additional steps can be appreciated.

E. Impact of the Bias Selection Strategy and Local Exploitation Process

In previous subsections, it is illustrated that we can achieve better final solutions by applying the proposed method in comparison to other trajectory optimization planners. However, it is still not clear whether the implementation of the proposed bias selection strategy as well as the gradient-based local exploration method contributes to the problem solving process. Therefore, new experiments are designed to further test the impact of applying the bias selection strategy and the local exploration method. Two additional experiments are performed:

∙ Experiment 1: We compare the results produced by applying the proposed algorithm with and without the bias selection strategy.

∙ Experiment 2: We compare the results produced by applying the proposed algorithm with and without the local exploration method.

[Figures 10-11: average $J_2$ value versus iteration for MOPSO with and without bias selection.]

Fig. 10: Experiment 1: $J_2$ results for mission case 1

Fig. 11: Experiment 1: $J_2$ results for mission case 2

The average constraint violation histories (e.g., $J_2$ evolutions) for mission case 1 and case 2 are presented in Fig. 10 and Fig. 11, respectively. According to the presented $J_2$ trajectories, it is evident that without using the bias selection strategy, the proposed algorithm does not work as well as the

one equipped with the bias selection strategy. More specifically, after executing a large number of iterations, there are still infeasible solutions among the swarm. In addition, the $J_2$ convergence history tends to be much slower if a search bias is not introduced to the multi-objective trajectory optimization process. Hence, we can conclude that the implementation of the bias selection strategy has a positive influence in guiding the multi-objective optimization process to find more promising solutions.

TABLE IV: Computational performance of different evolutionary methods

| Case No. | Proposed $T_1$ (s) | Proposed $T_2$ (s) | Proposed $T_3$ (s) | PFABC $T_1$ (s) | PFABC $T_2$ (s) | PFABC $T_3$ (s) | PFPSO $T_1$ (s) | PFPSO $T_2$ (s) | PFPSO $T_3$ (s) |
|---|---|---|---|---|---|---|---|---|---|
| Case.1 | 0.64 | 5.23 | 63.25 | 1.62 | 8.64 | 64.36 | 2.05 | 43.27 | 125.43 |
| Case.2 | 0.78 | 7.44 | 26.68 | 2.78 | 15.53 | 37.50 | 1.82 | 15.15 | 51.15 |
| Case.3 | 0.73 | 6.86 | 25.74 | 2.36 | 8.89 | 31.26 | 1.44 | 8.58 | 30.08 |
| Case.4 | 0.77 | 6.89 | 17.91 | 2.29 | 13.21 | 18.72 | 1.58 | 13.26 | 18.36 |

[Figures 12-13: average $J_1$ value versus iteration for MOPSO with and without local exploitation.]

Fig. 12: Experiment 2: $J_1$ results for mission case 1

Fig. 13: Experiment 2: $J_1$ results for mission case 2

As for experiment 2, the corresponding $J_1$ evolution results for entry mission cases 1 and 2 are illustrated in Fig. 12 and Fig. 13, respectively. From the trajectories displayed in Fig. 12 and Fig. 13, it is clear that MOPSO equipped with the local exploration method can quickly steer $J_1$ to a more optimal steady value for the considered atmospheric entry mission cases. Consequently, we can conclude that it is beneficial to apply the gradient-based local exploration method to update the candidate set during the optimization iteration. Note that for experiment 1 and experiment 2, similar conclusions can also be made for mission cases 3-4, so we omit the presentation of their results for space reasons.

F. Impact of the Restart Strategy

In this subsection, we are interested in studying and testing the effectiveness of the restart strategy (RS) proposed in Section II.F. Experiments were designed by comparing the results produced via the proposed algorithm with and without this strategy. Note that empirical studies were carried out and the value of the restart threshold is set to $10^{-3}$ throughout the simulation.

[Figure 14: $J_2$ (constraint violation) versus iteration for one failure example from each of Cases 1-4.]

Fig. 14: Failure case examples

100 independent runs were performed for the four mission cases and statistical results including the average value of the primary objective (denoted as mean($J_1$)), the average constraint violation value of failure cases (denoted as mean($J_2$)), the number of runs that converged to an infeasible solution (denoted as $N_d$), and the success rate (computed via $R_s = 1 - N_d/100$) are tabulated in Table V.

In addition, Fig. 14 illustrates examples of convergence failure (collected from Table V) for the four mission cases. As can be seen from Fig. 14, the $J_2$ evolution trajectories for different mission cases converge to a value which is above zero. This further confirms that the evolution process has the
