RCT: RANDOM CONSISTENCY TRAINING FOR SEMI-SUPERVISED SOUND EVENT DETECTION

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

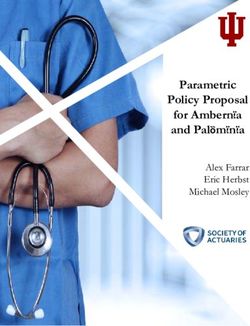

RCT: RANDOM CONSISTENCY TRAINING FOR SEMI-SUPERVISED SOUND EVENT DETECTION Nian Shao1,3 Erfan Loweimi2 Xiaofei Li1∗ 1 Westlake University & Westlake Institute for Advanced Study, Hangzhou, China 2 Centre for Speech Technology Research (CSTR), University of Edinburgh, Edinburgh, UK 3 School of Philosophy, Psychology & Language Sciences, University of Edinburgh, Edinburgh, UK arXiv:2110.11144v1 [eess.AS] 21 Oct 2021 ABSTRACT Many SSL approaches involve the scheme of consistency Sound event detection (SED), as a core module of acoustic regularization (CR), which constrains the model prediction to environmental analysis, suffers from the problem of data defi- be invariant to any noise applied on the inputs [3] or hid- ciency. The integration of the semi-supervised learning (SSL) den states [4, 5]. However, there exists a potential risk for largely mitigates such problem at almost no extra cost. This CR known as confirmation bias, especially when consistency paper researches on several core modules of SSL, and intro- loss is too heavily weighted in training [6]. To alleviate such duces a random consistency training (RCT) strategy. First, risk, MeanTeacher [6] applies a consistency constraint in the a self-consistency loss is proposed to fuse with the teacher- model parameter space, which takes an exponential moving student model, aiming at stabilizing the training. Second, a average (EMA) of the training student model as a teacher to hard mixup data augmentation is proposed to account for the generate pseudo labels for unlabelled data. The adoption of additive property of sounds. Third, a random augmentation such method to SED achieved the top performance in 2018 scheme is applied to combine different types of data aug- DCASE challenge [7], and became a role model, inspiring mentation methods with high flexibility. Performance-wise, many variants in semi-supervised SED [8–11]. the proposed strategy achieves 44.0% and 67.1% in terms of Data augmentation plays an important role in SSL, where the PSDS1 and PSDS2 metrics proposed by the DCASE chal- its feasibility and diversity largely affects the performance lenge, which outperforms other widely-used strategies. [12]. Typically, there are two categories of data augmenta- tions: data warping and oversampling [13]. In audio process- Index Terms— Semi-supervised learning, sound event ing, widely applied methods such as SpecAugment [14], time detection, data augmentation, consistency regularization shift [11] and pitch shift [15] belongs to the former class. On the other hand, mixup [16, 17] conducts a linear interpolation 1. INTRODUCTION of two classes of data points to oversample the dataset, which utilizes the mixed samples to smooth the decision boundaries Sound conveys a substantial amount of information about the by pushing them into low-density region. While the effec- environment. The skill of recognizing the surrounding envi- tiveness of each individual method is illustrated in the respec- ronment is taken for granted by human while it is a challeng- tive work, combining them is not guaranteed to result in a ing task for machines [1]. Sound event detection (SED) aims performance gain [13]. This is owing to having a large hy- to detect sound events within an audio stream by labeling the perparameter searching space which complicates finding the events as well as their corresponding occurrence timestamps. optimal set of hyperparameters. Such uncertainty prevents Taking advantage of deep neural networks, promising results efficient deployment of data augmentation methods for SSL have been obtained for SED [2]. However, the high annota- SED, where each sample is heavily augmented by multiple tion cost poses obstacles on its further development. augmentations [8–10]. Although CR has been applied for One solution for such data deficiency problem is semi- specific data augmentations as for mixup (ICT [3]) and time supervised learning (SSL). Since weakly-labeled (clip-level shift (SCT [11]), a more generalized usage of CR for a range annotated) and unlabelled data of sound event records are of data augmentation methods remained unexplored. abundant, recently, a domestic environment SED task was re- In this work, we propose a new SSL strategy for SED, leased by Detection and Classification of Acoustic Scenes and which consists of three novel modules: i) fuse MeanTeacher Events (DCASE) challenge1 . The challenge established a sys- and a self-consistency loss, where the latter provides an extra tematically organized semi-supervised dataset [2] to facilitate stabilization and regularization for the MeanTeacher training the development of semi-supervised SED. procedure; ii) a hard mixup scheme. Because of the additive ∗ Corresponding author. property of sound, the mixture of sounds would be considered 1 http://dcase.community/challenge2021

as concurrent sound events, rather than as an intermediate Time shift Time mask Pitch shift

point between two sounds as is done in the vanilla mixup [16];

iii) adapt RandAugment [18], an efficient data augmentation

Audio warping

policy which perturbs each data point with a randomly se-

Input sample

lected transformation, which allows different types of aug- Hard mixup

mentations to be exploited in an unified way. The proposed

strategy is referred to as random consistency training (RCT).

Teacher model Student model

Experiments show that each proposed module outperforms its

′ ′ Unsupervised Loss

competing method in the literature. As a whole, the proposed ෩ . , ෩ , ෩ , , ෩ ,

SSL strategy remarkably outperforms other counterpart ones, ′ ′ Self-consistency

and achieves top performance in DCASE 2021 challenge. , ,

Loss

′ ′

෩ , , ෩ , ෩ , , ෩ ,

2. THE PROPOSED METHOD

MeanTeacher Loss

SED is defined as a multi-class detection problem where the random selection EMA loss object

onset and offset timestamps of multiple sound events should

be recognized from the input audio clips. We denote the

(l) Fig. 1: Flowchart of RCT: both hard mixup and audio warping

time-frequency domain audio clips as Xi ∈ RT ×K , where are first applied for data augmentation; MeanTeacher [6] and self-

T is the number of frames and K is the dimension of LogMel consistency are then used for SSL training. Subscripts R and M are

filterbank features. Three types of data annotations are used set to distinguish the predictions of audio warping and hard mixup.

for training data, i.e. weakly labeled, strongly labeled and

unlabeled, which are indicated by superscript l ∈ {w, s, u}, feasible for images, whereas the interpolation of two audio

respectively. i denotes the index of the sample among a to- clips produces a new audio clip due to the additive property

(l)

tal of N (l) data points of one type. Let C and Yi be the of the sound. As a result, a combination of multiple audio

number of sound event classes and data labels, respectively. clips could be regarded as a realistic sample containing du-

The weakly labeled and strongly labeled data have the clip- plicated sound events, and should be recognized as an audio

(w) clip with duplicated sound classes. Thus, the audio mixup is

level and frame-level labels denoted by Yi ∈ RC and

(s) 0 proposed to directly add multiple samples together, and the

Yi ∈ RT ×C , respectively. Since the time resolution of

mixture is labeled with all the classes in all original samples.

sound events is normally much lower than the one of sound

The sound energies of the mixed samples are remained un-

signals, we use pooling layers in the CNN, which results in a

changed, as they reflect the real energy of sounds. Moreover,

coarser time resolution T 0 rather than T for the predictions.

we find that combining more than two audio clips could bring

The baseline model is a CRNN consists of a 7-layer CNN

extra benefits. That is, it further condenses the distribution of

with Context Gating layers [19], cascaded by a 2-layer bidi-

sound events and can help the model toward better discrimi-

rectional GRU. An attention module is added at the end to

nating the sound events. Therefore, we randomly add two or

produce different levels of predictions [7]. MeanTeacher [6]

three samples together in hard mixup.

is employed for SSL. As shown in Fig. 1, a teacher model

Audio warping: We use three audio warping methods

is obtained by an EMA of the student CRNN model to pro-

in this work, and their warping magnitudes are set to d ∈

vide pseudo labels for unlabeled samples. The training loss is

{1, 2, . . . , 9}. One warping method is randomly chosen with

L = LSupervised + LMeanTeacher , where LSupervised is the cross-

a random d for each mini-batch: Time shift [11] circularly

entropy loss for the labeled data, and LMeanTeacher is the Mean-

shifts each audio clip along time axis with a duration of d

Teacher mean square error (MSE) loss for the unlabeled data.

seconds; Time mask [14] randomly selects 5d intervals from

the audio clip to be masked to 0 and the length of each mask

interval is empirically set to 0.1 s; Pitch shift [15] randomly

2.1. Random data augmentation for audio raises or lowers the pitch of the audio clip by d/2 semitones,

RandAugment [18] was proposed as an efficient way of incor- where both pitches and formants are stretched.

porating different types of image transformations. In RCT, we Hard mixup and audio warping are both applied to each

take such idea to construct a general audio warping methods sample in a mini-batch. As shown in Fig. 1, the two methods

for consistency regularization. Random data augmentation is triple the batch size in each training step.

accomplished by combining it with the proposed hard mixup,

which means each training sample is augmented twice. 2.2. Self-consistency training

Hard mixup: The vanilla mixup [16] conducts an in-

terpolation of two data points belonging to different classes, The MeanTeacher loss used in [7] has already shown a no-

aiming to smooth the decision boundary. Such operation is table capacity in mining information from unlabeled data.15

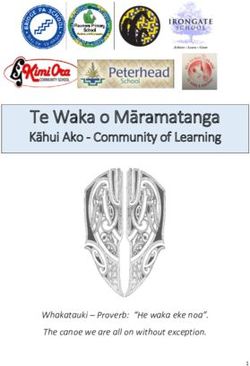

To further utilize the unsupervised data, we propose to ap- PSDS1

14 PSDS2

Relative PSDS Performance (%)

ply self-consistency regularization in addition to the Mean-

Teacher loss. Let Ŷi

(w)

∈ RC and Ỹi

(w)

∈ RC denote 13

the weak (clip-level) predictions of original and augmented 12

(s) 0

samples of the student model, and similarly Ŷi ∈ RT ×C 11

(s) 0

and Ỹi ∈ RT ×C for the strong (frame-level) predictions. 10

Self-consistency regulates the model by an extra MSE loss 9

(w)

N 8

1 X (w) (w)

LSC = r(step) kDp(w) (Ŷi ) − Ỹi k22 2 3 4 5 6 7 8 9

N (w) C i Maximum Transformation Magnitude (dmax)

(s)

N Fig. 2: The relative performance gain as a function of maximum

1 X (s) (s)

+ r(step) (s) 0

kDp(s) (Ŷi ) − Ỹi k22 , (1) transformation magnitude (dmax ). The transformation magnitude

N CT i (d) is randomly selected from [1, dmax ]. Relative performance

gain computed using the baseline performance (PSDS1 = 34.74%,

where k · k2 denotes the Euclidean norm for a vector/matrix, PSDS2 = 53.66%). The markers and vertical lines represent the

r(step) is a ramp-up function varying along the training step, mean and standard deviation computed using three trials.

(l)

and Dp (·) (l ∈ {w, s}) is a transformation on the predic-

tions of original samples, as the labels should correspondingly 3. EXPERIMENTAL RESULTS AND DISCUSSION

change for augmented samples. Pitch shift and time mask do

not change the labels. Time shift should accordingly shift the We use the baseline model [7] on DCASE 2021 Task 4 dataset

2

strong labels (or predictions) along time axis. As for hard to test the performance of the proposed method. The dataset

mixup, the mixed audio clip includes all the sound classes consists of 1578 weakly-labeled, 10000 synthesized strongly-

presented in the original audio clips. However, the labels for labeled and 14412 unlabeled audio clips. Each 10-second au-

the combined sound classes cannot be trivially obtained by dio clip are first resampled to 16 kHz and then frame blocked

adding the predictions of original samples, since the summa- with a frame length of 128 ms (2048 samples) and a hop

tion of two soft-predictions is meaningless. Instead, we define length of 16 ms (256 samples). After 2048-point fast Fourier

a non-linear transformation for hard mixup transform, 128-dimensional LogMel features are extracted for

each frame, converting the 10-second audio clip into a 626 ×

(l) (l) (l)

Dmixup (Ŷi ) = ∨i∈M harden(Ŷi ), (2) 128 spectrogram. All samples are normalized to [−1, 1] be-

fore feeding into the network.

where ∨ indicates element-wise OR operation, M is an arbi-

The batch size is 48, consisting of 12 weakly-labeled, 12

trary set consists of two or three data samples used in hard

strongly-labeled and 24 unlabeled data points. The learning

mixup, and harden(·) is an empirically estimated element-

rate ramps up to 10−3 until 50 and is scheduled by Adam op-

wise binary hardening function which ceils (or floors) the ma-

timizer [20] until the end of training, i.e. 200 epochs. The

trix elements to 1 (or 0) if the elements are larger than 0.95 (or

weight for MeanTeacher and self-consistency losses, r(step),

smaller than 0.05). This transformation first hardens the pre-

linearly ramps up from 0 to 2 at epoch 50 and is then kept

dictions of original samples, from which the active/inactive

unchanged. The system performance is evaluated through

sound classes are combined. The total loss L used for train-

polyphonic sound detection scores (PSDSs) [21] according

ing the CRNN model will be

to the DCASE 2021 challenge guidelines. The metrics takes

L = LSupervised + LMeanTeacher + LSC . (3) both response speed (PSDS1 ) and cross-trigger performance

(PSDS2 ) into account; the larger the better for both metrics.

In the proposed self-consistency loss, the student model is

regulated to give the consistent predictions for the origi- 3.1. Ablation study on SED

nal and augmented samples. Such consistency constraint

between the original and augmented samples always holds In the random augmentation policy, the only hyperparameter

regardless of the correctness of the predictions. This is differ- that needs to be grid-searched is the maximum transformation

ent from ICT [3], which replaces the MeanTeacher loss with a magnitude (dmax ). Fig. 2 shows the grid-searching results. As

new loss in the form of Eq. (1). In ICT [5], the predictions of seen, trend-wise the performance improves as the maximum

original samples are from the MeanTeacher model. Denoted transformation magnitude increases (until 5 or 6).

0(w) 0(s) (w) (s) Table 1 shows the result of ablation study, in which each

as Ŷi and Ŷi , they substitute Ŷi and Ŷi from the

student model in Eq. (1). However, such pseudo labels highly proposed module is added step by step. As seen, the proposed

rely on the correctness of the MeanTeacher method predic- schemes lead to noticeable positive contributions, including

tions, while incorrect pseudo labels may mislead the student 2 http://dcase.community/challenge2021/task-sound-event-detection-and-

model; hence, reduce the training efficiency. separation-in-domestic-environmentsTable 1: Ablation study for RCT. Different modules are added step Table 2: Comparing the proposed SSL strategy with other strategies.

by step and each score is obtained by averaging at least three trials. Each score is obtained by at least averaging three trials.

Model PSDS1 (%) PSDS2 (%) Model PSDS1 (%) PSDS2 (%)

Baseline 34.74 53.66 Baseline [7] 34.74 53.66

+ Vanilla mixup [16] 34.89 57.85 SCT [11] 36.03 55.59

+ Hard mixup 36.35 57.42 ICT [3] 37.68 57.70

+ RandAugment 38.09 58.45 ICT+SCT [11] 37.03 58.70

+ ICT consistency [3] 38.02 59.19 RCT (proposed) 40.12 61.39

+ Self-consistency 40.12 61.39

Table 3: Comparing the proposed system with DCASE2021 top-

RandAugment, hard mixup and self-consistency. In addition, ranked submissions. All models are named in the form of network

we conduct experiments to substitute the latter two modules architecture plus the SSL strategy, where DA, IPL, NS stand for data

by vanilla mixup [16] and ICT-like consistency [3]. While augmentation, improved pseudo label [10], and noisy student [23]

vanilla mixup is slightly better in cross-trigger, hard mixup Model PSDS1 (%) PSDS2 (%)

gives more significant gain in response time. Self-consistency

outperforms the ICT-like consistency in both metrics, which CRNN (baseline) [7] 34.74 53.66

demonstrates the superiority of the proposed modules. FBCRNN+MLFL [24] 40.10 59.70

CRNN+IPL [10] 40.70 65.30

3.2. Comparison with other semi-supervised strategies CRNN+DA [25] 41.90 63.80

CRNN+HeavyAug. [9] 43.36 63.92

To evaluate the proposed SSL strategy collectively, we re- RCRNN+NS [23] 45.10 67.90

produce and compare with other widely used SSL strategies, SKUnit+ICT/SCT [16] 45.35 67.14

including ICT [3], SCT [11] and their combination, using CRNN+RCT (proposed) 43.95 67.11

the same baseline network as the proposed strategy. Table

2 shows the comparison results. Compared with the baseline strates the flexibility of RCT and its capacity in incorporating

MeanTeacher model, ICT largely improves the performance new audio transformations with a low tuning cost overhead.

by its proposed teacher-student consistency loss. SCT perfor- The proposed system is compared with DCASE2021 top-

mance is not as good as ICT, which indicates that the time ranked submissions in Table 3. The scores of DCASE2021

shift is not as efficient as interpolation (mixup). Combining submissions are directly quoted from the challenge results.

ICT and SCT does not outperform ICT alone, which indi- The proposed system noticeably outperforms all other sys-

cates that the naive addition of ICT and SCT [11] is not an tems employing the baseline CRNN network, which again

effective way to combine multiple different augmentations. verifies the superiority of the proposed RCT strategy. On the

In contrast, as shown in Table 1, the proposed strategy is able other side, the performance of the proposed system is very

to efficiently combine multiple different augmentations, and close to the two first-ranked submissions [8, 23]. They both

leverage them. Overall, the proposed method remarkably out- use more powerful networks, i.e. SKUnit [8] and RCRNN

performs ICT and SCT, due to the strength of each proposed [23], which were shown in [8,23] to be able to largely improve

modules and the efficient combination of them. the performance. The proposed framework is independent of

the network and can be applied along with more advanced

3.3. Comparison with DCASE2021 submissions architectures to achieve higher performance.

To further assess the efficacy of the proposed method and

conduct fair comparisons with the DCASE 2021 submitted 4. CONCLUSION

models, we also employed some existing post-processing and

ensembling techniques in our model. A temperature factor In this paper, we developed a novel semi-supervised learning

of 2.1 was used for inference temperature tuning [8], and (SSL) strategy, named random consistency training (RCT),

the class-wise median filters {3, 28, 7, 4, 7, 22, 48, 19, 10, 50} for sound event detection (SED) task. The proposed method

[22] were deployed. Moreover, model ensembling was ap- improves several core modules of SSL, including unsuper-

plied to fuse the predictions of multiple differently trained vised training loss and data augmentation schemes. It leads

models. We trained eleven models with different variants of to achieving high performance on the DCASE 2021 challenge

RCT: substituting time masking with frequency masking [14]; dataset. As for future work, we deem that better results could

adding FilterAug [9] into audio warping choices; randomly be obtained when RCT is combined with more advanced aug-

selecting two or one methods in audio warping; and, reduced mentations or architectures. Besides, since RCT is not task-

the weight of the MeanTeacher loss. We found that all dif- specific, it can potentially be applied in various audio process-

ferent variants achieve reasonable performance. This demon- ing tasks which is another broad avenue for future work.5. REFERENCES [13] C. Shorten and T. M. Khoshgoftaar, “A survey on image data augmentation for deep learning,” Journal of Big [1] T. Virtanen, M. D. Plumbley, and D. Ellis, Computa- Data, vol. 6, no. 1, pp. 1–48, 2019. tional analysis of sound scenes and events, Springer, 2018. [14] D. S. Park, W. Chan, Y. Zhang, Ch. Chiu, B. Zoph, E. D. Cubuk, and Q. V. Le, “Specaugment: A simple data aug- [2] N. Turpault, R. Serizel, A. Shah, and J. Sala- mentation method for automatic speech recognition,” in mon, “Sound event detection in domestic environ- INTERSPEECH, 2019. ments with weakly labeled data and soundscape syn- thesis,” in Acoustic Scenes and Events 2019 Workshop [15] B. McFee, E. J. Humphrey, and J. P. Bello, “A software (DCASE2019), 2019, p. 253. framework for musical data augmentation.,” in ISMIR, 2015, vol. 2015, pp. 248–254. [3] V. Verma, A. Lamb, J. Kannala, Y. Bengio, and D. Lopez-Paz, “Interpolation consistency training for [16] H. Zhang, M. Cisse, Y. N. Dauphin, and D. Lopez-Paz, semi-supervised learning,” in International Joint Con- “mixup: Beyond empirical risk minimization,” in ICLR, ference on Artificial Intelligence, 2019, pp. 3635–3641. 2018. [4] P. Bachman, O. Alsharif, and D. Precup, “Learning [17] Y. Tokozume, Y. Ushiku, and T. Harada, “Learning from with pseudo-ensembles,” Advances in neural informa- between-class examples for deep sound recognition,” in tion processing systems, vol. 27, pp. 3365–3373, 2014. ICLR, 2018. [5] L. Samuli and A. Timo, “Temporal ensembling for [18] E. D. Cubuk, B. Zoph, J. Shlens, and Q. V. Le, “Ran- semi-supervised learning,” in ICLR, 2017. daugment: Practical automated data augmentation with a reduced search space,” in CVPR, 2020, pp. 702–703. [6] A. Tarvainen and H. Valpola, “Mean teachers are bet- ter role models: Weight-averaged consistency targets [19] M. Antoine, L. Ivan, and S. Josef, “Learnable pooling improve semi-supervised deep learning results,” Ad- with context gating for video classification,” CoRR, vol. vances in Neural Information Processing Systems, vol. abs/1706.06905, 2017. 30, 2017. [20] D. P. Kingma and J. Ba, “Adam: A method for stochastic [7] L. JiaKai, “Mean teacher convolution system for dcase optimization,” in ICLR, 2015. 2018 task 4,” Detection and Classification of Acoustic [21] A. Mesaros, T. Heittola, and T. Virtanen, “Metrics for Scenes and Events, 2018. polyphonic sound event detection,” Applied Sciences, [8] X. Zheng, H. Chen, and Y. Song, “Zheng ustc team’s vol. 6, no. 6, 2016. submission for dcase2021 task4 – semi-supervised [22] Y. Liu, C. Chen, J. Kuang, and P. Zhang, “Semi- sound event detection,” Tech. Rep., DCASE2021 Chal- supervised sound event detection based on mean teacher lenge, June 2021. with power pooling and data augmentation,” Tech. Rep., [9] H. Nam, B-Y. Ko, G-T. Lee, S-H. Kim, W-H. Jung, S- DCASE2020 Challenge, June 2020. M. Choi, and Y-H. Park, “Heavily augmented sound [23] N. K. Kim and H. K. Kim, “Self-training with noisy stu- event detection utilizing weak predictions,” Tech. Rep., dent model and semi-supervised loss function for dcase DCASE2021 Challenge, June 2021. 2021 challenge task 4,” Tech. Rep., DCASE2021 Chal- [10] Y. Gong, C. Li, X. Wang, L. Ma, S. Yang, and Z. W. Wu, lenge, June 2021. “Improved pseudo-labeling method for semi-supervised [24] G. Tian, Y. Huang, Z. Ye, S. Ma, X. Wang, H. Liu, sound event detection,” Tech. Rep., DCASE2021 Chal- Y. Qian, R. Tao, L. Yan, K. Ouchi, J. Ebbers, and lenge, June 2021. R. Haeb-Umbach, “Sound event detection using met- ric learning and focal loss for dcase 2021 task 4,” Tech. [11] Ch. Koh, Y-S. Chen, Y-W. Liu, and M. R. Bai, “Sound Rep., DCASE2021 Challenge, June 2021. event detection by consistency training and pseudo- labeling with feature-pyramid convolutional recurrent [25] R. Lu, W. Hu, D. Zhiyao, and J. Liu, “Integrating neural networks,” in ICASSP, 2021, pp. 376–380. advantages of recurrent and transformer structures for sound event detection in multiple scenarios,” Tech. [12] Q. Xie, Z. Dai, E. Hovy, T. Luong, and Q. Le, “Unsuper- Rep., DCASE2021 Challenge, June 2021. vised data augmentation for consistency training,” Ad- vances in Neural Information Processing Systems, vol. 33, 2020.

You can also read