Federated Deep AUC Maximization for Heterogeneous Data with a Constant Communication Complexity

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Federated Deep AUC Maximization for Heterogeneous Data

with a Constant Communication Complexity

Zhuoning Yuan * 1 Zhishuai Guo * 1 Yi Xu 2 Yiming Ying 3 Tianbao Yang 1

Abstract distributed over multiple clients, e.g., mobile phones, organi-

zations. An important feature of FL is that the data remains

Deep AUC (area under the ROC curve)

at its own clients, allowing the preservation of data privacy.

Maximization (DAM) has attracted much atten-

This feature makes FL attractive not only to internet com-

tion recently due to its great potential for imbal-

panies such as Google and Apple but also to conventional

anced data classification. However, the research

industries such as those that provide services to hospitals

on Federated Deep AUC Maximization (FDAM)

and banks in the big data era (Rieke et al., 2020; Long et al.,

is still limited. Compared with standard federated

2020). Data in these industries is usually collected from

learning (FL) approaches that focus on decom-

people who are concerned about data leakage. But in order

posable minimization objectives, FDAM is more

to provide better services, large-scale machine learning from

complicated due to its minimization objective is

diverse data sources is important for addressing model bias.

non-decomposable over individual examples. In

For example, most patients in hospitals located in urban

this paper, we propose improved FDAM algo-

areas could have dramatic differences in demographic data,

rithms for heterogeneous data by solving the popu-

lifestyles, and diseases from patients who are from rural

lar non-convex strongly-concave min-max formu-

areas. Machine learning models (in particular, deep neural

lation of DAM in a distributed fashion, which can

networks) trained based on patients’ data from one hospital

also be applied to a class of non-convex strongly-

could dramatically bias towards its major population, which

concave min-max problems. A striking result of

could bring serious ethical concerns (Pooch et al., 2020).

this paper is that the communication complexity

of the proposed algorithm is a constant indepen- One of the fundamental issues that could cause model bias

dent of the number of machines and also inde- is data imbalance, where the number of samples from differ-

pendent of the accuracy level, which improves ent classes are skewed. Although FL provides an effective

an existing result by orders of magnitude. The framework for leveraging multiple data sources, most exist-

experiments have demonstrated the effectiveness ing FL methods still lack the capability to tackle the model

of our FDAM algorithm on benchmark datasets, bias caused by data imbalance. The reason is that most ex-

and on medical chest X-ray images from differ- isting FL methods are developed for minimizing the conven-

ent organizations. Our experiment shows that tional objective function, e.g., the average of a standard loss

the performance of FDAM using data from multi- function on all data, which are not amenable to optimizing

ple hospitals can improve the AUC score on test- more suitable measures such as area under the ROC curve

ing data from a single hospital for detecting life- (AUC) for imbalanced data. It has been recently shown

threatening diseases based on chest radiographs. that directly maximizing AUC for deep learning can lead

to great improvements on real-world difficult classification

tasks (Yuan et al., 2020b). For example, Yuan et al. (2020b)

1. Introduction reported the best performance by DAM on the Stanford

CheXpert Competition for interpreting chest X-ray images

Federated learning (FL) is an emerging paradigm for large- like radiologists (Irvin et al., 2019).

scale learning to deal with data that are (geographically)

However, the research on FDAM is still limited. To the

*

Equal contribution 1 Department of Computer Science, Uni- best of our knowledge, Guo et al. (2020a) is the only work

versity of Iowa 2 Machine Intelligence Technology, Alibaba Group that was dedicated to FDAM by solving the non-convex

3

Department of Mathematics and Statistics, State University of strongly-concave min-max problem in a distributed man-

New York at Albany. Correspondence to: Tianbao Yang . ner. Their algorithm (CODA) is similar to the standard

FedAvg method (McMahan et al., 2017) except that the

Proceedings of the 38 th International Conference on Machine periodic averaging is applied both to the primal and the

Learning, PMLR 139, 2021. Copyright 2021 by the author(s).Federated Deep AUC Maximization with a Constant Communication Complexity

Table 1. The summary of sample and communication complexities of different algorithms for FDAM under a µ-PL condition in both

heterogeneous and homogeneous settings, where K is the number of machines and µ 1. NPA denotes the naive parallel (large

mini-batch) version of PPD-SG (Liu et al., 2020) for DAM, where M denotes the batch size in the NPA. The ⇤ indicate the results that are

e suppresses a logarithmic factor.

derived by us. O(·)

Heterogeneous

⇣ Data

⌘ Homogeneous

⇣ Data

⌘ Sample

⇣ Complexity

⌘

NPA (M < 1

) e

O 1

+ 1 e

O 1

+ 1 e M + 21

O

Kµ✏ KM µ2 ✏ µ✏ KM µ2 ✏ µ✏ µ✏ µ K✏

⇣ ⌘⇤ ⇣ ⌘⇤ ⇣ ⌘⇤

NPA (M 1

) e 1

O e 1

O e M

O

Kµ✏ µ µ µ

⇣ ⌘ ⇣ ⌘⇤ ⇣ ⌘

CODA+ (CODA) e K + 11/2 + 3/21 1/2

O e K

O e 1 + 21

O

µ

⇣ ⌘ µ✏ µ ✏ ⇣ µ⌘ ⇣ µ✏ µ K✏

⌘

CODASCA e 1

O e 1

O e 1 + 21

O

µ µ µ✏ µ K✏

dual variables. Nevertheless, their results on FDAM are • Third, we conduct experiments on benchmark datasets

not comprehensive. By a deep investigation of their algo- to verify our theory by showing CODASCA can enjoy a

rithms and analysis, we found that (i) although their FL larger communication window size than CODA+ without

algorithm CODA was shown to be better than the naive sacrificing the performance. Moreover, we conduct empir-

parallel algorithm (NPA) with a small mini-batch for DAM, ical studies on medical chest X-ray images from different

the NPA using a larger mini-batch at local machines can hospitals by showing that the performance of CODASCA

enjoy a smaller communication complexity than CODA; (ii) using data from multiple organizations can improve the

the communication complexity of CODA for homogeneous performance on testing data from a single hospital.

data becomes better than that was established for the hetero-

geneous data, but is still worse than that of NPA with a large 2. Related Work

mini-batch at local clients. These shortcomings of CODA

for FDAM motivate us to develop better federated averag- Federated Learning (FL). Many empirical stud-

ing algorithms and analysis with a better communication ies (Povey et al., 2014; Su & Chen, 2015; McMahan et al.,

complexity without sacrificing the sample complexity. 2016; Chen & Huo, 2016; Lin et al., 2020b; Kamp et al.,

2018; Yuan et al., 2020a) have shown that FL exhibits

This paper aims to provide more comprehensive results

good empirical performance for distributed deep learning.

for FDAM, with a focus on improving the communication

For a more thorough survey of FL, we refer the readers

complexity of CODA for heterogeneous data. In particular,

to (McMahan et al., 2019). This paper is closely related

our contributions are summarized below:

to recent studies on the design of distributed stochastic

• First, we provide a stronger baseline with a simpler al- algorithms for FL with provable convergence guarantee.

gorithm than CODA named CODA+, and establish its

The most popular FL algorithm is Federated Averaging (Fe-

complexity in both homogeneous and heterogeneous data

dAvg) (McMahan et al., 2017), also referred to as local

settings. Although CODA+ has a slight change from

SGD (Stich, 2019). Stich (2019) is the first that establishes

CODA, its analysis is much more involved than that of

the convergence of local SGD for strongly convex functions.

CODA, which is based on the duality gap analysis instead

Yu et al. (2019b;a) establishes the convergence of local SGD

of the primal objective gap analysis.

and their momentum variants for non-convex functions. The

• Second, we propose a new variant of CODA+ named CO- analysis in (Yu et al., 2019b) has exhibited the difference of

DASCA with a much improved communication complex- communication complexities of local SGD in homogeneous

ity than CODA+. The key thrust is to incorporate the idea and heterogeneous data settings, which is also discovered

of stochastic controlled averaging of SCAFFOLD (Karim- in recent works (Khaled et al., 2020; Woodworth et al.,

ireddy et al., 2020) into the framework of CODA+ to cor- 2020b;a). These latter studies provide a tight analysis of

rect the client-drift for both local primal updates and local local SGD in homogeneous and/or heterogeneous data set-

dual updates. A striking result of CODASCA under a PL tings, improving its upper bounds for convex functions and

condition for deep learning is that its communication com- strongly convex functions than some earlier works, which

plexity is independent of the number of machines and the sometimes improve over large mini-batch SGD, e.g., when

targeted accuracy level, which is even better than CODA+ the level of heterogeneity is sufficiently small.

in the homogeneous data setting. The analysis of CO-

DASCA is also non-trivial that combines the duality gap Haddadpour et al. (2019) improve the complexities of lo-

analysis of CODA+ for a non-convex strongly-concave cal SGD for non-convex optimization by leveraging the

min-max problem and the variance reduction analysis of Polyak-Łojasiewicz (PL) condition. (Karimireddy et al.,

SCAFFOLD. The comparison between CODASCA and 2020) propose a new FedAvg algorithm SCAFFOLD by

CODA+ and the NPA for FDAM is shown in Table 1. introducing control variates (variance reduction) to correctFederated Deep AUC Maximization with a Constant Communication Complexity

for the ‘client-drift’ in the local updates for heterogeneous 2017; McKinney et al., 2020; Wang et al., 2016). Some

data. The communication complexities of SCAFFOLD works have already achieved expert-level performance in

are no worse than that of large mini-batch SGD for both different tasks (Ardila et al., 2019; McKinney et al., 2020;

strongly convex and non-convex functions. The proposed Litjens et al., 2017). Recently, Yuan et al. (2020b) employ

algorithm CODASCA is inspired by the idea of stochastic DAM for medical image classification and achieve great

controlled averaging of SCAFFOLD. However, the analysis success on two challenging tasks, namely CheXpert com-

of CODASCA for non-convex min-max optimization under petition for chest X-ray image classification and Kaggle

a PL condition of the primal objective function is non-trivial competition for melanoma classification based on skin le-

compared to that of SCAFFOLD. sion images. However, to the best of our knowledge, the

application of FDAM methods on medical datasets from

AUC Maximization. This work builds on the foundations

different hospitals have not be thoroughly investigated.

of stochastic AUC maximization developed in many previ-

ous works. Ying et al. (2016) address the scalability issue

of optimizing AUC by introducing a min-max reformula- 3. Preliminaries and Notations

tion of the AUC square surrogate loss and solving it by a

We consider federated learning of deep neural networks

convex-concave stochastic gradient method (Nemirovski

by maximizing the AUC score. The setting is the same to

et al., 2009). Natole et al. (2018) improve the conver-

that was considered as in (Guo et al., 2020a). Below, we

gence rate by adding a strongly convex regularizer into the

present some preliminaries and notations, which are mostly

original formulation. Based on the same min-max formu-

the same as in (Guo et al., 2020a). In this paper, we consider

lation as in (Ying et al., 2016), Liu et al. (2018) achieve

the following min-max formulation for distributed problem:

an improved convergence rate by developing a multi-stage

K

algorithm by leveraging the quadratic growth condition of 1 X

the problem. However, all of these studies focus on learning min max f (w, a, b, ↵) = fk (w, a, b, ↵), (1)

w2Rd ↵2R K

k=1

a linear model, whose corresponding problem is convex and (a,b)2R2

strongly concave. Yuan et al. (2020b) propose a more robust where K is the total number of machines. This formula-

margin-based surrogate loss for the AUC score, which can tion covers a class of non-convex strongly concave min-

be formulated as a similar min-max problem to the AUC max problems and specifically for the AUC maximization,

square surrogate loss. fk (w, a, b, ↵) is defined below.

Deep AUC Maximization (DAM). (Rafique et al., 2018) fk (w, a, b, ↵) = Ezk [Fk (w, a, b, ↵; zk )]

is the first work that develops algorithms and convergence ⇥

= Ezk (1 p)(h(w; xk ) a)2 I[yk =1]

theories for weakly convex and strongly concave min-max

problems, which is applicable to DAM. However, their con- + p(h(w; xk ) b)2 I[yk = 1] (2)

vergence rate is slow for a practical purpose. Liu et al. (2020) k

+ 2(1 + ↵)(ph(w; x )I[yk = 1]

consider improving the convergence rate for DAM under ⇤

k

a practical PL condition of the primal objective function. (1 p)h(w, x )I[yk =1] ) p(1 p)↵2 .

Guo et al. (2020b) further develop more generic algorithms

for non-convex strongly-concave min-max problems, which where zk = (xk , y k ) ⇠ Pk , Pk is the data distribution on

can also be applied to DAM. There are also several stud- machine k, p is the ratio of positive data. When k =

ies (Yan et al., 2020; Lin et al., 2020a; Luo et al., 2020; l , 8k 6= l, this is referred to as the homogeneous data

Yang et al., 2020) focusing on non-convex strongly concave setting; otherwise heterogeneous data setting.

min-max problems without considering the application to Notations. We define the following notations:

DAM. Based on Liu et al. (2020)’s algorithm, Guo et al.

(2020a) propose a communication-efficient FL algorithm v = (wT , a, b)T , (v) = max f (v, ↵),

↵

(CODA) for DAM. However, its communication cost is still 1 2

high for heterogeneous data. s (v) = (v) + kv vs 1k ,

2

DL for Medical Image Analysis. In past decades, machine 1

f s (v, ↵) = f (v, ↵) + kv vs 1k

2

learning, especially deep learning methods have revolution- 2

ized many domains such as machine vision, natural language 1

processing. For medical image analysis, deep learning meth- Fks (v, ↵; zk ) = Fk (v, ↵; zk ) + kv vs 1k

2

2

ods are also showing great potential such as in classification

v⇤ = arg min (v), v⇤ s = arg min s (v).

of skin lesions (Esteva et al., 2017; Li & Shen, 2018), in- v v

terpretation of chest radiographs (Ardila et al., 2019; Irvin

et al., 2019), and breast cancer screening (Bejnordi et al., Assumptions. Similar to (Guo et al., 2020a), we make the

following assumptions throughout this paper.Federated Deep AUC Maximization with a Constant Communication Complexity

Assumption 1. Algorithm 1 CODA+

(i) There exist v0 , 0 > 0 such that (v0 ) (v⇤ ) 0 . 1: Initialization: (v0 , ↵0 , ).

(ii) PL condition: (v) satisfies the µ-PL condition, i.e., 2: for s = 1, ..., S do

µ( (v) (v⇤ )) 12 kr (v)k2 ; (iii) Smoothness: For 3: vs , ↵s = DSG+(vs 1 , ↵s 1 , ⌘s , I s , );

any z, f (v, ↵; z) is `-smooth in v and ↵. (v) is L-smooth, 4: end for

i.e., kr (v1 ) r (v2 )k Lkv1 v2 k. 5: Return vS , ↵S .

(iv) Bounded variance:

E[krv fk (v, ↵) rv Fk (v, ↵; z)k2 ] 2 Algorithm 2 DSG+(v0 , ↵0 , ⌘, T, I, )

(3) Each machine does initialization: v0k = v0 , ↵0k = ↵0 ,

E[|r↵ fk (v, ↵) r↵ Fk (v, ↵; z)|2 ] 2

.

for t = 0, 1, ..., T 1 do

Each machine k updates its local solution in parallel:

To quantify the drifts between different clients, we introduce k

vt+1 = vtk ⌘(rv Fk (vtk , ↵tk ; zkt ) + (vtk v0 )),

the following assumption. ↵t+1 = ↵tk + ⌘r↵ Fk (vtk , ↵tk ; zkt ),

k

Assumption 2. Bounded client drift: if t + 1 mod I = 0 then

PK

K

k

vt+1 =K 1 k

vt+1 , ⇧ communicate

1 X k=1

krv fk (v, ↵) rv f (v, ↵)k2 D2 P

K

K k

↵t+1 = 1 k

↵t+1 , ⇧ communicate

k=1 K

(4) k=1

K

1 X end if

kr↵ fk (v, ↵) r↵ f (v, ↵)k2 D2 . end for✓

K ◆

k=1 P

K P

T P

K P

T

Return v̄ = 1

K

1

T vtk , ↵

¯ = 1

K

1

T ↵tk .

k=1 t=1 k=1 t=1

Remark. D quantifies the drift between the local objectives

and the global objective. D = 0 denotes the homogeneous

data setting that all the local objectives are identical. D > 0 smaller communication complexity for homogeneous data

corresponds to the heterogeneous data setting. than that for heterogeneous data while the previous analysis

of CODA yields the same communication complexity for

4. CODA+: A stronger baseline homogeneous data and heterogeneous data.

In this section, we present a stronger baseline than We have the following lemma to bound the convergence for

CODA (Guo et al., 2020a). The motivation is that (i) the the subproblem in each s-th stage.

CODA algorithm uses a step to compute the dual variable Lemma 1. (One call of Algorithm 2) Let (v̄, ↵ ¯ ) be the

from the primal variable by using sampled data from all output of Algorithm 2. Suppose Assumption 1 and 2 hold.

clients; but we find this step is unnecessary by an improved By running Algorithm 2 with given input v0 , ↵0 for T

analysis; (ii) the complexity of CODA for homogeneous iterations, = 2`, and ⌘ min( 3`+3`12 /µ2 , 4`

1

), we have

data is not given in its original paper. Hence, CODA+ is a for any v and ↵

simplified version of CODA but with much refined analysis.

We present the steps of CODA+ in Algorithm 1. It is similar 1 1

to CODA that uses stagewise updates. In s-th stage, a E[f s (v̄, ↵) f s (v, ↵

¯ )] kv0 vk2 + (↵0 ↵)2

⌘T ⌘T

strongly convex strongly concave subproblem is constructed: ✓ 2 ◆

3` 3` 3⌘ 2

+ + (12⌘ 2 I 2 + 36⌘ 2 I 2 D2 )II>1 + ,

2µ2 2 K

| {z }

min max f (v, ↵) + kv v0s k2 , (5) A1

v ↵ 2

where v0s is the output of the previous stage. where µ2 = 2p(1 p) is the strong concavity coefficient of

f (v, ↵) in ↵.

CODA+ improves upon CODA in two folds. First, CODA+

algorithm is more concise since the output primal and dual Remark. Note that the term A1 on the RHS is the drift of

variables of each stage can be directly used as input for clients caused by skipping communication. When D = 0,

the next stage, while CODA needs an extra large batch of i.e., the machines have homogeneous data distribution, we

data after each stage to compute the dual variable. This need ⌘I = O K 1

, then A1 can be merged with the last

modification not only reduces the sample complexity, but term. When D > 0, we need ⌘I 2 = O K 1

, which means

also makes the algorithm applicable to a boarder family that I has to be smaller in heterogeneous data setting and

of nonconvex min-max problems. Second, CODA+ has a thus the communication complexity is higher.Federated Deep AUC Maximization with a Constant Communication Complexity

Remark. The key difference between the analysis of To correct the client drift, we propose to leverage the idea of

CODA+ and that of CODA lies at how to handle the term stochastic controlled averaging due to (Karimireddy et al.,

(↵0 ↵)2 in Lemma 1. In CODA, the initial dual variable 2020). The key idea is to maintain and update a control

↵0 is computed from the initial primal variable v0 , which variate to accommodate the client drift, which is taken into

reduces the error term (↵0 ↵)2 to one similar to kv0 vk2 , account when updating the local solutions. In the proposed

which is then bounded by the primal objective gap due to algorithm CODASCA, we apply control variates to both

the PL condition. However, since we do not conduct the primal and dual variables. CODASCA shares the same

extra computation of ↵0 from v0 , our analysis directly deals stagewise framework as CODA+, where a strongly convex

with such error term by using the duality gap of f s . This strongly concave subproblem is constructed and optimized

technique is originally developed by (Yan et al., 2020). in a distributed fashion approximatly in each stage. The

steps of CODASCA are presented in Algorithm 3 and Algo-

µ/L̂

Theorem 1. Define L̂ = L + 2`, c = 5+µ/L̂

. rithm 4. Below, we describe the algorithm in each stage.

Set = 2`, ⌘s = ⌘0 exp( (s 1)c), Ts = Each stage has R communication rounds. Between two

212

⌘0 min(`,µ2 ) exp((s 1)c). To return vS such that rounds, there are I local updates, and each machine k does

E[ (vS ) (v )] ✏, it suffices to choose S

⇤

the local updates as

✓ ⇢ ◆

5L̂+µ 2 0 2⌘0 12( 2 )

O µ max log ✏ , log S + log ✏ 5K . k

vr,t+1 k

= vr,t ⌘l (rv Fks (vr,t

k k

, ↵r,t ; ztr,t ) ckv + cv )

✓ ⇣ ⌘◆ k k

The iteration complexity is O e max L̂ ↵r,t+1 = ↵r,t + ⌘l (r↵ Fks (vr,t

k k

, ↵r,t ; zkr,t ) ck↵ + c↵ ),

µ✏⌘0 K , µ2 K✏

0

⇣ ⌘

and the communication complexity is O e K where ckv , cv are local and global control variates for the

µ

primal variable, and ck↵ , c↵ are local and global control vari-

by setting Is = ⇥( K⌘ 1

) if D = 0, and is

✓ ✓

1/2

s ◆◆ ates for the dual variable. Note that rv Fks (vr,t k k

, ↵r,t ; ztr,t )

e max K + L̂1/2 and r↵ Fk (vr,t , ↵r,t ; zr,t ) are unbiased stochastic gradient

s k k k

O µ

0

,

µ(⌘0 ✏)1/2 µ

K

+ µ3/2 ✏1/2

by set-

on local data. However, they are biased estimate of global

ting Is = ⇥( pK⌘ 1 e suppresses

) if D > 0, where O

s gradient when data on different clients are heterogeneous.

logarithmic factors. Intuitively, the term ckv + cv and ck↵ + c↵ work to correct

the local gradients to get closer to the global gradient. They

Remark. Due to the PL condition, the step size ⌘ decreases also play a role of reducing variance of stochastic gradi-

geometrically. Accordingly, I increases geometrically due ents, which is helpful as well to reduce the communication

to Lemma 1, and I increases with a faster rate when the data complexity in the homogeneous data setting.

are homogeneous than that when data are heterogeneous. In At each communication round, the primal and dual variables

result, the total number of communications in homogeneous on all clients get aggregated, averaged and broadcast to all

setting is much less than that in heterogeneous setting. clients. The control variates c at r-th round get updated as

5. CODASCA ckv = ckv cv +

1 k

(vr 1 vr,I )

I⌘l

Although CODA+ has a highly reduced communication (6)

1

complexity for homogeneous data, it is still suffering from ck↵ = ck↵ c↵ + (↵k ↵r 1 ),

I⌘l r,I

a high communication complexity for heterogeneous data.

Even for the homogeneous data, CODA+ has a worse com- which is equivalent to

munication complexity with a dependence on the number of

◆ ⇣

clients K than the NPA algorithm with a large batch size. 1X

I

ckv = rv fks (vr,t

k k

, ↵r,t ; zkr,t )

Can we further reduce the communication complexity for I t=1

(7)

FDAM for both homogeneous and heterogeneous data I

1X

✓ ⌘

without using a large batch size? ck↵ = r↵ fks (vr,t

k k

, ↵r,t ; zkr,t ).

I t=1

The main reason for the degeneration in the heterogeneous

data setting is the data difference. Even at global optimum Notice that they are simply the average of stochastic gradi-

(v⇤ , ↵⇤ ), the gradient of local functions in different clients ents used in this round. An alternative way to compute the

could be different and non-zero. In the homogeneous data control variates is by computing the stochastic gradient with

setting, different clients still produce different solutions due a large batch of extra samples at each client, but this would

to stochastic error (cf. the ⌘ 2 2 I term of A1 in Lemma 1). bring extra cost and is unnecessary. cv and c↵ are averages

These together contribute to the client drift. of ckv and ck↵ over all clients. After the local primal and dualFederated Deep AUC Maximization with a Constant Communication Complexity

Algorithm 3 CODASCA Algorithm 4 DSGSCA+(v0 , ↵0 , ⌘l , ⌘g , I, R, )

1: Initialization: (v0 , ↵0 , ). Each machine does initialization: v0,0 k

= v0 , ↵0,0 k

= ↵0 ,

2: for s = 1, ..., S do k k

cv = 0, c↵ = 0

3: vs , ↵s = DSGSCA+(vs 1 , ↵s 1 , ⌘l , ⌘g , Is , Rs , ); for r = 1, ..., R do

4: end for for t = 0, 1, ..., I 1 do

5: Return vS , ↵S . Each machine k updates its local solution in parallel:

k k

vr,t+1 = vr,t ⌘l (rv Fks (vr,t

k k

, ↵r,t ; zkr,t ) ckv +cv ),

variables are averaged, an extrapolation step with ⌘g > 1 is

↵r,t+1 = ↵r,t+⌘l (r↵ Fk (vr,t , ↵r,t ; zkr,t ) ck↵+c↵ ),

k k s k k

performed, which will boost the convergence.

end for

In order to establish the convergence of CODASCA, we first ckv = ckv cv + I⌘1 l (vr 1 vr,I k

)

present a key lemma below. k k 1 k

c↵ = c↵ c↵ + I⌘l (↵r,I ↵r 1 )

Lemma 2. (One call of Algorithm 4) Under the same PK PK

setting as in Theorem 2, with ⌘˜ = ⌘l ⌘g I 40` µ2

2 , for

cv = K 1

ckv , c↵ = K 1

ck↵ ⇧ communicate

k=1 k=1

v = arg min f (v, ↵r̃ ), ↵ = arg max f (vr̃ , ↵) we have

0 s 0 s

P

K PK

v ↵

vr = 1

K

k

vr,I , ↵r = 1

K

k

↵r,t ⇧ communicate

s 0 s 0 2 0 2 k=1 k=1

E[f (vr̃ , ↵ ) f (v , ↵r̃ )] kv0 vk vr = vr 1 + ⌘g (vr vr 1 ),

⌘l ⌘g T

↵r = ↵r 1 + ⌘g (↵r ↵r 1 )

2 10⌘l 2 10⌘l ⌘g 2

Broadcast vr , ↵r , cv , c↵ ⇧ communicate

+ (↵0 ↵ 0 )2 + +

⌘l ⌘g T ⌘g K end for

| {z }

A2 Return vr̃ , ↵r̃ where r̃ is randomly sampled from 1, ..., R

where T = I · R is the number of iterations for each stage. (ii) Each stage has the same number of communication

Remark. Compared the above bound with that in Lemma 1, rounds. However, Is increases geometrically. Therefore, the

in particular the term A2 vs the term A1 , we can see that number of iterations and samples in a stage increase geomet-

CODASCA will not be affected by the data heterogeneity rically. Theoretically, we can also set ⌘ls to the same value as

D > 0, and the stochastic variance is also much reduced. the one in the last stage, correspondingly Is can be set as a

As will seen in the next theorem, the value of ⌘˜ and R will fixed large value. But this increases the number of required

keep the same in all stages. Therefore, by decreasing local samples without further speeding up the convergence. Our

step size ⌘l geometrically, the communication window size setting of Is is a balance between skipping communications

Is will increase geometrically to ensure ⌘˜ O(1). and reducing sample complexity. For simplicity, we use the

fixed setting of Is to compare CODASCA and the baseline

The convergence result of CODASCA is presented below. CODA+ in our experiment to corroborate the theory.

p

Theorem 2. Define ⌘ c = 4`+ 53 L̂. Set ⌘g = K,

L̂ = L+2`, 248

⇣ (iii) The local step size ⌘l of CODASCA decreases p similarly

2µ1 ⌘˜

Is = I0 exp c+2µ1 (s 1) , R = 1000

⌘˜µ2 , ⌘l = ⌘g Is =

s

as the step size ⌘ in CODA+. But Is = O(1/( K⌘ p l )) in

s

⇣ ⌘

p ⌘˜ 2µ µ2 CODASCA increases faster than that Is = O(1/( K⌘s ))

exp c+2µ (s 1) , ⌘˜ min{ 3`+3`12 /µ2 , 40` 2 }.

KI0 ⇢ in CODA+ on heterogeneous data. It is noticeable that dif-

After S = O(max c+2µ

log 4✏0 c+2µ 160L̂S ⌘˜

2

ferent from CODA+, we do not need Assumption 2 which

2µ ✏ , 2µ log (c+2µ)✏ KI0 )

bounds the client drift, meaning that CODASCA can be ap-

stages, the output vS satisfies E[ (vS ) (v )] ✏. The ⇤

plied to optimize the global objective even if local objectives

⇣ ⌘

communication complexity is O e 1

. The iteration com- arbitrarily deviate from the global function.

⇣ ⌘ µ

e max{ 1 , 21 } .

plexity is O µ✏ µ K✏ 6. Experiments

⇣ ⌘

Remark. (i) The number of communications is O e 1 , in- In this section, we first verify the effectiveness of CO-

µ

dependent of number of clients K and the accuracy level ✏. DASCA compared to CODA+ on various datasets, including

This is a significant improvement over CODA+, which has two benchmark datasets, i.e., ImageNet, CIFAR100 (Deng

a communication complexity of O e K/µ + 1/(µ3/2 ✏1/2 ) et al., 2009; Krizhevsky et al., 2009) and a constructed large-

in heterogeneous setting. Moreover, O e (1/(µ)) is a nearly scale chest X-ray dataset. Then, we demonstrate the effec-

optimal rate up to a logarithmic factor, since O(1/µ) is tiveness of FDAM on improving the performance on a single

the lower bound communication complexity of distributed domain (CheXpert) by using data from multiple sources. For

strongly convex optimization (Karimireddy et al., 2020; Ar- notations, K denotes the number of “clients” (# of machines,

jevani & Shamir, 2015) and strongly convexity is a stronger # of data sources) and I denotes the communication win-

condition than the PL condition. dow size. The code used for the experiments are availableFederated Deep AUC Maximization with a Constant Communication Complexity

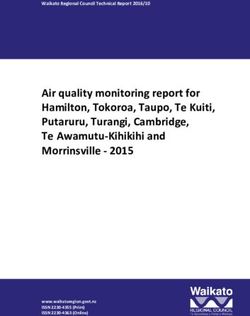

Figure 1. Top row: the testing AUC score of CODASCA vs # of iterations for different values of I on ImageNet-IH and CIFAR100-IH

with imratio = 10% and K=16, 8 on Densenet121. Bottom row: the achieved testing AUC vs different values of I for CODASCA and

CODA+. The AUC score in the legend in top row figures represent the AUC score at the last iteration.

we follow similar steps to construct imbalanced heteroge-

Table 2. Statistics of Medical Chest X-ray Datasets.

neous data. We keep the testing/validation set untouched

Dataset Source Samples

CheXpert Stanford Hospital (US) 224,316 and keep them balanced. For imbalance ratio (imratio),

ChestXray8 NIH Clinical Center (US) 112,120 we explore two ratios: 10% and 30%. We refer to the

PadChest Hospital San Juan (Spain) 110,641 constructed datasets as ImageNet-IH (10%), ImageNet-IH

MIMIC-CXR BIDMC (US) 377,110 (30%), CIFAR100-IH (10%), CIFAR100-IH (30%). Due

ChestXrayAD H108 and HMUH (Vietnam) 15,000 to the limited space, we only report imratio=10% with

DenseNet121 and defer the other results to supplement.

at https://github.com/yzhuoning/LibAUC.

Parameters and Settings. We train Desenet121 on all

Chest X-ray datasets. Five medical chest X-ray datasets,

datasets. For the parameters in CODASCA/CODA+, we

i.e., CheXpert, ChestXray14, MIMIC-CXR, PadChest,

tune 1/ in [500, 700, 1000] and ⌘ in [0.1, 0.01, 0.001]. For

ChestXray-AD (Irvin et al., 2019; Wang et al., 2017; John-

learning rate schedule, we decay the step size by 3 times

son et al., 2019; Bustos et al., 2020; Nguyen et al., 2020)

every T0 iterations, where T0 is tuned in [2000, 3000, 4000].

are collected from different organizations. The statistics

We experiment with a fixed value of I selected from [1,

of these medical datasets are summarized in Table 2. We

32, 64, 128, 512, 1024] and we include experiments with

construct five binary classification tasks for predicting five

increasing Is in the supplement. We tune ⌘g in [1.1, 1, 0.99,

popular diseases, Cardiomegaly (C0), Edema (C1), Con-

0.999]. The local batch size is set to 32 for each machine.

solidation (C2), Atelectasis (C3), P. Effusion (C4), as in

We run a total of 20000 iterations for all experiments.

CheXpert competition (Irvin et al., 2019). These datasets

are naturally imbalanced and heterogeneous due to different 6.1. Comparison with CODA+

patients’ populations, different data collection protocols and

We plot the testing AUC on ImageNet (10%) vs # of iter-

etc. We refer to the whole medical dataset as ChestXray-IH.

ations for CODASCA and CODA+ in Figure 1 (top row)

Imbalanced and Heterogeneous (IH) Benchmark by varying the value of I for different values of K. Results

Datasets. For benchmark datasets, we manually construct on CIFAR100 are shown in the Supplement. In the bottom

the imbalanced heterogeneous dataset. For ImageNet, we row of Figure 1, we plot the achieved testing AUC score vs

first randomly select 500 classes as positive class and 500 different values of I for CODASCA and CODA+. We have

classes as negative class. To increase data heterogeneity, the following observations:

we further split all positive/negative classes into K groups • CODASCA enjoys a larger communication window

so that each split only owns samples from unique classes size. Comparing CODASCA and CODA+ in the bottom

without overlapping with that of other groups. To increase panel of Figure 1, we can see that CODASCA enjoys a

data imbalance level, we randomly remove some samples larger communication window size without hurting the per-

from positive classes for each machine. Please note that due formance than CODA+, which is consistent with our theory.

to this operation, the whole sample set for different K is

different. We refer to the proportion of positive samples in • CODASCA is consistently better for different values

all samples as imbalance ratio (imratio). For CIFAR100, of K. We compare the largest value of I such that the perfor-

mance does not degenerate too much compared with I = 1,Federated Deep AUC Maximization with a Constant Communication Complexity

Table 3. Performance on ChestXray-IH testing set when K=16. Table 5. Performance of FDAM on Chexpert validation set for

Method I C0 C1 C2 C3 C4 DenSenet161.

1 0.8472 0.8499 0.7406 0.7475 0.8688 of sources C0 C1 C2 C3 C4 AVG

CODA+ 512 0.8361 0.8464 0.7356 0.7449 0.8680 K=1 0.8946 0.9527 0.9544 0.9008 0.9556 0.9316

CODASCA 512 0.8427 0.8457 0.7401 0.7468 0.8680 K=2 0.8938 0.9615 0.9568 0.9109 0.9517 0.9333

CODA+ 1024 0.8280 0.8451 0.7322 0.7431 0.8660 K=3 0.9008 0.9603 0.9568 0.9127 0.9505 0.9356

CODASCA 1024 0.8363 0.8444 0.7346 0.7481 0.8674 K=4 0.8986 0.9615 0.9561 0.9128 0.9564 0.9367

K=5 0.8986 0.9612 0.9568 0.9130 0.9552 0.9370

Table 4. Performance of FDAM on Chexpert validation set for

DenseNet121. Parameters and Settings. Due to the limited computing

#of sources C0 C1 C2 C3 C4 AVG

K=1 0.9007 0.9536 0.9542 0.9090 0.9571 0.9353 resources, we resize all images to 320x320. We follow

K=2 0.9027 0.9586 0.9542 0.9065 0.9583 0.9361 the two stage method proposed in (Yuan et al., 2020b) and

K=3 0.9021 0.9558 0.9550 0.9068 0.9583 0.9356 compare with the baseline on a single machine with a single

K=4 0.9055 0.9603 0.9542 0.9072 0.9588 0.9372 data source (CheXpert training data) (K=1) for learning

K=5 0.9066 0.9583 0.9544 0.9101 0.9584 0.9376

DenseNet121, DenseNet161. More specifically, we first

which is denoted by Imax . From the bottom figures of Fig- train a base model by minimizing the Cross-Entropy loss on

ure 1, we can see that the Imax value of CODASCA on Ima- CheXpert training dataset using Adam with a initial learning

geNet is 128 (K=16) and 512 (K=8), respectively, and that rate of 1e-5 and batch size of 32 for 2 epochs. Then, we

of CODA+ on ImageNet is 32 (K=16) and 128 (K=8). This discard the trained classifier, use the same pretrained model

demonstrates that CODASCA enjoys consistent advantage for initializing the local models at all machines and continue

over CODA+, i.e., when K = 16, Imax CODASCA CODA+

/Imax = 4, training using CODASCA. For the parameter tuning, we try

and when K = 8, Imax CODASCA CODA+

/Imax = 4. The same phe- I=[16, 32, 64, 128], learning rate=[0.1, 0.01] and we fix

nomena occur on CIFAR100 data. =1e-3, T0 =1000 and batch size=32.

Next, we compare CODASCA with CODA+ on the Results. We report all results in term of AUC score on the

ChestXray-IH medical dataset, which is also highly het- CheXpert validation data in Table 4 and Table 5. We can see

erogeneous. We split the ChestXray-IH data into K = 16 that using more data sources from different organizations

groups according to the patient ID and each machine only can efficiently improve the performance on CheXpert. For

owns samples from one organization without overlapping DenseNet121, the average improvement across all 5 classifi-

patients. The testing set is the collection of 5% data sam- cation tasks from K = 1 to K = 5 is over 0.2% which is

pled from each organization. In addition, we use train/val significant in light of the top CheXpert leaderboard results.

split = 7:3 for the parameter tuning. We run CODASCA Specifically, we can see that CODASCA with K=5 achieves

and CODA+ with the same number of iterations. The per- the highest validation AUC score on C0 and C3, and with

formance on testing set are reported in Table 3. From the K=4 achieves the highest on C1 and C4. For DenseNet161,

results, we can observe that CODASCA performs consis- the improvement of average AUC is over 0.5%, which dou-

tently better than CODA+ on C0, C2, C3, C4. bles the 0.2% improvement for DenseNet121.

6.2. FDAM for improving performance on CheXpert

7. Conclusion

Finally, we show that FDAM can be used to leverage data

from multiple hospitals to improve the performance at a In this work, we have conducted comprehensive studies of

single target hospital. For this experiment, we choose CheX- federated learning for deep AUC maximization. We ana-

pert data from Stanford Hospital as the target data. Its lyzed a stronger baseline for deep AUC maximization by

validation data will be used for evaluating the performance establishing its convergence for both homogeneous data

of our FDAM method. Note that improving the AUC score and heterogeneous data. We also developed an improved

on CheXpert is a very challenging task. The top 7 teams variant by adding control variates to the local stochastic

on CheXpert leaderboard differ by only 0.1% 1 . Hence, gradients for both primal and dual variables, which dramat-

we consider any improvement over 0.1% significant. Our ically reduces the communication complexity. Besides a

procedure is following: we gradually increase the number strong theory guarantee, we exhibit the power of FDAM

of data resources, e.g., K = 1 only includes the CheXpert on real world medical imaging problems. We have shown

training data, K = 2 includes the CheXpert training data that our FDAM method can improve the performance on

and ChestXray8, K = 3 includes the CheXpert training medical imaging classification tasks by leveraging data from

data and ChestXray8 and PadChest, and so on. different organizations that are kept locally.

1

https://stanfordmlgroup.github.io/

competitions/chexpert/Federated Deep AUC Maximization with a Constant Communication Complexity

Acknowledgements min-max problems. arXiv preprint arXiv:2006.06889,

2020b.

We are grateful to the anonymous reviewers for their con-

structive comments and suggestions. This work is partially Haddadpour, F., Kamani, M. M., Mahdavi, M., and

supported by NSF #1933212 and NSF CAREER Award Cadambe, V. R. Local SGD with periodic averaging:

#1844403. Tighter analysis and adaptive synchronization. In Ad-

vances in Neural Information Processing Systems 32

References (NeurIPS), pp. 11080–11092, 2019.

Ardila, D., Kiraly, A. P., Bharadwaj, S., Choi, B., Reicher, Irvin, J., Rajpurkar, P., Ko, M., Yu, Y., Ciurea-Ilcus, S.,

J. J., Peng, L., Tse, D., Etemadi, M., Ye, W., Corrado, Chute, C., Marklund, H., Haghgoo, B., Ball, R. L., Sh-

G., et al. End-to-end lung cancer screening with three- panskaya, K. S., Seekins, J., Mong, D. A., Halabi, S. S.,

dimensional deep learning on low-dose chest computed Sandberg, J. K., Jones, R., Larson, D. B., Langlotz, C. P.,

tomography. Nature medicine, 25(6):954–961, 2019. Patel, B. N., Lungren, M. P., and Ng, A. Y. Chexpert: A

large chest radiograph dataset with uncertainty labels and

Arjevani, Y. and Shamir, O. Communication complex- expert comparison. In The Thirty-Third AAAI Conference

ity of distributed convex learning and optimization. In on Artificial Intelligence (AAAI), pp. 590–597, 2019.

Advances in Neural Information Processing Systems 28

(NeurIPS), pp. 1756–1764, 2015. Johnson, A. E. W., Pollard, T. J., Berkowitz, S., Green-

baum, N. R., Lungren, M. P., Deng, C.-y., Mark, R. G.,

Bejnordi, B. E., Veta, M., Van Diest, P. J., Van Ginneken, and Horng, S. Mimic-cxr: A large publicly available

B., Karssemeijer, N., Litjens, G., Van Der Laak, J. A., database of labeled chest radiographs. arXiv preprint

Hermsen, M., Manson, Q. F., Balkenhol, M., et al. Di- arXiv:1901.07042, 2019.

agnostic assessment of deep learning algorithms for de-

tection of lymph node metastases in women with breast Kamp, M., Adilova, L., Sicking, J., Hüger, F., Schlicht, P.,

cancer. Jama, 318(22):2199–2210, 2017. Wirtz, T., and Wrobel, S. Efficient decentralized deep

learning by dynamic model averaging. In Joint European

Bustos, A., Pertusa, A., Salinas, J.-M., and de la Iglesia- Conference on Machine Learning and Knowledge Discov-

Vayá, M. Padchest: A large chest x-ray image dataset with ery in Databases (ECML/PKDD), pp. 393–409. Springer,

multi-label annotated reports. Medical Image Analysis, 2018.

66:101797, 2020.

Karimireddy, S. P., Kale, S., Mohri, M., Reddi, S. J., Stich,

Chen, K. and Huo, Q. Scalable training of deep learning S. U., and Suresh, A. T. Scaffold: Stochastic controlled

machines by incremental block training with intra-block averaging for on-device federated learning. In Proceed-

parallel optimization and blockwise model-update filter- ings of the 37th International Conference on Machine

ing. In 2016 IEEE International Conference on Acoustics, Learning (ICML), 2020.

Speech and Signal Processing (ICASSSP), pp. 5880–5884,

2016. Khaled, A., Mishchenko, K., and Richtárik, P. Tighter theory

for local SGD on identical and heterogeneous data. In The

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., and Fei- 23rd International Conference on Artificial Intelligence

Fei, L. ImageNet: A Large-Scale Hierarchical Image and Statistics (AISTATS), pp. 4519–4529, 2020.

Database. In Proceedings of the IEEE annual Conference

on Computer Vision and Pattern Recognition (CVPR), Krizhevsky, A., Nair, V., and Hinton, G. CIFAR-10 and

2009. CIFAR-100 datasets. URl: https://www. cs. toronto.

edu/kriz/cifar. html, 6:1, 2009.

Esteva, A., Kuprel, B., Novoa, R. A., Ko, J., Swetter, S. M.,

Blau, H. M., and Thrun, S. Dermatologist-level classifi- Li, Y. and Shen, L. Skin lesion analysis towards melanoma

cation of skin cancer with deep neural networks. Nature, detection using deep learning network. Sensors, 18(2):

542(7639):115–118, 2017. 556, 2018.

Guo, Z., Liu, M., Yuan, Z., Shen, L., Liu, W., and Yang, Lin, T., Jin, C., and Jordan, M. I. On gradient descent ascent

T. Communication-efficient distributed stochastic AUC for nonconvex-concave minimax problems. In Proceed-

maximization with deep neural networks. In Proceed- ings of the 37th International Conference on Machine

ings of the 37th International Conference on Machine Learning (ICML), volume 119, pp. 6083–6093, 2020a.

Learning (ICML), pp. 3864–3874, 2020a.

Lin, T., Stich, S. U., Patel, K. K., and Jaggi, M. Don’t use

Guo, Z., Yuan, Z., Yan, Y., and Yang, T. Fast objective and large mini-batches, use local SGD. In 8th International

duality gap convergence for non-convex strongly-concave Conference on Learning Representations (ICLR), 2020b.Federated Deep AUC Maximization with a Constant Communication Complexity

Litjens, G., Kooi, T., Bejnordi, B. E., Setio, A. A. A., Nesterov, Y. E. Introductory Lectures on Convex Optimiza-

Ciompi, F., Ghafoorian, M., van der Laak, J. A. W. M., tion - A Basic Course, volume 87 of Applied Optimization.

van Ginneken, B., and Sánchez, C. I. A survey on deep Springer, 2004.

learning in medical image analysis. Medical Image Anal-

Nguyen, H. Q., Lam, K., Le, L. T., Pham, H. H., Tran,

ysis, 42:60–88, 2017.

D. Q., Nguyen, D. B., Le, D. D., Pham, C. M., Tong,

Liu, M., Zhang, X., Chen, Z., Wang, X., and Yang, T. Fast H. T., Dinh, D. H., et al. Vindr-cxr: An open dataset of

stochastic auc maximization with O (1/n)-convergence chest x-rays with radiologist’s annotations. arXiv preprint

rate. In Proceedings of 35th International Conference on arXiv:2012.15029, 2020.

Machine Learning (ICML), pp. 3195–3203, 2018. Pooch, E. H., Ballester, P., and Barros, R. C. Can we trust

deep learning based diagnosis? the impact of domain shift

Liu, M., Yuan, Z., Ying, Y., and Yang, T. Stochastic AUC

in chest radiograph classification. In International Work-

maximization with deep neural networks. In 8th Interna-

shop on Thoracic Image Analysis, pp. 74–83. Springer,

tional Conference on Learning Representations (ICLR),

2020.

2020.

Povey, D., Zhang, X., and Khudanpur, S. Parallel training

Long, G., Tan, Y., Jiang, J., and Zhang, C. Federated learn- of dnns with natural gradient and parameter averaging.

ing for open banking. In Federated Learning, pp. 240– arXiv preprint arXiv:1410.7455, 2014.

254. Springer, 2020.

Rafique, H., Liu, M., Lin, Q., and Yang, T. Non-convex min-

Luo, L., Ye, H., Huang, Z., and Zhang, T. Stochastic re- max optimization: Provable algorithms and applications

cursive gradient descent ascent for stochastic nonconvex- in machine learning. arXiv preprint arXiv:1810.02060,

strongly-concave minimax problems. In Advances in 2018.

Neural Information Processing Systems 33 (NeurIPS),

Rieke, N., Hancox, J., Li, W., Milletari, F., Roth, H. R.,

2020.

Albarqouni, S., Bakas, S., Galtier, M. N., Landman, B. A.,

McKinney, S. M., Sieniek, M., Godbole, V., Godwin, J., Maier-Hein, K., et al. The future of digital health with

Antropova, N., Ashrafian, H., Back, T., Chesus, M., Cor- federated learning. NPJ digital medicine, 3(1):1–7, 2020.

rado, G. S., Darzi, A., et al. International evaluation of Stich, S. U. Local SGD converges fast and communicates

an AI system for breast cancer screening. Nature, 577 little. In 7th International Conference on Learning Rep-

(7788):89–94, 2020. resentations (ICLR), 2019.

McMahan, B., Moore, E., Ramage, D., Hampson, S., and Su, H. and Chen, H. Experiments on parallel training of deep

y Arcas, B. A. Communication-efficient learning of deep neural network using model averaging. arXiv preprint

networks from decentralized data. In Proceedings of the arXiv:1507.01239, 2015.

20th International Conference on Artificial Intelligence

and Statistics (AISTATS), pp. 1273–1282, 2017. Wang, D., Khosla, A., Gargeya, R., Irshad, H., and Beck,

A. H. Deep learning for identifying metastatic breast

McMahan, H. B., Moore, E., Ramage, D., Hampson, S., et al. cancer. arXiv preprint arXiv:1606.05718, 2016.

Communication-efficient learning of deep networks from Wang, X., Peng, Y., Lu, L., Lu, Z., Bagheri, M., and Sum-

decentralized data. arXiv preprint arXiv:1602.05629, mers, R. M. Chestx-ray8: Hospital-scale chest x-ray

2016. database and benchmarks on weakly-supervised classi-

fication and localization of common thorax diseases. In

McMahan, H. B. et al. Advances and open problems in

Proceedings of the IEEE Conference on Computer Vision

federated learning. Foundations and Trends® in Machine

and Pattern Recognition (CVPR), pp. 2097–2106, 2017.

Learning, 14(1), 2019.

Woodworth, B. E., Patel, K. K., and Srebro, N. Minibatch

Natole, M., Ying, Y., and Lyu, S. Stochastic proximal algo- vs local SGD for heterogeneous distributed learning. In

rithms for auc maximization. In Proceedings of the 35th Advances in Neural Information Processing Systems 33

International Conference on Machine Learning (ICML), (NeurIPS), 2020a.

pp. 3707–3716, 2018.

Woodworth, B. E., Patel, K. K., Stich, S. U., Dai, Z., Bullins,

Nemirovski, A., Juditsky, A., Lan, G., and Shapiro, A. Ro- B., McMahan, H. B., Shamir, O., and Srebro, N. Is

bust stochastic approximation approach to stochastic pro- local SGD better than minibatch sgd? In Proceedings of

gramming. SIAM Journal on optimization, 19(4):1574– the 37th International Conference on Machine Learning

1609, 2009. (ICML), pp. 10334–10343, 2020b.Federated Deep AUC Maximization with a Constant Communication Complexity Yan, Y., Xu, Y., Lin, Q., Liu, W., and Yang, T. Optimal epoch stochastic gradient descent ascent methods for min- max optimization. In Advances in Neural Information Processing Systems 33 (NeurIPS), 2020. Yang, J., Kiyavash, N., and He, N. Global convergence and variance reduction for a class of nonconvex-nonconcave minimax problems. Advances in Neural Information Processing Systems 33 (NeurIPS), 2020. Ying, Y., Wen, L., and Lyu, S. Stochastic online auc maxi- mization. In Advances in Neural Information Processing Systems 29 (NeurIPS), pp. 451–459, 2016. Yu, H., Jin, R., and Yang, S. On the linear speedup analy- sis of communication efficient momentum SGD for dis- tributed non-convex optimization. In Proceedings of the 36th International Conference on Machine Learn- ing, ICML 2019, 9-15 June 2019, Long Beach, California, USA, pp. 7184–7193, 2019a. Yu, H., Yang, S., and Zhu, S. Parallel restarted sgd with faster convergence and less communication: Demystify- ing why model averaging works for deep learning. In Proceedings of the AAAI Conference on Artificial Intelli- gence, volume 33, pp. 5693–5700, 2019b. Yuan, Z., Guo, Z., Yu, X., Wang, X., and Yang, T. Accel- erating deep learning with millions of classes. In 16th European Conference on Computer Vision (ECCV), pp. 711–726, 2020a. Yuan, Z., Yan, Y., Sonka, M., and Yang, T. Robust deep auc maximization: A new surrogate loss and empirical studies on medical image classification. arXiv preprint arXiv:2012.03173, 2020b.

You can also read