Exploiting Document Structures and Cluster Consistencies for Event Coreference Resolution

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Exploiting Document Structures and Cluster Consistencies

for Event Coreference Resolution

Hieu Minh Tran1 , Duy Phung1 and Thien Huu Nguyen2

1

VinAI Research, Vietnam

2

Department of Computer and Information Science, University of Oregon,

Eugene, OR 97403, USA

{v.hieutm4,v.duypv1}@vinai.io, thien@cs.uoregon.edu

Abstract event mentions and predict their coreference (Chen

et al., 2009; Lu et al., 2016; Lu and Ng, 2017).

We study the problem of event coreference To this end, an important step in ECR models is

resolution (ECR) that seeks to group coref- to transform event mention pairs into representa-

erent event mentions into the same clusters.

tion vectors to encode discriminative features for

Deep learning methods have recently been ap-

plied for this task to deliver state-of-the-art coreference prediction. Early work on ECR has

performance. However, existing deep learn- achieved feature representation via feature engi-

ing models for ECR are limited in that they neering where multiple features are hand-designed

cannot exploit important interactions between for input event mention pairs (Lu and Ng, 2017). A

relevant objects for ECR, e.g., context words major problem with feature engineering is the spar-

and entity mentions, to support the encoding sity of the features that limits the generalization

of document-level context. In addition, con-

to unseen data. Representation learning in deep

sistency constraints between golden and pre-

dicted clusters of event mentions have not been learning models has recently been introduced to

considered to improve representation learning address this issue, leading to more robust methods

in prior deep learning models for ECR. This with better performance for ECR (Nguyen et al.,

work addresses such limitations by introduc- 2016; Choubey and Huang, 2018; Huang et al.,

ing a novel deep learning model for ECR. At 2019; Barhom et al., 2019). As such, there are at

the core of our model are document structures least two limitations in existing deep learning mod-

to explicitly capture relevant objects for ECR. els for ECR that will be addressed in this work to

Our document structures introduce diverse

improve the performance.

knowledge sources (discourse, syntax, seman-

tics) to compute edges/interactions between First, as event mentions pairs for coreference

structure nodes for document-level representa- prediction might belong to long-distance sentences

tion learning. We also present novel regular- in documents, capturing document-level context be-

ization techniques based on consistencies of tween the event mentions (i.e., beyond the two sen-

golden and predicted clusters for event men- tences that host the event mentions) might present

tions in documents. Extensive experiments useful information for ECR. As their first limita-

show that our model achieve state-of-the-art

tion, prior deep learning models for ECR has only

performance on two benchmark datasets.

attempted to encode document-level context via

1 Introduction hand-designed features (Kenyon-Dean et al., 2018;

Barhom et al., 2019) that still suffer from the fea-

Event coreference resolution (ECR) is the task of ture sparsity issue. In addition, such prior work is

clustering event mentions (i.e., trigger words that unable to exploit ECR-related objects in documents

evoke an event) in a document such that each clus- (e.g., entity mentions, context words) and their

ter represents a unique real world event. For ex- connections/interactions (possibly beyond sentence

ample, the three event mentions in Figure 1, i.e., boundary) to aid representation learning. An exam-

“refuse to sign, “raised objections”, and “doesn’t ple for the importance of context words, entity men-

sign”, should be grouped into the same cluster to tions, and their interactions for ECR can be seen in

indicate their coreference to the same event. Figure 1. Here, to decisively determine the coref-

A common component in prior ECR models in- erence of “raised objections” and “doesn’t sign”,

volves a binary classifier that receives a pair of ECR systems should recognize “Trump” and “the

4840

Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics

and the 11th International Joint Conference on Natural Language Processing, pages 4840–4850

August 1–6, 2021. ©2021 Association for Computational LinguisticsCoreferential event mentions

Donald Trump continued to refuse to sign a relief package

agreed in Congress and headed instead to the golf course….

Trump, who is spending the Christmas and New Year

holiday at his Mar-a-Lago resort in Florida, raised objections

to the $900bn relief bill only after it was passed by Congress

last week, having been negotiated by his own treasury

secretary Steven Mnuchin… All these folks and their families

will suffer if Trump doesn’t sign the damn bill.

Coreferential entity mentions

Figure 1: An example for event coreference resolution.

$900bn relief bill” as the arguments of “raised ob- ters (provided by human) and predicted clusters

jections”, and “Trump” and “the damn bill” as the (generated by models) to promote representation

arguments of “doesn’t sign”. The systems should learning. In particular, it is intuitive that ECR mod-

also be able to realize the coreference relation be- els can achieve better performance if their predicted

tween the two entity mentions “Trump”, and be- event clusters are more similar to the golden event

tween “the $900bn relief bill” and “the damn bill” clusters in the data. To this end, we propose to

to conclude the same identity for the event men- obtain different inconsistency measures between

tions (i.e., as they involve the same arguments). golden and predicted clusters that will be incorpo-

As such, it is helpful to identify relevant entity rated into the overall loss function for minimization.

mentions, context words and leverage their rela- As such, we expect that the consistency/similarity

tions/interactions to improve representation vectors regularization between two types of clusters can

for event mentions in ECR. Motivated by this issue, provide useful training signals to improve repre-

we propose to form graphs for documents (called sentation vectors for event mentions in ECR. To

document structures) to explicitly capture relevant our knowledge, this is also the first work to ex-

objects and interactions for ECR that will be con- ploit cluster consistency-based regularization for

sumed to learn representation vectors for event representation learning in ECR. Finally, we con-

mentions. In particular, context words, entity men- duct extensive experiments for ECR on the KBP

tions, and event mentions will serve as the nodes benchmark datasets. The experiments demonstrate

in our document structures due to their intuitive the benefits of the proposed methods and lead to

relevance to ECR. Different types of knowledge state-of-the-art performance for ECR.

sources will then be exploited to connect the nodes

for the document structures, featuring discourse in- 2 Related Work

formation (e.g., to connect coreferring entity men-

tions), syntactic information (e.g., to directly link Event coreference resolution is broadly related to

event mentions and their arguments), and seman- works on entity coreference resolution that aim to

tic similarity (e.g., to connect words/event men- resolve nouns phrases/mentions for entities (Raghu-

tions with similar meanings). Such rich document nathan et al., 2010; Ng, 2010; Durrett and Klein,

structures allows us to model the interactions of 2013; Lee et al., 2017a; Joshi et al., 2019b,a). How-

relevant objects for ECR beyond sentence level ever, resolving event mentions has been considered

for document-level context. Using graph convolu- as a more challenging task than entity coreference

tional neural networks (GCN) (Kipf and Welling, resolution due to the more complex structures of

2017; Nguyen and Grishman, 2018) for represen- event mentions (Yang et al., 2015).

tation learning, we expect enriched representation Our work focuses on the within-document set-

vectors from the document structures can further ting for ECR where input event mentions are ex-

improve the performance of ECR systems. To our pected to appear in the same input documents;

knowledge, this is the first time that rich document however, we also note prior works on cross-

structures are employed for ECR. document ECR (Lee et al., 2012a; Adrian Bejan

and Harabagiu, 2014; Choubey and Huang, 2017;

Second, prior deep learning models for ECR Kenyon-Dean et al., 2018; Barhom et al., 2019;

fails to leverage consistencies between golden clus- Cattan et al., 2020). As such, for within-document

4841ECR, previous methods have applied feature-based 3.2 Document Structure

models for pairwise classifiers (Ahn, 2006; Chen

This component aims to learn representation vec-

et al., 2009; Cybulska and Vossen, 2015; Peng

tors for the event mentions in E using an interaction

et al., 2016), spectral graph clustering (Chen and Ji,

graph G = {N , E} for D that facilitates the enrich-

2009), information propagation (Liu et al., 2014),

ment of representation vectors for event mentions

markov logic networks (Lu et al., 2016), joint mod-

with relevant objects and interactions at document

eling of ECR with event detection (Araki and Mi-

level. As such, the nodes and edges in G for our

tamura, 2015; Lu et al., 2016; Chen and Ng, 2016;

ECR problem are constructed as follows:

Lu and Ng, 2017), and recent deep learning models

Nodes: The node set N for our interaction graph

(Nguyen et al., 2016; Choubey and Huang, 2018;

G should capture relevant objects for the corefer-

Huang et al., 2019; Lu et al., 2020; Choubey et al.,

ence between event mentions in D. Toward this

2020). Compared to previous deep learning works

goal, we consider all the context words (i.e., wi ),

for ECR, our model presents a novel representation

event mentions, and entity mentions in D as rel-

learning framework based on document structures

evant objects for our ECR problem. For conve-

to explicitly encode important interactions between

nience, let M = {m1 , m2 , . . . , m|M | } be the set

relevant objects, and representation regularization

of entity mentions in D. The node set N for G

to exploit the cluster consistency between golden

is thus created by the union of D, E, and M :

and predicted clusters for event mentions.

N = D ∪ E ∪ M = {n1 , n2 , . . . , n|N | }. To

achieve a fair comparison, we use the predicted

3 Model event mentions that are provided by (Choubey and

Huang, 2018) in the datasets for E. The Stanford

Formally, in ECR, given an input document D =

CoreNLP toolkit is employed to obtain the entity

w1 , w2 , . . . , wN (of N words/tokens) with a set of

mentions M .

event mentions E = {e1 , e2 , . . . , e|E| }, the goal is

Edges: The edges between the nodes in N

to group the event mentions in E into clusters to

for G will be represented by an adjacency matrix

capture the coreference relation between mentions.

A = {aij }i,j=|N | (aij ∈ R) in this work. As

Our ECR model consists of four major components:

A will be consumed by Graph Convolutional Net-

(i) Document Encoder to words into representation

works (GCN) to learn representation vectors for

vectors, (ii) Document Structure to create graphs

ECR, the value/score aij between two nodes ni

for documents and learn rich representation vec-

and nj in N is expected to estimate the importance

tors for event mentions, (iii) End-to-end Resolu-

(or the level of interaction) of nj for the represen-

tion to simultaneously resolve the coreference for

tation computation of ni . This structure allows ni

the entity mentions in D, and (iv) Cluster Consis-

and nj of N to directly interact and influence the

tency Regularization to regularize representation

representation computation of each other even if

vectors based on consistency constraints between

they are sequentially far away from each other in

golden and predict event mention clusters. Figure

D. As presented in the introduction, we explore

2 presents an overview of our model for ECR.

three types of information to design the edges E

(or compute the interaction scores aij ) for G in our

3.1 Document Encoder model, including discourse-based, syntax-based

In the first step, we transform each word wi ∈ and semantic-based information.

D into a representation vector xi by feeding D Discourse-based Edges: Due to multiple sen-

into the pre-trained language model BERT (Devlin tences and event/entity mentions involved in the

et al., 2019). In particular, as BERT might split wi input document D, we need to understand where

into several word-pieces, we average the hidden such objects span and how they relate to each other

vectors of the word-pieces of wi in the last layer of to effectively encode document context for ECR.

BERT to obtain the representation vector xi for wi . To this end, we propose to exploit three types of dis-

To handle long documents with BERT, we divide D course information to obtain the interaction graph

into segments of 512 consecutive word-pieces that G, i.e., sentence boundary, coreference structure,

will be encoded separately. The resulting sequence and mention span for event/entity mentions in D.

X = x1 , x2 , . . . , xn for D is then sent to the next Sentence Boundary: Our motivation for this in-

steps for further computation. formation is that event/entity mentions appearing

4842Donald

G1 G2 ... G5 Component Structures

Trump

continue

refuse

Structure Combination

to

sign Initial Word

…. Representation

raise

objections BERT Initial Document Abstract End-to-End Cluster

to Entity Mention Structure GCN Event Mention Coreference Consistency

Representation Representation Resolution

the

.... Initial

Event Mention

doesn’t Representation

sign

….

Golden Cluster

Figure 2: An overview of the proposed ECR model.

in the same sentences tend to be more contextually only set to 1 (i.e., 0 otherwise) if ni is a word

related to each other than those in different sen- (ni ∈ D) in the span of the entity/event mention nj

tences. As such, event/entity mentions in the same (nj ∈ E ∪M ) or vice verse. aspan

ij is important as it

sentences might involve more helpful information helps ground representation vectors of event/entity

for the representation computation of each other mentions to the contextual information in D.

in our problem. To capture this intuition, we com- Syntax-based Edges: We expect the dependency

pute the sentence boundary-based interaction score trees of the sentences in D to provide beneficial

asent

ij for the nodes ni and nj in N where asent ij =1 information to connect the nodes in N for effec-

if ni and nj are the event/entity mentions of the tive representation learning in ECR. For example,

same sentences in D (i.e., ni , nj ∈ E ∪ M ); and 0 dependency trees have been used to retrieve im-

otherwise. We will use asentij as an input to compute portant context words between an event mentions

the overall interaction score aij for G later. and their arguments in prior work (Li et al., 2013;

Entity Coreference Structure: Instead of con- Veyseh et al., 2020a,b). To this end, we propose

sidering within-sentence information as in asent ij ,

to employ the dependency relations/connections

coreference structure focuses on the connection of between the words in D to obtain a syntax-based

entity mentions across sentences to enrich their rep- interaction score adep

ij for each pair of nodes ni and

resentations with the contextual information of the nj in N , serving as an additional input for aij . In

coreferring ones. As such, to enable the interaction particular, by inheriting the graph structures of the

of representations for coreferring enity mentions, dependency trees of the sentences in D, we set

we compute the conference-based score acoref ij for adep

ij to 1 if ni and nj are two words in the same

each pair of nodes ni and nj to contribute to the sentence (i.e., ni , nj ∈ D) and there is an edge be-

overall score aij for representation learning. Here, tween them in the corresponding dependency tree1 ,

acoref

ij is set to 1 if ni and nj are coreferring entity and 0 otherwise.

mentions in D, and 0 otherwise. Note that we also Semantic-based Edges: This information lever-

use the Stanford CoreNLP toolkit to determine the ages the semantic similarity of the nodes in N to

coreference of entity mentions in this work. enrich the overall interaction scores aij for G. Our

Mention Span: The sentence boundary and coref- motivation is that a node ni will contribute more

erence structure scores model interactions of event to the representation computation of another node

and entity mentions in D based on discourse struc- nj for ECR if ni is more semantically related to

ture. To connect event and entity mentions to nj . In particular, as the representation vectors for

context words wi for representation learning, we the nodes in N have captured the contextual se-

employ the mention span-based interaction score mantics of the words in D, we propose to explore

aspan

ij as another input for aij . Here, aspan ij is 1

We use Stanford CoreNLP to parse sentences.

4843a novel source of semantic information that relies where q is a learnable vector of size 5.

on external knowledge for the words to compute Representation Learning: Given the combined

interaction scores between the nodes N in our doc- interaction graph G with the adjacency matrix

ument structures for ECR. We expect the external A = {aij }i,j=|N | , we use GCNs to induce rep-

knowledge for the words to provide complemen- resentation vectors for the nodes in N for ECR. In

tary information to the contextual information in particular, our GCN model takes the initial repre-

D, thus further enriching the overall interaction sentation vectors vi of the nodes ni ∈ N as the

scores aij for the nodes in N . To this end, we pro- input. Here, the initial representation vector vi for

pose to utilize WordNet (Miller, 1995), a rich net- a word node ni ∈ D is directly obtained from the

work of word meanings, to obtain external knowl- BERT-based representation vector xc ∈ X (i.e.,

edge for the words in D. The word meanings (i.e., vi = xc ) of the corresponding word wc for ni . In

synsets) in WordNet are connected to each other contrast, for event and entity mentions, their initial

via different semantic relations (e.g., synonyms, representation vectors are obtained by max-pooling

hyponyms). In particular, our first step to generate the contextualized embedding vectors in X that

knowledge-based similarity scores involves map- correspond to the words in the event/entity men-

ping each word node ni ∈ D ∩ N to a synset tions’ spans. For convenience, we organize vi into

node Mi in WordNet using a Word Sense Disam- rows of the input matrix H0 = [v1 , . . . , v|N | ]. The

biguation (WSD) tool. In particular, we employ GCN model then involves G layers that generate

WordNet 3.0 and the state-of-the-art BERT-based the matrix Hl at the l-th layer for the nodes in N

WSD model in (Blevins and Zettlemoyer, 2020) to (1 ≤ l ≤ G) via: Hl = ReLU (AHl−1 Wl ) (Wl is

perform the word-synset mapping in this work. Af- the weight matrix for the l-th layer). The output of

terward, we compute a knowledge-based similarity the GCN model after G layers is HG whose rows

score astruct

ij for each pair of word nodes ni and nj are denoted by HG = [h1 , . . . , h|N | ], serving as

in D ∩ N using the structure-based similarity of more abstract representation vectors for the nodes

their linked synsets Mi and Mj in WordNet (i.e., ni in the coreference prediction for event mentions.

astruct

ij = 0 if either ni or nj is not a word node in Also, for convenience, let {re1 , . . . , re|E| } ⊂ HG

D ∩ N ). Accordingly, the Lin similarity measure be the set of GCN-induced representation vectors

(Lin et al., 1998) for synset nodes in WordNet is uti- for the event mention nodes in e1 , . . . , e|E| in E.

2∗IC(LCS(Mi ,Mj ))

lized for this purpose: astructij = IC(Mi )+IC(M j)

.

Here, IC and LCS represent the information con- 3.3 End-to-end Coreference Resolution

tent of synset nodes and the least common sub-

sumer of two synsets in the WordNet hierarchy To facilitate the incorporation of the consistency

(the most specific ancestor node) respectively2 . regularization between golden and predicted clus-

Structure Combination: Up to now, five scores ters into the training process, we perform and end-

have been generated to capture the level of inter- to-end procedure that seeks to simultaneously re-

actions in representation learning for each pair of solve the coreference for the event mentions in

nodes ni and nj in N according to different in- E in a single process. Motivated by the entity

coref coreference resolution in (Lee et al., 2017b), we

formation sources (i.e., asent ij , aij , aspan

ij , adep

ij implement the end-to-end resolution via a set of

and astruct

ij ). For convenience, we group the five

antecedent assignments for the event mentions in

scores for each node pair ni and nj into a vector

coref

E. In particular, we assume that the event mentions

dij = [asentij , aij , aspan

ij , adep struct ] of size 5.

ij , aij in E are enumerated in their appearance order in D.

To combine the scores in dij into an overall rich As such, our model aims to link each event mention

interaction score aij for ni and nj in G, we use the ei ∈ E to one of its prior event mention in the set

following normalization: Yi = {, e1 , . . . , ei−1 } ( is a dumpy antecedent).

X Here, a link of ei to a non-dumpy antecedent ej in

aij = exp(dij q T )/ exp(diu q T ) (1)

Yi represents a coreference relation between ei and

u=1..|N |

ej . In contrast, a dumpy assignment for ei indicates

2

We use the nltk tool to obtain the Lin sim- that ei is not coreferent with any prior event men-

ilarity: https://www.nltk.org/howto/wordnet. tion. By forming a coreference graph with ei as

html. We tried other WordNet-based similarities available

in nltk (e.g., Wu-Palmer similarity), but the Lin similarity the nodes, the non-dumpy antecedent assignments

produced the best results in our experiments. for every event mention in E can be utitlized to

4844connect coreference event mentions. Connected Intra-cluster Consistency: This constraint con-

components from the coreference graph can then cerns the inner information of each cluster, char-

be returned to serve as predicted event mention acterizing the structure of each individual event

clusters in D. mention in its golden and predicted clusters in T

In order to predict the coreferent antecedent and P. In particular, for each event mention ei ∈ E,

yi ∈ Y for an event mention ei , we compute the we expect its distances to the centroid vectors of

distribution over the possible antecedents in Yi the corresponding golden and predicted clusters

for ei via: P (yi | ei , Yi ) = es(ei ,yi )

where Ti0 and Pi0 in T and P (respectively) to be similar,

P s(ei ,y 0 )

y 0 ∈Y(i) e i.e., Ti0 ∈ T , Pi0 ∈ P, ei ∈ Ti0 , ei ∈ Pi0 . As such,

s(ei , ej ) is a score function to determine the coref- we compute the distances between the representa-

erence likelihood between ei and ej in D. To this tion vector rei of ei to the centroid vectors rTi0 and

end, we set s(ei , ) = 0 for all ei ∈ E. Inspired

rPi0 via the Euclidean distances krei − rTi0 k22 and

by (Lee et al., 2017b), we obtain the score function

krei − rPi0 k22 . Afterward, the differences Linner

s(ei , ej ) for ei and ej by leveraging their GCN-

between the two distances for golden and predicted

induced representation vectors rei and rej via:

clusters are aggregated over all event mentions and

s(ei , ej ) = sm (ei ) + sm (ej ) + sc (ei , ej ) + sa (ei , ej ) added into the overall

P|E| loss function for minimiza-

sm (ei ) = w>

m FFm (rei ) tion: Linner = i=1 |krei − rTi0 k22 − krei − rPi0 k22 |.

sc (ei , ej ) = w>

a FFc ([rei , rej , rei rej ]) Inter-cluster Consistency: In this constraint, we

sa (ei , ej ) = re>i W c rej expect that the structure among the clusters Ti

in the golden set T is consistent with those for

where Fm and FFc are two-layer feed-forward net-

the predicted event cluster set P (i.e., inter-cluster

works, w> >

m and w a are learnable vectors, W c regulation). To implement this idea, we encode

is a weight matrix, and is the element-wise

the structure of the clusters in a set via the av-

multiplication. At the inference time, we em-

erage of the pairwise distances between the cen-

ploy the greedy decoding to predict the antecedent

troid vectors of the clusters. In particular, the

ŷi for ei : ŷi = argmaxP (yi |ei , Yi ). For train-

inter-cluster structure scores for the golden and

ing, we use the negative log-likelihood as the loss

predicted clusters in T and P are computed via:

function in our end-to-end framework: Lpred = P|T | P|T |

P|E| sT = |T |(|T2 |−1) i=1 j=i+1 krTi − rTj k22 , and

− i=0 log P (yi∗ |ei , Yi ) (yi∗ ∈ Yi is the golden P|P| P|P|

2 2

antecedent for ei ). sP = |P|(|P|−1) i=1 j=i+1 krPi − rPj k2 . The

difference between the structure scores for golden

3.4 Cluster Consistency Regularization and predicted clusters T and P is then included

To further improve representation learning for ECR, into the overall loss function for minimization:

we propose to regularize the induced representa- Linter = |sT − sP |.

tion vectors of the event mentions in E to explicitly Inter-set Similarity: This constraint aims to di-

enforce the consistency/similarity between golden rectly promote the similarity between the golden

and predicted event mention clusters in D. This clusters in T and the predicted clusters in P. As

is based on our motivation that ECR models will such, for the golden and predicted cluster sets T

perform better if they can produce more similar and P, we first obtain the overall centroid vec-

event mention clusters to the golden ones. As such, tors uT and uP (respectively) by averaging the

for convenience, let T = {T1 , T2 , . . . , T|T | } and centroid vectors of their member clusters: uT =

P = {P1 , P2 , . . . , P|P| } be the golden and pre- averageT ∈T (rT ) and uP = averageP ∈P (rP ). The

dicted sets of event mentions in E respectively, Euclidean distance Lsim is then integrated into the

i.e., Ti , Pj ⊂ E, and T1 ∪ T2 ∪ . . . ∪ T|T | = overall loss for minimization: Lsim = kuT −uP k22 .

P1 ∪P2 ∪. . .∪P|P| = E. Also, for each cluster C in Note that Linner , Linter , and Lsim will be zero if

T or P, we compute a centroid vector rC for it by the predicted clusters in P are the same as those in

averaging the representation vectors of the event the golden clusters in T .

mention members: rC = averagee∈C (re ). This To summarize, the overall loss function L to

leads to the centroid vectors {rT1 , rT2 , . . . , rT|T | } train our ECR model in this work is: L =

and {rP1 , rP2 , . . . , rP|P| } for T and P respectively. Lpred + αinner Linner + αinter Linter + αsim Lsim

We propose the following regularization terms for with αinner , αinter , and αsim as the trade-off pa-

cluster consistency: rameters.

48454 Experiments approach in (Choubey and Huang, 2018), and the

discourse structure profiling model in (Choubey

4.1 Dataset & Hyperparameters

et al., 2020) (also the model with the best reported

Following prior work (Choubey and Huang, 2018), performance in KBP datasets). In addition, we

we train our ECR models on the KBP 2015 dataset examine the following baselines of StructECR to

(Mitamura et al., 2015) and evaluate the models highlight the benefits of the proposed components:

on the KBP 2016 and KBP 2017 datasets for ECR

E2E-Only: This variant implements the end-to-

(Mitamura et al., 2016, 2017). In particular, the

end resolution model described in Section 3.3

KBP 2015 dataset includes 360 annotated docu-

where all event mentions in a document are re-

ments for ECR (181 documents from discussion

solved simultaneously in a single process. How-

forum and 179 documents from news articles). We

ever, different from our full model StructECR, E2E-

use the same 310 documents from KBP 2015 as in

Only does not include the document structure com-

(Choubey and Huang, 2018) for the training data

ponent with GCN for representation learning, i.e.,

and the remaining 50 documents for the develop-

it directly uses the initial representation vectors vi

ment data. Also, similar to (Choubey and Huang,

(induced from BERT) for the event mentions in the

2018), the news articles in KBP 2016 (85 docu-

computation of the distribution P (yi |ei , Yi ). Also,

ments) and KBP 2017 (83 documents) are lever-

the cluster consistency regularization in Section 3.4

aged for test datasets. To ensure a fair comparison,

is also not included in this model.

we use the predicted event mentions provided by

(Choubey and Huang, 2018) in all the datasets. Fi- Pairwise: This model is similar to E2E-Only in

nally, we report the ECR performance based on that it does not applies the document structures and

the official KBP 2017 scorer (version 1.8)3 . The regularization terms in StructECR. In addition, in-

scorer employs four coreference scoring measures, stead of simultaneously resolving event mentions

including MUC (Vilain et al., 1995), B 3 (Bagga in documents, Pairwise predicts the coreference for

and Baldwin, 1998), CEAF-e (Luo, 2005), BLANC every pair of event mentions separately. In particu-

(Lee et al., 2012b), and the unweighted average of lar, the representation vectors vei and vej for two

their F1 scores (AVGF 1 ). event mentions ei and ej (included from BERT)

Hyper-parameters for the models are fine-tuned are combined via [vei , vej , vei vej ]. This vector

by the AVGF 1 scores over development data. The is then sent into a feed-forward network to pro-

selected values from the tuning process include: 1e- duce a distribution over possible coreference labels

5 for the learning rate of the Adam optimizer (se- between ei and ej (i.e., two labels for being coref-

lected from [1e-5, 2e-5, 3e-5, 4e-5, 5e-5]); 8 for the erent or not). The coreference labels for every pair

mini-batch size (selected from [8, 16, 32, 64]); 128 of event mentions are then gathered in a corefer-

hidden units for all the feed-forward network and ence graphs among event mentions; the connected

GCN layers (selected from [64, 128, 256, 512]); components will be returned for the event clusters.

2 layers for the GCN model, G = 2 (selected Table 1 reports the performance of the ECR mod-

from [1, 2, 3, 4]), and αinner = 0.1, αinter = els on the KBP 2016 and KBP 2017 datasets. As

0.1, and αsim = 0.1 for the trade-off parame- can be seen from the table, E2E-Only performs

ters in the overall loss function L (selected from comparably or better than prior state-of-the-art

[0.1, 0, 2, . . . , 0.9]). Finally, we use the BERTbase models for ECR, e.g., (Choubey and Huang, 2018)

model (of 768 dimensions) for the pre-trained word and (Choubey et al., 2020), that employ extensive

embeddings (updated during the training). feature engineering. In addition, the better perfor-

mance of E2E-Only over Pairwise (for both KBP

4.2 Performance Evaluation 2016 and KBP 2017) illustrates the benefits of end-

We compare the proposed model for ECR with to-end coreference resolution for event mentions in

document structures and cluster consistency regu- documents. Most importantly, the proposed model

larization (called StructECR) with prior work ECR StructECR significantly outperforms all the base-

models in the same evaluation setting, including line models for which the performance improve-

the joint model between ECR and event detection ment over E2E-Only is 1.94% and 1.26% (i.e.,

(Lu and Ng, 2017), the integer linear programming AVGF 1 scores) over the KBP 2016 and KBP 2017

3 datasets respectively. This clearly demonstrates

https://github.com/hunterhector/

EvmEval the benefits of the proposed ECR model with rich

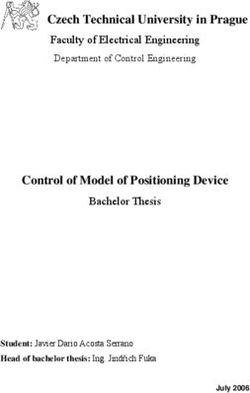

4846KBP 2016 KBP 2017

Model B3 CEAFe MUC BLANC AVGF 1 B3 CEAFe MUC BLANC AVGF 1

(Lu and Ng, 2017) 50.16 48.59 32.41 32.72 40.97 - - - - -

(Choubey and Huang, 2018) 51.67 49.10 34.08 34.08 42.23 50.35 48.61 37.24 31.94 42.04

(Choubey et al., 2020) 52.78 49.70 34.62 34.49 42.90 51.68 50.57 37.8 33.39 43.36

Pairwise 52.16 49.84 30.79 32.21 41.25 50.97 48.80 36.92 31.86 42.14

E2E-Only 50.89 50.43 36.05 33.93 42.83 51.60 52.03 38.53 33.02 43.80

StructECR 52.77 52.29 38.37 35.66 44.77 51.93 52.82 40.73 34.75 45.06

Table 1: Models’ performance on the KBP 2016 and KBP 2017 datasets. The performance improvement of

StructECR over E2E-Only is significant with p < 0.01.

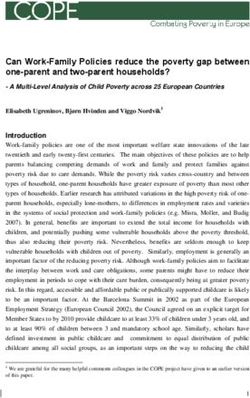

Model B3 CEAFe MUC BLANC AVGF 1

document structures and cluster consistency regu- StructECR (full) 76.86 69.99 66.40 69.02 70.57

larization for representation learning. StructECR - asent

ij 75.37 69.73 62.42 69.49 69.25

StructECR - acoref

ij 75.07 69.74 62.97 69.67 69.36

StructECR - aspan 75.32 70.32 63.44 66.97 69.01

4.3 Ablation Study ij

StructECR - adep 74.66 69.76 62.72 69.14 69.07

ij

StructECR - astruct 75.44 69.53 61.82 71.48 69.57

Two major components in the proposed model ij

StructECR - Entity Nodes 74.67 69.71 63.01 67.35 68.69

StructECR involve the document structures and StructECR - GraphCombine 75.41 69.74 62.38 68.90 69.11

StructECR - Doc Structures 74.15 66.78 60.24 66.32 66.87

the cluster consistency regularization. This sec- StructECR - Linner 75.09 68.44 62.25 68.01 68.45

StructECR - Linter 74.80 67.98 61.92 67.71 68.10

tion performs an ablation study to reveal the con- StructECR - Lsim 75.13 68.12 62.03 68.95 68.56

tribution of such components for the full model. StructECR - Regularization 74.46 67.55 60.74 68.28 67.76

First, for the document structures, we examine

the following ablated models: (i) “StructECR - Table 2: Performance on the KBP 2015 dev set.

x”: where x is one of the five interaction scores

KBP 2016 KBP 2017

used to compute the unified score aij for G (i.e., NW → DF DF → NW NW → DF DF → NW

coref

asent

ij , aij , aspan

ij , adep struct ). For exam-

ij , and aij Pairwise 60.51 58.11 59.22 59.10

span E2E-Only 65.82 62.01 62.56 62.52

ple, “StructECR - aij ” implies a variant of

StructECR 68.19 65.83 65.19 65.12

StructECR where the span-based interaction score

aspan

ij is not included in the compuation of the over- Table 3: Cross-domain performance (AVGF 1 ). NW

all score aij ; (ii) “StructECR - Entity Nodes: this and DF represent news articles and discussion forum

model excludes the entity mention nodes from the documents respectively. X → Y implies models trained

interaction graph G in StructECR (i.e., N = D ∪ E on domain X and tested on domain Y.

only); (iii) “StructECR - GraphCombine”: in-

stead of unifying the five interaction scores in dij

into an overall score aij in Equation 1, this model {Linner , Linter , Lsim }): these models exclude one

considers each of the five generated interaction of the regularization terms for the consistency be-

scores as forming a separate interaction graph, thus tween golden and predicted clusters from the over-

producing six different graphs. The GCN model is all loss function L; and (vi) StructECR - Regular-

then applied over those five graphs (using the same ization: this model completely ignores the consis-

initial representation vectors vi for the nodes ni in tency regularization component from StructECR.

N ). The outputs of the GCN model for the same Table 2 shows the performance of the models

node ni (with different graphs) are then concate- on the development data of the KBP 2015 dataset.

nated to compute the final representation vector hi As can be seen, the elimination of any component

for ni ; and (iv) StructECR - Doc Structures: this from StructECR would significantly hurt the per-

model removes the GCN model from StructECR. formance, thus clearly demonstrating the benefits

As such, the interaction graph G is not used and of the designed document structures and cluster

the GCN-induced representation vectors hi are re- consistency regularization in StructECR.

placed by the initial BERT-induced representation

vectors vi in the computation for end-to-end reso- 4.4 Cross-domain Evaluation

lution and consistency regularization. To further demonstrate the benefits for the pro-

Second, for the cluster consistency regular- posed model StructECR, we evaluate StructECR

ization, we evaluate the following ablated mod- and the baseline models Pairwise and E2E-Only in

els for StructECR: (v) StructECR - y (y ∈ the cross-domain setting. In this setting, we aim

4847to train the models on one domain (the source do- ther expressed or implied, of ARO, ODNI, IARPA,

main) and evaluate them on another domain (the the Department of Defense, or the U.S. Govern-

target domain). We leverage the KBP 2016 and ment. The U.S. Government is authorized to re-

KBP 2017 datasets for this experiment. In particu- produce and distribute reprints for governmental

lar, KBP 2016 annotates ECR data for 85 newswire purposes notwithstanding any copyright annotation

and 84 discussion forum documents (i.e., two do- therein. This document does not contain technol-

mains/genres) while KBP 2017 provides annotated ogy or technical data controlled under either the

data for ECR on 83 news articles and 84 discus- U.S. International Traffic in Arms Regulations or

sion forum documents. As such, for each dataset, the U.S. Export Administration Regulations.

we consider two setups where documents in one

domain (i.e., newswire or discussion forum) are

used for the source domain, leaving documents in References

the other domain for the target domain data. We Cosmin Adrian Bejan and Sanda Harabagiu. 2014. Un-

use the same hyper-parameters that are tuned on supervised event coreference resolution. In Compu-

the development set of KBP 2015 for the models tational Linguistics (CL).

in this experiment. Table 3 presents the perfor- David Ahn. 2006. The stages of event extraction. In

mance of the models. It is clear from the table that Proceedings of the Workshop on Annotating and

StructECR are significantly and substantially better Reasoning about Time and Events.

than the baseline models (p < 0.01) over differ-

Jun Araki and Teruko Mitamura. 2015. Joint event trig-

ent datasets and settings for the source and target

ger identification and event coreference resolution

domains, thereby confirming the domain general- with structured perceptron. In Proceedings of the

ization advantages of StructECR for ECR. 2015 Conference on Empirical Methods in Natural

Language Processing (EMNLP).

5 Conclusion

Amit Bagga and Breck Baldwin. 1998. Entity-

We present a novel end-to-end coreference resolu- based cross-document coreferencing using the vec-

tion framework for event mentions based on deep tor space model. In Proceedings of the Inter-

national Conference on Computational Linguistics

learning. The novelty in our model is twofold. First, (COLING).

document structures are introduced to explicitly

capture relevant objects and their interactions in Shany Barhom, Vered Shwartz, Alon Eirew, Michael

documents to aid representation learning. Second, Bugert, Nils Reimers, and Ido Dagan. 2019. Re-

visiting joint modeling of cross-document entity and

several regularization techniques are proposed to event coreference resolution. In Proceedings of the

exploit the consistencies between human-provided 57th Annual Meeting of the Association for Compu-

and machine-generated clusters of event mentions tational Linguistics (ACL).

in documents. We perform extensive experiments

Terra Blevins and Luke Zettlemoyer. 2020. Moving

on two benchmark datasets for ECR to demonstrate down the long tail of word sense disambiguation

the advantages of the proposed model. In the future, with gloss informed bi-encoders. In Proceedings of

we plan to extend our models to related problems the 58th Annual Meeting of the Association for Com-

in information extraction, e.g., event extraction. putational Linguistics (ACL).

Arie Cattan, Alon Eirew, Gabriel Stanovsky, Mandar

Acknowledgments Joshi, and Ido Dagan. 2020. Streamlining cross-

document coreference resolution: Evaluation and

This research has been supported by the Army Re- modeling. arXiv preprint arXiv:2009.11032.

search Office (ARO) grant W911NF-21-1-0112.

This research is also based upon work supported by Chen Chen and Vincent Ng. 2016. Joint inference over

the Office of the Director of National Intelligence a lightly supervised information extraction pipeline:

Towards event coreference resolution for resource-

(ODNI), Intelligence Advanced Research Projects scarce languages. In Proceedings of the Associa-

Activity (IARPA), via IARPA Contract No. 2019- tion for the Advancement of Artificial Intelligence

19051600006 under the Better Extraction from Text (AAAI).

Towards Enhanced Retrieval (BETTER) Program.

Zheng Chen and Heng Ji. 2009. Graph-based event

The views and conclusions contained herein are coreference resolution. In Proceedings of the Work-

those of the authors and should not be interpreted shop on Graph-based Methods for Natural Lan-

as necessarily representing the official policies, ei- guage Processing.

4848Zheng Chen, Heng Ji, and Robert Haralick. 2009. A Kian Kenyon-Dean, Jackie Chi Kit Cheung, and Doina

pairwise event coreference model, feature impact Precup. 2018. Resolving event coreference with

and evaluation for event coreference resolution. In supervised representation learning and clustering-

Proceedings of the Workshop on Events in Emerging oriented regularization. In Proceedings of the

Text Types. Eighth Joint Conference on Lexical and Computa-

tional Semantics (*SEM).

Prafulla Kumar Choubey and Ruihong Huang. 2017.

Event coreference resolution by iteratively unfold- Thomas N. Kipf and Max Welling. 2017. Semi-

ing inter-dependencies among events. In Proceed- supervised classification with graph convolutional

ings of the 2017 Conference on Empirical Methods networks. In Proceedings of the International Con-

in Natural Language Processing (EMNLP). ference on Learning Representations (ICLR).

Prafulla Kumar Choubey and Ruihong Huang. 2018. Heeyoung Lee, Marta Recasens, Angel Chang, Mihai

Improving event coreference resolution by modeling Surdeanu, and Dan Jurafsky. 2012a. Joint entity and

correlations between event coreference chains and event coreference resolution across documents. In

document topic structures. In Proceedings of the Proceedings of the 2012 Conference on Empirical

56th Annual Meeting of the Association for Compu- Methods in Natural Language Processing (EMNLP).

tational Linguistics (ACL).

Heeyoung Lee, Marta Recasens, Angel Chang, Mihai

Surdeanu, and Dan Jurafsky. 2012b. Joint entity and

Prafulla Kumar Choubey, Aaron Lee, Ruihong Huang, event coreference resolution across documents. In

and Lu Wang. 2020. Discourse as a function of Proceedings of the 2012 Conference on Empirical

event: Profiling discourse structure in news arti- Methods in Natural Language Processing (EMNLP).

cles around the main event. In Proceedings of the

58th Annual Meeting of the Association for Compu- Kenton Lee, Luheng He, Mike Lewis, and Luke Zettle-

tational Linguistics (ACL). moyer. 2017a. End-to-end neural coreference reso-

lution. In Proceedings of the 2017 Conference on

Agata Cybulska and Piek T. J. M. Vossen. 2015. ”bag Empirical Methods in Natural Language Processing

of events” approach to event coreference resolution. (EMNLP).

supervised classification of event templates. In Int.

J. Comput. Linguistics Appl. Kenton Lee, Luheng He, Mike Lewis, and Luke Zettle-

moyer. 2017b. End-to-end neural coreference reso-

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and lution. In Proceedings of the 2017 Conference on

Kristina Toutanova. 2019. BERT: Pre-training of Empirical Methods in Natural Language Processing

deep bidirectional transformers for language under- (EMNLP).

standing. In Proceedings of the 2019 Conference of

the North American Chapter of the Association for Qi Li, Heng Ji, and Liang Huang. 2013. Joint event

Computational Linguistics: Human Language Tech- extraction via structured prediction with global fea-

nologies (NAACL-HLT). tures. In Proceedings of the 51th Annual Meet-

ing of the Association for Computational Linguistics

Greg Durrett and Dan Klein. 2013. Easy victories and (ACL).

uphill battles in coreference resolution. In Proceed-

ings of the 2013 Conference on Empirical Methods Dekang Lin et al. 1998. An information-theoretic def-

in Natural Language Processing (EMNLP). inition of similarity. In Proceedings of the Interna-

tional Conference on Machine Learning (ICML).

Yin Jou Huang, Jing Lu, Sadao Kurohashi, and Vincent

Zhengzhong Liu, Jun Araki, Eduard Hovy, and Teruko

Ng. 2019. Improving event coreference resolution

Mitamura. 2014. Supervised within-document event

by learning argument compatibility from unlabeled

coreference using information propagation. In Pro-

data. In Proceedings of the 2019 Conference of the

ceedings of the International Conference on Lan-

North American Chapter of the Association for Com-

guage Resources and Evaluation (LREC).

putational Linguistics: Human Language Technolo-

gies (NAACL-HLT). Jing Lu and Vincent Ng. 2017. Joint learning for

event coreference resolution. In Proceedings of the

Mandar Joshi, Danqi Chen, Y. Liu, Daniel S. Weld, 55th Annual Meeting of the Association for Compu-

Luke Zettlemoyer, and Omer Levy. 2019a. Spanbert: tational Linguistics (ACL).

Improving pre-training by representing and predict-

ing spans. In Transactions of the Association for Jing Lu, Deepak Venugopal, Vibhav Gogate, and Vin-

Computational Linguistics (TACL). cent Ng. 2016. Joint inference for event coreference

resolution. In Transactions of the Association for

Mandar Joshi, Omer Levy, Luke Zettlemoyer, and Computational Linguistics (TACL).

Daniel Weld. 2019b. BERT for coreference resolu-

tion: Baselines and analysis. In Proceedings of the Yaojie Lu, Hongyu Lin, Jialong Tang, Xianpei Han,

2019 Conference on Empirical Methods in Natural and Le Sun. 2020. End-to-end neural event coref-

Language Processing (EMNLP). erence resolution. In CoRR abs/2009.08153.

4849Xiaoqiang Luo. 2005. On coreference resolution per- Marc Vilain, John Burger, John Aberdeen, Dennis Con-

formance metrics. In Proceedings of the 2005 Con- nolly, and Lynette Hirschman. 1995. A model-

ference on Empirical Methods in Natural Language theoretic coreference scoring scheme. In Sixth Mes-

Processing (EMNLP). sage Understanding Conference (MUC-6).

George A Miller. 1995. Wordnet: a lexical database for Bishan Yang, Claire Cardie, and Peter Frazier. 2015. A

english. Communications of the ACM, 38(11):39– hierarchical distance-dependent Bayesian model for

41. event coreference resolution. In Transactions of the

Association for Computational Linguistics (TACL).

Teruko Mitamura, Zhengzhong Liu, and Eduard H.

Hovy. 2015. Overview of TAC-KBP 2015 event

nugget track. In Proceedings of the Text Analysis

Conference (TAC).

Teruko Mitamura, Zhengzhong Liu, and Eduard H.

Hovy. 2016. Overview of TAC-KBP 2016 event

nugget track. In Proceedings of the Text Analysis

Conference (TAC).

Teruko Mitamura, Zhengzhong Liu, and Eduard H.

Hovy. 2017. Events detection, coreference and se-

quencing: What’s next? overview of the TAC KBP

2017 event track. In Proceedings of the Text Analy-

sis Conference (TAC).

Vincent Ng. 2010. Supervised noun phrase coreference

research: The first fifteen years. In Proceedings of

the 48th Annual Meeting of the Association for Com-

putational Linguistics (ACL).

Thien Huu Nguyen, , Adam Meyers, and Ralph Grish-

man. 2016. New york university 2016 system for

kbp event nugget: A deep learning approach. In Pro-

ceedings of the Text Analysis Conference (TAC).

Thien Huu Nguyen and Ralph Grishman. 2018. Graph

convolutional networks with argument-aware pool-

ing for event detection. In Proceedings of the Asso-

ciation for the Advancement of Artificial Intelligence

(AAAI).

Haoruo Peng, Yangqiu Song, and Dan Roth. 2016.

Event detection and co-reference with minimal su-

pervision. In Proceedings of the 2016 Conference

on Empirical Methods in Natural Language Process-

ing (EMNLP).

Karthik Raghunathan, Heeyoung Lee, Sudarshan Ran-

garajan, Nathanael Chambers, Mihai Surdeanu, Dan

Jurafsky, and Christopher Manning. 2010. A multi-

pass sieve for coreference resolution. In Proceed-

ings of the 2010 Conference on Empirical Methods

in Natural Language Processing (EMNLP).

Amir Pouran Ben Veyseh, Franck Dernoncourt, Dejing

Dou, and Thien Huu Nguyen. 2020a. Exploiting the

syntax-model consistency for neural relation extrac-

tion. In Proceedings of the Annual Meeting of the

Association for Computational Linguistics (ACL).

Amir Pouran Ben Veyseh, Tuan Ngo Nguyen, and

Thien Huu Nguyen. 2020b. Graph transformer net-

works with syntactic and semantic structures for

event argument extraction. In Proceedings of the

Findings of the Conference on Empirical Methods

in Natural Language Processing (EMNLP).

4850You can also read