A surgical dataset from the da Vinci Research Kit for task automation and recognition

© The Author(s) 2020

Irene Rivas-Blanco1, Carlos J. Pérez-del-Pulgar1, Andrea Mariani2,3, Claudio Quaglia2,3, Giuseppe Tortora2,3, Arianna Menciassi2,3, and Víctor F. Muñoz1

Abstract

Datasets are gaining relevance in surgical robotics, since they can be used to recognise and automate tasks. Common datasets also make it possible to compare different algorithms and methods. The objective of this work is to provide a complete dataset of three common surgical training tasks that surgeons perform to improve their skills. For this purpose, 12 subjects teleoperated the da Vinci Research Kit to perform these tasks. The resulting dataset includes all the kinematics and dynamics information provided by the da Vinci robot (both master and slave side), together with the associated video from the camera. All the information has been carefully timestamped and is provided in a readable csv format. A MATLAB interface integrated with ROS for using and replicating the data is also provided.

arXiv:2102.03643v1 [cs.RO] 6 Feb 2021

Keywords

Dataset, da Vinci Research Kit, automation, surgical robotics

1 University of Malaga, Spain
2 The BioRobotics Institute, Scuola Superiore Sant’Anna, Pisa, Italy
3 Department of Excellence in Robotics & AI, Scuola Superiore Sant’Anna, Pisa, Italy

Corresponding author:
Irene Rivas Blanco, Edificio de Ingenierías UMA, Arquitecto Francisco Peñalosa 6, 29071, Málaga, Spain
Email: irivas@uma.es

Introduction

Surgical Data Science (SDS) is emerging as a new knowledge domain in healthcare. In the field of surgery, it can provide many advances in virtual coaching, surgeon skill evaluation and complex task learning from surgical robotic systems (Vedula and Hager, 2020), as well as in the gesture recognition domain (Pérez-del Pulgar et al., 2019; Ahmidi et al., 2017). The development of large datasets related to the execution of surgical tasks using robotic systems would support these advances, providing detailed information on the surgeon's movements, in terms of both kinematics and dynamics data, as well as video recordings. One of the best-known datasets in this field is the JIGSAWS dataset, described in Gao et al. (2014). In that work, the authors defined three surgical tasks that were performed by 6 surgeons using the da Vinci Research Kit (dVRK). This research platform, based on the first-generation commercial da Vinci Surgical System (by Intuitive Surgical, Inc., Sunnyvale, CA) and integrated with ad-hoc electronics, firmware and software, can provide kinematics and dynamics data along with the video recordings. The JIGSAWS dataset contains 76 motion variables collected at 30 samples per second for 101 trials of the tasks.

Thus, the objective of this work is to extend the available datasets related to surgical robotics. We present the Robotic Surgical Maneuvers (ROSMA) dataset, a large dataset collected using the dVRK, in collaboration between the University of Malaga (Spain) and The BioRobotics Institute of the Scuola Superiore Sant’Anna (Italy), under a TERRINet (The European Robotics Research Infrastructure Network) project. This dataset contains 160 kinematic variables recorded at 50 Hz for 207 trials of three different tasks. This data is complemented with the video recordings collected at 15 frames per second with 1024 x 768 pixel resolution. Moreover, we provide a task evaluation based on time and task-specific errors, and a questionnaire with personal data of the subjects (gender, age, dominant hand) and previous experience using teleoperated systems and visuo-motor skills (sport and musical instruments).

System description

The dVRK, supported by the Intuitive Foundation (Sunnyvale, CA), arose as a community effort to support research in the field of telerobotic surgery (Kazanzides et al., 2014). This platform is made up of hardware of the first-generation da Vinci system along with motor controllers and a software framework integrated with the Robot Operating System (ROS) (Chen et al., 2017). There are over thirty dVRK platforms distributed in ten countries around the world. The dVRK of the Scuola Superiore Sant’Anna has two Patient Side Manipulators (PSM), labelled as PSM1 and PSM2 (Figure 1(a)), and a master console consisting of two Master Tool Manipulators (MTM), labelled as MTML and MTMR (Figure 1(b)). MTML controls PSM1, while MTMR controls PSM2. For these experiments, stereo vision was provided by two commercial webcams, as the dVRK used for the experiments was not equipped with an endoscopic camera and its manipulator.

Figure 1. da Vinci Research Kit platform available at The BioRobotics Institute of Scuola Superiore Sant’Anna (Pisa, Italy): (a) slave manipulators; (b) master console.

Figure 2. Patient and surgeon side kinematics: (a) patient side kinematics; (b) surgeon side kinematics. The kinematics of each PSM is defined with respect to the common frame ECM, while the MTMs are described with respect to frame HRSV.

Figure 3. Snapshot of the three tasks in the ROSMA dataset at the starting position: (a) post and sleeve; (b) pea on a peg; (c) wire chaser.

Each PSM has 6 joints following the kinematics described in Fontanelli et al. (2017), and an additional degree of freedom for opening and closing the gripper. The tip of the instrument moves about a remote center of motion (RCM), where the origin of the base frame of each manipulator is set. The motion of each manipulator is described by its corresponding tool tip frame with respect to a common frame, labelled as ECM, as shown in Figure

2(a). The MTMs used to remotely teleoperate the PSMs have 7 DOF, plus the opening and closing of the instrument. The base frames of each manipulator are related through the common frame HRSV, as shown in Figure 2(b). The transformation between the base frames and the common one on both sides of the dVRK is described in the json configuration file that can be found in the ROSMA GitHub repository*.

* https://github.com/SurgicalRoboticsUMA/rosma_dataset

The ROSMA dataset contains the performance of three tasks of the Skill Building Task Set (from 3-D Technical Services, Franklin, OH): post and sleeve (Figure 3(a)), pea on a peg (Figure 3(b)), and wire chaser (Figure 3(c)). These training platforms for clinical skill development provide challenges that require motions and skills used in laparoscopic surgery, such as hand-eye coordination, bimanual dexterity, depth perception or interaction between dominant and non-dominant hand. These exercises were designed for clinical skill acquisition, but they are also commonly used in surgical research for different applications, such as clinical skills acquisition (Hardon et al., 2018) or testing of new devices (Velasquez et al., 2016). The protocol for each exercise is described in Table 1.

Table 1. Protocol for each task of the ROSMA dataset

Post and sleeve
  Goal: To move the colored sleeves from side to side of the board.
  Starting position: The board is placed with the peg rows in a vertical position (from left to right: 4-2-2-4). The six sleeves are positioned over the 6 pegs on one of the sides of the board.
  Procedure: The subject has to take a sleeve with one hand, pass it to the other hand, and place it over a peg on the opposite side of the board. If a sleeve is dropped, it is considered a penalty and it cannot be taken back.
  Repetitions: Six trials: three from right to left, and the other three from left to right.
  Penalty: 15 penalty points if a sleeve is dropped.
  Score: Time in seconds + penalty points.

Pea on a peg
  Goal: To put the beads on the 14 pegs of the board.
  Starting position: All beads are in the cup.
  Procedure: The subject has to take the beads one by one out of the cup and place them on top of the pegs. For the trials performed with the right hand, the beads are placed on the right side of the board, and vice versa. If a bead is dropped, it is considered a penalty and it cannot be taken back.
  Repetitions: Six trials: three placing the beads on the pegs of the right side of the board, and the other three on the left side.
  Penalty: 15 penalty points when a bead is dropped.
  Score: Time in seconds + penalty points.

Wire chaser
  Goal: To move a ring from one side to the other side of the board.
  Starting position: The board is positioned with the text “one hand” in front. The three rings are at the right side of the board.
  Procedure: The subject has to pick one of the rings and pass it through the wire to the other side of the board. The subjects must use only one hand to move the ring, but they are allowed to help themselves with the other hand if needed. If the ring is dropped, it is considered a penalty but it must be taken back to complete the task.
  Repetitions: Six trials: three moving the rings from right to left, and the other three from left to right.
  Penalty: 10 penalty points when the ring is dropped.
  Score: Time in seconds + penalty points.

Note: A trial is the data corresponding to one subject performing one instance of a specific task.

Dataset structure

The ROSMA dataset is divided into three training tasks performed by twelve subjects. The experiments were carried out in accordance with the recommendations of our institution, with written informed consent from the subjects in accordance with the Declaration of Helsinki. Before starting the experiment, each subject was taught about the goal of the exercises and the error metrics. The overall length of the data recorded is 8 hours, 19 minutes and 40 seconds, and the total amount of kinematic data is around 1.5 million values for each parameter. The dataset contains 416 files divided as follows: 207 data files in csv (comma-separated values) format; 207 video data files in mp4 format; 1 file in csv format with the exercises evaluation, named ’scores.csv’; and 1 file, also in csv format, with the answers of the personal questionnaire, named ’User questionnaire - dvrk Dataset Experiment.csv’.

Table 2. Dataset summary: number of trials of each subject and exercise in the ROSMA dataset.

                        X01 X02 X03 X04 X05 X06 X07 X08 X09 X10 X11 X12 Total
Post and sleeve           6   6   5   5   5   6   6   6   4   6   6   4    65
Pea on a peg              6   6   6   6   6   6   6   6   5   6   6   6    71
Wire chaser               6   6   6   6   6   6   6   6   6   5   6   6    71
No. trials per subject   18  18  17  17  17  18  18  18  16  17  17  16   207

The names of the data and video files follow a three-field hierarchy: user identifier, task name and repetition number. The user identifier is unique for each user, and ranges from ’X01’ to ’X12’; the task name may be one of the following labels, depending on the task being performed: ’Pea on a Peg’, ’Post and Sleeve’, or ’Wire Chaser’; and the repetition number is that of the user in the current task, ranging from ’01’ to ’06’. For example, the file name ’X03 Pea on a Peg 04’ corresponds to the fourth trial of the user ’X03’ performing the task Pea on a Peg.
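As a minimal sketch of the file naming hierarchy and of the scoring rule in Table 1, the Python snippet below splits a trial name into its three fields and computes a score as time plus penalty points. The function names, the tolerance for either spaces or underscores as field separators, and the choice to accumulate the penalty once per dropped object are illustrative assumptions; they are not taken from the dataset tools.

```python
import re

# Task labels from the naming rules, with the penalty points listed in Table 1.
PENALTY_POINTS = {
    "Post and Sleeve": 15,
    "Pea on a Peg": 15,
    "Wire Chaser": 10,
}

# Assumption: fields may be separated by spaces or underscores, and an
# optional .csv or .mp4 extension is ignored.
_TRIAL_RE = re.compile(
    r"^(X(?:0[1-9]|1[0-2]))[ _](.+?)[ _](0[1-6])(?:\.(?:csv|mp4))?$"
)


def parse_trial_name(name):
    """Split a trial name into (user id, task label, repetition number)."""
    match = _TRIAL_RE.match(name)
    if match is None:
        raise ValueError(f"not a ROSMA-style trial name: {name!r}")
    user, task, repetition = match.groups()
    task = task.replace("_", " ")
    if task not in PENALTY_POINTS:
        raise ValueError(f"unknown task label: {task!r}")
    return user, task, int(repetition)


def trial_score(task, time_seconds, drops):
    """Score = time in seconds + penalty points (assumed to add up per drop)."""
    return time_seconds + PENALTY_POINTS[task] * drops


user, task, repetition = parse_trial_name("X03 Pea on a Peg 04")
print(user, task, repetition)
print(trial_score(task, time_seconds=120.0, drops=2))
```

Lower scores are better under this rule, since both a longer execution time and each dropped object increase the total.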

Table 3. Structure of the columns of the data files.

Indices  Number  Label                  Unit       ROS publisher
2-4      3       MTML position          m          /dvrk/MTML/position_cartesian_current/position
5-8      4       MTML orientation       radians    /dvrk/MTML/position_cartesian_current/orientation
9-11     3       MTML velocity linear   m/s        /dvrk/MTML/twist_body_current/linear
12-14    3       MTML velocity angular  radians/s  /dvrk/MTML/twist_body_current/angular
15-17    3       MTML wrench force      N          /dvrk/MTML/wrench_body_current/force
18-20    3       MTML wrench torque     N·m        /dvrk/MTML/wrench_body_current/torque
21-27    7       MTML joint position    radians    /dvrk/MTML/state_joint_current/position
28-34    7       MTML joint velocity    radians/s  /dvrk/MTML/state_joint_current/velocity
35-41    7       MTML joint effort      N          /dvrk/MTML/state_joint_current/effort
42-44    3       MTMR position          m          /dvrk/MTMR/position_cartesian_current/position
45-48    4       MTMR orientation       radians    /dvrk/MTMR/position_cartesian_current/orientation
49-51    3       MTMR velocity linear   m/s        /dvrk/MTMR/twist_body_current/linear
52-54    3       MTMR velocity angular  radians/s  /dvrk/MTMR/twist_body_current/angular
55-57    3       MTMR wrench force      N          /dvrk/MTMR/wrench_body_current/force
58-60    3       MTMR wrench torque     N·m        /dvrk/MTMR/wrench_body_current/torque
61-67    7       MTMR joint position    radians    /dvrk/MTMR/state_joint_current/position
68-74    7       MTMR joint velocity    radians/s  /dvrk/MTMR/state_joint_current/velocity
75-81    7       MTMR joint effort      N          /dvrk/MTMR/state_joint_current/effort
82-84    3       PSM1 position          m          /dvrk/PSM1/position_cartesian_current/position
85-88    4       PSM1 orientation       radians    /dvrk/PSM1/position_cartesian_current/orientation
89-91    3       PSM1 velocity linear   m/s        /dvrk/PSM1/twist_body_current/linear
92-94    3       PSM1 velocity angular  radians/s  /dvrk/PSM1/twist_body_current/angular
95-97    3       PSM1 wrench force      N          /dvrk/PSM1/wrench_body_current/force
98-100   3       PSM1 wrench torque     N·m        /dvrk/PSM1/wrench_body_current/torque
101-106  6       PSM1 joint position    radians    /dvrk/PSM1/state_joint_current/position
107-112  6       PSM1 joint velocity    radians/s  /dvrk/PSM1/state_joint_current/velocity
113-118  6       PSM1 joint effort      N          /dvrk/PSM1/state_joint_current/effort
119-121  3       PSM2 position          m          /dvrk/PSM2/position_cartesian_current/position
122-125  4       PSM2 orientation       radians    /dvrk/PSM2/position_cartesian_current/orientation
126-128  3       PSM2 velocity linear   m/s        /dvrk/PSM2/twist_body_current/linear
129-131  3       PSM2 velocity angular  radians/s  /dvrk/PSM2/twist_body_current/angular
132-134  3       PSM2 wrench force      N          /dvrk/PSM2/wrench_body_current/force
135-137  3       PSM2 wrench torque     N·m        /dvrk/PSM2/wrench_body_current/torque
138-143  6       PSM2 joint position    radians    /dvrk/PSM2/state_joint_current/position
144-149  6       PSM2 joint velocity    radians/s  /dvrk/PSM2/state_joint_current/velocity
150-155  6       PSM2 joint effort      N          /dvrk/PSM2/state_joint_current/effort
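The index ranges of Table 3 follow from the number of columns in each group, so they can be rebuilt programmatically. The Python sketch below does this under two assumptions: the groups appear in the order MTML, MTMR, PSM1, PSM2, and column 1 holds the timestamp. The names `JOINTS`, `GROUPS` and `column_ranges` are illustrative and are not part of the dataset tools.

```python
# Number of joint columns reported for each manipulator in Table 3.
JOINTS = {"MTML": 7, "MTMR": 7, "PSM1": 6, "PSM2": 6}

# (label suffix, number of columns); None means "one column per joint".
GROUPS = [
    ("position", 3),
    ("orientation", 4),
    ("velocity linear", 3),
    ("velocity angular", 3),
    ("wrench force", 3),
    ("wrench torque", 3),
    ("joint position", None),
    ("joint velocity", None),
    ("joint effort", None),
]


def column_ranges():
    """Return {label: (first, last)} with 1-based, inclusive column indices."""
    ranges = {}
    first = 2  # column 1 is assumed to hold the timestamp
    for arm, n_joints in JOINTS.items():
        for suffix, width in GROUPS:
            width = n_joints if width is None else width
            ranges[f"{arm} {suffix}"] = (first, first + width - 1)
            first += width
    return ranges


ranges = column_ranges()
for label in ("MTML position", "MTMR position", "PSM1 position", "PSM2 joint effort"):
    print(label, ranges[label])
```

The indices are 1-based, as in Table 3; for a zero-based reader such as a Python array slice, subtract one from the first index of each range.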

The summary of the number of trials performed by each user and task is shown in Table 2. Each user performed a total of six trials per task, but during the post-processing of the data, the authors found recording errors in some of them. That is the reason why there are some users with fewer trials in certain tasks. The full dataset is available for download†.

† https://zenodo.org/record/3932964#.Xx_ZQ54zaUk

Data files

The data files are in csv format and contain 155 columns: the first column, labelled as ’Date’, has the timestamp of each set of measures, and the other 154 columns have the kinematic data of the patient side manipulators (PSMs) and the master tool manipulators (MTMs). The structure of these 154 columns is described in Table 3, which shows the column indices for each kinematic motion, the number of columns, the descriptive label of each variable, the data units, and the ROS publishers of the data. The descriptive labels of the columns have the following format: .

The timestamp values have a precision of milliseconds, and are expressed in the format: Year-Month-Day.Hour:Minutes:Seconds.Milliseconds. As data has been recorded at 50 frames per second, the time step between rows is 20 ms.

Video files

Images have been recorded with a commercial webcam at 15 frames per second, with a resolution of 1024 x 768 pixels. The time of the internal clock of the computer recording the images is shown at the top right corner, with a precision of seconds. The webcam was placed in front of the dVRK system so that PSM1 is on the right side of the images, and PSM2 on the left side.

Exercises evaluation

The file ’scores.csv’ contains the evaluation of each exercise according to the scoring of Table 1. Thus, for each file name, it features the execution time of the task (in seconds), the number of errors, and the final score.

Personal questionnaire

After completing the experiment, participants were asked to fill in a form to collect personal data that could be useful for further studies and analysis of the data. The form has questions related to the following items: age, dominant hand, task preferences, medical background, previous

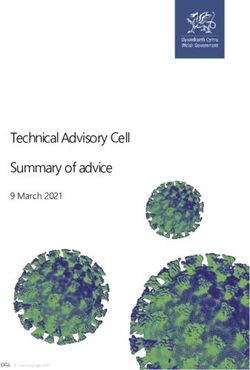

(a) MATLAB GUI (b) RVIZ Simulation

Figure 4. MATLAB GUI for reproducing the motion of the PSMs and the MTMs using the data provided by the dataset.

experience using the da Vinci or any teleoperated device, and Summary

hand-eye coordination skills. Questions demanding a level

The ROSMA dataset is a large surgical robotics collection

of expertise are multiple choice ranging from 1 (low) to 5

of data using the da Vinci Research Kit. The authors provide

(high).

a video showing the experimental setup used for collecting

the data, along with a demonstration of the performance of

Data synchronization the three exercises and the MATLAB GUI for visualizing

The kinematics data and the video data have been the data¶ . The main strength of our dataset versus the

recorded using two different computers, both running on JIGSAWS one (Gao et al. (2014)), is the large quantity

Ubuntu 16.04. The internal clocks of these computers of data recorded (155 kinematic variables, images, tasks

have been synchronized in a common time reference evaluation and questionnaire) and the higher number of users

using a Network Time Protocol (NTP) server. The internal (twelve instead of six). This high amount of data could

clocks synchronization has been repeated before starting the facilitate to advance in the field of artificial intelligence

experiment of a new user, if there was a break between users applied to the automation of tasks in surgical robotics, as well

higher than one hour. as surgical skills evaluation and gesture recognition.

Funding

Using the data

This work was partially supported by the European Commission

We provide MATLAB code that visualizes and replicates through the European Robotics Research Infrastructure Network

the motion of the four manipulators of the dVRK for a (TERRINet) under grant agreement 73099, and by the Andalusian

performance stored in the ROSMA dataset. This code has Regional Government, under grant number UMA18-FEDERJA-18.

been developed as the graphical user interface shown in

Figure 4(a). When the user browses a data file from the

References

ROSMA dataset, the code generates a mat file with all the

data of the file as a structure variable. Then, the user can Ahmidi N, Tao L, Sefati S, Gao Y, Lea C, Haro BB, Zappella L,

play the data by using the GUI buttons, which also allow to Khudanpur S, Vidal R and Hager GD (2017) A Dataset and

pause the reproduction or stop it. The time slider shows the Benchmarks for Segmentation and Recognition of Gestures

time progress of the reproduction, and at the top-right side, in Robotic Surgery. IEEE Transactions on Biomedical

the current timestamp and data frame are also displayed. Engineering 64(9): 2025–2041. DOI:10.1109/TBME.2016.

The GUI also gives the option of reproducing the data 2647680.

in ROS. If the checkbox ROS is on, the joint configuration Chen Z, Deguet A, Taylor RH and Kazanzides P (2017) Software

of each manipulator is sent through the corresponding ROS architecture of the da vinci research kit. In: Proceedings - 2017

topics: /dvrk//set position goal joint. Thus, if the 1st IEEE International Conference on Robotic Computing, IRC

ROS package of the dVRK (dvrk-ros) is running, the real 2017. Institute of Electrical and Electronics Engineers Inc.

platform or the simulated one using the visualization tool

RVIZ, the system will replicate the motion performed during

the data file trial. The dVRK package can be downloaded ‡ https://github.com/jhu-dvrk/dvrk-ros

from GitHub‡ , as well as our MATLAB code § . This package § https://github.com/SurgicalRoboticsUMA/rosma_

includes the MATLAB GUI and a folder with all the files dataset

required to launch the dVRK package with the configuration ¶ https://www.youtube.com/watch?v=gEtWMc5EkiA&

used during the data collection. feature=youtu.be

ISBN 9781509067237, pp. 180–187. DOI:10.1109/IRC.2017.69.

Fontanelli GA, Ficuciello F, Villani L and Siciliano B (2017) Modelling and identification of the da Vinci Research Kit robotic arms. In: IEEE International Conference on Intelligent Robots and Systems, volume 2017-Septe. Institute of Electrical and Electronics Engineers Inc. ISBN 9781538626825, pp. 1464–1469. DOI:10.1109/IROS.2017.8205948.

Gao Y, Swaroop Vedula S, Reiley CE, Ahmidi N, Varadarajan B, Lin HC, Tao L, Zappella L, Béjar B, Yuh DD, Chiung C, Chen G, Vidal R, Khudanpur S and Hager GD (2014) JHU-ISI Gesture and Skill Assessment Working Set (JIGSAWS): A Surgical Activity Dataset for Human Motion Modeling. In: MICCAI Workshop: Modeling and Monitoring of Computer Assisted Interventions (M2CAI). Boston, MA.

Hardon SF, Horeman T, Bonjer HJ and Meijerink WJ (2018) Force-based learning curve tracking in fundamental laparoscopic skills training. Surgical Endoscopy 32(8): 3609–3621. DOI:10.1007/s00464-018-6090-7.

Kazanzides P, Chen Z, Deguet A, Fischer GS, Taylor RH and Dimaio SP (2014) An Open-Source Research Kit for the da Vinci® Surgical System. In: IEEE International Conference on Robotics & Automation (ICRA). Hong Kong, China. ISBN 9781479936854, pp. 6434–6439.

Pérez-del Pulgar CJ, Smisek J, Rivas-Blanco I, Schiele A and Muñoz VF (2019) Using Gaussian Mixture Models for Gesture Recognition During Haptically Guided Telemanipulation. Electronics 8(7): 772. DOI:10.3390/electronics8070772.

Vedula SS and Hager GD (2020) Surgical data science: The new knowledge domain. Innovative Surgical Sciences 2(3): 109–121. DOI:10.1515/iss-2017-0004.

Velasquez CA, Navkar NV, Alsaied A, Balakrishnan S, Abinahed J, Al-Ansari AA and Jong Yoon W (2016) Preliminary design of an actuated imaging probe for generation of additional visual cues in a robotic surgery. Surgical Endoscopy 30(6): 2641–2648. DOI:10.1007/s00464-015-4270-2.