Reasoning Over Semantic-Level Graph for Fact Checking

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Reasoning Over Semantic-Level Graph for Fact Checking

Wanjun Zhong1∗, Jingjing Xu3∗ , Duyu Tang2 , Zenan Xu1 , Nan Duan2 , Ming Zhou2

Jiahai Wang1 and Jian Yin1

1

The School of Data and Computer Science, Sun Yat-sen University.

Guangdong Key Laboratory of Big Data Analysis and Processing, Guangzhou, P.R.China

2

Microsoft Research 3 MOE Key Lab of Computational Linguistics, School of EECS, Peking University

{zhongwj25@mail2,xuzn@mail2,wangjiah@mail,issjyin@mail}.sysu.edu.cn

{dutang,nanduan,mingzhou}@microsoft.com

jingjingxu@pku.edu.cn

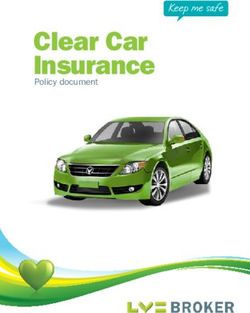

Abstract Claim: The Rodney King riots took place in the most populous county in the USA.

Fact knowledge

Fact checking is a challenging task because extracted from

1

evidence sentences

5

arXiv:1909.03745v3 [cs.CL] 25 Apr 2020

verifying the truthfulness of a claim requires

reasoning about multiple retrievable evidence. Evidence #1:

The 1992 Los Angeles riots, also known as the Rodney King riots were a series of riots, lootings,

In this work, we present a method suitable arsons, and civil disturbances that occurred in Los Angeles County, California in April and May

1992. 2

for reasoning about the semantic-level struc- 3

Evidence #2:

ture of evidence. Unlike most previous works, Los Angeles County, officially the County of Los Angeles, is the most populous county in the USA.

4

which typically represent evidence sentences

with either string concatenation or fusing the

features of isolated evidence sentences, our ap- Figure 1: A motivating example for fact checking and

proach operates on rich semantic structures of the FEVER task. Verifying the claim requires under-

evidence obtained by semantic role labeling. standing the semantic structure of multiple evidence

We propose two mechanisms to exploit the sentences and the reasoning process over the structure.

structure of evidence while leveraging the ad-

vances of pre-trained models like BERT, GPT

or XLNet. Specifically, using XLNet as the than the truth. The situation is more urgent as ad-

backbone, we first utilize the graph structure to vanced pre-trained language models (Radford et al.,

re-define the relative distances of words, with 2019) can produce remarkably coherent and fluent

the intuition that semantically related words texts, which lowers the barrier for the abuse of cre-

should have short distances. Then, we adopt ating deceptive content. In this paper, we study fact

graph convolutional network and graph atten-

checking with the goal of automatically assessing

tion network to propagate and aggregate infor-

mation from neighboring nodes on the graph. the truthfulness of a textual claim by looking for

We evaluate our system on FEVER, a bench- textual evidence.

mark dataset for fact checking, and find that Previous works are dominated by natural lan-

rich structural information is helpful and both guage inference models (Dagan et al., 2013; An-

our graph-based mechanisms improve the ac- geli and Manning, 2014) because the task requires

curacy. Our model is the state-of-the-art sys-

reasoning of the claim and retrieved evidence sen-

tem in terms of both official evaluation met-

rics, namely claim verification accuracy and

tences. They typically either concatenate evidence

FEVER score. sentences into a single string, which is used in top

systems in the FEVER challenge (Thorne et al.,

1 Introduction 2018b), or use feature fusion to aggregate the fea-

tures of isolated evidence sentences (Zhou et al.,

Internet provides an efficient way for individuals 2019). However, both methods fail to capture rich

and organizations to quickly spread information semantic-level structures among multiple evidence,

to massive audiences. However, malicious people which also prevents the use of deeper reasoning

spread false news, which may have significant in- model for fact checking. In Figure 1, we give a

fluence on public opinions, stock prices, even presi- motivating example. Making the correct prediction

dential elections (Faris et al., 2017). Vosoughi et al. requires a model to reason based on the understand-

(2018) show that false news reaches more people ing that “Rodney King riots” is occurred in “Los

∗

Work done while this author was an intern at Microsoft Angeles County” from the first evidence, and that

Research. “Los Angeles County” is “the most populous countyin the USA” from the second evidence. It is there- 2 Task Definition and Pipeline

fore desirable to mine the semantic structure of

With a textual claim given as the input, the prob-

evidence and leverage it to verify the truthfulness

lem of fact checking is to find supporting evidence

of the claim.

sentences to verify the truthfulness of the claim.

Under the aforementioned consideration, we We conduct our research on FEVER (Thorne

present a graph-based reasoning approach for fact et al., 2018a), short for Fact Extraction and VER-

checking. With a given claim, we represent the re- ification, a benchmark dataset for fact checking.

trieved evidence sentences as a graph, and then use Systems are required to retrieve evidence sentences

the graph structure to guide the reasoning process. from Wikipedia, and predict the claim as “SUP-

Specifically, we apply semantic role labeling (SRL) PORTED ”, “REFUTED ” or “NOT ENOUGH

to parse each evidence sentence, and establish links INFO (NEI) ”, standing for that the claim is sup-

between arguments to construct the graph. When ported by the evidence, refuted by the evidence,

developing the reasoning approach, we intend to and is not verifiable, respectively. There are two

simultaneously leverage rich semantic structures official evaluation metrics in FEVER. The first is

of evidence embodied in the graph and powerful the accuracy for three-way classification. The sec-

contextual semantics learnt in pre-trained model ond is FEVER score, which further measures the

like BERT (Devlin et al., 2018), GPT (Radford percentage of correct retrieved evidence for “SUP-

et al., 2019) and XLNet (Yang et al., 2019). ToOur Pipeline

PORTED ” and “REFUTED ” categories. Both the

achieve this, we first re-define the distance between statistic of FEVER dataset and the equation for

words based on the graph structure when producing calculating FEVER score are given in Appendix B.

contextual representations of words. Furthermore,

claim

we adopt graph convolutional network and graph

attention network to propagate and aggregate infor-

Document Selection Claim Verification

mation over the graph structure. In this way, the

reasoning process employs semantic representa-

documents SUPPORTED | REFUTED | NOTENOUGHINFO

tions at both word/sub-word level and graph level.

We conduct experiments on FEVER (Thorne Sentence Selection

et al., 2018a), which is one of the most influen-

tial benchmark datasets for fact checking. FEVER sentences evidence

consists of 185,445 verified claims, and evidence

sentences for each claim are natural language sen-

Figure 2: Our pipeline for fact checking on FEVER.

tences from Wikipedia. We follow the official eval-

The main contribution of this work is a graph-based

uation protocol of FEVER, and demonstrate that reasoning model for claim verification.

our approach achieves state-of-the-art performance

in terms of both claim classification accuracy and Here, we present an overview of our pipeline for

FEVER score. Ablation study shows that the in- FEVER, which follows the majority of previous

tegration of graph-driven representation learning studies. Our pipeline consists of three main compo-

mechanisms improves the performance. We briefly nents: a document retrieval model, a sentence-level

summarize our contributions as follows. evidence selection model, and a claim verification

model. Figure 2 gives an overview of the pipeline.

With a given claim, the document retrieval model

• We propose a graph-based reasoning approach retrieves the most related documents from a given

for fact checking. Our system apply SRL to collection of Wikipedia documents. With retrieved

construct graphs and present two graph-driven documents, the evidence selection model selects

representation learning mechanisms. top-k related sentences as the evidence. Finally,

the claim verification model takes the claim and

evidence sentences and outputs the veracity of the

• Results verify that both graph-based mech- claim.

anisms improve the accuracy, and our final The main contribution of this work is the graph-

system achieves state-of-the-art performance based reasoning approach for claim verification,

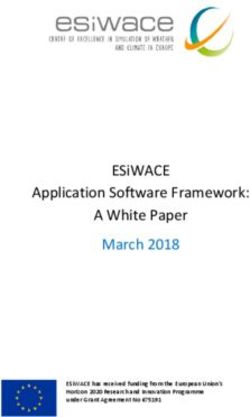

on the FEVER dataset. which is explained detailedly in Section 3. OurSRL results with verb “occurred”

ARG1

SRL results with verb “known”

riots, lootings, arsons, and

ADVERBIAL ARG1 civil disturbances

Evidence #1: as the Rodney

also

King riots

The 1992 Los Angeles riots, occurred

also known as the Rodney

King riots were a series of The 1992 Los VERB

riots, lootings, arsons, and known In Los Angeles in April and

Angeles riots

civil disturbances that VERB ARG2 County, California May 1992

occurred in Los Angeles LOCATION TEMPORAL

County, California in April Graph

and May 1992. Construction SRL results with verb “is”

ARG2

Evidence #2: VERB the most populous county in the USA

Los Angeles County, is

officially the County of Los

Angeles, is the most Los Angeles County, officially the County of Los Angeles

populous county in the USA. ARG1

Figure 3: The constructed graph for the motivating example with two evidence sentences. Each box describes

a “tuple” which is extracted by SRL triggered by a verb. Blue solid lines indicate edges that connect arguments

within a tuple and red dotted lines indicate edges that connect argument across different tuples.

strategies for document selection and evidence se- ways to construct the graph, such as open informa-

lection are described in Section 4. tion extraction (Banko et al., 2007), named entity

recognition plus relation classification, sequence-

3 Graph-Based Reasoning Approach to-sequence generation which is trained to produce

structured tuples (Goodrich et al., 2019), etc. In this

In this section, we introduce our graph-based rea-

work, we adopt a practical and flexible way based

soning approach for claim verification, which is

on semantic role labeling (Carreras and Màrquez,

the main contribution of this paper. Taking a claim

2004). Specifically, with the given evidence sen-

and retrieved evidence sentences1 as the input, our

tences, our graph construction operates in the fol-

approach predicts the truthfulness of the claim. For

lowing steps.

FEVER, it is a three-way classification problem,

which predicts the claim as “SUPPORTED ”, “RE- • For each sentence, we parse it to tuples2 with

FUTED ” or “NOT ENOUGH INFO (NEI) ”. an off-the-shelf SRL toolkit developed by Al-

The basic idea of our approach is to employ the lenNLP3 , which is a re-implementation of a

intrinsic structure of evidence to assess the truthful- BERT-based model (Shi and Lin, 2019).

ness of the claim. As shown in the motivating exam-

ple in Figure 1, making the correct prediction needs • For each tuple, we regard its elements with

good understanding of the semantic-level structure certain types as the nodes of the graph. We

of evidence and the reasoning process based on heuristically set those types as verb, argument,

that structure. In this section, we first describe location and temporal, which can also be eas-

our graph construction module (§3.1). Then, we ily extended to include more types. We create

present how to apply graph structure for fact check- edges for every two nodes within a tuple.

ing, including a contextual representation learning

• We create edges for nodes across different

mechanism with graph-based distance calculation

tuples to capture the structure information

(§3.2), and graph convolutional network and graph

among multiple evidence sentences. Our idea

attention network to propagate and aggregate infor-

is to create edges for nodes that are literally

mation over the graph (§3.3 and §3.4).

similar with each other. Assuming entity A

3.1 Graph Construction and entity B come from different tuples, we

add one edge if one of the following condi-

Taking evidence sentences as the input, we would tions is satisfied: (1) A equals B; (2) A con-

like to build a graph to reveal the intrinsic structure tains B; (3) the number of overlapped words

of these evidence. There might be many different

2

A sentence could be parsed as multiple tuples.

1 3

Details about how to retrieve evidence for a claim are https://demo.allennlp.org/

described in Section 4. semantic-role-labelingin the most populous

county in the USA

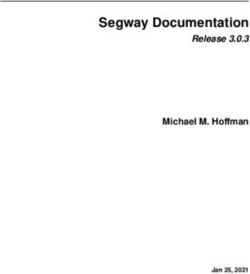

Graph

The Rodney

claim Convolutional

King riots take place

… Network

[SEP]

XLNet in Los Angeles Graph

… output

with The 1992 Los County, California

… Attention

sentence 1 Graph Angeles riots …

… Distance Graph as the Rodney Los Angeles

Convolutional King riots

is County,

sentence 2 Network officially …

… known

the most populous

also

county in the USA.

Figure 4: An overview of our graph-based reasoning approach for claim verification. Taking a claim and evidence

sentences as the input, we first calculate contextual word representations with graph-based distance (§3.2). After

that, we use graph convolutional network to propagate information over the graph (§3.3), and use graph attention

network to aggregate information (§3.4) before making the final prediction.

between A and B is larger than the half of the representation learning procedure will take huge

minimum number of words in A and B. memory space, which is also observed by Shaw

Figure 3 shows the constructed graph of the evi- et al. (2018).

dence in the motivating example. In order to obtain In this work, we adopt pre-trained model XL-

the structure information of the claim, we use the Net (Yang et al., 2019) as the backbone of our

same pipeline to represent a claim as a graph as approach because it naturally involves the concept

well. of relative position5 . Pre-trained model captures

Our graph construction module offers an ap- rich contextual representations of words, which is

proach on modeling structure of multiple evidence, helpful for our task which requires sentence-level

which could be further developed in the future. reasoning. Considering the aforementioned issues,

we implement an approximate solution to trade

3.2 Contextual Word Representations with

off between the efficiency of implementation and

Graph Distance

the informativeness of the graph. Specifically, we

We describe the use of graph for learning graph- reorder evidence sentences with a topology sort al-

enhanced contextual representations of words4 . gorithm with the intuition that closely linked nodes

Our basic idea is to shorten the distance be- should exist in neighboring sentences. This would

tween two semantically related words on the graph, prefer that neighboring sentences contain either

which helps to enhance their relationship when parent nodes or sibling nodes, so as to better cap-

we calculate contextual word representations with ture the semantic relatedness between different evi-

a Transformer-based (Vaswani et al., 2017) pre- dence sentences. We present our implementation

trained model like BERT and XLNet. Suppose in Appendix A. The algorithm begins from nodes

we have five evidence sentences {s1 , s2 , ... s5 } without incident relations. For each node with-

and the word w1i from s1 and the word w5j from out incident relations, we recursively visit its child

s5 are connected on the graph, simply concatenat- nodes in a depth-first searching way.

ing evidence sentences as a single string fails to

capture their semantic-level structure, and would After obtaining graph-based relative position of

give a large distance to w1i and w5j , which is the words, we feed the sorted sequence into XLNet

number of words between them across other three to obtain the contextual representations. Mean-

sentences (i.e., s2 , s3 , and s4 ). An intuitive way while, we obtain the representation h([CLS]) for

to achieve our goal is to define an N × N matrix a special token [CLS], which stands for the joint

of distances of words along the graph, where N is representation of the claim and the evidence in

the total number of words in the evidence. How- Transformer-based architecture.

ever, this is unacceptable in practice because the

4

In Transformer-based representation learning pipeline,

5

the basic computational unit can also be word-piece. For Our approach can also be easily adapted to BERT by

simplicity, we use the term “word” in this paper. adding relative position like Shaw et al. (2018).3.3 Graph Convolutional Network The graph learning mechanism will be per-

We have injected the graph information in Trans- formed separately for claim-based and evidence-

former and obtained h([CLS]), which captures the based graph. Therefore, we denote Hc and He

semantic interaction between the claim and the evi- as the representations of all nodes in claim-based

dence at word level 6 . As shown in our motivating graph and evidence-based graphs, respectively. Af-

example in Figure 1 and the constructed graph in terwards, we utilize the graph attention network to

Figure 3, the reasoning process needs to operate align the graph-level node representation learned

on span/argument-level, where the basic computa- for two graphs before making the final prediction.

tional unit typically consists of multiple words like 3.4 Graph Attention Network

“Rodney King riots” and “the most popular county

We explore the related information between two

in the USA”.

graphs and make semantic alignment for final pre-

To further exploit graph information beyond v v

diction. Let He ∈ RNe ×d and Hc ∈ RNc ×d

word level, we first calculate the representation

denote matrices containing representations of all

of a node, which is a word span in the graph, by

nodes in evidence-based and claim-based graph re-

averaging the contextual representations of words

spectively, where Nev and Ncv denote number of

contained in the node. After that, we employ multi-

nodes in the corresponding graph.

layer graph convolutional network (GCNs) (Kipf

We first employ a graph attention mechanism

and Welling, 2016) to update the node represen-

(Veličković et al., 2017) to generate a claim-specific

tation by aggregating representations from their

evidence representation for each node in claim-

neighbors on the graph. Formally, we denote G as

based graph. Specifically, we first take each hic ∈

the graph constructed by the previous graph con-

v Hc as query, and take all node representations hje ∈

struction method and make H ∈ RN ×d a matrix

He as keys. We then perform graph attention on

containing representation of all nodes, where N v

the nodes, an attention mechanism a : Rd × Rd →

and d denote the number of nodes and the dimen-

R to compute attention coefficient as follows:

sion of node representations, respectively. Each

row Hi ∈ Rd is the representation of node i. We eij = a(Wc hic , We hje ) (3)

introduce an adjacency matrix A of graph G and

which means the importance of evidence node j to

its degree matrix D, where

P we add self-loops to the claim node i. Wc ∈ RF ×d and We ∈ RF ×d

matrix A and Dii = j Aij . One-layer GCNs is the weight matrix and F is the dimension of

will aggregate information through one-hop edges,

attention feature. We use the dot-product function

which is calculated as follows:

as a here. We then normalize eij using the softmax

Hi

(1)

= ρ(AH

e i W0 ), (1) function:

exp(eij )

(1) αij = sof tmaxj (eij ) = P (4)

where Hi ∈ Rd is the new d-dimension represen- k∈Nev exp(eik )

e = D− 12 AD− 12 is the normal-

tation of node i, A After that, we calculate a claim-centric evidence

ized symmetric adjacency matrix, W0 is a weight representation X = [x1 , . . . , xNcv ] using the

matrix, and ρ is an activation function. To exploit weighted sum over He :

information from the multi-hop neighboring nodes, X

we stack multiple GCNs layers: xi = αij hje (5)

j∈Nev

(j+1) e (j) Wj ),

Hi = ρ(AHi (2) We then perform node-to-node alignment and cal-

culate aligned vectors A = [a1 , . . . , aNcv ] by

where j denotes the layer number and Hi0 is the the claim node representation H c and the claim-

initial representation of node i initialized from the centric evidence representation X,

contextual representation. We simplify H (k) as H

for later use, where H indicates the representation ai = falign (hic , xi ), (6)

of all nodes updated by k-layer GCNs. where falign () denotes the alignment function. In-

6

By “word” in “word-level”, we mean the basic computa- spired by Shen et al. (2018), we design our align-

tional unit in XLNet, and thus h([CLS]) capture the sophis- ment function as:

ticated interaction between words via multi-layer multi-head

attention operations. falign (x, y) = Wa [x, y, x − y, x y], (7)where Wa ∈ Rd×4∗d is a weight matrix and is and SEP and CLS are symbols indicating end-

element-wise Hadamard product. The final output ing of a sentence and ending of a whole input, re-

g is obtained by the mean pooling over A. We spectively. The final representation hcei ∈ Rd is

then feed the concatenated vector of g and the final obtained via extracting the hidden vector of the

hidden vector h([CLS]) from XLNet through a [CLS] token.

MLP layer for the final prediction. After that, we employ an MLP layer and a soft-

max layer to compute score s+ cei for each evidence

4 Document Retrieval and Evidence candidate. Then, we rank all the evidence sentences

Selection by score s+cei . The model is trained on the training

In this section, we briefly describe our document re- data with a standard cross-entropy loss. Following

trieval and evidence selection components to make the official setting in FEVER, we select top 5 evi-

the paper self contained. dence sentences. The performance of our evidence

selection model is shown in Appendix C.

4.1 Document Retrieval

The document retrieval model takes a claim and 5 Experiments

a collection of Wikipedia documents as the input,

and returns m most relevant documents. We evaluate on FEVER (Thorne et al., 2018a),

We mainly follow Nie et al. (2019), the top- a benchmark dataset for fact extraction and ver-

performing system on the FEVER shared task ification. Each instance in FEVER dataset con-

(Thorne et al., 2018b). The document retrieval sists of a claim, groups of ground-truth evi-

model first uses keyword matching to filter candi- dence from Wikipedia and a label (i.e., “SUP-

date documents from the massive Wikipedia docu- PORTED ”, “REFUTED ” or “NOT ENOUGH

ments. Then, NSMN (Nie et al., 2019) is applied INFO (NEI) ”), indicating its veracity. FEVER

to handle the documents with disambiguation titles, includes a dump of Wikipedia, which contains

which are 10% of the whole documents. Docu- 5,416,537 pre-processed documents. The two of-

ments without disambiguation title are assigned ficial evaluation metrics of FEVER are label ac-

with higher scores in the resulting list. The input curacy and FEVER score, as described in Section

to the NSMN model includes the claim and can- 2. Label accuracy is the primary evaluation metric

didate documents with disambiguation title. At a we apply for our experiments because it directly

high level, NSMN model has encoding, alignment, measures the performance of the claim verification

matching and output layers. Readers who are in- model. We also report FEVER score for compar-

terested are recommended to refer to the original ison, which measures whether both the predicted

paper for more details. label and the retrieved evidence are correct. No

Finally, we select top 10 documents from the evidence is required if the predicted label is NEI.

resulting list.

5.1 Baselines

4.2 Sentence-Level Evidence Selection We compare our system to the following baselines,

Taking a claim and all the sentences from retrieved including three top-performing systems on FEVER

documents as the input, evidence selection model shared task, a recent work GEAR (Zhou et al.,

returns the top k most relevant sentences. 2019), and a concurrent work by Liu et al. (2019b).

We regard evidence selection as a semantic

matching problem, and leverage rich contextual • Nie et al. (2019) employ a semantic matching

representations embodied in pre-trained models neural network for both evidence selection

like XLNet (Yang et al., 2019) and RoBERTa (Liu and claim verification.

et al., 2019a) to measure the relevance of a claim

to every evidence candidate. Let’s take XLNet as • Yoneda et al. (2018) infer the veracity of each

an example. The input of the sentence selector is claim-evidence pair and make final prediction

by aggregating multiple predicted labels.

cei = [Claim, SEP, Evidencei , SEP, CLS]

where Claim and Evidencei indicate tokenized • Hanselowski et al. (2018) encode each claim-

word-pieces of original claim and ith evidence can- evidence pair separately, and use a pooling

didate, d denotes the dimension of hidden vector, function to aggregate features for prediction.Label FEVER The last row in Table 2 corresponds to the base-

Method

Acc (%) Score (%) line where all the evidence sentences are simply

Hanselowski et al. (2018) 65.46 61.58 concatenated as a single string, where no explicit

Yoneda et al. (2018) 67.62 62.52 graph structure is used at all for fact verification.

Nie et al. (2019) 68.21 64.21

GEAR (Zhou et al., 2019) 71.60 67.10 Model Label Accuracy

KGAT (Liu et al., 2019b) 72.81 69.40 DREAM 79.16

DREAM (our approach) 76.85 70.60 -w/o Relative Distance 78.35

-w/o GCN&GAN 77.12

Table 1: Performance on the blind test set on FEVER. -w/o both above modules 75.40

Our approach is abbreviated as DREAM.

Table 2: Ablation study on develop set.

• GEAR (Zhou et al., 2019) uses BERT to ob-

As shown in Table 2, compared to the XLNet

tain claim-specific representation for each evi-

baseline, incorporating both graph-based modules

dence sentence, and applies graph network by

brings 3.76% improvement on label accuracy. Re-

regarding each evidence sentence as a node in

moving the graph-based distance drops 0.81% in

the graph.

terms of label accuracy. The graph-based distance

• KGAT (Liu et al., 2019b) is concurrent with mechanism can shorten the distance of two closely-

our work, which regards sentences as the linked nodes and help the model to learn their

nodes of a graph and uses Kernel Graph At- dependency. Removing the graph-based reason-

tention Network to aggregate information. ing module drops 2.04% because graph reason-

ing module captures the structural information and

5.2 Model Comparison performs deep reasoning about that. Figure 5 gives

a case study of our approach.

Table 1 reports the performance of our model and

baselines on the blind test set with the score showed 5.4 Error Analysis

on the public leaderboard7 . As shown in Table 1,

We randomly select 200 incorrectly predicted in-

in terms of label accuracy, our model significantly

stances and summarize the primary types of errors.

outperforms previous systems with 76.85% on the

The first type of errors is caused by failing to

test set. It is worth noting that, our approach, which

match the semantic meaning between phrases that

exploits explicit graph-level semantic structure of

describe the same event. For example, the claim

evidence obtained by SRL, outperforms GEAR

states “Winter’s Tale is a book”, while the evi-

and KGAT, both of which regard sentences as the

dence states “Winter ’s Tale is a 1983 novel by

nodes and use model to learn the implicit structure

Mark Helprin”. The model fails to realize that

of evidence 8 . By the time our paper is submitted,

“novel” belongs to “book” and states that the claim

our system achieves state-of-the-art performance

is refuted. Solving this type of errors needs to in-

in terms of both evaluation metrics on the leader-

volve external knowledge (e.g. ConceptNet (Speer

board.

et al., 2017)) that can indicate logical relationships

5.3 Ablation Study between different events.

The misleading information in the retrieved evi-

Table 2 presents the label accuracy on the develop- dence causes the second type of errors. For exam-

ment set after eliminating different components (in- ple, the claim states “The Gifted is a movie”, and

cluding the graph-based relative distance (§3.2) and the ground-truth evidence states “The Gifted is an

graph convolutional network and graph attention upcoming American television series”. However,

network (§3.3 and §3.4) separately in our model. the retrieved evidence also contains “The Gifted is

7

The public leaderboard for perpetual evaluation of a 2014 Filipino dark comedy-drama movie”, which

FEVER is https://competitions.codalab.org/ misleads the model to make the wrong judgment.

competitions/18814#results. DREAM is our user

name on the leaderboard.

8

We don’t overclaim the superiority of our system to

6 Related Work

GEAR and KGAT only comes from the explicit graph struc-

ture, because we have differences in other components like In general, fact checking involves assessing the

sentence selection and the pre-trained model. truthfulness of a claim. In literature, a claim can beText: Congressional Space Medal of Honor is the tion phase, participants typically extract named en-

highest award given only to astronauts by NASA.

Tuples: ('Congressional Space Medal of Honor', 'is',

tities from a claim as the query and use Wikipedia

Claim 'the highest award given only to astronauts by search API. In the evidence selection phase, partici-

NASA’) pants measure the similarity between the claim and

('the highest award’, 'given','only', 'to astronauts',

'by NASA') an evidence sentence candidate by training a classi-

Text: The highest award given by NASA , fication model like Enhanced LSTM (Chen et al.,

Congressional Space Medal of Honor is awarded by 2016) in a supervised setting or using string simi-

the President of the United States in Congress 's

name on recommendations from the Administrator larity function like TFIDF without trainable param-

Evidence #1 of the National Aeronautics and Space eters. Padia et al. (2018) utilizes semantic frames

Administration .

Tuples: ('The highest award','given','by NASA’) for evidence selection. In this work, our focus is

('Congressional Space Medal of Honor','awarded','by the claim classification phase. Top-ranked three

the President of the United States')

systems aggregate pieces of evidence through con-

Text: To be awarded the Congressional Space Medal

of Honor , an astronaut must perform feats of catenating evidence sentences into a single string

extraordinary accomplishment while participating in (Nie et al., 2019), classifying each evidence-claim

space flight under the authority of NASA .

Tuples: ('awarded', 'the Congressional Space Medal pair separately, merging the results (Yoneda et al.,

Evidence #2 of Honor’) 2018), and encoding each evidence-claim pair fol-

('To be awarded the Congressional Space Medal of

Honor',’an astronaut','perform','feats of

lowed by pooling operation (Hanselowski et al.,

extraordinary accomplishment’) 2018). Zhou et al. (2019) are the first to use BERT

('an astronaut', 'participating','in space flight','under

the authority of NASA' )

to calculate claim-specific evidence sentence rep-

resentations, and then develop a graph network to

Figure 5: A case study of our approach. Facts shared aggregate the information on top of BERT, regard-1

across the claim and the evidence are highlighted with ing each evidence as a node in the graph. Our work

different colors. differs from Zhou et al. (2019) in that (1) the con-

struction of our graph requires understanding the

syntax of each sentence, which could be viewed as

a text or a subject-predicate-object triple (Nakas- a more fine-grained graph, and (2) both the contex-

hole and Mitchell, 2014). In this work, we only tual representation learning module and the reason-

consider textual claims. Existing datasets differ ing module have model innovations of taking the

from data source and the type of supporting ev- graph information into consideration. Instead of

idence for verifying the claim. An early work training each component separately, Yin and Roth

by Vlachos and Riedel (2014) constructs 221 la- (2018) show that joint learning could improve both

beled claims in the political domain from POLITI- claim verification and evidence selection.

FACT.COM and CHANNEL4.COM, giving meta-

data of the speaker as the evidence. POLIFACT is 7 Conclusion

further investigated by following works, including

Ferreira and Vlachos (2016) who build Emergent In this work, we present a graph-based approach

with 300 labeled rumors and about 2.6K news ar- for fact checking. When assessing the veracity of a

ticles, Wang (2017) who builds LIAR with 12.8K claim giving multiple evidence sentences, our ap-

annotated short statements and six fine-grained la- proach is built upon an automatically constructed

bels, and Rashkin et al. (2017) who collect claims graph, which is derived based on semantic role la-

without meta-data while providing 74K news ar- beling. To better exploit the graph information, we

ticles. We study FEVER (Thorne et al., 2018a), propose two graph-based modules, one for calculat-

which requires aggregating information from multi- ing contextual word embeddings using graph-based

ple pieces of evidence from Wikipedia for making distance in XLNet, and the other for learning repre-

the conclusion. FEVER contains 185,445 anno- sentations of graph components and reasoning over

tated instances, which to the best of our knowledge the graph. Experiments show that both graph-based

is the largest benchmark dataset in this area. modules bring improvements and our final system

The majority of participating teams in the is the state-of-the-art on the public leaderboard by

FEVER challenge (Thorne et al., 2018b) use the the time our paper is submitted.

same pipeline consisting of three components, Evidence selection is an important component

namely document selection, evidence sentence se- of fact checking as finding irrelevant evidence may

lection, and claim verification. In document selec- lead to different predictions. A potential solutionis to jointly learn evidence selection and claim ver- Ben Goodrich, Vinay Rao, Peter J Liu, and Moham-

ification model, which we leave as a future work. mad Saleh. 2019. Assessing the factual accuracy

of generated text. In Proceedings of the 25th ACM

SIGKDD International Conference on Knowledge

Acknowledge Discovery & Data Mining, pages 166–175. ACM.

Wanjun Zhong, Zenan Xu, Jiahai Wang and Andreas Hanselowski, Hao Zhang, Zile Li, Daniil

Jian Yin are supported by the National Natu- Sorokin, Benjamin Schiller, Claudia Schulz, and

ral Science Foundation of China (U1711262, Iryna Gurevych. 2018. Ukp-athene: Multi-sentence

U1611264,U1711261,U1811261,U1811264, textual entailment for claim verification. arXiv

preprint arXiv:1809.01479.

U1911203), National Key R&D Program of

China (2018YFB1004404), Guangdong Ba- Thomas N Kipf and Max Welling. 2016. Semi-

sic and Applied Basic Research Foundation supervised classification with graph convolutional

(2019B1515130001), Key R&D Program of networks. arXiv preprint arXiv:1609.02907.

Guangdong Province (2018B010107005). The Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Man-

corresponding author is Jian Yin. dar Joshi, Danqi Chen, Omer Levy, Mike Lewis,

Luke Zettlemoyer, and Veselin Stoyanov. 2019a.

Roberta: A robustly optimized bert pretraining ap-

References proach. arXiv preprint arXiv:1907.11692.

Gabor Angeli and Christopher D Manning. 2014. Natu- Zhenghao Liu, Chenyan Xiong, and Maosong Sun.

ralli: Natural logic inference for common sense rea- 2019b. Kernel graph attention network for fact veri-

soning. In Proceedings of the 2014 conference on fication. arXiv preprint arXiv:1910.09796.

empirical methods in natural language processing

(EMNLP), pages 534–545. Ndapandula Nakashole and Tom M Mitchell. 2014.

Language-aware truth assessment of fact candidates.

Michele Banko, Michael J Cafarella, Stephen Soder- In Proceedings of the 52nd Annual Meeting of the

land, Matthew Broadhead, and Oren Etzioni. 2007. Association for Computational Linguistics (Volume

Open information extraction from the web. In Ijcai, 1: Long Papers), pages 1009–1019.

volume 7, pages 2670–2676.

Yixin Nie, Haonan Chen, and Mohit Bansal. 2019.

Xavier Carreras and Lluı́s Màrquez. 2004. Introduc- Combining fact extraction and verification with neu-

tion to the conll-2004 shared task: Semantic role ral semantic matching networks. In Proceedings of

labeling. In Proceedings of the Eighth Confer- the AAAI Conference on Artificial Intelligence, vol-

ence on Computational Natural Language Learning ume 33, pages 6859–6866.

(CoNLL-2004) at HLT-NAACL 2004, pages 89–97.

Ankur Padia, Francis Ferraro, and Tim Finin. 2018.

Qian Chen, Xiaodan Zhu, Zhenhua Ling, Si Wei, Team UMBC-FEVER : Claim verification using se-

Hui Jiang, and Diana Inkpen. 2016. Enhanced mantic lexical resources. In Proceedings of the

lstm for natural language inference. arXiv preprint First Workshop on Fact Extraction and VERification

arXiv:1609.06038. (FEVER), pages 161–165, Brussels, Belgium. Asso-

ciation for Computational Linguistics.

Ido Dagan, Dan Roth, Mark Sammons, and Fabio Mas-

simo Zanzotto. 2013. Recognizing textual entail- Alec Radford, Jeffrey Wu, Rewon Child, David Luan,

ment: Models and applications. Synthesis Lectures Dario Amodei, and Ilya Sutskever. 2019. Language

on Human Language Technologies, 6(4):1–220. models are unsupervised multitask learners. OpenAI

Blog, 1(8).

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and

Kristina Toutanova. 2018. Bert: Pre-training of deep Hannah Rashkin, Eunsol Choi, Jin Yea Jang, Svitlana

bidirectional transformers for language understand- Volkova, and Yejin Choi. 2017. Truth of varying

ing. arXiv preprint arXiv:1810.04805. shades: Analyzing language in fake news and polit-

ical fact-checking. In Proceedings of the 2017 Con-

Robert Faris, Hal Roberts, Bruce Etling, Nikki ference on Empirical Methods in Natural Language

Bourassa, Ethan Zuckerman, and Yochai Benkler. Processing, pages 2931–2937.

2017. Partisanship, propaganda, and disinformation:

Online media and the 2016 us presidential election. Peter Shaw, Jakob Uszkoreit, and Ashish Vaswani.

2018. Self-attention with relative position represen-

William Ferreira and Andreas Vlachos. 2016. Emer- tations. arXiv preprint arXiv:1803.02155.

gent: a novel data-set for stance classification. In

Proceedings of the 2016 conference of the North Dinghan Shen, Xinyuan Zhang, Ricardo Henao, and

American chapter of the association for computa- Lawrence Carin. 2018. Improved semantic-aware

tional linguistics: Human language technologies, network embedding with fine-grained word align-

pages 1163–1168. ment. arXiv preprint arXiv:1808.09633.Peng Shi and Jimmy Lin. 2019. Simple bert models for A Typology Sort Algorithm

relation extraction and semantic role labeling. arXiv

preprint arXiv:1904.05255.

Algorithm 1 Graph-based Distance Calculation Al-

Robert Speer, Joshua Chin, and Catherine Havasi. 2017. gorithm.

Conceptnet 5.5: An open multilingual graph of gen-

eral knowledge. In Thirty-First AAAI Conference on Require: A sequence of nodes S = {si , s2 , · · · , sn }; A set

of relations R = {r1 , r2 , · · · , rm }

Artificial Intelligence.

1: function DFS(node, visited, sorted sequence)

James Thorne, Andreas Vlachos, Christos 2: for each child sc in node’s children do

Christodoulopoulos, and Arpit Mittal. 2018a. 3: if sc has no incident edges and visited[sc ]==0

then

Fever: a large-scale dataset for fact extraction and

4: visited[sc ]=1

verification. arXiv preprint arXiv:1803.05355. 5: DFS(sc , visited)

James Thorne, Andreas Vlachos, Oana Cocarascu, 6: end if

7: end for

Christos Christodoulopoulos, and Arpit Mittal. 8: sorted sequence.append(0, node)

2018b. The fact extraction and verification (fever) 9: end function

shared task. arXiv preprint arXiv:1811.10971. 10: sorted sequence = []

11: visited = [0 for i in range(n)]

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob 12: S,R = changed to acyclic graph(S,R)

Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz 13: for each node si in S do

Kaiser, and Illia Polosukhin. 2017. Attention is all 14: if si has no incident edges and visited[i] == 0 then

you need. In Advances in neural information pro- 15: visited[i] = 1

cessing systems, pages 5998–6008. 16: for each child sc in si ’s children do

17: DFS(sc , visited, sorted sequence)

Petar Veličković, Guillem Cucurull, Arantxa Casanova, 18: end for

Adriana Romero, Pietro Lio, and Yoshua Bengio. 19: sorted sequence.append(0,si )

2017. Graph attention networks. arXiv preprint 20: end if

arXiv:1710.10903. 21: end for

22: return sorted sequence

Andreas Vlachos and Sebastian Riedel. 2014. Fact

checking: Task definition and dataset construction.

In Proceedings of the ACL 2014 Workshop on Lan-

guage Technologies and Computational Social Sci- B FEVER

ence, pages 18–22.

The statistic of FEVER is shown in Table 3.

Soroush Vosoughi, Deb Roy, and Sinan Aral. 2018.

The spread of true and false news online. Science, Split SUPPORTED REFUTED NEI

359(6380):1146–1151. Training 80,035 29,775 35,659

Dev 6,666 6,666 6,666

William Yang Wang. 2017. ” liar, liar pants on fire”: Test 6,666 6,666 6,666

A new benchmark dataset for fake news detection.

arXiv preprint arXiv:1705.00648. Table 3: Split size of SUPPORTED, REFUTED and

NOT ENOUGH INFO (NEI) classes in FEVER.

Zhilin Yang, Zihang Dai, Yiming Yang, Jaime Car-

bonell, Ruslan Salakhutdinov, and Quoc V Le.

2019. Xlnet: Generalized autoregressive pretrain- FEVER score is calculated with equation 8,

ing for language understanding. arXiv preprint where y is the ground truth label, ŷ is the predicted

arXiv:1906.08237. label, E = [E1 , · · · , Ek ] is a set of ground-truth

Wenpeng Yin and Dan Roth. 2018. Twowingos: A two- evidence, and Ê = [Ê1 , · · · , Ê5 ] is a set of pre-

wing optimization strategy for evidential claim veri- dicted evidence.

fication. arXiv preprint arXiv:1808.03465.

def

Takuma Yoneda, Jeff Mitchell, Johannes Welbl, Pon- Instance Correct(y, ŷ, E, Ê) =

tus Stenetorp, and Sebastian Riedel. 2018. Ucl ma- y = ŷ ∧ (y = N EI ∨ Evidence Correct(E, Ê))

chine reading group: Four factor framework for fact (8)

finding (hexaf). In Proceedings of the First Work-

shop on Fact Extraction and VERification (FEVER),

pages 97–102. C Evidence Selection Results

Jie Zhou, Xu Han, Cheng Yang, Zhiyuan Liu, Lifeng In this part, we present the performance of the

Wang, Changcheng Li, and Maosong Sun. 2019. sentence-level evidence selection module that we

GEAR: Graph-based evidence aggregating and rea- develop with different backbone. We take the con-

soning for fact verification. In Proceedings of the

57th Annual Meeting of the Association for Compu-

catenation of claim and each evidence as input, and

tational Linguistics, pages 892–901, Florence, Italy. take the last hidden vector to calculate the score for

Association for Computational Linguistics. evidence ranking. In our experiments, we try bothRoBERTa and XLNet. From Table 4, we can see

that RoBERTa performs slightly better than XLNet

here. When we submit our system on the leader-

board, we use RoBERTa as the evidence selection

model.

Dev. Set Test Set

Model

Acc. Rec. F1 Acc. Rec. F1

XLNet 26.60 87.33 40.79 25.55 85.34 39.33

RoBERTa 26.67 87.64 40.90 25.63 85.57 39.45

Table 4: Results of evidence selection models.

D Training Details

In this part, we describe the training details of our

experiments. We employ cross-entropy loss as the

loss function. We apply AdamW as the optimizer

for model training. For evidence selection model,

we set learning rate as 1e-5, batch size as 8 and

maximum sequence length as 128.

In claim verification model, the XLNet network

and graph-based reasoning network are trained sep-

arately. We first train XLNet and then freeze the

parameters of XLNet and train the graph-based rea-

soning network. We set learning rate as 2e-6, batch

size as 6 and set maximum sequence length as 256.

We set the dimension of node representation as 100.You can also read