Time-Aware Evidence Ranking for Fact-Checking


Liesbeth Allein,1,2 Isabelle Augenstein,3 Marie-Francine Moens1

1 Department of Computer Science, KU Leuven, Belgium
2 Text and Data Mining Unit, Joint Research Centre of the European Commission, Italy
3 Department of Computer Science, University of Copenhagen, Denmark

Liesbeth.Allein@kuleuven.be, augenstein@di.ku.dk, Sien.Moens@kuleuven.be

arXiv:2009.06402v1 [cs.CL] 10 Sep 2020

Abstract

Truth can vary over time. Therefore, fact-checking decisions on claim veracity should take into account temporal information of both the claim and supporting or refuting evidence. Automatic fact-checking models typically take claims and evidence pages as input, and previous work has shown that weighing or ranking these evidence pages by their relevance to the claim is useful. However, the temporal information of the evidence pages is not generally considered when defining evidence relevance. In this work, we investigate the hypothesis that the timestamp of an evidence page is crucial to how it should be ranked for a given claim. We delineate four temporal ranking methods that constrain evidence ranking differently: evidence-based recency, claim-based recency, claim-centered closeness and evidence-centered clustering ranking. Subsequently, we simulate hypothesis-specific evidence rankings given the evidence timestamps as gold standard. Evidence ranking is then optimized using a learning to rank loss function. The best performing time-aware fact-checking model outperforms its baseline by up to 33.34%, depending on the domain. Overall, evidence-based recency and evidence-centered clustering ranking lead to the best results. Our study reveals that time-aware evidence ranking not only surpasses relevance assumptions based purely on semantic similarity or position in a search results list, but also improves veracity predictions of time-sensitive claims in particular.

Introduction

While some claims are, by definition, incontestably true and factual at any time, the truthfulness or veracity of others is subject to time indications and temporal dynamics (Halpin 2008). Those claims are said to be time-sensitive or time-dependent (Yamamoto et al. 2008). Time-insensitive claims or facts such as “Smoking increases the risk of cancer” and “The earth is flat” can be supported or refuted by evidence, independent of its publishing time. On the other hand, time-sensitive claims such as “Face masks are obligatory on public transport” and “Brad Pitt is back together with Jennifer Aniston” are best supported or refuted by evidence from the time period in which the claim was made, as the veracity of the claim may vary over time. Nonetheless, automated fact-checking research has paid little attention to the temporal dynamics of truth and, by extension, the temporal relevance of evidence to a claim’s veracity. It has chiefly confined evidence relevance and ranking to the semantic relatedness between claim and evidence, even though learning to rank research has interpreted relevance more diversely. We argue that the temporal features of evidence should be taken into account when delineating evidence relevance and evidence should be ranked accordingly. In other words, evidence ranking should be time-aware. Our choice for time-aware evidence ranking is also motivated by the importance of temporal features in document retrieval and ranking (Dakka, Gravano, and Ipeirotis 2010) and the increasing popularity of time-aware ranking models in microblog search (Zahedi et al. 2017; Rao et al. 2017; Chen et al. 2018; Liu et al. 2019; Martins, Magalhães, and Callan 2019) and recommendation systems (Xue et al. 2019; Zhang et al. 2020).

In this paper, we optimize the evidence ranking in a content-based fact-check model using four temporal evidence ranking methods. These newly proposed methods are based on recency and time-dependence characteristics of both claim and evidence, and constrain evidence ranking following diverse relevance hypotheses. As ground-truth evidence rankings are unavailable for training, we simulate specific rankings for each proposed ranking method given the timestamp of each evidence snippet as gold standard. For example, evidence snippets are ranked in descending order according to their publishing time when training a recency-based hypothesis. When a time-dependence hypothesis is adopted, they are ranked in ascending order according to their temporal distance to either a claim’s or the other evidence snippets’ publishing time. Ultimately, time-aware evidence ranking is directly optimized using a dedicated, learning to rank loss function.

The contributions of this work are as follows:

• We propose to model the temporal dynamics of evidence for content-based fact-checking and show that it outperforms a ranking of evidence documents based purely on semantic similarity - as used in prior work - by up to 33.34%.

• We test various hypotheses of how to define evidence relevance using timestamps and explore the performance differences between those hypotheses.

• We fine-tune evidence ranking by optimizing a learning to rank loss function; this elegant, yet effective approach requires only a few adjustments to the model architecture and can be easily added to any other fact-check model.

• Moreover, optimizing evidence ranking using a dedicated, learning to rank loss function is, to our knowledge, novel in automated fact-checking research.

Related Work

Automated fact-checking is generally approached in two ways: content-based (Santos et al. 2020; Zhou et al. 2020) and propagation-based (Volkova et al. 2017; Liu et al. 2020; Shu, Wang, and Liu 2019) fact-checking. In the former approach, a claim’s veracity prediction is based on either its content and its stylistic and linguistic properties (claim-only prediction), or both textual inference and semantic relatedness between claim and supporting/refuting evidence (claim-evidence prediction). In the latter, a claim’s veracity prediction is based on the social context in which a claim propagates, i.e., the interaction and relationship between publishers, claims and users (Shu, Wang, and Liu 2019), and its development over time (Ma et al. 2015; Shu et al. 2017). In this paper, we predict a claim’s veracity using supporting and refuting evidence. This content-based approach is favored over propagation-based fact-checking as it allows for early misinformation¹ detection when propagation information is not yet available (Zhou et al. 2020). Moreover, claim-only content-based fact-checking focuses too strongly on a claim’s linguistic and stylistic features and is found to be limited in terms of detecting high-quality machine-generated misinformation (Schuster et al. 2020). We therefore opt for claim-evidence content-based fact-checking.

¹ We choose to use ‘misinformation’ instead of ‘disinformation’ or ‘fake news’, as the latter two entail the author’s intention of deliberately conveying wrong information to influence their readers, while ‘misinformation’ merely entails wrong information without inferring the author’s intention (Shu et al. 2017).

Previous work in claim-evidence fact-checking has differentiated between pieces of evidence in various manners. Some consider evidence equally important (Thorne et al. 2018; Mishra and Setty 2019). Others weigh or rank evidence according to its assumed relevance to the claim. Liu et al. (2020), for instance, linked evidence relevance to node and edge kernel importance in an evidence graph using neural matching kernels. Li, Meng, and Yu (2011) and Li, Meng, and Clement (2016) defined evidence relevance in terms of evidence position in a search engine’s ranking list, while Wang, Zhu, and Wang (2015) related evidence relevance to source popularity. However, evidence relevance has been principally associated with semantic similarity between claim-evidence pairs and is computed using language models (Zhong et al. 2020), textual entailment/inference models (Hanselowski et al. 2018; Zhou et al. 2019; Nie, Chen, and Bansal 2019), cosine similarity (Miranda et al. 2019), or token matching and sentence position in the evidence document (Yoneda et al. 2018). As opposed to previous work, we hypothesize that the timestamp of a piece of evidence is crucial to how its relevance should be defined and how it should be ranked for a given claim.

The dynamics of time in fact-checking have not been widely studied yet. Yamamoto et al. (2008) incorporated the idea of temporal factuality in a fact-check model. Uncertain facts are input as queries in a search engine, and a fact’s trustworthiness is determined based on the detected sentiment and frequency of alternative and counter facts in the search results in a given time frame. However, the authors point out that their system is slow and the naive Bayes classifier is not able to detect semantic similarities between alternative and counter facts, resulting in non-conclusive results. Moreover, the frequency of a fact can be misleading, with incorrect claims possibly having more hits than correct ones (Li, Meng, and Yu 2011). Hidey et al. (2020) recently published an adversarial dataset that can be used to evaluate a fact-check model’s temporal reasoning abilities. In this dataset, arithmetic, range and verbalized time indications are altered using date manipulation heuristics. Zhou, Zhao, and Jiang (2020) studied pattern-based temporal fact extraction. By first extracting temporal facts from a corpus of unstructured texts using textual pattern-based methods, they model pattern reliability based on time cues such as text generation timestamps and in-text temporal tags. Unreliable and incorrect temporal facts are then automatically discarded. However, a large amount of data is needed to determine a claim’s veracity with that method, which might not be available for new claims yet.

Model Architecture

We start from a common base model whose evidence ranking module we will directly optimize relying on several time-aware relevance hypotheses. We take the Joint Veracity Prediction and Evidence Ranking model presented in Augenstein et al. (2019) as the base model (Fig. 1). It classifies a given claim sentence using claim metadata and a corresponding set of evidence snippets. The evidence snippets are extracted from various sources and consist of multiple, often incomplete sentences. As the claims are obtained from various fact-check organisations/domains with multiple in-house veracity labels, the model outputs domain-specific classification labels.

The model takes as input claim sentence $c_i \in C = \{c_1, \dots, c_N\}$, evidence set $E_i = \{e_{i1}, \dots, e_{iK}\}$ and metadata vector $m_i \in M = \{m_1, \dots, m_N\}$; with $N$ the total number of claims, metadata vectors and evidence sets, and $K \le 10$ the total number of evidence snippets in evidence set $E_i \in E = \{E_1, \dots, E_N\}$. The model first encodes $c_i$ and $E_i = \{e_{i1}, \dots, e_{iK}\}$ using a shared bidirectional skip-connection LSTM, while a CNN encodes $m_i$; respectively resulting in $h_{c_i}$, $H_{E_i} = \{h_{e_{i1}}, \dots, h_{e_{iK}}\}$ and $h_{m_i}$. Each $h_{e_{ij}} \in H_{E_i}$ is then fused with $h_{c_i}$ and $h_{m_i}$ following a natural language inference matching method proposed by Mou et al. (2016):

$$f_{ij} = [h_{c_i}; h_{e_{ij}}; h_{c_i} - h_{e_{ij}}; h_{c_i} \circ h_{e_{ij}}; h_{m_i}] \qquad (1)$$
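As a small illustration of the fusion in Eq. (1), the sketch below concatenates a claim encoding, an evidence encoding, their element-wise difference, their element-wise product and a metadata encoding. It is a toy NumPy example under stated assumptions: the function name `fuse` and the two- and one-dimensional vectors are hypothetical and are not taken from the paper, whose encodings come from the BiLSTM and CNN encoders.

```python
import numpy as np

def fuse(h_claim, h_evidence, h_meta):
    """Concatenate [h_c; h_e; h_c - h_e; h_c * h_e; h_m], mirroring Eq. (1)."""
    return np.concatenate([
        h_claim,
        h_evidence,
        h_claim - h_evidence,   # element-wise difference
        h_claim * h_evidence,   # element-wise multiplication
        h_meta,
    ])

# Toy encodings (hypothetical sizes, chosen only to keep the example readable).
h_c = np.array([1.0, 2.0])
h_e = np.array([0.5, 4.0])
h_m = np.array([3.0])

f = fuse(h_c, h_e, h_m)
```

The fused vector has length 4d + d_m, where d is the size of the claim and evidence encodings and d_m the size of the metadata encoding.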

[Figure 1 image. Labels in the diagram: claim; evidence 1, evidence 2, ..., evidence i; metadata; word embeddings; bidirectional LSTM; CNN; instance representation; label embedding; label scores; evidence ranking; ranking scores; softmax; p(labels); Time-Aware Ranking; ground-truth temporal ranking; ListMLE.]

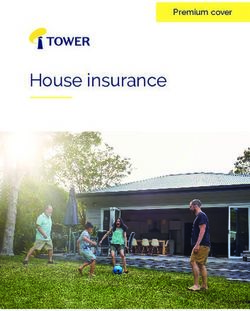

Figure 1: Training architecture of the time-aware fact-check model. During model pre-training, the base model is only trained

on the veracity classification task. During model fine-tuning, the ListMLE loss function is used to additionally optimize the

evidence ranking using the ranking scores yielded by the evidence ranking module.

In Eq. (1), the semi-colon denotes vector concatenation, “−” element-wise difference and “◦” element-wise multiplication. Each combined evidence-claim representation $f_{ij} \in F_i = \{f_{i1}, \dots, f_{iK}\}$ is fed to a label embedding layer and evidence ranking layer. In the label embedding layer, similarity between $f_{ij}$ and all labels across all domains is scored by taking their dot product, resulting in label similarity matrix $S_{ij}$. A domain-specific mask is then applied over $S_{ij}$ and a fully-connected layer with Leaky ReLU activation returns domain-specific label vector $l_{ij}$. In the evidence ranking layer, a fully-connected layer followed by a ReLU activation function returns a ranking score $r(f_{ij})$ for each $f_{ij}$. Subsequently, the ranking scores for all $f_{ij}$ are concatenated in an evidence ranking vector $R_i = [r(f_{i1}); \dots; r(f_{iK})]$. The dot product between domain-specific label vector $l_{ij}$ and its respective evidence ranking score $r(f_{ij})$ is taken, the resulting vectors are summed and domain-specific label probabilities $p_i$ are obtained by applying a softmax function. Finally, the model outputs the domain-specific veracity label with the highest probability. A cross-entropy loss and RMSProp optimization are employed for learning the model parameters of the classification task.

We are aware that using a Transformer model for claim, evidence and metadata encoding is expected to achieve higher results, but we opted for this model architecture for the sake of direct comparability.

Time-Aware Evidence Ranking

We aim at predicting a claim’s veracity label while directly optimizing evidence ranking on one of the temporal relevance hypotheses. To this purpose, we simultaneously optimize veracity classification and evidence ranking by means of separate loss functions. This contrasts with the base model, in which evidence ranking is learned implicitly and only the veracity classification task is optimized. In this section, we explain how we retrieve timestamps from the dataset and introduce our four temporal ranking methods and the learning to rank loss function for evidence ranking.

Temporal or time-denoting references can be classified into three categories: explicit (i.e., time expressions that refer to a calendar or clock system and denote a specific date and/or time), indexical (i.e., time expressions that can only be evaluated against a given index time, usually a timestamp²) and vague references (i.e., time expressions that cannot be precisely resolved in time) (Schilder and Habel 2001). In the dataset, explicit time references are provided for each claim $c_i$ (timestamps) and a large number of evidence snippets $e_{ij}$ (publishing timestamp at the beginning of the snippet). For all $c_i \in C$ and $e_{ij} \in E$, we retrieve their timestamp $t$ in year-month-day, respectively resulting in $C^t = \{t(c_1), \dots, t(c_N)\}$ and $E^t = \{\{t(e_{11}), \dots, t(e_{1K})\}, \dots, \{t(e_{N1}), \dots, t(e_{NK})\}\}$. For each evidence snippet $e_{ij} \in E$, we then calculate its temporal distance to $c_i$ in days:

$$\Delta t(e_{ij}) = t(e_{ij}) - t(c_i) \qquad (2)$$

where positive and negative $\Delta t(e_{ij})$ denote evidence snippets that are, respectively, published later and earlier than $t(c_i)$. This results in $\Delta E^t = \{\{\Delta t(e_{11}), \dots, \Delta t(e_{1K})\}, \dots, \{\Delta t(e_{N1}), \dots, \Delta t(e_{NK})\}\}$.

² E.g., yesterday, next Saturday, four days ago, in two weeks.

The ranking of these explicit time references in terms of their relevance to a claim can be divided into two types: recency-based and time-dependent ranking (Kanhabua and Anand 2016; Bansal et al. 2019). Based on these temporal ranking types, we delineate four temporal ranking methods and define ranking constraints for each method. We then simulate a ground-truth evidence ranking for each evidence set $E_i$ that respects the method-specific constraints. It is possible that a constraint excludes an evidence snippet from the ground-truth evidence ranking or a snippet lacks a timestamp. Consequently, the snippet’s ranking score $r(f_{ij})$ cannot be optimized directly. In order to deal with excluded evidence or missing timestamps, we apply a mask over the predicted evidence ranking vector $R_i = [r(f_{i1}), \dots, r(f_{iK})]$ and compute the loss over the ranking scores of the included evidence snippets with timestamps. Still, we assume that the direct optimization of these evidence snippets’ ranking scores will influence the ranking score of the others, as they may contain similar explicit or indexical time references further in their text or exhibit similar patterns.

For optimizing evidence ranking, we need a loss function that measures how correctly an evidence snippet is ranked with regard to the other snippets in the same evidence set. For this, the ListMLE loss (Xia et al. 2008) is computed:

$$\mathrm{ListMLE}(r(E), R) = -\sum_{i=1}^{N} \log P(R_i \mid E_i; r) \qquad (3)$$

$$P(R_i \mid E_i; r) = \prod_{u=1}^{K} \frac{\exp(r(E_{R_i^u}))}{\sum_{v=u}^{K} \exp(r(E_{R_i^v}))} \qquad (4)$$

ListMLE is a listwise, non-measure-specific learning to rank algorithm that uses the negative log-likelihood of a ground truth permutation as loss function (Liu 2011). It is based on the Plackett-Luce model, that is, the probability of a permutation is first decomposed into the product of a stepwise conditional probability, with the u-th conditional probability standing for the probability that the snippet is ranked at the u-th position given that the top u - 1 snippets are ranked correctly. ListMLE is used for optimizing the evidence ranking with each of the four temporal ranking methods.

Recency-Based Ranking

Our recency-based ranking methods follow the hypothesis that recently published information requires a higher ranking than information published in the distant past (Li and Croft 2003). Relevance is considered proportional to time: the more recent the publishing date, the more relevant the evidence. We approach recency-based ranking in two different ways: evidence-based recency and claim-based recency.

Evidence-based recency ranking

Evidence snippets published later in time are more relevant than those published earlier in time, independent of a claim’s publishing time.

Our evidence-based recency ranking method follows the hypothesis that more information on a subject becomes available over time and, thus, evidence posted later in time is favored over evidence published earlier in time. The ground-truth evidence ranking $R_i \in R = \{R_1, \dots, R_N\}$ for each of the $N$ claims satisfies the following constraint:

$$\forall e_{ij}, e_{ik} \in E_i : \Delta t(e_{ij}) \le \Delta t(e_{ik}) \implies r(e_{ij}) \le r(e_{ik}) \qquad (5)$$

For two evidence snippets in the same evidence set, the constraint imposes a higher ranking score $r(e_{ik})$ for $e_{ik}$ and a lower ranking score $r(e_{ij})$ for $e_{ij}$ if $\Delta t(e_{ik})$ is larger than $\Delta t(e_{ij})$. If $\Delta t(e_{ik})$ and $\Delta t(e_{ij})$ are equal, $e_{ik}$ and $e_{ij}$ obtain the same ranking score.

Claim-based recency ranking

Evidence snippets published just before or at the same time as a claim are more relevant than evidence snippets published long before a claim.

As for the evidence-based recency ranking method, the claim-based recency ranking method assumes that evidence gradually takes into account more information on a subject over time and is progressively more informative and relevant. However, only evidence that had been published before or at the same time as the claim is considered in this approach, as fact-checkers could only base their claim veracity judgements on information that was available at that time. In other words, we mimic the information accessibility and availability at claim time $t(c_i)$, and evidence snippets are ranked accordingly. For ground truth evidence ranking $R_i$, all $e_{ij}$ with $\Delta t(e_{ij}) \le 0$ are sorted in descending order and ranked according to the following constraint:

$$\forall e_{ij}, e_{ik} \in E_i : \Delta t(e_{ij}) \le \Delta t(e_{ik}) \wedge \Delta t(e_{ij}), \Delta t(e_{ik}) \le 0 \implies r(e_{ij}) \le r(e_{ik}) \qquad (6)$$

For two evidence snippets in the same evidence set, the constraint imposes a higher ranking score $r(e_{ik})$ for $e_{ik}$ and a lower ranking score $r(e_{ij})$ for $e_{ij}$ if $\Delta t(e_{ik})$ is larger than $\Delta t(e_{ij})$ and both $\Delta t(e_{ik})$ and $\Delta t(e_{ij})$ are negative or zero. If $\Delta t(e_{ik})$ and $\Delta t(e_{ij})$ are equal, $e_{ij}$ and $e_{ik}$ obtain the same ranking score.

Time-Dependent Ranking

Instead of defining evidence relevance as a property proportional to recency, the time-dependent ranking methods introduced in this paper define evidence relevance in terms of closeness: claim-evidence and evidence-evidence closeness. We discuss two time-dependent ranking methods: claim-centered closeness and evidence-centered clustering ranking.

Claim-centered closeness ranking

Evidence snippets are more relevant when they are published around the same time as the claim and become less relevant as the temporal distance between claim and evidence grows.

The claim-centered closeness ranking method follows the hypothesis that a topic and its related subtopics are discussed around the same time (Martins, Magalhães, and Callan 2019). That said, the likelihood that an evidence snippet concerns the same topic or event as the claim decreases when the temporal distance between claim and evidence increases. In other words, evidence posted around the same time as the claim is considered more relevant than evidence posted long before or after the claim. For ground truth ranking $R_i$, we rank all $e_{ij}$ based on $|\Delta t(e_{ij})|$ in ascending order, satisfying the following constraint:

$$\forall e_{ij}, e_{ik} \in E_i : |\Delta t(e_{ij})| \le |\Delta t(e_{ik})| \implies r(e_{ij}) \ge r(e_{ik}) \qquad (7)$$

For two evidence snippets in the same evidence set, the constraint imposes a higher ranking score $r(e_{ij})$ for $e_{ij}$ and a lower ranking score $r(e_{ik})$ for $e_{ik}$ if $|\Delta t(e_{ij})|$ is smaller than $|\Delta t(e_{ik})|$. If $|\Delta t(e_{ij})|$ and $|\Delta t(e_{ik})|$ are equal, $e_{ij}$ and $e_{ik}$ obtain the same ranking score.

Evidence-centered clustering ranking

Evidence snippets that are clustered in time are more relevant than evidence snippets that are temporally distant from the others.

Efron et al. (2014) formulate a temporal cluster hypothesis, in which they suggest that relevant documents have a tendency to cluster in a shared document space. Following that hypothesis, the evidence-centered clustering method assigns ranking scores to evidence snippets in terms of their temporal vicinity to the other snippets in the same evidence set. We first detect the medoid of all $\Delta t(e_{ij}) \in \Delta E_i^t$ by computing a pairwise distance matrix, summing the columns and finding the argmin of the summed pairwise distance values. Then, the Euclidean distance between all $\Delta t(e_{ij})$ and the medoid is calculated: $\Delta E_i^{cl} = \{\Delta cl(e_{i1}), \dots, \Delta cl(e_{iK})\}$. We rank $\Delta E_i^{cl}$ in ascending order, resulting in ground-truth ranking $R_i$. Ground-truth ranking $R_i$ satisfies the following constraint:

$$\forall e_{ij}, e_{ik} \in E_i : |\Delta cl(e_{ij})| \le |\Delta cl(e_{ik})| \implies r(e_{ij}) \ge r(e_{ik}) \qquad (8)$$

For two evidence snippets in the same evidence set, the constraint imposes a higher ranking score $r(e_{ij})$ for $e_{ij}$ and a lower ranking score $r(e_{ik})$ for $e_{ik}$ if $|\Delta cl(e_{ij})|$ is smaller than $|\Delta cl(e_{ik})|$. If $|\Delta cl(e_{ij})|$ and $|\Delta cl(e_{ik})|$ are equal, $e_{ij}$ and $e_{ik}$ obtain the same ranking score.

Experimental Setup

Dataset. We opt for the MultiFC dataset (Augenstein et al. 2019), as it is the only large, publicly available fact-check dataset that provides temporal information for both claims and evidence pages, and follow their experimental setup. The dataset contains 34,924 real-world claims extracted from 26 different fact-check websites. The fact-check domains are abbreviated to four-letter contractions (e.g., Snopes to snes, Washington Post to wast). For each claim, metadata such as speaker, tags and categories are included and a maximum of ten evidence snippets crawled from the Internet using the Google Search API are used to predict a claim’s veracity label. Metadata is integrated into the model, as it provides additional external information about a claim’s position in the real world. Regarding temporal information, the dataset provides an explicit timestamp for each claim as structured metadata. For the evidence snippets, however, we need to extract their timestamp from the evidence text itself. As an explicit date indication is often provided at the beginning of an evidence snippet’s text, we extract this date indication from the snippet itself. Both claim and evidence date are then automatically formatted as year-month-day using Python’s datetime module.³ If datetime is unable to correctly format an extracted timestamp, we do not include it in the dataset. Finally, the dataset is split into training (27,940), development (3,493) and test (3,491) sets in a label-stratified manner.

³ We randomly extracted 150 timestamps from claims and evidence snippets in the dataset and manually verified whether datetime correctly parsed them in year-month-day. As zero mistakes were found, we consider datetime sufficiently accurate.

Pre-Training. Similar to Augenstein et al. (2019), the model is first pre-trained on all domains before it is fine-tuned for each domain separately using the following hyperparameters: batch size [32], maximum epochs [150], word embedding size [300], weight initialization word embedding [Xavier uniform], number of layers BiLSTM [2], skip-connections BiLSTM [concatenation], hidden size BiLSTM [128], out channels CNN [32], kernel size CNN [32], activation function CNN [ReLU], weight initialization evidence ranking fully-connected layers (FC) [Xavier uniform], activation function FC 1 [ReLU], activation function FC 2 [Leaky ReLU], label embedding dimension [16], weight initialization label embedding [Xavier uniform], weight initialization label embedding fully-connected layer [Xavier uniform], activation function label embedding fully-connected layer [Leaky ReLU]. We use a cross-entropy loss function and optimize the model using RMSProp [lr = 0.0002]. During each epoch, batches from each domain are alternately fed to the model with the maximum number of batches for all domains equal to the maximum number of batches for the smallest domain so that the model is not biased towards the larger domains. Early stopping is applied and pre-training is performed in 10 runs. Pre-training the model on all domains is advantageous, as some domains contain few training data. In this phase, the model is trained on the veracity classification task. Evidence ranking is not yet optimized using the four temporal ranking methods.

Fine-Tuning. We select the best performing pre-trained model based on the development set for each domain individually and fine-tune it on that domain, as done in Augenstein et al. (2019). In contrast to the pre-training phase, the model now outputs not only the label probabilities for veracity classification, but also the ranking scores computed by the evidence ranking module. In other words, the model is fine-tuned on two tasks instead of one. For the veracity classification task, cross-entropy loss is computed over the label probabilities, and RMSProp [lr = 0.0002] optimizes all the model parameters except the evidence ranking parameters. For the evidence ranking task, ListMLE loss is computed over the ranking scores, and Adam [lr = 0.001] optimizes only the evidence ranking parameters. We use the ListMLE loss function from the allRank library (Pobrotyn et al. 2020).
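To make the ranking signal used during fine-tuning more concrete, the following sketch simulates the four ground-truth rankings of Eqs. (5)-(8) from timestamps and scores a vector of ranking scores with a masked ListMLE loss as in Eqs. (3)-(4). It is an illustration under stated assumptions, not the authors' implementation: the names `delta_days`, `simulate_ranking` and `list_mle` are hypothetical, the loss is a plain-Python stand-in for the allRank implementation, temporal distances follow the sign convention stated in the text (positive when a snippet is published later than the claim), and ties are broken by snippet index rather than receiving identical scores.

```python
from datetime import date
import math

def delta_days(claim_date, evidence_dates):
    """Temporal distance in days, positive if the snippet is later than the claim.
    Snippets without a timestamp are represented by None."""
    return [None if d is None else (d - claim_date).days for d in evidence_dates]

def simulate_ranking(deltas, method):
    """Indices of the included snippets, most relevant first (hypothetical helper)."""
    idx = [j for j, d in enumerate(deltas) if d is not None]
    if not idx:
        return []
    if method == "evidence_recency":      # later snippets first, Eq. (5)
        return sorted(idx, key=lambda j: -deltas[j])
    if method == "claim_recency":         # only snippets at or before claim time, Eq. (6)
        kept = [j for j in idx if deltas[j] <= 0]
        return sorted(kept, key=lambda j: -deltas[j])
    if method == "claim_closeness":       # smallest |delta| first, Eq. (7)
        return sorted(idx, key=lambda j: abs(deltas[j]))
    if method == "evidence_clustering":   # closest to the medoid first, Eq. (8)
        medoid = min((deltas[j] for j in idx),
                     key=lambda m: sum(abs(m - deltas[k]) for k in idx))
        return sorted(idx, key=lambda j: abs(deltas[j] - medoid))
    raise ValueError(f"unknown method: {method}")

def list_mle(scores, ranking):
    """Negative log-likelihood of `ranking` under the Plackett-Luce model.
    Snippets absent from `ranking` are masked out of the loss."""
    ordered = [scores[j] for j in ranking]
    loss = 0.0
    for u in range(len(ordered)):
        tail = ordered[u:]
        top = max(tail)  # subtract the maximum for numerical stability
        log_denominator = top + math.log(sum(math.exp(s - top) for s in tail))
        loss += log_denominator - ordered[u]
    return loss

# Toy evidence set: one claim, six snippets, one of them without a timestamp.
claim = date(2020, 3, 10)
evidence = [date(2020, 3, 13), date(2020, 3, 9), None,
            date(2019, 12, 1), date(2020, 3, 10), date(2020, 3, 6)]
deltas = delta_days(claim, evidence)
rankings = {m: simulate_ranking(deltas, m)
            for m in ("evidence_recency", "claim_recency",
                      "claim_closeness", "evidence_clustering")}
```

In this toy set the snippet without a timestamp never enters a ranking, and claim-based recency additionally drops the snippet published after the claim, which is the situation the mask over the ranking vector is meant to handle.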

Results

Table 1 displays the test results for the veracity classification task. We use Micro and Macro F1 score as evaluation metrics, and the results of our base model are comparable to those of Augenstein et al. (2019). As an additional baseline, we optimize evidence ranking on the evidence snippet’s position in the Google search ranking list: the higher in the list, the higher ranked in the simulated ground-truth evidence ranking. Directly optimizing evidence ranking on the temporal ranking methods leads to an overall increase in Micro F1 score for three of the four methods: +2.56% (evidence-based recency), +1.60% (claim-based recency) and +2.55% (evidence-centered clustering). If we take the best performing ranking method for each domain individually, time-aware evidence ranking surpasses the base model by 5.51% (Micro F1) and 3.40% (Macro F1). Moreover, it outperforms search engine ranking by 11.89% (Micro F1) and 7.23% (Macro F1). When looking at each domain individually, it appears that the temporal ranking methods have a different effect on the test results. Regarding recency-based ranking, fine-tuning the model on evidence-based recency ranking results in the highest test results for seven domains (abbc, obry, para, peck, thet, tron and vogo), while claim-based recency ranking achieves the best test results for three domains (huca, thet and vees). The time-dependent ranking methods also return the highest test results for multiple domains: claim-centered closeness for afck, and evidence-centered clustering for abbc, hoer, pose, thal, thet and wast. These improvements over base model Micro F1 scores can be substantial: +20.37% (abbc), +25% (para, thet) and +33.34% (peck). For some domains, the test results of all the temporal ranking hypotheses are identical to those of the base model (clck, faly, fani, farg, mpws, ranz). For only three of the 26 domains (chct, faan and pomt), the model performs worse when the temporal ranking methods are applied.

[Figure 2 plot. x-axis: Domains, in the order clck, faly, abbc, fani, obry, pose, peck, thal, vogo, vees, mpws, bove, ranz, afck, faan, para, huca, hoer, pomt, snes, tron, thet, chct, farg, wast, goop; y-axis ticks in steps of 0.1 from 1.0 down to 0.0 and continuing below zero.]

Figure 2: Share of evidence with retrievable timestamp (in gray) and Micro F1 improvements/decline of time-aware fact-checking over base model (in green) per domain.

Discussion

Overall. Introducing time-awareness in a fact-check model by directly optimizing evidence ranking using temporal ranking methods positively influences the model’s classification performance. Moreover, time-aware evidence ranking outperforms search engine evidence ranking, suggesting that the temporal ranking methods themselves - and not merely the act of direct evidence ranking optimization - lead to higher results. The evidence-based recency and evidence-centered clustering methods lead to the highest metric scores on average. However, their advantage over the other time-aware evidence ranking methods is not large: +.96/.95% (Micro F1) over claim-based recency and +2.90/2.89% (Micro F1) over claim-centered closeness, respectively.

Domain-specific. Time-aware evidence ranking impacts the domains’ classification performance to various extents. While classification performance for some domains (abbc, hoer, para, thet) increases with all temporal ranking methods, other domains consistently perform worse (chct, faan, pomt) or their performance remains the same across all temporal ranking methods (clck, faly, fani, farg, mpws, ranz). We assume that temporal evidence ranking has a larger impact on the veracity classification of time-sensitive claims than time-insensitive claims. The effect of time-aware evidence ranking consequently depends on the share of time-sensitive/time-insensitive claims in the domains. We therefore retrieve the categories from several domains and analyze their time-sensitivity. The analysis confirms our assumption; domains which mainly tackle time-sensitive subjects such as politics, economy, climate and entertainment (abbc, para, thet) benefit more from time-aware evidence ranking than domains discussing both time-sensitive and time-insensitive subjects such as food, language, humor and animals (snes, tron).

Method-specific. Each domain reacts differently to the temporal ranking methods. However, not every domain prefers a specific temporal ranking method. For the clck, faly and fani domains, for instance, all temporal ranking methods return the same test results. This is likely caused by the low share of evidence snippets for which temporal information could be extracted (approx. 10%, see Fig. 2). When inspecting the models, we found that the supposed hypothesis-specific ground-truth rankings are shared across all four temporal ranking methods in those three domains. As a result, the model is fine-tuned identically with each of the four methods, leading to identical test results for the time-aware ranking models. This indicates that a sufficient number of evidence snippets with temporal information is needed for temporal ranking hypotheses to be effective. Regarding recency-based ranking, a preference for either evidence-based or claim-based recency ranking might depend on the share of evidence posted after the claim date. If an evidence set mainly consists of later-posted evidence, the ranking of only a few evidence snippets is directly optimized

Domain  Base Model           Search Ranking       Evidence Recency     Claim Recency        Claim Closeness      Evidence Clustering  Time-Aware Ranking
        Micro   Macro        Micro   Macro        Micro   Macro        Micro   Macro        Micro   Macro        Micro   Macro        Micro   Macro
abbc    .3148   .2782        .5185   .2276        .5185   .2276        .4444   .2707        .5000   .2222        .5185   .2276        .5185   .2276
afck    .2222   .1385        .2778   .1298        .1944   .0880        .3056   .1436        .3333   .1517        .2500   .1245        .3333   .1517
bove    1.      1.           1.      1.           1.      1.           1.      1.           1.      1.           1.      1.           1.      1.
chct    .5500   .2967        .4000   .2194        .4500   .2468        .4750   .2613        .4250   .2268        .4750   .2562        .4750   .2613
clck    .5000   .2500        .5000   .2500        .5000   .2500        .5000   .2500        .5000   .2500        .5000   .2500        .5000   .2500
faan    .5000   .5532        .3182   .1609        .3182   .1609        .4091   .2918        .3182   .1728        .3182   .1609        .4091   .2918
faly    .6429   .2045        .3571   .1316        .6429   .2045        .6429   .2045        .6429   .2045        .6429   .2045        .6429   .2045
fani    1.      1.           1.      1.           1.      1.           1.      1.           1.      1.           1.      1.           1.      1.
farg    .6923   .1023        .6923   .1023        .6923   .1023        .6923   .1023        .6923   .1023        .6923   .1023        .6923   .1023
goop    .8344   .1516        .1062   .0316        .8344   .1516        .8311   .1513        .8344   .1516        .8278   .1510        .8344   .1516
hoer    .4104   .2091        .3731   .0781        .4403   .2096        .4403   .2241        .4478   .2030        .4552   .2453        .4552   .2453

huca .5000 .2857 .6667 .5333 .5000 .2857 .6667 .5333 .5000 .2857 .5000 .3333 .6667 .5333

mpws .8750 .6316 .8750 .6316 .8750 .6316 .8750 .6316 .8750 .6316 .8750 .6316 .8750 .6316

obry .5714 .3520 .4286 .1500 .5714 .3716 .3571 .1563 .4286 .2763 .3571 .2902 .5714 .3716

para .1875 .1327 .3750 .1698 .4375 .2010 .3750 .1692 .3750 .1692 .3750 .1692 .4375 .2010

peck .5833 .2593 .4167 .2083 .9167 .6316 .6667 .4082 .4167 .2652 .8333 .5617 .9167 .6316

pomt .2143 .2089 .1679 .0319 .1500 .0766 .1616 .0961 .1830 .0605 .1964 .0637 .1964 .0637

pose .4178 .0987 .4178 .0982 .3973 .0967 .4178 .0992 .4178 .0992 .4247 .2080 .4247 .2080

ranz 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1.

snes .5646 .0601 .5646 .0601 .5600 .0600 .5646 .0601 .5646 .0601 .5646 .0601 .5646 .0601

thal .5556 .2756 .5556 .3000 .4444 .1391 .2778 .1333 .5000 .3075 .5556 .3600 .5556 .3600

thet .3750 .2593 .6250 .3556 .6250 .3556 .6250 .3556 .6250 .3056 .6250 .3556 .6250 .3556

tron .4767 .0258 .4767 .0258 .4767 .0366 .4738 .0258 .4767 .0258 .4767 .0258 .4767 .0366

vees .7097 .2075 .1774 .2722 .7097 .2075 .7258 .3021 .7097 .2075 .7097 .2075 .7258 .3021

vogo .5000 .2056 .1875 .0451 .5469 .2463 .5313 .2126 .4063 .1628 .5000 .2062 .5469 .2463

wast .1563 .0935 .2188 .0718 .2188 .0718 .3438 .1656 .0938 .0286 .3438 .2785 .3438 .2785

avg. .5521 .3185 .4883 .2802 .5777 .3097 .5681 .2977 .5487 .2912 .5776 .3259 .6072 .3525

Table 1: Overview of classification test results (Micro F1 and Macro F1), with improvements over base model results underlined

and highest temporal ranking results for each domain in bold. The last column contains the highest results returned by the four

temporal ranking methods.

with the claim-based recency ranking method, leaving the model to indirectly learn the ranking scores of the others. In that case, the evidence-based method might be favored over the claim-based method. However, the share of later-posted evidence is not consistently different in domains preferring evidence-based recency than in domains favoring claim-based recency ranking. Concerning time-dependent ranking, evidence and claim (claim-centered closeness), and evidence and evidence (evidence-centered clustering) are more likely to discuss the same topic when they are published around the same time. The time-dependent ranking methods would thus increase classification performance for domains in which the dispersion of evidence snippets in the claim-specific evidence sets is small. We measure the temporal dispersion of each evidence set using the standard deviation in domains which mainly favor time-dependent ranking over recency-based ranking (afck, faan, pomt, thal; Group 1), and vice versa (chct, vogo; Group 2). We then check whether the domains in these groups display similar dispersion values. Kruskal-Wallis H tests indicate that dispersion values statistically differ between domains in the same group (Group 1: H = 258.63, p < 0.01; Group 2: H = 192.71, p < 0.01). Moreover, Mann-Whitney U tests on domain pairs suggest that inter-group differences are not consistently larger than intra-group differences (e.g. thal-chct: Mdn = 337.53, Mdn = 239.26, p = 0.021 > 0.01; thal-pomt: Mdn = 337.53, Mdn = 661.25, p = 9.95e−5 < 0.01). Therefore, the hypothesis that small evidence dispersion causes a preference for time-dependent ranking methods is rejected.

Conclusion

Introducing time-awareness in evidence ranking arguably leads to more accurate veracity predictions in fact-check models - especially when they deal with claims about time-sensitive subjects such as politics and entertainment. These performance gains also indicate that evidence relevance should be approached more diversely instead of merely associating it with the semantic similarity between claim and evidence. By integrating temporal ranking constraints in a neural architecture via appropriate loss functions, we show that a fact-check model is able to learn time-aware evidence rankings in an elegant, yet effective manner. To our knowledge, evidence ranking optimization using a dedicated ranking loss has not been done before in previous work on fact-checking. Whereas this study is limited to integrating time-awareness in the evidence ranking as part of automated fact-checking, future research could build on these findings to explore the impact of time-awareness at other stages of fact-checking, e.g., document retrieval or evidence selection, and in domains beyond fact-checking.

Acknowledgments

This work was supported by COST Action CA18231 and

realised with the collaboration of the European Commission Joint Research Centre under the Collaborative Doctoral Partnership Agreement No 35332.
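The dispersion analysis described in the Discussion (standard deviation per evidence set, followed by a Kruskal-Wallis H test across the domains of a group) can be illustrated with a minimal sketch. This is not the authors' code: the function names, the toy data, the choice of days as the time unit and the use of the population standard deviation are all assumptions made for illustration.

```python
from itertools import chain
from statistics import pstdev


def temporal_dispersion(day_offsets):
    """Standard deviation of one evidence set's timestamps (assumed unit: days)."""
    return pstdev(day_offsets)


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic, using average ranks and the usual tie correction.

    Assumes the pooled observations are not all identical (otherwise the
    tie correction divides by zero).
    """
    pooled = sorted(chain.from_iterable(groups))
    n = len(pooled)
    rank_of = {}  # value -> average rank among the pooled observations
    tie_term = 0  # sum of (t^3 - t) over each run of t tied values
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        rank_of[pooled[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        tie_term += (j - i) ** 3 - (j - i)
        i = j
    rank_sums = sum(sum(rank_of[x] for x in g) ** 2 / len(g) for g in groups)
    h = 12 / (n * (n + 1)) * rank_sums - 3 * (n + 1)
    return h / (1 - tie_term / (n ** 3 - n))


# Hypothetical toy data: per domain, a list of evidence sets, each given as
# publication dates expressed in days relative to an arbitrary reference date.
toy_domains = {
    "domain_a": [[0, 3, 5], [10, 11, 400], [1, 2, 2]],
    "domain_b": [[0, 200, 900], [5, 700, 1500], [30, 60, 1000]],
}
dispersions = [
    [temporal_dispersion(evidence_set) for evidence_set in evidence_sets]
    for evidence_sets in toy_domains.values()
]
print("H =", round(kruskal_wallis_h(dispersions), 3))
```

The sketch only computes the H statistic. Obtaining a p-value requires comparing H against a chi-square distribution with (number of groups - 1) degrees of freedom, which a library routine such as `scipy.stats.kruskal` does directly.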