HYPERION RESEARCH UPDATE: Research Highlights In HPC, HPDA-AI, Cloud Computing, Quantum Computing, and Innovation Award Winners ISC19

Earl Joseph, Steve Conway,

Bob Sorensen, and Alex Norton

Important Notes

▪ If you want a copy of the slides:

• Please register at our homepage

(www.HyperionResearch.com)

→ or leave a business card

▪ Check out our web sites:

• www.HyperionResearch.com

• www.hpcuserforum.com

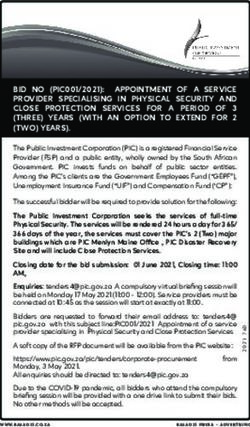

© Hyperion Research

Agenda

1. Example Hyperion Research Projects

2. Update on the HPC Market

3. Cloud Computing Update and Our New Scorecard Tool

4. Quantum Computing Update

5. The Exascale Race

6. The ISC19 Innovation Award Winners

7. Conclusions and Predictions

The Hyperion Research Team

Earl Joseph Research studies & strategic consulting

Steve Conway Strategic consulting, HPC UF, Big Data, AI

Bob Sorensen Strategic research, government studies, QC

Alex Norton Special studies, new data analysis, clouds

Lloyd Coen

Mike Thorp Global sales management

Kurt Gantrish Global sales management

Jean Sorensen Business manager

Tom Christian Survey design & executive interviews

Nishi Katsuya Japan research and studies

Hyperion Research HPC Activities

• Track all HPC servers sold each quarter

• By 28 countries

• 4 HPC User Forum meetings each year

• Publish 85 plus research reports each year

• Visit all major supercomputer sites & write reports

• Assist in collaborations between buyers/users and vendors

• Assist governments in HPC plans, strategies and direction

• Assist buyers/users in planning and procurements

• Maintain 5 year forecasts in many areas/topics

• Develop a worldwide ROI measurement system

• HPDA program (includes ML/DL/AI)

• HPC Cloud usage tracking

• Quarterly tracking of GPUs/accelerators

• Cyber Security

• Quantum Computing

• Map applications to algorithms to architectures

Plan On Joining Us This Year:

2019 HPC User Forum Meetings

(www.hpcuserforum.com)

Plus China in August

September 9-11

Argonne National Laboratory

(Chicago area)

October 7-8

CSCS: Swiss National

Supercomputing Center (Lugano)

October 10-11

EPCC

(Edinburgh)

HPDA & AI Key Takeaway:

Most Economically Important Use Cases

Precision Medicine

Automated Driving Systems

Fraud and anomaly detection

Affinity Marketing

Business Intelligence

Cyber Security

IoT

High Growth Areas: HPDA-AI (May 2019)

• HPDA is growing faster than the overall HPC market

• The AI subset is growing faster than all HPDA

The ROI From HPC & Success Stories

www.HyperionResearch.com/roi-with-hpc/

HPDA Algorithm Report: Mapping Algorithms to Verticals & System Requirements

https://hyperionresearch.com/proceed-to-download/?doctodown=hpda-algorithm-report

Finding HPC Centers (Map)

www.HyperionResearch.com/us-hpc-centers-activity/

Our Forecast On When & Where Exascale Systems Will Be Installed

QC Documents are Starting to Roll Out

A New Cloud Application Assessment Tool

https://hyperionresearch.com/cloud-application-assessment-tool/

Price/Performance Trends of the

Largest Supercomputers

▪ This trendline projects

that the cost of a Linpack

PF will go from $100

million in 2009, to $110

thousand by 2028, a

roughly 1000X

improvement in a decade.
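
As a rough worked check of the trendline figures above, the sketch below takes only the two quoted endpoints ($100 million per Linpack PF in 2009, $110 thousand in 2028) and derives the overall improvement and the implied yearly and ten-year gains. It assumes a constant exponential rate between the endpoints, which is an assumption of this sketch and not something the slide states; all variable names are illustrative.

```python
# Endpoints quoted on the slide, in dollars per Linpack petaflop.
cost_2009 = 100e6   # $100 million in 2009
cost_2028 = 110e3   # $110 thousand projected for 2028

years = 2028 - 2009                          # span between the quoted endpoints
improvement = cost_2009 / cost_2028          # overall price/performance gain

# Assumption: the gain compounds at a constant rate every year.
annual_factor = improvement ** (1 / years)   # implied gain per year
decade_factor = annual_factor ** 10          # implied gain over any 10 years

print(f"overall improvement: {improvement:.0f}x over {years} years")
print(f"implied gain per year: {annual_factor:.2f}x")
print(f"implied gain per decade: {decade_factor:.0f}x")
```

Under these assumptions the quoted endpoints are 19 years apart, so the roughly 1000X figure describes the whole 2009 to 2028 span.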

Tipping Points: How Quickly HPC Buyers Can Change

Tracking New AI Hardware: Emergence of AI-Specific Hardware Ecosystem

HPC Market Update

Top Trends in HPC

2018 was a very strong year with over 15% growth --

$13.7 billion (US$) in revenues!

• Supercomputers grew 23% = $5.4 billion in 2018

The top systems have started growing again after

over 4 years of softness

• The profusion of Exascale announcements is

generating a lot of buzz

Big data combined with HPC is creating new solutions

• Adding many new users/buyers to the HPC space

• AI/ML/DL & HPDA are the hot new areas

The Worldwide HPC Server Market:

$13.7 Billion in 2018

▪ Record revenues!

HPC Servers: $13.7B

• Supercomputers (over $500K): $5.4B

• Divisional ($250K - $500K): $2.5B

• Departmental ($100K - $250K): $3.9B

• Workgroup (under $100K): $2.0B

2018 HPC Market By Verticals

HPC Market By Vendor Shares

HPC Market By Regions (US $ Millions)

The Broader HPC Market Forecast

The Total HPC Market Including Public Cloud Spending

▪ Total HPC spending grew from $22B in 2013 to $26B in 2017, and is projected to reach $44B in 2022
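
To put the three spending figures above on a common footing, the sketch below converts them into compound annual growth rates (CAGR). The `cagr` helper is a hypothetical name introduced here for illustration. Only the dollar amounts and years come from the slide; the rates are derived arithmetic, not figures published by Hyperion Research.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1

# Total HPC spending in billions of US dollars, as quoted on the slide.
historical = cagr(22, 26, 2017 - 2013)   # 2013 -> 2017 (actual)
projected = cagr(26, 44, 2022 - 2017)    # 2017 -> 2022 (forecast)

print(f"2013-2017 CAGR: {historical:.1%}")
print(f"2017-2022 CAGR: {projected:.1%}")
```

On these numbers the forecast period implies a markedly higher yearly growth rate than the historical one.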

HPC Cloud Forecasts

Major Trend

HPC in the Cloud

▪ Over 70% of HPC sites run some jobs in public clouds

• Up from 13% in 2011

▪ Over 10% of all HPC jobs are now running in clouds

• Public clouds are cost-effective for some jobs, but up to

10x more expensive for others

• Key concerns: security, data loss

▪ Private and hybrid cloud use is growing faster

▪ Large public clouds are going heterogeneous – and are

getting much better at running a broader set of HPC

workloads

• AWS with Ryft FPGAs, Google with NVIDIA GPGPUs,

Microsoft with Cray & Bright

Top Reasons for Using External Clouds

All Sites

1. Extra capacity (“surges”)

2. Cost-effectiveness

3. Isolate R&D projects

4. Special HW/SW features

5. Management decision

6. No on-premise data center

Government Only

1. Special HW/SW features

2. Extra capacity (“surges”)

3. No on-premise data center

4. Cost-effectiveness

5. Management decision

6. Isolate R&D projects

How We Developed the HPC Cloud

Forecast

▪ A public cloud, as we define it, is any large, third-

party cloud that sits outside of an organization's

firewall and is accessible in return for payment

by anyone over the public Internet

• And is owned by a different organization

• It excludes private clouds

▪ Our market forecast is from the point of view of

the end user

• The amount end users spend in public clouds

• It’s not a measure of the hardware within the clouds

HPC Public Cloud Forecast

What We Often Hear

“My boss says, why can’t you do everything in the cloud?”

“Clouds are only for embarrassingly parallel jobs.”

“It’s much more expensive to run jobs in the cloud.”

“It’s much cheaper to run jobs in the cloud.”

“I’m worried about data security and data loss in the cloud.”

So we created a tool/scorecard to help understand

the fit of public clouds for HPC applications…

The Hyperion Research

Cloud Friendliness

Scorecard Tool

About This Tool

▪ This tool is designed to help users decide between two

popular environments for running HPC workloads:

• On premise facilities or in public clouds.

▪ The metrics and observations are drawn from our

global research and interactions with end users, CSPs

and other members of the worldwide HPC community.

▪ The scorecard at the end of this slide deck allows users

to record their responses concerning each application

or workload they evaluate using this tool.

Hyperion Research offers this tool without charge to the HPC community, in

the hope that it will be useful.

We welcome feedback on the tool: Contact Alex Norton,

anorton@hyperionres.com

How to Use This Tool

▪ To use the tool, select a number on each

scale (from 0 to 10) for each attribute, then

average them for an overall score.

• The tool consists of a series of “sliders” that you

set between two end points, “Public Cloud Favored”

or “On Premise Favored,” for each workload you

evaluate.

• The scorecard at the end shows where your overall

evaluation falls:

▪ A lower score favors the on-prem option

▪ A higher score favors the public clouds option
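
The scoring procedure described above (pick 0 to 10 on each slider, then average) can be sketched as follows. This is a minimal illustration, not the actual Hyperion Research tool: the attribute names in the example are hypothetical, and treating 5.0 as the dividing line between the two outcomes is an assumption, since the slides only say that lower scores favor on-prem and higher scores favor public clouds.

```python
from statistics import mean

def overall_score(slider_scores):
    """Average the 0-10 slider values recorded for one workload."""
    for name, value in slider_scores.items():
        if not 0 <= value <= 10:
            raise ValueError(f"{name}: score {value} is outside 0-10")
    return mean(list(slider_scores.values()))

def lean(score, midpoint=5.0):
    """Interpret a score; the midpoint of 5.0 is an assumed threshold."""
    if score > midpoint:
        return "public cloud favored"
    if score < midpoint:
        return "on premise favored"
    return "in the middle"

# Hypothetical attributes for one workload (names are illustrative only).
example = {
    "data_movement": 3,
    "burstiness": 8,
    "security_sensitivity": 2,
    "interconnect_dependence": 4,
}
score = overall_score(example)
print(score, lean(score))
```

A simple unweighted mean matches the "average them" instruction on the slide; weighting some attributes more heavily would be a departure from it.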

Tool Scorecard

Next Steps

✓ Provided as an online tool

➢ Go To: www.HyperionResearch.com (Special Projects)

▪ Collect data on a large, diverse set of HPC, HPDA &

AI applications (just started)

• And score them using this tool

▪ Then create a directory of HPC applications, and

identify the major roadblocks for each area:

• Those that are public cloud friendly

• Those that fit better on-prem

• Those that are in the middle

▪ Then conduct custom studies for clients

Quantum Computing

This Effort is an Amplification of Long-Term Coverage of QC by Hyperion Research

The Promise of QC is Substantial…

QC systems have the potential to exceed the performance

of conventional computers for problems of importance to

humankind and businesses alike in areas such as:

• Cybersecurity

• Materials science

• Chemistry

• Pharmaceuticals

• Machine learning

• Optimization

And the list grows longer each day

… But So Are the Challenges Ahead

• Formidable technical issues in QC hardware and

software

• Uncertain performance gains

• Unclear time frames

• Wild West in algorithm/application progress

• Looming workforce issues

This complicates treating QC as a stable market

alongside more traditional IT sectors

• Making a business case is tough

Errors … Errors Everywhere

Many Developers, Many Approaches

• D-Wave

• IBM

• Google

• Quantum Circuits

• IonQ

• Intel

• Rigetti

• Microsoft

• Quantum Diamond Technologies

• ATOS/Bull

• More…

▪ All are dealing with errors: NISQ is the near-term

solution

Key Questions To Be Addressed

• What are the leading development trends in QC

hardware and software?

• Who are the most significant QC developers and how do they

compare with counterpart efforts?

• What is the likelihood of success and time frame for leading QC

development efforts?

• Which verticals can benefit most from near-term QC

advances?

• What is the expected time frame/schedule for various

QC hardware and software availability?

• What are some of the key technology drivers and inhibitors in

QC development?

• What applications will benefit most from QC in both the

near and far term?

What We Are Hearing, INPO

▪ Current business case -- FOMO

• Luckily, barrier to entry is low

• Divining ROI is not yet possible

▪ Provable algorithms may not be necessary

• ML/DL developers don’t lose sleep over this

• Best/worst case vs average case (GE)

▪ Quantum Supremacy/Advantage not necessary

• Demonstrated speed up over classical is all that

matters

• The enterprise space doesn't want to have to look too

far down the stack

▪ Living in the age of NISQ

• Pair with classical to get near-term results

• Concentrate on algorithms that are fault-tolerant

How This Plays Out Near-Term

▪ Near-term QC Ramp Up

• Many exploring applications/use cases and not just for traditional

HPC but also in enterprise computing environments

• The QC/SME application developer may be the next unicorn

▪ Cloud Access Model looks to be short-term preference

▪ Crowd sourcing of algorithms/applications is current order

of the day

▪ Will this succeed?

▪ We are not at the Moore’s Law stage yet

▪ National agendas are a double-edged sword

• As are national security considerations

▪ A rising tide lifts all boats

• For now

The Exascale Race

Projected Exascale System Dates

U.S.

▪ Sustained ES*: 2022-2023

▪ Peak ES: 2021

▪ ES Vendors: U.S.

▪ Processors: U.S. (some ARM?)

▪ Cost: $500M-$600M per system (for early systems), plus heavy R&D investments

EU

▪ Peak ES: 2023-2024

▪ Pre-ES: 2020-2022 (~$125M)

▪ Vendors: US and then European

▪ Processors: x86, ARM & RISC-V

▪ Initiatives: EuroHPC, EPI, ETP4HPC, JU

▪ Cost: Over $300M per system, plus heavy R&D investments

China

▪ Sustained ES*: 2021-2022

▪ Peak ES: 2020

▪ Vendors: Chinese (multiple sites)

▪ Processors: Chinese (plus U.S.?)

▪ 13th 5-Year Plan

▪ Cost: $350-$500M per system, plus heavy R&D

Japan

▪ Sustained ES*: ~2021/2022

▪ Peak ES: Likely as an AI/ML/DL system

▪ Vendors: Japanese

▪ Processors: Japanese ARM

▪ Cost: ~$1B, this includes both 1 system and the R&D costs

▪ They will also do many smaller size systems

* 1 exaflops on a 64-bit real application

Projected Exascale Investment Levels

(In Addition to System Purchases)

U.S.

▪ $1 to $2 billion a year in R&D (around $10 billion over 7 years)

▪ Investments by both the government & vendors

▪ Plans are to purchase multiple exascale systems each year

EU

▪ About 5-6 billion euros in total (around $1 billion a year)

▪ EU: 486M euros, Member States: 486M euros, Private sector: 422M euros

▪ Investments in multiple exascale and pre-exascale systems

▪ Large EU CPU funding

China

▪ Over $1 billion a year in R&D (at least $10 billion over 7 years)

▪ Investments by both governments & vendors

▪ Plans are to purchase multiple exascale systems each year

▪ Investing in 3 pre-exascale systems starting in late 2018

Japan

▪ Planned investment of over $1 billion* (over 5 years) for both the R&D and purchase of 1 exascale system

▪ To be followed by a number of smaller systems, ~$100M to $150M each

▪ Creating a new processor and a new software environment

* Note that this includes both the system and R&D

US Exascale Plans

Frontier El Capitan NSF

A21 A22 (OLCF5) (ATS-4) NERSC-10 Frontera

Follow-on

Location ANL ANL ORNL LLNL LBNL/NERSC TACC

Planned Delivery 2022 Q1 2022 2022 Q1 2022 2024 2024

Date/ Estimated

Early Operation 2022, Q2 2023 2022, Q3 2023 2025 2025

Planned/Realized ~1,000 1,300 or 1500- 4000-5000 8000- 500

Performance (Pflops) higher 3000 12000

Linpack Performance 800-900 780-1040 900-2100 2000-3000 2000-3000

(PFlops)

Linpack/Peak 80-90 60-70 60-70

Performance Ratio (est.) (est.) (est.) 50-60 50-60 50-55

(%)

High Performance

Conjugate Gradient 20.0-22.5 19.5-26.0 18-36 48-72 52-78 74

(PFlops/s)

GF/Watt 40 60-100 134-200 266-480

Chinese Exascale Plans

| | Sunway 2020 | Sugon Exascale | NUDT 2020 |
|---|---|---|---|
| Key User/Developer | Sunway/NRCPC | Sugon/AMD | NUDT |
| Planned Delivery Date/Estimated | 2020, 4Q (could slip 1-1.5 years) | 2020, 4Q (could slip 1-1.5 years) | 2020, 4Q (could slip 1-1.5 years) |
| Planned/Realized Performance (PFlops) | 1000 | 1024 | 1000 |
| Linpack Performance (PFlops) | 600-700 | 627-732 | 700-800 |
| Linpack/Peak Performance Ratio (%) | 60-70 | 60-70 (est.) | 70-80 |
| High Performance Conjugate Gradient (PFlops/s) | 6-7 | 9.4-10.1 | 14-16 |
| GF/Watt | 30 | 34.13 | 20-30 |
| Linpack GF/Watt | 20-23 | 20.9 | 23.3-32.0 |

EU Exascale Plans

In Comparison: European Growth Compared To

USA Growth In Acquiring Supercomputers

▪ Europe has nearly doubled its supercomputer purchases since

2005 (94.9% growth)

▪ By comparison, US purchases have grown by only 18.6% since 2005

The European JU Plan

New procurements for 8 systems:

http://europa.eu/rapid/press-release_IP-19-2868_en.htm

The JU, along with the hosting sites, plans to acquire 8 supercomputers:

• 3 precursor-to-exascale machines (more than 150 Petaflops)

• And 5 petascale machines (at least 4 Petaflops)

The precursor-to-exascale systems are expected to provide 4-5 times

more computing power than the top PRACE supercomputers

• Together with the petascale systems, they will double the

supercomputing resources available for European-level use

• In the next few months the Joint Undertaking will sign

agreements with the selected hosting sites

• The supercomputers are expected to become operational during

the second half of 2020

New EU Processors

EPI Roadmap Targets Inclusion in Pre- and Full-Exascale Supercomputers

EPI General Purpose Processor (GPP) and Variants

The

HPC Innovation Award

and Winners

Our Award Program

Examples Of Previous Winners

The Trophy For Winners

HPC Award Program Goals

▪ #1 Help to expand the use of HPC by showing real ROI

examples:

▪ Expand the “Missing Middle” – SMBs, SMSs, etc. by providing

examples of what can be done with HPC

▪ Show mainstream and leading edge HPC success stories

▪ #2 Create a large database of success stories across

many industries/verticals/disciplines

▪ To help justify investments and show non-users ideas on how

to adopt HPC in their environment

▪ Creating many examples for funding bodies and politicians to

use and better understand the value of HPC → to help grow

public interest in expanding HPC investments

▪ For OEMs to demonstrate success stories using their products

Users Must Show the Value of the

Accomplishment

▪ Users are required to submit the value achieved with

their HPC system, using three broad categories:

a) Dollar value of their HPC project

– e.g., made $$$ in new revenues

– Or saved $$$ in costs

– Or made $$$ in profits

b) Scientific or engineering accomplishment from their project

– e.g. discovered how xyz really works, develop a new drug

that does xyz, etc.

c) The value to society as a whole from their project

– e.g. ended nuclear testing, made something safer, provided

protection against xyz, etc.

… and the investment in HPC that was required

ISC19 Winners: HPC User Innovation Awards

Massively Parallel Evolutionary Computation

for Empowering Electoral Reform: Quantifying

Gerrymandering via Multi-objective

Optimization and Statistical Analysis

▪ Wendy K. Tam Cho, NCSA

▪ Yan Liu, NCSA

Gerrymander: manipulate the

boundaries of electoral districts

so as to favor one party or group

Project DisCo (Discovery of Coherent Structures)

▪ Adam Rupe, UC-Davis, NERSC

▪ James Crutchfield, UC-Davis

▪ Karthik Kashinath, NERSC

▪ Ryan James, UC-Davis

▪ Nalini Kumar, Intel

▪ Prabhat, NERSC

Can machine learning help find modes of laser-driven fusion that have been undiscovered by traditional methods?

▪ Brian Spears, et al., LLNL

© LLNL, 2019

Hidden Earthquake Research

Caltech team: Zachary Ross, Daniel Trugman,

Egill Hauksson, Peter Shearer

We Invite Everyone

To Apply For The Next Round Of

Innovation Awards!

In Summary:

Some Predictions

For the Next Year

The Exascale Race

Will Drive New Technologies

▪ The global ES race is boosting funding for the

Supercomputers market segment and creating

widespread interest in HPC

▪ Exascale systems are being designed for HPC, AI,

HPDA, etc.

• This will drive new processor types, new

memories, new system designs, new software,

etc.

▪ And (in some cases) it is reinforcing the view that HPC

is too strategic to depend on foreign sources

• This has led to indigenous technology initiatives

The HPC-Enterprise Market Convergence Will Drive HPC Products into the Broader Enterprise Market

▪ Hyperion Research studies confirm that this convergence is occurring and speeding up

▪ Competitive forces are driving companies to aim more-complex questions at their data structures and push business operations closer to real time

▪ Important HPC capabilities: scalable parallel processing, ultrafast data movement and ultra-large, capable memory systems

▪ The HPC and commercial sectors are also converging around a shared need to run extremely data-intensive AI-ML-DL workloads, on both the simulation and analytics sides

Many New Processors/Accelerators

Are on The Way

▪ Choices of processing elements (CPUs, accelerators)

will increase

• x86 will remain the dominant HPC CPU, but indigenous

CPUs will gain ground

▪ In AI, startups and large companies are developing

processors designed for analytics workloads

▪ NVIDIA is the dominant accelerator, but three-quarters

of respondents in our recent survey expect NVIDIA

GPUs to face "serious competition" in the next 4-5 years

▪ Processors exploiting ARM IP are planned for Europe

(EPI), Japan (Post-K computer) and China

Storage Systems Will Increasingly Become More Critical

▪ Data-intensive HPC is driving new storage requirements

▪ Iterative methods will expand the size of data volumes needing to be stored

▪ Future architectures will allow computing and storage to happen more pervasively on the HPC infrastructure

▪ Metadata management will deal with data stored in multiple geographic locations and environments

▪ Physically distributed, globally shared memory will become more important

▪ More intelligence will need to be built into storage software

Cloud Computing For HPC Workloads

Will Grow Fast, Via Tipping Points

▪ Running HPC workloads in CSP environments will grow

in step-function like leaps

• As clouds get better at running HPC workloads

▪ HPC on premise and cloud environments will more

closely resemble each other: the containerization of HPC

▪ 5G will be important for reducing latency in AI use cases

that rely on coupled local-cloud environments, such as

automated driving systems, precision medicine, fraud

detection, cyber security and Smart Cities/IoT

▪ Hybrid cloud environments will grow to be a highly viable

option for HPC users

Artificial Intelligence Will Grow

Faster Than Everything Else

▪ The AI market is at an early stage but already highly

useful (e.g., visual and voice recognition)

• Once better understood, there are many high value use cases

that will drive adoption

▪ Advances in inferencing will reduce the amount of

training needed for today's AI tasks, but the need for

training will grow to support more challenging tasks

▪ The trust (transparency) issue that strongly affects AI

today will be overcome in time

▪ Learning models (ML, DL) have garnered most of the AI

attention, but graph analytics will also play a crucial role

with its unique ability to handle temporal and spatial

relationships

Conclusions

▪ HPC is a high growth market

• Growing recognition of HPC’s strategic value

• HPDA, including ML/DL, cognitive and AI

• HPC in the Cloud will lift the sector writ large

▪ HPDA, AI, ML & DL are growing very quickly

• The HPDA, AI, ML & DL markets will expand

opportunities for vendors

▪ Vendor share positions shifted greatly in

2015, 2016, 2017 & again in 2019 and may

continue to shift

• e.g., HPE acquisition of Cray

▪ Software continues to lag hardware and

systems designs/sizes

Important Dates For Your Calendar

▪ 2019 HPC USER FORUM MEETINGS:

• Late August, Hohhot and Beijing, China

• September 9 to 11, Chicago, Illinois, Argonne

National Laboratory

• October 7 to 8, Lugano, Switzerland at CSCS

• October 10 to 11, Edinburgh, Scotland at

EPCC

▪ And Join us for the Hyperion SC19 Briefing

Thank You!

QUESTIONS?

INFO@HYPERIONRES.COM

Visit Our Website: www.HyperionResearch.com

Twitter: @HPC_Hyperion

Hyperion Research Holdings, LLC:

▪ Owns 100% of the IDC HPC assets

▪ Tracking the HPC market since 1986

▪ Headquarters:

365 Summit Ave., St. Paul, MN 55102
