CURAJ_IIITDWD@LT-EDI-ACL2022: Hope Speech Detection in English YouTube Comments using Deep Learning Techniques

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

CURAJ_IIITDWD@LT-EDI-ACL2022: Hope Speech Detection in English

YouTube Comments using Deep Learning Techniques

Vanshita Jha Ankit Kumar Mishra

Central University of Rajasthan, India Goa University, India

vanshitajha@gmail.com ankitmishra.in.com@gmail.com

Sunil Saumya

Indian Institute of Information Technology Dharwad, India

sunil.saumya@iiitdwd.ac.in

Abstract (Chakravarthi et al., 2021, 2020). While the most

effective expression in real life is face or visual

Hope Speech are positive terms that help to expression, which frequently delivers a much more

promote or criticise a point of view without efficient message than linguistic words, expressions

hurting the user’s or community’s feelings.

in virtual life, such as social media, are frequently

Non-Hope Speech, on the other side, includes

expressions that are harsh, ridiculing, or de- expressed through linguistic texts (or words) and

motivating. The goal of this article is to emoticons. These words have a significant impact

find the hope speech comments in a YouTube on one’s life (Sampath et al., 2022; Ravikiran et al.,

dataset. The datasets were created as part of the 2022; Chakravarthi et al., 2022b; Bharathi et al.,

"LT-EDI-ACL 2022: Hope Speech Detection 2022; Priyadharshini et al., 2022). For example, if

for Equality, Diversity, and Inclusion" shared we respond to someone’s social media post with

task. The shared task dataset was proposed

“Well Done!", “Very Good", “Must do it again",

in Malayalam, Tamil, English, Spanish, and

Kannada languages. In this paper, we worked “need a little more practise", and so on, it may in-

at English-language YouTube comments. We stil confidence in the author. On the other hand,

employed several deep learning based mod- negative statements such as “You should not try

els such as DNN (dense or fully connected it", “You don’t deserve it", “You are from a dif-

neural network), CNN (Convolutional Neural ferent religion", and others demotivate the person.

Network), Bi-LSTM (Bidirectional Long Short The comments that fall into the first group are re-

Term Memory Network), and GRU(Gated Re- ferred to as “Hope Speech" while those that fall

current Unit) to identify the hopeful comments.

into the second category are referred to as “Non-

We also used Stacked LSTM-CNN and Stacked

LSTM-LSTM network to train the model. The hope speech" or “Hate Speech" (Kumar et al., 2020;

best macro avg F1-score 0.67 for development Saumya et al., 2021; Biradar et al., 2021).

dataset was obtained using the DNN model.The In the previous decade, researchers have worked

macro avg F1-score of 0.67 was achieved for heavily on hate speech identification in order to

the classification done on the test data as well. maintain social media clean and healthy. How-

ever, in order to improve the user experience, it is

1 Introduction also necessary to emphasise the message of hope

In recent years, the majority of the world’s popu- on these sites. To our knowledge, the shared task

lation has access to social media. The social me- "LT-EDI-EACL 2021: Hope Speech Detection for

dia’s posts, comments, articles, and other content Equality, Diversity, and Inclusion1 " was the first

have a significant impact on everyone’s lives. Peo- attempt to recognise hope speech in YouTube com-

ple tend to believe that their lives on social media ments. The organizers proposed the shared task

are the same as their real lives, therefore the in- in three different languages that is English, Tamil

fluence of others’ opinions or expressions is enor- and Malayalam. Many research teams from all

mous (Priyadharshini et al., 2021; Kumaresan et al., over the world took part in the shared task and

2021). People submit their posts to social network- contributed their working notes to describe how

ing platform and receive both positive and negative to identify the hope speech comments. (Saumya

expressions from their peer users. and Mishra, 2021) used a parallel network of CNN

and LSTM with GloVe and Word2Vec embeddings

People in a multilingual world use a variety

and obtained a weighted F1-score of 0.91 for En-

of languages to express themselves, including

English, Hindi, Malayalam, French, and others 1

https://sites.google.com/view/lt-edi-2021/home

190

Proceedings of the Second Workshop on Language Technology for Equality, Diversity and Inclusion, pages 190 - 195

May 27, 2022 ©2022 Association for Computational Linguisticsglish Dataset. Similarly, for Tamil and Malayalam Output layer

they trained parallel Bidirectional LSTM model

and obtained F1-score of 0.56 and 0.78 respec-

Fully connected layers

Dense(4)

tively. (Puranik et al., 2021) trained several fine

Dense(64)

tuned transformer models and identified that ULM-

FiT is best for English with F1-score 0.9356. They

Flatten Vector

(1x3000)

also found that mBERT obtained 0.85 F1-score on

Malayalam dataset and distilmBERT obtained 0.59

Embedded Vector

Representation

F1-score on Tamil dataset. For the same task a

(1x300)

fine tuned ALBERT model was used by (Chen and

representation

(1x20255)

One-hot

Kong, 2021) and they obtained a F1-score of 0.91. Every word is represented in a one-hot vector of vocabulary dimension

Similarly, (Zhao and Tao, 2021; Huang and Bai,

Data cleaning and Pre-processing

(Cleaned text) (Preprocessed text)

2021; Ziehe et al., 2021; Mahajan et al., 2021) em- [ 3005, 4871, 466, 48, 25, ]

Data preprocessing: Tokenization and

ployed XLM-RoBERTa-Based Model with Atten- Here indices are

taken randomly.

bag of words creation, proving indices to

every unique word, encoding, and

tion for Hope Speech Detection. (Dave et al., 2021) sasha dumse god accepts everyone

padding

experimented the conventional classifiers like lo- Data cleaning: converting lowercase,

removing number, puctuation, space, links

gistic regression and support vector machine with and removing stopwords

TF_IDF character N-gram fearures for hope speech Sasha Dumse God Accepts Everyone

classification.

(Raw Example Sentence from training set)

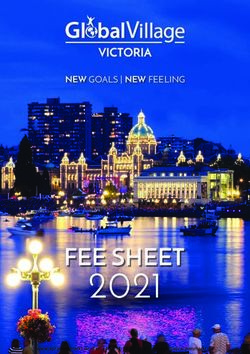

Figure 1: A DNN network for hope speech detection

ACL 2022 will see the introduction of a new edi-

tion of the shared task "Hope Speech Detection for 2 Task and data description

Equality, Diversity, and Inclusion." In contrast to

LT-EDI-EACL 2021, this time the shared task LT- At LT-EDI-ACL 2022, the shared task on Hope

EDI-ACL 2022 has been proposed in five different Speech Detection for Equality, Diversity, and

languages: English, Malayalam, Tamil, Kannada, Inclusion (provided in English, Tamil, Spanish,

and Spanish (Chakravarthi, 2020; Chakravarthi and Kannada, and Malayalam) intended to determine

Muralidaran, 2021; Chakravarthi et al., 2022a). whether the given comment was Hope speech or

The data was extracted via the YouTube platform. not. The dataset was gathered from the YouTube

We took part in the competition and worked on the platform. In the given dataset, there were two fields

dataset of English hope speech comments. The for each language: comment and label. We only

experiments were carried out on several neural net- submitted the system for the English dataset. In the

work based models such as a dense or multilayer English training dataset, there were approximately

neural network (DNN), one layer CNN network 22740 comments, with 1962 labeled as hope speech

(CNN), one layer Bi-LSTM network (Bi-LSTM), and 20778 labeled as non-hope speech. There were

and one layer GRU network (GRU), among deep 2841 comments in the development dataset, with

learning networks. The stack connections of 272 hope speech and 2569 non-hope speech com-

LSTM-CNN and LSTM-LSTM were also trained ments.The test dataset contained 250 hope speech

for hope speech detection. After all experimen- and 2593 non-hope speech comments. The English

tation, it was found that DNN produced the best dataset statistics is shown in Table 1.

results with macro average F1-score of 0.67 on

development as well as on test dataset.

3 Methodology

The rest of the article is oragnized as follows. Several deep learning models were developed to

The next section 2 give the details of the given task identify the hope speech from supplied English

and dataset statistics. This is followed by the de- YouTube comments. The architecture of our best

scription of methodology used for experimentation model DNN, as depicted in Figure 1, will be ex-

in Section 3. The results are explained in the Sec- plained in this section. We also explain the archi-

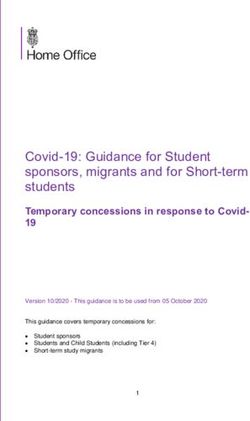

tion 4. At the end, Section 5 talks about future tecture of stacked network LSTM-LSTM as shown

scope of the research. in Figure 2.

191Hopespeech Non Hopespeech Total

Training 1962 20778 22740

Development 272 2569 2841

Test 250 2593 2843

Total 2434 25940 28424

Table 1: English Dataset statistics

a single layer CNN model and a single layer Bi-

Classification

Non-

Hope

Hope LSTM model were constructed. Finally, we built

stacked LSTM-CNN and stacked LSTM-LSTM

models shown in Figure 2. In Section 4, the re-

sults of each model are discussed. Regardless of

Feature extraction using

LSTM LSTM LSTM LSTM LSTM the model, the feeding input and collecting output

stacked LSTM

(256) (256) (256) (256) (256)

were the same in all instances. The whole process

flow from input to output is depicted in Figures 1

LSTM LSTM LSTM LSTM LSTM

(256) (256) (256) (256) (256)

and 2. As can be seen, there were three stages to

the process: data preparation, feature extraction,

Data cleaning and Pre-processing

(Cleaned text) (Preprocessed text)

[ 3005, 4871, 466, 48, 25 ] and classification. The biggest distinction among

Data preprocessing: Tokenization and

Here indices are

taken randomly.

bag of words creation, proving indices to

every unique word, encoding, and

the models was in the feature extraction criterion.

padding

sasha dumse god accepts everyone To demonstrate the model flow, a representative

Data cleaning: converting lowercase,

removing number, puctuation, space, links

and removing stopwords

example from the English Dataset is used. The

Sasha Dumse God Accepts Everyone

representative example “Sasha Dumse God Ac-

(Raw Example Sentence from training set)

cepts Everyone." was first changed to lower case

as “sasha dumse god accepts everyone.". During

Figure 2: A stacked LSTM network for hope speech the data cleaning process, the dot(.) is eliminated.

detection

The lowercase text was then encoded and padded

into a sequence list as “[3005, 4871, 466, 48, 25]".

3.1 Data cleaning and pre-processing The index “3005" refers to the word “sasha", the

index “4871" to the word “dumse," and so on. The

We used a few early procedures to convert the raw

sentence was padded into the length of ten.

input comments into readable input vectors. We

started with data cleaning and then moved on to After preprocessing the data, each index is

data preprocessing. Every comment was changed turned into a one-hot vector ( of 1x20255 dimen-

to lower case during data cleaning. Numbers, punc- sion) with a size equal to the vocabulary. The

tuation, symbols, emojis, and links have all been resultant one-hot vector was sparse and high di-

removed. The nltk library was used to eliminate mensional, and it was then passed through an em-

stopwords like the, an, and so on. Finally, the ex- bedding layer, yielding a low dimensional dense

tra spaces were removed, resulting in a clean text. embedding vector (of 1x 300 dimension). Be-

During data preprocessing, we first tokenized each tween the input and embedding layers, many sets

comment in the dataset and created a bag of words of weights were used. We experimented with ran-

with an index number for each unique word. The dom weights as well as pre-trained Word2Vec and

comments were then turned into an index sequence. GloVe weights, but found that random weights ini-

The length of the encoded vectors was varied. Af- tialization at the embedding layer performed better.

ter that, the encoded indices vector was padded to As a result, we’ve only covered the usage of ran-

form an equal-length vector. In our case we kept dom weights at the embedding layer in this article.

the length of each vector as ten. For abstract level feature extraction, the embed-

ded vector was provided as an input to a stacked

3.2 Classification Models DNN or LSTM layer as shown in Figures 1 and 2

Several deep learning classification models were respectively. Finally, the collected features were

developed. We started with a multilayer dense neu- classified into hope and non-hope categories using

ral network (DNN) as shown in Figure 1. After that, a dense (or an output) layer.

192Table 2: Results of English Development dataset

Methods Metrics Non-Hope Hope Macro Avg Weighted Avg

Precision 0.43 0.93 0.68 0.89

DNN Recall 0.38 0.95 0.66 0.89

F1-score 0.40 0.94 0.67 0.89

Precision 0.39 0.93 0.66 0.88

CNN Recall 0.37 0.94 0.65 0.88

F1-score 0.38 0.94 0.66 0.88

Precision 0.39 0.94 0.66 0.88

Bi-LSTM Recall 0.40 0.93 0.67 0.88

F1-score 0.40 0.94 0.67 0.88

Precision 0.39 0.94 0.66 0.88

GRU Recall 0.38 0.94 0.66 0.88

F1-score 0.38 0.94 0.66 0.88

Precision 0.41 0.93 0.67 0.88

LSTM-CNN Recall 0.35 0.95 0.65 0.89

F1-score 0.38 0.94 0.67 0.87

Precision 0.34 0.94 0.64 0.88

LSTM-LSTM Recall 0.43 0.91 0.67 0.86

F1-score 0.38 0.92 0.65 0.89

Table 3: Results of English test dataset

Metrices Non-Hope Hope Macro Avg Weighted Avg

Precision 0.40 0.94 0.67 0.89

Recall 0.38 0.94 0.66 0.90

F1 score 0.39 0.94 0.67 0.89

4 Results produced from the DNN model.

All of the experiments were carried out in the Keras 5 Conclusion

and sklearn environment. We used the pandas li-

As part of the joint task LT-EDI-ACL2022, we pre-

brary to read the datasets. Keras preprocessing

sented a model provided by team CURJ_IIITDWD

classes and the nltk library were used to prepare the

for detecting hope speech on an English dataset ob-

dataset. All the results shown in Table 2 is on En-

tained from the YouTube platform. We used many

glish development dataset. The initial experiment

deep learning algorithms in the paper and found

was with dense neural network (DNN). The three

that DNN with three hidden layers performed best

layers of dense network with relu activation (in the

on the development and test dataset with a macro

internal layer) and sigmoid activation at the output

average F1-score of 0.67. In the future, we can im-

layer were trained with English comment dataset.

prove classification performance by training trans-

Similarly, the experiments were performed with

fer learning models like BERT and ULMFiT ans

CNN, Bi-LSTM, GRU, LSTM-CNN, and LSTM-

so on.

LSTM. The best result was obtained by DNN with

macro average F1-score 0.67. The results of other

models are shown in Table 2. Later, once the la-

References

bels for test dataset was released by the organizers,

we also collected the model performance on test B Bharathi, Bharathi Raja Chakravarthi, Subalalitha

dataset. The macro average F1-score achieved after Chinnaudayar Navaneethakrishnan, N Sripriya,

Arunaggiri Pandian, and Swetha Valli. 2022. Find-

categorising the test data from the DNN model was ings of the shared task on Speech Recognition for

0.67, which was the same as in the case of devel- Vulnerable Individuals in Tamil. In Proceedings of

opment data. Table 3 lists the test dataset results the Second Workshop on Language Technology for

193Equality, Diversity and Inclusion. Association for Shi Chen and Bing Kong. 2021. cs_english@ LT-EDI-

Computational Linguistics. EACL2021: Hope Speech Detection Based On Fine-

tuning ALBERT Model. In Proceedings of the First

Shankar Biradar, Sunil Saumya, and Arun Chauhan. Workshop on Language Technology for Equality, Di-

2021. Hate or non-hate: Translation based hate versity and Inclusion, pages 128–131.

speech identification in code-mixed hinglish data set.

In 2021 IEEE International Conference on Big Data Bhargav Dave, Shripad Bhat, and Prasenjit Majumder.

(Big Data), pages 2470–2475. IEEE. 2021. IRNLP_DAIICT@ LT-EDI-EACL2021: hope

speech detection in code mixed text using TF-IDF

Bharathi Raja Chakravarthi. 2020. HopeEDI: A mul- char n-grams and MuRIL. In Proceedings of the

tilingual hope speech detection dataset for equality, First Workshop on Language Technology for Equality,

diversity, and inclusion. In Proceedings of the Third Diversity and Inclusion, pages 114–117.

Workshop on Computational Modeling of People’s

Opinions, Personality, and Emotion’s in Social Me- Bo Huang and Yang Bai. 2021. TEAM HUB@ LT-

dia, pages 41–53, Barcelona, Spain (Online). Associ- EDI-EACL2021: hope speech detection based on

ation for Computational Linguistics. pre-trained language model. In Proceedings of the

First Workshop on Language Technology for Equality,

Bharathi Raja Chakravarthi and Vigneshwaran Mural- Diversity and Inclusion, pages 122–127.

idaran. 2021. Findings of the shared task on hope

speech detection for equality, diversity, and inclu- Abhinav Kumar, Sunil Saumya, and Jyoti Prakash

sion. In Proceedings of the First Workshop on Lan- Singh. 2020. NITP-AI-NLP@ HASOC-Dravidian-

guage Technology for Equality, Diversity and Inclu- CodeMix-FIRE2020: A Machine Learning Approach

sion, pages 61–72, Kyiv. Association for Computa- to Identify Offensive Languages from Dravidian

tional Linguistics. Code-Mixed Text. In FIRE (Working Notes), pages

384–390.

Bharathi Raja Chakravarthi, Vigneshwaran Muralidaran,

Ruba Priyadharshini, Subalalitha Chinnaudayar Na- Prasanna Kumar Kumaresan, Ratnasingam Sakuntharaj,

vaneethakrishnan, John Phillip McCrae, Miguel Án- Sajeetha Thavareesan, Subalalitha Navaneethakr-

gel García-Cumbreras, Salud María Jiménez-Zafra, ishnan, Anand Kumar Madasamy, Bharathi Raja

Rafael Valencia-García, Prasanna Kumar Kumare- Chakravarthi, and John P McCrae. 2021. Findings

san, Rahul Ponnusamy, Daniel García-Baena, and of shared task on offensive language identification

José Antonio García-Díaz. 2022a. Findings of the in Tamil and Malayalam. In Forum for Information

shared task on Hope Speech Detection for Equality, Retrieval Evaluation, pages 16–18.

Diversity, and Inclusion. In Proceedings of the Sec-

ond Workshop on Language Technology for Equality, Khyati Mahajan, Erfan Al-Hossami, and Samira Shaikh.

Diversity and Inclusion. Association for Computa- 2021. Teamuncc@ lt-edi-eacl2021: Hope speech de-

tional Linguistics. tection using transfer learning with transformers. In

Proceedings of the First Workshop on Language Tech-

Bharathi Raja Chakravarthi, Vigneshwaran Muralidaran, nology for Equality, Diversity and Inclusion, pages

Ruba Priyadharshini, and John Philip McCrae. 2020. 136–142.

Corpus creation for sentiment analysis in code-mixed

Tamil-English text. In Proceedings of the 1st Joint Ruba Priyadharshini, Bharathi Raja Chakravarthi, Sub-

Workshop on Spoken Language Technologies for alalitha Chinnaudayar Navaneethakrishnan, Then-

Under-resourced languages (SLTU) and Collabora- mozhi Durairaj, Malliga Subramanian, Kogila-

tion and Computing for Under-Resourced Languages vani Shanmugavadivel, Siddhanth U Hegde, and

(CCURL), pages 202–210, Marseille, France. Euro- Prasanna Kumar Kumaresan. 2022. Findings of

pean Language Resources association. the shared task on Abusive Comment Detection in

Tamil. In Proceedings of the Second Workshop on

Bharathi Raja Chakravarthi, Ruba Priyadharshini, Then- Speech and Language Technologies for Dravidian

mozhi Durairaj, John Phillip McCrae, Paul Buitaleer, Languages. Association for Computational Linguis-

Prasanna Kumar Kumaresan, and Rahul Ponnusamy. tics.

2022b. Findings of the shared task on Homophobia

Transphobia Detection in Social Media Comments. Ruba Priyadharshini, Bharathi Raja Chakravarthi,

In Proceedings of the Second Workshop on Language Sajeetha Thavareesan, Dhivya Chinnappa, Durairaj

Technology for Equality, Diversity and Inclusion. As- Thenmozhi, and Rahul Ponnusamy. 2021. Overview

sociation for Computational Linguistics. of the DravidianCodeMix 2021 shared task on senti-

ment detection in Tamil, Malayalam, and Kannada.

Bharathi Raja Chakravarthi, Ruba Priyadharshini, In Forum for Information Retrieval Evaluation, pages

Rahul Ponnusamy, Prasanna Kumar Kumaresan, 4–6.

Kayalvizhi Sampath, Durairaj Thenmozhi, Sathi-

yaraj Thangasamy, Rajendran Nallathambi, and Karthik Puranik, Adeep Hande, Ruba Priyad-

John Phillip McCrae. 2021. Dataset for identi- harshini, Sajeetha Thavareesan, and Bharathi Raja

fication of homophobia and transophobia in mul- Chakravarthi. 2021. IIITT@ LT-EDI-EACL2021-

tilingual YouTube comments. arXiv preprint hope speech detection: there is always hope in trans-

arXiv:2109.00227. formers. arXiv preprint arXiv:2104.09066.

194Manikandan Ravikiran, Bharathi Raja Chakravarthi,

Anand Kumar Madasamy, Sangeetha Sivanesan, Rat-

navel Rajalakshmi, Sajeetha Thavareesan, Rahul Pon-

nusamy, and Shankar Mahadevan. 2022. Findings

of the shared task on Offensive Span Identification

in code-mixed Tamil-English comments. In Pro-

ceedings of the Second Workshop on Speech and

Language Technologies for Dravidian Languages.

Association for Computational Linguistics.

Anbukkarasi Sampath, Thenmozhi Durairaj,

Bharathi Raja Chakravarthi, Ruba Priyadharshini,

Subalalitha Chinnaudayar Navaneethakrishnan,

Kogilavani Shanmugavadivel, Sajeetha Thavareesan,

Sathiyaraj Thangasamy, Parameswari Krishna-

murthy, Adeep Hande, Sean Benhur, Kishor Kumar

Ponnusamy, and Santhiya Pandiyan. 2022. Findings

of the shared task on Emotion Analysis in Tamil. In

Proceedings of the Second Workshop on Speech and

Language Technologies for Dravidian Languages.

Association for Computational Linguistics.

Sunil Saumya, Abhinav Kumar, and Jyoti Prakash Singh.

2021. Offensive language identification in dravidian

code mixed social media text. In Proceedings of the

First Workshop on Speech and Language Technolo-

gies for Dravidian Languages, pages 36–45.

Sunil Saumya and Ankit Kumar Mishra. 2021.

IIIT_DWD@ LT-EDI-EACL2021: hope speech de-

tection in YouTube multilingual comments. In Pro-

ceedings of the First Workshop on Language Tech-

nology for Equality, Diversity and Inclusion, pages

107–113.

Yingjia Zhao and Xin Tao. 2021. ZYJ@ LT-EDI-

EACL2021: XLM-RoBERTa-based model with at-

tention for hope speech detection. In Proceedings

of the First Workshop on Language Technology for

Equality, Diversity and Inclusion, pages 118–121.

Stefan Ziehe, Franziska Pannach, and Aravind Krish-

nan. 2021. GCDH@ LT-EDI-EACL2021: XLM-

RoBERTa for hope speech detection in English,

Malayalam, and Tamil. In Proceedings of the First

Workshop on Language Technology for Equality, Di-

versity and Inclusion, pages 132–135.

195You can also read