Smoothing Dialogue States for Open Conversational Machine Reading

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Smoothing Dialogue States for Open Conversational Machine Reading

∗

Zhuosheng Zhang1,2,3,# , Siru Ouyang1,2,3,# , Hai Zhao1,2,3, , Masao Utiyama4 , Eiichiro Sumita4

1

Department of Computer Science and Engineering, Shanghai Jiao Tong University

2

Key Laboratory of Shanghai Education Commission for Intelligent Interaction

and Cognitive Engineering, Shanghai Jiao Tong University

3

MoE Key Lab of Artificial Intelligence, AI Institute, Shanghai Jiao Tong University

4

National Institute of Information and Communications Technology (NICT), Kyoto, Japan

{zhangzs,oysr0926}@sjtu.edu.cn,zhaohai@cs.sjtu.edu.cn

{mutiyama,eiichiro.sumita}@nict.go.jp

Abstract with people according to the dialogue states, and

completes specific tasks, such as ordering meals

Conversational machine reading (CMR) re-

(Liu et al., 2013) and air tickets (Price, 1990). In

quires machines to communicate with humans

through multi-turn interactions between two

real-world scenario, annotating data such as in-

arXiv:2108.12599v2 [cs.CL] 2 Sep 2021

salient dialogue states of decision making and tents and slots is expensive. Inspired by the studies

question generation processes. In open CMR of reading comprehension (Rajpurkar et al., 2016,

settings, as the more realistic scenario, the re- 2018; Zhang et al., 2020c, 2021), there appears a

trieved background knowledge would be noisy, more general task — conversational machine read-

which results in severe challenges in the in- ing (CMR) (Saeidi et al., 2018): given the inquiry,

formation transmission. Existing studies com- the machine is required to retrieve relevant sup-

monly train independent or pipeline systems

porting rule documents, the machine should judge

for the two subtasks. However, those methods

are trivial by using hard-label decisions to acti- whether the goal is satisfied according to the dia-

vate question generation, which eventually hin- logue context, and make decisions or ask clarifica-

ders the model performance. In this work, we tion questions.

propose an effective gating strategy by smooth- A variety of methods have been proposed for

ing the two dialogue states in only one decoder the CMR task, including 1) sequential models that

and bridge decision making and question gen-

encode all the elements and model the matching re-

eration to provide a richer dialogue state refer-

ence. Experiments on the OR-ShARC dataset

lationships with attention mechanisms (Zhong and

show the effectiveness of our method, which Zettlemoyer, 2019; Lawrence et al., 2019; Verma

achieves new state-of-the-art results. et al., 2020; Gao et al., 2020a,b); 2) graph-based

methods that capture the discourse structures of the

1 Introduction rule texts and user scenario for better interactions

(Ouyang et al., 2021). However, there are two sides

The ultimate goal of multi-turn dialogue is to en-

of challenges that have been neglected:

able the machine to interact with human beings and

solve practical problems (Zhu et al., 2018; Zhang 1) Open-retrieval of supporting evidence. The

et al., 2018; Zaib et al., 2020; Huang et al., 2020; above existing methods assume that the relevant

Fan et al., 2020; Gu et al., 2021). It usually adopts rule documents are given before the system inter-

the form of question answering (QA) according to acts with users, which is in a closed-book style.

the user’s query along with the dialogue context In real-world applications, the machines are of-

(Sun et al., 2019; Reddy et al., 2019; Choi et al., ten required to retrieve supporting information to

2018). The machine may also actively ask ques- respond to incoming high-level queries in an inter-

tions for confirmation (Wu et al., 2018; Cai et al., active manner, which results in an open-retrieval

2019; Zhang et al., 2020b; Gu et al., 2020). setting (Gao et al., 2021). The comparison of the

In the classic spoken language understanding closed-book setting and open-retrieval setting is

tasks (Tur and De Mori, 2011; Zhang et al., 2020a; shown in Figure 1.

Ren et al., 2018; Qin et al., 2021), specific slots 2) The gap between decision making and ques-

and intentions are usually defined. According to tion generation. Existing CMR studies generally

these predefined patterns, the machine interacts regard CMR as two separate tasks and design in-

∗

dependent systems. Only the result of decision

Corresponding author. # Equal contribution. This paper

was partially supported by Key Projects of National Natural making will be fed back to the question generation

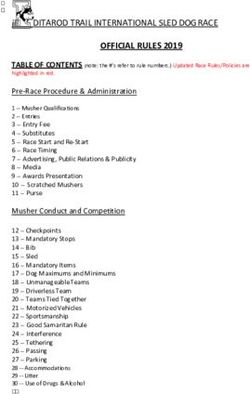

Science Foundation of China (U1836222 and 61733011). module. As a result, the question generation mod-Closed-Book User Scenario: I got my loan last year. It was for

Rule Text: Eligible applicants may obtain direct loans 450,000.

for up to a maximum indebtedness of $300,000, and + Initial Question: Does this loan meet my needs?

guaranteed loans for up to a maximum indebtedness Dialogue History:

of $1,392,000 (amount adjusted annually for inflation). Follow-up Q1: Do you need a direct loan?

Follow-up A1: Yes.

Open-Retrieval Follow-up Q2: Is your loan for less than 300,000?

Rule Text-1: Eligible applicants may obtain direct ... + Follow-up A2: Yes.

Rule Text-2: Guaranteed loans for up to a maximum ... Follow-up Q3: Is your loan less than 1,392,000?

Rule Text-3: $1,392,000 ... Follow-up A3: Yes.

(a) Closed-book setting v.s. open-retrieval setting

Rule Text User Scenario Initial Question History Rule Text User Scenario Initial Question History

Pre-trained Language Model Pre-trained Language Model

Decision Making Question Generation Decision Making Question Generation

Yes No Irre. Inq. Follow-up Question Yes No Irre. Inq. Follow-up Question

(b) The framework of existing methods (c) The framework of our model

Figure 1: The overall framework for our proposed model (c) compared with the existing ones (b). Previous studies

generally regard CMR as two separate tasks and design independent systems. Technically, only the result of

decision making will be fed to the question generation module, thus there is a gap between the dialogue states

of decision making and question generation. To reduce the information gap, our model bridges the information

transition between the two salient dialogue states and benefits from a richer rule reference through open-retrieval

(a).

ule knows nothing about the actual conversation strategies are empirically studied for smoothing the

states, which leads to poorly generated questions. two dialogue states in only one decoder.

There are even cases when the decision masking 3) Experiments on the ShARC dataset show the

result is improved, but the question generation is effectiveness of our model, which achieves the new

decreased as reported in previous studies (Ouyang state-of-the-art results. A series of analyses show

et al., 2021). the contributing factors.

In this work, we design an end-to-end system by

Open-retrieval of Supporting evidence and bridg- 2 Related Work

ing deCision mAking and question geneRation

(O SCAR),1 to bridge the information transition be- Most of the current conversation-based reading

tween the two salient dialogue states of decision comprehension tasks are formed as either span-

making and question generation, at the same time based QA (Reddy et al., 2019; Choi et al., 2018)

benefiting from a richer rule reference through open or multi-choice tasks (Sun et al., 2019; Cui et al.,

retrieval. In summary, our contributions are three 2020), both of which neglect the vital process of

folds: question generation for confirmation during the

human-machine interaction. In this work, we are

1) For the task, we investigate the open-retrieval

interested in building a machine that can not only

setting for CMR. We bridge decision making and

make the right decisions but also raise questions

question generation for the challenging CMR task,

when necessary. The related task is called con-

which is the first practice to our best knowledge.

versational machine reading (Saeidi et al., 2018)

2) For the technique, we design an end-to-end

which consists of two separate subtasks: decision

framework where the dialogue states for decision

making and question generation. Compared with

making are employed for question generation, in

conversation-based reading comprehension tasks,

contrast to the independent models or pipeline sys-

our concerned CMR task is more challenging as it

tems in previous studies. Besides, a variety of

involves rule documents, scenarios, asking clarifi-

1

Our source codes are available at https://github. cation questions, and making a final decision.

com/ozyyshr/OSCAR. Existing works (Zhong and Zettlemoyer, 2019;Ques: Am I entitled to the National Minimum Wage?

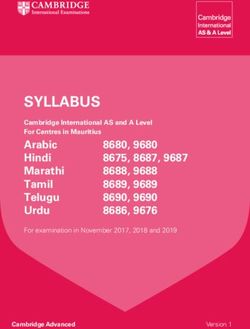

r1 r2 r3 us uq h1 Double-channel Decoder

Scen: I am not following a European Union programme.

Decision Making

GCN layer e relations

4

Rule Retrieval e1 R1

Rule Documents Question Generation

Document 1

e2 follow-up ques.

The following are not entitled to the National Minimum Wage: R2

higher students on a work placement up to 1 year

workers on government pre-apprenticeships schemes e3

people on the European Union programmes *relations obtained by tagging model BART-base

people working on a Jobcentre Plus Work trial for 6 weeks

user scenario

Rule Span

...... Document k e1 e2 e3 e4

e1 ... e2 ... e3 ... e4 ... e5 ... e6 ...

Comment Contrast

......

Comment Rule Conditions BART-base

Contrast Tagging

EDU0 EDU1 EDU... Scen. Ques. QA...

Figure 2: The overall structure of our model O SCAR. The left part introduces the retrieval and tagging process for

rule documents, which is then fed into the encoder together with other necessary information.

Lawrence et al., 2019; Verma et al., 2020; Gao et al., and a generator G(·, ·) on {R, Us , Uq , C} for ques-

2020a,b; Ouyang et al., 2021) have made progress tion generation.

in modeling the matching relationships between

the rule document and other elements such as user

scenarios and questions. These studies are based on 4 Model

the hypothesis that the supporting information for

answering the question is provided, which does not Our model is composed of three main modules:

meet the real-world applications. Therefore, we are retriever, encoder, and decoder. The retriever is em-

motivated to investigate the open-retrieval settings ployed to retrieve the related rule texts for the given

(Qu et al., 2020), where the retrieved background user scenario and question. The encoder takes the

knowledge would be noisy. Gao et al. (2021) makes tuple {R, Us , Uq , C} as the input, encodes the ele-

the initial attempts of open-retrieval for CMR. How- ments into vectors and captures the contextualized

ever, like previous studies, the common solution representations. The decoder makes a decision or

is training independent or pipeline systems for the generates a question once the decision is “inquiry”.

two subtasks and does not consider the information Figure 1 overviews the model architecture, we will

flow between decision making and question gen- elaborate the details in the following part.

eration, which would eventually hinder the model

performance. Compared to existing methods, our

4.1 Retrieval

method makes the first attempt to bridge the gap

between decision making and question generation, To obtain the supporting rules, we construct the

by smoothing the two dialogue states in only one query by concatenating the user question and user

decoder. In addition, we improve the retrieval pro- scenario. The retriever calculates the semantic

cess by taking advantage of the traditional TF-IDF matching score between the query and the candi-

method and the latest dense passage retrieval model date rule texts from the pre-defined corpus and re-

(Karpukhin et al., 2020). turns the top-k candidates. In this work, we employ

TF-IDF and DPR (Karpukhin et al., 2020) in our

3 Open-retrieval Setting for CMR

retrieval, which are representatives for sparse and

In the CMR task, each example is formed as a tu- dense retrieval methods. TF-IDF stands for term

ple {R, Us , Uq , C}, where R denotes the rule texts, frequency-inverse document frequency, which is

Us and Uq are user scenarios and user questions, used to reflect how relevant a term is in a given

respectively, and C represents the dialogue history. document. DPR is a dense passage retrieval model

For open-retrieval CMR, R is a subset retrieved that calculates the semantic matching using dense

from a large candidate corpus D. The goal is to vectors, and it uses embedding functions that can

train a discriminator F(·, ·) for decision making, be trained for specific tasks.4.2 Graph Encoder we fetch the contextualized representation of the

One of the major challenges of CMR is interpret- special tokens [RULE] and [CLS] before the cor-

ing rule texts, which have complex logical struc- responding sequences, respectively. For relation

tures between various inner rule conditions. Ac- vertices, they are initialized as the conventional em-

cording to Rhetorical Structure Theory (RST) of bedding layer, whose representations are obtained

discourse parsing (Mann and Thompson, 1988), through a lookup table.

we utilize a pre-trained discourse parser (Shi and For each rule document that is composed of mul-

Huang, 2019)2 to break the rule text into clause- tiple rule conditions, i.e., EDUs, let hp denote the

like units called elementary discourse units (EDUs) initial representation of every node vp , the graph-

to extract the in-line rule conditions from the rule based information flow process can be written as:

texts.

X X 1

Embedding We employ pre-trained language h(l+1)

p = ReLU( wr(l) h(l)

q ),

cp,r

model (PrLM) model as the backbone of the en- r∈RL vq ∈Nr (vp )

coder. As shown in the figure, the input of our (1)

model includes rule document which has already be where Nr (vp ) denotes the neighbors of node vp

parsed into EDUs with explicit discourse relation under relation r and cp,r is the number of those

(l)

tagging, user initial question, user scenario and the nodes. wr is the trainable parameters of layer l.

dialog history. Instead of inserting a [CLS] token We have the last-layer output of discourse graph:

before each rule condition to get a sentence-level

representation, we use [RULE] which is proved to

enhance performance (Lee et al., 2020). Formally, gp(l) = Sigmoid(h(l)

p Wr,g ),

the sequence is organized as: {[RULE] EDU0 X X 1 (l) (l)

rp(l+1) = ReLU( gq(l) w h ),

[RULE] EDU1 [RULE] EDUk [CLS] Question cp,r r q

r∈RL vq ∈Nr (vp )

[CLS] Scenario [CLS] History [SEP]}. Then

(2)

we feed the sequence to the PrLM to obtain the

contextualized representation. (l)

where Wr,g is a learnable parameter under relation

Interaction To explicitly model the discourse type r of the l-th layer. The last-layer hidden states

(l+1)

structure among the rule conditions, we first an- for all the vertices rp are used as the graph

notate the discourse relationships between the rule representation for the rule document. For all the k

conditions and employ a relational graph convolu- rule documents from the retriever, we concatenate

(l+1)

tional network following Ouyang et al. (2021) by rp for each rule document, and finally have

regarding the rule conditions as the vertices. The r = {r1 , r2 , . . . , rm } where m is the total number

graph is formed as a Levi graph (Levi, 1942) that re- of the vertices among those rule documents.

gards the relation edges as additional vertices. For

each two vertices, there are six types of possible 4.3 Double-channel Decoder

edges derived from the discourse parsing, namely, Before decoding, we first accumulate all the avail-

default-in, default-out, reverse-in, reverse-out, self, able information through a self-attention layer

and global. Furthermore, to build the relationship (Vaswani et al., 2017a) by allowing all the rule con-

with the background user scenario, we add an extra ditions and other elements to attend to each other.

global vertex of the user scenario that connects all Let [r1 , r2 , . . . , rm ; uq ; us ; h1 , h2 , . . . , hn ] denote

the other vertices. As a result, there are three types all the representations, ri is the representation of

of vertices, including the rule conditions, discourse the discourse graph, uq , us and hi stand for the

relations, and the global scenario vertex. representation of user question, user scenario and

For rule condition and user scenario vertices, dialog history respectively. n is the number of his-

2 tory QAs. After encoding, the output is represented

This discourse parser gives a state-of-the-art performance

on STAC so far. There are 16 discourse relations according to as:

STAC (Asher et al., 2016), including comment, clarification-

question, elaboration, acknowledgment, continuation, expla- Hc = [r̃1 , r̃2 , . . . , r̃m ; ũq , ũs ; h̃1 , h̃2 , . . . , h̃n ],

nation, conditional, question-answer, alternation, question-

elaboration, result, background, narration, correction, parallel, (3)

and contrast. which is then used for the decoder.Decision Making Similar to existing works span and the follow-up questions. Intuitively, the

(Zhong and Zettlemoyer, 2019; Gao et al., 2020a,b), shortest rule span with the minimum edit distance

we apply an entailment-driven approach for deci- is selected to be the under-specified span.

sion making. A linear transformation tracks the Existing studies deal with decision making and

fulfillment state of each rule condition among en- question generation independently (Zhong and

tailment, contradiction and Unmentioned. As a Zettlemoyer, 2019; Lawrence et al., 2019; Verma

result, our model makes the decision by et al., 2020; Gao et al., 2020a,b), and use hard-label

decisions to activate question generation. These

fi = Wf r˜i + bf ∈ R3 , (4) methods inevitably suffer from error propagation

if the model makes the wrong decisions. For ex-

where fi is the score predicted for the three labels

ample, if the made decision is not “inquiry", the

of the i-th condition. This prediction is trained via a

question generation module will not be activated

cross entropy loss for multi-classification problems:

which may be supposed to ask questions in the

N cases. For the open-retrieval CMR that involves

1 X

Lentail = − log softmax(fi )r , (5) multiple rule texts, it even brings more diverse rule

N

i=1 conditions as a reference, which would benefit for

where r is the ground-truth state of fulfillment. generating meaningful questions.

After obtaining the state of every rule, we are Therefore, we concatenate the rule document

able to give a final decision towards whether it is and the predicted span to form an input sequence:

Yes, No, Inquire or Irrelevant by attention. x = [CLS] Span [SEP] Rule Documents [SEP].

We feed x to BART encoder (Dong et al., 2019)

αi = wαT [fi ; r˜i ] + bα ∈ R1 , and obtain the encoded representation He . To take

α̃i = softmax(α)i ∈ [0, 1], advantage of the contextual states of the overall

(6)

X interaction of the dialogue states, we explore two

z = Wz α̃i [fi ; r˜i ] + bz ∈ R4 ,

alternative smoothing strategies:

i

where αi is the attention weight for the i-th decision 1. Direct Concatenation concatenates Hc and

and z has the score for all the four possible states. He to have H = [Hc ; He ].

The corresponding training loss is 2. Gated Attention applies multi-head attention

mechanism (Vaswani et al., 2017b) to ap-

Ldecision = − log softmax(z)l . (7)

pend the contextual states to He to get Ĥ =

The overall loss for decision making is: Attn(He , K, V ) where {K,V} are packed

from Hc . Then a gate control λ is computed

Ld = Ldecision + λLentail . (8) as sigmoid(Wλ Ĥ + Uλ H e ) to get the final

representation H = H e + λĤ.

Question Generation If the decision is made to

be Inquire, the machine needs to ask a follow-up H is then passed to the BART decoder to gener-

question to further clarify. Question generation in ate the follow-up question. At the i-th time-step,

this part is mainly based on the uncovered informa- H is used to generate the target token yi by

tion in the rule document, and then that informa-

tion will be rephrased into a question. We predict P (yi | yDev Set Test Set

Model Decision Making Question Gen. Decision Making Question Gen.

Micro Macro F1BLEU1 F1BLEU4 Micro Macro F1BLEU1 F1BLEU4

w/ TF-IDF

E3 61.8±0.9 62.3±1.0 29.0±1.2 18.1±1.0 61.4±2.2 61.7±1.9 31.7±0.8 22.2±1.1

EMT 65.6±1.6 66.5±1.5 36.8±1.1 32.9±1.1 64.3±0.5 64.8±0.4 38.5±0.5 30.6±0.4

DISCERN 66.0±1.6 66.7±1.8 36.3±1.9 28.4±2.1 66.7±1.1 67.1±1.2 36.7±1.4 28.6±1.2

DP-RoBERTa 73.0±1.7 73.1±1.6 45.9±1.1 40.0±0.9 70.4±1.5 70.1±1.4 40.1±1.6 34.3±1.5

MUDERN 78.4±0.5 78.8±0.6 49.9±0.8 42.7±0.8 75.2±1.0 75.3±0.9 47.1±1.7 40.4±1.8

w/ DPR++

MUDERN 79.7±1.2 80.1±1.0 50.2±0.7 42.6±0.5 75.6±0.4 75.8±0.3 48.6±1.3 40.7±1.1

O SCAR 80.5±0.5 80.9±0.6 51.3±0.8 43.1±0.8 76.5±0.5 76.4±0.4 49.1±1.1 41.9±1.8

Table 1: Results on the validation and test set of OR-ShARC. The first block presents the results of public models

from Gao et al. (2021), and the second block reports the results of our implementation of the SOTA model MUD-

ERN, and ours based on DPR++. The average results with a standard deviation on 5 random seeds are reported.

Model

Seen Unseen 5.2 Evaluation Metrics

F1BLEU1 F1BLEU4 F1BLEU1 F1BLEU4

For the decision-making subtask, ShARC evaluates

MUDERN 62.6 57.8 33.1 24.3

O SCAR 64.6 59.6 34.9 25.1 the Micro- and Macro- Acc. for the results of clas-

sification. For question generation, the main metric

Table 2: The comparison of question generation on the is F1BLEU proposed in Gao et al. (2021), which

seen and unseen splits. calculates the BLEU scores for question generation

when the predicted decision is “inquire".

5 Experiments 5.3 Implementation Details

5.1 Datasets Following the current state-of-the-art MUDERN

model (Gao et al., 2021) for open CMR, we em-

For the evaluation of open-retrieval setting, we ploy BART (Dong et al., 2019) as our backbone

adopt the OR-ShARC dataset (Gao et al., 2021), model and the BART model serves as our base-

which is a revision of the current CMR benchmark line in the following sections. For open retrieval

— ShARC (Saeidi et al., 2018). The original dataset with DPR, we fine-tune DPR in our task follow-

contains up to 948 dialog trees clawed from govern- ing the same training process as the official imple-

ment websites. Those dialog trees are then flattened mentation, with the same data format stated in the

into 32,436 examples consisting of utterance_id, DPR GitHub repository.3 Since the data process re-

tree_id, rule document, initial question, user sce- quires hard negatives (hard_negative_ctxs),

nario, dialog history, evidence and the decision. we constructed them using the most relevant rule

The update of OR-ShARC is the removal of the documents (but not the gold) selected by TF-IDF

gold rule text for each sample. Instead, all rule and left the negative_ctxs to be empty as it

texts used in the ShARC dataset are served as the can be. For discourse parsing, we keep all the de-

supporting knowledge sources for retrieval. There fault parameters of the original discourse relation

are 651 rules in total. Since the test set of ShARC parser4 , with F1 score achieving 55. The dimen-

is not public, the train, dev and test are further man- sion of hidden states is 768 for both the encoder and

ually split, whose sizes are 17,936, 1,105, 2,373, decoder. The training process uses Adam (Kingma

respectively. For the dev and test sets, around 50% and Ba, 2015) for 5 epochs with a learning rate

of the samples ask questions on rule texts used in set to 5e-5. We also use gradient clipping with a

training (seen) while the remaining of them contain maximum gradient norm of 2, and a total batch

questions on unseen (new) rule texts. The rationale size of 16. The parameter λ in the decision making

behind seen and unseen splits for the validation objective is set to 3.0. For BART-based decoder

and test set is that the two cases mimic the real for question generation, the beam size is set to 10

usage scenario: users may ask questions about rule

3

text which 1) exists in the training data (i.e., dialog https://github.com/facebookresearch/

DPR

history, scenario) as well as 2) completely newly 4

https://github.com/shizhouxing/

added rule text. DialogueDiscourseParsingDev Set Test Set

Model

Top1 Top5 Top10 Top20 Top1 Top5 Top10 Top20

TF-IDF 53.8 83.4 94.0 96.6 66.9 90.3 94.0 96.6

DPR 48.1 74.6 84.9 90.5 52.4 80.3 88.9 92.6

TF-IDF + DPR 66.3 90.0 92.4 94.5 79.8 95.4 97.1 97.5

Table 3: Comparison of the open-retrieval methods.

TF-IDF Top1 Top5 Top10 Top20 DPR Top1 Top5 Top10 Top20

Train 59.9 83.8 94.4 94.2 Train 77.2 96.5 99.0 99.8

Dev 53.8 83.4 94.0 96.6 Dev 48.1 74.6 84.9 90.5

Seen Only 62.0 84.2 90.2 93.2 Seen Only 77.4 96.8 98.6 99.6

Unseen Only 46.9 82.8 90.7 83.1 Unseen Only 23.8 56.2 73.6 83.0

Test 66.9 90.3 94.0 96.6 Test 52.4 80.3 88.9 92.6

Seen Only 62.1 83.4 89.4 93.8 Seen Only 76.2 96.1 98.6 99.8

Unseen Only 70.4 95.3 97.3 98.7 Unseen Only 35.0 68.8 81.9 87.3

Table 4: Retrieval Results of TF-IDF. Table 5: Retrieval Results of DPR.

for inference. We report the averaged result of five ing Karpukhin et al. (2020), using a linear combi-

randomly run seeds with deviations. nation of their scores as the new ranking function.

The overall results are present in Table 3. We see

5.4 Results that TF-IDF performs better than DPR, and com-

Table 1 shows the results of O SCAR and all the bining TF-IDF and DPR (DPR++) yields substan-

baseline models for the End-to-End task on the tial improvements. To investigate the reasons, we

dev and test set with respect to the evaluation met- collect the detailed results of the seen and unseen

rics mentioned above. Evaluating results indicate subsets for the dev and test sets, from which we

that O SCAR outperforms the baselines in all of the observe that TF-IDF generally works well on both

metrics. In particular, it outperforms the public the seen and unseen sets, while DPR is degraded

state-of-the-art model MUDERN by 1.3% in Mi- on the unseen set. The most plausible reason would

cro Acc. and 1.1% in Macro Acc for the decision be that DPR is trained on the training set, it can

making stage on the test set. The question genera- only give better results on the seen subsets because

tion quality is greatly boosted via our approaches. seen subsets share the same rule texts for retrieval

Specifically, F1BLEU1 and F1BLEU4 are increased with the training set. However, DPR may easily

by 2.0% and 1.5% on the test set respectively. suffer from over-fitting issues that result in the rela-

Since the dev set and test set have a 50% split tively weak scores on the unseen sets. Based on the

of user questions between seen and unseen rule complementary merits, combining the two methods

documents as described in Section 5.1, to analyze would take advantage of both sides, which achieves

the performance of the proposed framework over the best results finally.

seen and unseen rules, we have added a comparison

6.2 Decision Making

of question generation on the seen and unseen splits

as shown in Table 2. The results show consistent By means of TF-IDF + DPR retrieval, we com-

gains for both of the seen and unseen splits. pare our model with the previous SOTA model

MUDERN (Gao et al., 2021) for comparison on

6 Analysis the open-retrieval setting. According to the results

in Table 1, we observe that our method can achieve

6.1 Comparison of Open-Retrieval Methods a better performance than DISCERN, which indi-

We compare two typical retrievals methods, TF- cates that the graph-like discourse modeling works

IDF and Dense Passage Retrieval (DPR), which are well in the open-retrieval setting in general.

widely-used traditional models from sparse vector

space and recent dense-vector-based ones for open- 6.3 Question Generation

domain retrieval, respectively. We also present the Overall Results We first compare the vanilla

results of TF-IDF+DPR (denoted DPR++) follow- question generation with our method with encoderDPR++ Top1 Top5 Top10 Top20 Dev Set Test Set

Model

F1BLEU1 F1BLEU4 F1BLEU1 F1BLEU4

Train 84.2 99.0 99.9 100

Dev 66.3 90.0 92.4 94.5 Concatenation 51.3±0.8 43.1±0.8 49.1±1.1 41.9±1.8

Seen Only 84.6 98.0 99.8 100 Gated Attention 51.6±0.6 44.1±0.5 49.5±1.2 42.1±1.4

Unseen Only 51.2 83.3 86.3 100

Test 79.8 95.4 97.1 97.5 Table 8: Question generation results using different

Seen Only 83.7 98.5 99.9 100

Unseen Only 76.9 93.1 95 95.6 smoothing strategies on the OR-ShARC dataset.

Table 6: Retrieval Results of DPR++.

ShARC OR-ShARC

Model

BLEU1 BLEU4 F1BLEU1 F1BLEU4

Dev Set Test Set

Model BASELINE 62.4±1.6 47.4±1.6 50.2±0.7 42.6±0.5

F1BLEU1 F1BLEU4 F1BLEU1 F1BLEU4

O SCAR 63.3±1.2 48.1±1.4 51.6±0.6 44.4±0.4

O SCAR 51.3±0.8 43.1±0.8 49.1±1.1 41.9±1.8

w/o GS 50.9±0.9 43.0±0.7 48.7±1.3 41.6±1.5 Table 9: Performance comparison on the dev sets of the

w/o SS 50.6±0.6 42.8±0.5 48.1±1.4 41.4±1.4

w/o both 49.9±0.8 42.7±0.8 47.1±1.7 40.4±1.8

closed-book and open-retrieval tasks.

Table 7: Question generation results on the OR-ShARC

dataset. SS and GS denote the sequential states and 89.61 → 85.81 after aggregating the DM states on

graph states, respectively. the constructed dataset. Compared with the experi-

ments on the original dataset, the performance gap

states. Table 7 shows the results, which verify that shows that using embeddings from the decision

both the sequential states and graph states from making stage would well fill the information loss

the encoding process contribute to the overall per- caused by the span prediction stage, and would be

formance as removing any one of them causes a beneficial to deal with the errors propagation.

performance drop on both F1BLEU 1 and F1BLEU 4 .

Especially, when removing GS/SS, those two ma- Closed-book Evaluation Besides the open-

trices drops by a great margin, which shows the retrieval task, our end-to-end unified modeling

contributions. The results indicate that bridging the method is also applicable to the traditional CMR

gap between decision making and question genera- task. We conduct comparisons on the original

tion is necessary.5 ShARC question generation task with provided rule

documents to evaluate the performance. Results in

Smoothing Strategies We explore the perfor-

Table 9 show the obvious advantage on the open-

mance of different strategies when fusing the con-

retrieval task, indicating the strong ability to extract

textual states into BART decoder, and the results

key information from noisy documents.

are shown in Table 8, from which we see that the

gating mechanism yields the best performance. The

most plausible reason would be the advantage of 6.4 Case Study

using the gates to filter the critical information.

To explore the generation quality intuitively, we

Upper-bound Evaluation To further investigate randomly collect and summarize error cases of the

how the encoder states help generation, we con- baseline and our models for comparison. Results of

struct a “gold" dataset as the upper bound evalua- a few typical examples are presented in Figure. 3.

tion, in which we replace the reference span with We evaluate the examples in term of three aspects,

the ground-truth span by selecting the span of the namely, factualness, succinctness and informative-

rule text which has the minimum edit distance with ness. The difference of generation by O SCAR and

the to-be-asked follow-up question, in contrast to the baseline are highlighted in green, while the blue

the original span that is predicted by our model. words are the indication of the correct generations.

We find an interesting observation that the BLEU-1 One can easily observe that our generation out-

and BLEU-4 scores drop from 90.64 → 89.23, and performs the baseline model regarding factualness,

5

Our method is also applicable to other generation archi- succinctness, and informativeness. This might be

tectures such as T5 (Raffel et al., 2020). For the reference of because that the incorporation of features from the

interested readers, we tried to employ T5 as our backbone,

achieving better performance: 53.7/45.0 for dev and 52.5/43.7 decision making stage can well fill in the gap of

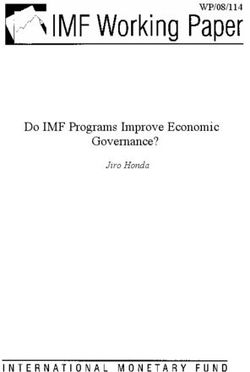

for test (F1BLEU1/F1BLEU4). information provided for question generation.Types Gold Snippet & Scenario Predicted Span Our Gen. & Ori. Gen.

Snippet:

You can still get Statutory Maternity Leave and SMP Ours:

Factualness if your baby: Was your baby born early?

(associates with the (1) is born early; is born early

correct facts) Original:

(2) is stillborn after the start of your 24th week of pregnancy

Was you born early?

(3) dies after being born

Scenario: (empty)

Snippet: Ours:

..., In general, loan funds may be used for normal Will it be used for machinery and

Succinctness expenses,

operating expenses, machinery and equipment, minor real equipment?

machinery,

(does not contain estate repairs or improvements, and refinancing debt. Original:

equipment

redundant information) Will it be used for expenses, ma-

Scenario: I have no intentions of using the loan for

operating expenses... chinery and equipment?

Snippet:

The eligible items include:

Ours:

Informativeness Is it for disabled people?

(1) medical, veterinary and scientific equipment goods for

(covers the most

(2) ambulances (3) goods for disabled people disabled people Original:

important content)

(4) motor vehicles for medical use. Is the item goods for disabled

Scenario: (empty) people?

Figure 3: Question generation examples of O SCAR and the original model.“Our Gen.” stands for the question

generated by O SCAR; “Ori. Gen.” stands for the question generated by the baseline model.

7 Conclusion response: Dialogue generation guided by retrieval

memory. In Proceedings of the 2019 Conference

In this paper, we study conversational machine of the North American Chapter of the Association

reading based on open-retrieval of supporting rule for Computational Linguistics: Human Language

documents, and present a novel end-to-end frame- Technologies, Volume 1 (Long and Short Papers),

pages 1219–1228, Minneapolis, Minnesota. Associ-

work O SCAR to enhance the question generation ation for Computational Linguistics.

by referring to the rich contextualized dialogue

states that involve the interactions between rule Eunsol Choi, He He, Mohit Iyyer, Mark Yatskar, Wen-

conditions, user scenario, initial question and dia- tau Yih, Yejin Choi, Percy Liang, and Luke Zettle-

moyer. 2018. QuAC: Question answering in con-

logue history. Our O SCAR consists of three main

text. In Proceedings of the 2018 Conference on

modules including retriever, encoder, and decoder Empirical Methods in Natural Language Processing,

as a unified model. Experiments on OR-ShARC pages 2174–2184, Brussels, Belgium. Association

show the effectiveness by achieving a new state-of- for Computational Linguistics.

the-art result. Case studies show that O SCAR can

Leyang Cui, Yu Wu, Shujie Liu, Yue Zhang, and Ming

generate high-quality questions compared with the Zhou. 2020. MuTual: A dataset for multi-turn dia-

previous widely-used pipeline systems. logue reasoning. In Proceedings of the 58th Annual

Meeting of the Association for Computational Lin-

Acknowledgments guistics, pages 1406–1416, Online. Association for

Computational Linguistics.

We thank Yifan Gao for providing the sources of

MUDERN (Gao et al., 2021) and valuable sugges- Jacob Devlin, Ming-Wei Chang, Kenton Lee, and

tions to help improve this work. Kristina Toutanova. 2019. BERT: Pre-training of

deep bidirectional transformers for language under-

standing. In Proceedings of the 2019 Conference

of the North American Chapter of the Association

References for Computational Linguistics: Human Language

Nicholas Asher, Julie Hunter, Mathieu Morey, Bena- Technologies, Volume 1 (Long and Short Papers),

mara Farah, and Stergos Afantenos. 2016. Dis- pages 4171–4186, Minneapolis, Minnesota. Associ-

course structure and dialogue acts in multiparty di- ation for Computational Linguistics.

alogue: the STAC corpus. In Proceedings of the

Tenth International Conference on Language Re- Li Dong, Nan Yang, Wenhui Wang, Furu Wei, Xi-

sources and Evaluation (LREC’16), pages 2721– aodong Liu, Yu Wang, Jianfeng Gao, Ming Zhou,

2727, Portorož, Slovenia. European Language Re- and Hsiao-Wuen Hon. 2019. Unified language

sources Association (ELRA). model pre-training for natural language understand-

ing and generation. In Advances in Neural Infor-

Deng Cai, Yan Wang, Wei Bi, Zhaopeng Tu, Xiaojiang mation Processing Systems 32: Annual Conference

Liu, Wai Lam, and Shuming Shi. 2019. Skeleton-to- on Neural Information Processing Systems 2019,NeurIPS 2019, December 8-14, 2019, Vancouver, Carolin Lawrence, Bhushan Kotnis, and Mathias

BC, Canada, pages 13042–13054. Niepert. 2019. Attending to future tokens for bidi-

rectional sequence generation. In Proceedings of

Yifan Fan, Xudong Luo, and Pingping Lin. 2020. A the 2019 Conference on Empirical Methods in Nat-

survey of response generation of dialogue systems. ural Language Processing and the 9th International

International Journal of Computer and Information Joint Conference on Natural Language Processing

Engineering, 14(12):461–472. (EMNLP-IJCNLP), pages 1–10, Hong Kong, China.

Association for Computational Linguistics.

Yifan Gao, Jingjing Li, Michael R Lyu, and Irwin King.

2021. Open-retrieval conversational machine read- Haejun Lee, Drew A. Hudson, Kangwook Lee, and

ing. arXiv preprint arXiv:2102.08633. Christopher D. Manning. 2020. SLM: Learning a

discourse language representation with sentence un-

Yifan Gao, Chien-Sheng Wu, Shafiq Joty, Caiming shuffling. In Proceedings of the 2020 Conference

Xiong, Richard Socher, Irwin King, Michael Lyu, on Empirical Methods in Natural Language Process-

and Steven C.H. Hoi. 2020a. Explicit memory ing (EMNLP), pages 1551–1562, Online. Associa-

tracker with coarse-to-fine reasoning for conversa- tion for Computational Linguistics.

tional machine reading. In Proceedings of the 58th

Annual Meeting of the Association for Computa- Friedrich Wilhelm Levi. 1942. Finite geometrical sys-

tional Linguistics, pages 935–945, Online. Associ- tems: six public lectues delivered in February, 1940,

ation for Computational Linguistics. at the University of Calcutta. University of Calcutta.

Yifan Gao, Chien-Sheng Wu, Jingjing Li, Shafiq Joty, Jingjing Liu, Panupong Pasupat, Scott Cyphers, and

Steven C.H. Hoi, Caiming Xiong, Irwin King, and Jim Glass. 2013. Asgard: A portable architecture

Michael Lyu. 2020b. Discern: Discourse-aware en- for multilingual dialogue systems. In 2013 IEEE

tailment reasoning network for conversational ma- International Conference on Acoustics, Speech and

chine reading. In Proceedings of the 2020 Confer- Signal Processing, pages 8386–8390. IEEE.

ence on Empirical Methods in Natural Language

Processing (EMNLP), pages 2439–2449, Online. As- William C Mann and Sandra A Thompson. 1988.

sociation for Computational Linguistics. Rhetorical structure theory: Toward a functional the-

ory of text organization. Text-interdisciplinary Jour-

Jia-Chen Gu, Chongyang Tao, Zhenhua Ling, Can Xu, nal for the Study of Discourse, 8(3):243–281.

Xiubo Geng, and Daxin Jiang. 2021. MPC-BERT: A Siru Ouyang, Zhuosheng Zhang, and Hai Zhao. 2021.

pre-trained language model for multi-party conversa- Dialogue graph modeling for conversational ma-

tion understanding. In Proceedings of the 59th An- chine reading. In The Joint Conference of the 59th

nual Meeting of the Association for Computational Annual Meeting of the Association for Computa-

Linguistics and the 11th International Joint Confer- tional Linguistics and the 11th International Joint

ence on Natural Language Processing (Volume 1: Conference on Natural Language Processing (ACL-

Long Papers), pages 3682–3692, Online. Associa- IJCNLP 2021).

tion for Computational Linguistics.

P. J. Price. 1990. Evaluation of spoken language sys-

Xiaodong Gu, Kang Min Yoo, and Jung-Woo Ha. tems: the ATIS domain. In Speech and Natural Lan-

2020. Dialogbert: Discourse-aware response gen- guage: Proceedings of a Workshop Held at Hidden

eration via learning to recover and rank utterances. Valley, Pennsylvania, June 24-27,1990.

arXiv:2012.01775.

Libo Qin, Tianbao Xie, Wanxiang Che, and Ting Liu.

Minlie Huang, Xiaoyan Zhu, and Jianfeng Gao. 2020. 2021. A survey on spoken language understanding:

Challenges in building intelligent open-domain dia- Recent advances and new frontiers. In the 30th Inter-

log systems. ACM Transactions on Information Sys- national Joint Conference on Artificial Intelligence

tems (TOIS), 38(3):1–32. (IJCAI-21: Survey Track).

Vladimir Karpukhin, Barlas Oguz, Sewon Min, Patrick Chen Qu, Liu Yang, Cen Chen, Minghui Qiu, W. Bruce

Lewis, Ledell Wu, Sergey Edunov, Danqi Chen, and Croft, and Mohit Iyyer. 2020. Open-retrieval con-

Wen-tau Yih. 2020. Dense passage retrieval for versational question answering. In Proceedings of

open-domain question answering. In Proceedings of the 43rd International ACM SIGIR conference on re-

the 2020 Conference on Empirical Methods in Nat- search and development in Information Retrieval, SI-

ural Language Processing (EMNLP), pages 6769– GIR 2020, Virtual Event, China, July 25-30, 2020,

6781, Online. Association for Computational Lin- pages 539–548. ACM.

guistics.

Colin Raffel, Noam Shazeer, Adam Roberts, Katherine

Diederik P. Kingma and Jimmy Ba. 2015. Adam: A Lee, Sharan Narang, Michael Matena, Yanqi Zhou,

method for stochastic optimization. In 3rd Inter- Wei Li, and Peter J Liu. 2020. Exploring the lim-

national Conference on Learning Representations, its of transfer learning with a unified text-to-text

ICLR 2015, San Diego, CA, USA, May 7-9, 2015, transformer. Journal of Machine Learning Research,

Conference Track Proceedings. 21(140):1–67.Pranav Rajpurkar, Robin Jia, and Percy Liang. 2018. Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob

Know what you don’t know: Unanswerable ques- Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz

tions for SQuAD. In Proceedings of the 56th An- Kaiser, and Illia Polosukhin. 2017b. Attention is all

nual Meeting of the Association for Computational you need. In Advances in Neural Information Pro-

Linguistics (Volume 2: Short Papers), pages 784– cessing Systems 30: Annual Conference on Neural

789, Melbourne, Australia. Association for Compu- Information Processing Systems 2017, December 4-

tational Linguistics. 9, 2017, Long Beach, CA, USA, pages 5998–6008.

Pranav Rajpurkar, Jian Zhang, Konstantin Lopyrev, and Nikhil Verma, Abhishek Sharma, Dhiraj Madan, Dan-

Percy Liang. 2016. SQuAD: 100,000+ questions for ish Contractor, Harshit Kumar, and Sachindra Joshi.

machine comprehension of text. In Proceedings of 2020. Neural conversational QA: Learning to rea-

the 2016 Conference on Empirical Methods in Natu- son vs exploiting patterns. In Proceedings of the

ral Language Processing, pages 2383–2392, Austin, 2020 Conference on Empirical Methods in Natural

Texas. Association for Computational Linguistics. Language Processing (EMNLP), pages 7263–7269,

Online. Association for Computational Linguistics.

Siva Reddy, Danqi Chen, and Christopher D. Manning.

Yu Wu, Wei Wu, Dejian Yang, Can Xu, and Zhoujun

2019. CoQA: A conversational question answering

Li. 2018. Neural response generation with dynamic

challenge. Transactions of the Association for Com-

vocabularies. In Proceedings of the Thirty-Second

putational Linguistics, 7:249–266.

AAAI Conference on Artificial Intelligence, (AAAI-

18), the 30th innovative Applications of Artificial In-

Liliang Ren, Kaige Xie, Lu Chen, and Kai Yu. 2018. telligence (IAAI-18), and the 8th AAAI Symposium

Towards universal dialogue state tracking. In Pro- on Educational Advances in Artificial Intelligence

ceedings of the 2018 Conference on Empirical Meth- (EAAI-18), New Orleans, Louisiana, USA, February

ods in Natural Language Processing, pages 2780– 2-7, 2018, pages 5594–5601. AAAI Press.

2786, Brussels, Belgium. Association for Computa-

tional Linguistics. Munazza Zaib, Quan Z Sheng, and Wei Emma Zhang.

2020. A short survey of pre-trained language mod-

Marzieh Saeidi, Max Bartolo, Patrick Lewis, Sameer els for conversational ai-a new age in nlp. In

Singh, Tim Rocktäschel, Mike Sheldon, Guillaume Proceedings of the Australasian Computer Science

Bouchard, and Sebastian Riedel. 2018. Interpreta- Week Multiconference, pages 1–4.

tion of natural language rules in conversational ma-

chine reading. In Proceedings of the 2018 Confer- Linhao Zhang, Dehong Ma, Xiaodong Zhang, Xiaohui

ence on Empirical Methods in Natural Language Yan, and Houfeng Wang. 2020a. Graph lstm with

Processing, pages 2087–2097, Brussels, Belgium. context-gated mechanism for spoken language un-

Association for Computational Linguistics. derstanding. In Proceedings of the AAAI Conference

on Artificial Intelligence, volume 34, pages 9539–

Zhouxing Shi and Minlie Huang. 2019. A deep sequen- 9546.

tial model for discourse parsing on multi-party dia-

logues. In The Thirty-Third AAAI Conference on Ar- Yizhe Zhang, Siqi Sun, Michel Galley, Yen-Chun Chen,

tificial Intelligence, AAAI 2019, The Thirty-First In- Chris Brockett, Xiang Gao, Jianfeng Gao, Jingjing

novative Applications of Artificial Intelligence Con- Liu, and Bill Dolan. 2020b. DIALOGPT : Large-

ference, IAAI 2019, The Ninth AAAI Symposium scale generative pre-training for conversational re-

on Educational Advances in Artificial Intelligence, sponse generation. In Proceedings of the 58th An-

EAAI 2019, Honolulu, Hawaii, USA, January 27 - nual Meeting of the Association for Computational

February 1, 2019, pages 7007–7014. AAAI Press. Linguistics: System Demonstrations, pages 270–

278, Online. Association for Computational Linguis-

tics.

Kai Sun, Dian Yu, Jianshu Chen, Dong Yu, Yejin Choi,

and Claire Cardie. 2019. DREAM: A challenge data Zhuosheng Zhang, Jiangtong Li, Pengfei Zhu, Hai

set and models for dialogue-based reading compre- Zhao, and Gongshen Liu. 2018. Modeling multi-

hension. Transactions of the Association for Com- turn conversation with deep utterance aggregation.

putational Linguistics, 7:217–231. In Proceedings of the 27th International Conference

on Computational Linguistics, pages 3740–3752,

Gokhan Tur and Renato De Mori. 2011. Spoken lan- Santa Fe, New Mexico, USA. Association for Com-

guage understanding: Systems for extracting seman- putational Linguistics.

tic information from speech. John Wiley & Sons.

Zhuosheng Zhang, Yuwei Wu, Hai Zhao, Zuchao Li,

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Shuailiang Zhang, Xi Zhou, and Xiang Zhou. 2020c.

Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Semantics-aware BERT for language understanding.

Kaiser, and Illia Polosukhin. 2017a. Attention is all In The Thirty-Fourth AAAI Conference on Artificial

you need. In Advances in Neural Information Pro- Intelligence, AAAI 2020, The Thirty-Second Inno-

cessing Systems 30: Annual Conference on Neural vative Applications of Artificial Intelligence Confer-

Information Processing Systems 2017, December 4- ence, IAAI 2020, The Tenth AAAI Symposium on Ed-

9, 2017, Long Beach, CA, USA, pages 5998–6008. ucational Advances in Artificial Intelligence, EAAI2020, New York, NY, USA, February 7-12, 2020, pages 9628–9635. AAAI Press. Zhuosheng Zhang, Junjie Yang, and Hai Zhao. 2021. Retrospective reader for machine reading compre- hension. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 35, pages 14506– 14514. Victor Zhong and Luke Zettlemoyer. 2019. E3: Entailment-driven extracting and editing for conver- sational machine reading. In Proceedings of the 57th Annual Meeting of the Association for Com- putational Linguistics, pages 2310–2320, Florence, Italy. Association for Computational Linguistics. Pengfei Zhu, Zhuosheng Zhang, Jiangtong Li, Yafang Huang, and Hai Zhao. 2018. Lingke: a fine-grained multi-turn chatbot for customer service. In Proceed- ings of the 27th International Conference on Compu- tational Linguistics: System Demonstrations, pages 108–112, Santa Fe, New Mexico. Association for Computational Linguistics.

You can also read