SimCSE: Simple Contrastive Learning of Sentence Embeddings


Tianyu Gao†∗ Xingcheng Yao‡∗ Danqi Chen†

† Department of Computer Science, Princeton University

‡ Institute for Interdisciplinary Information Sciences, Tsinghua University

{tianyug,danqic}@cs.princeton.edu

yxc18@mails.tsinghua.edu.cn

Abstract embedding methods and demonstrate that a con-

trastive objective can be extremely effective when

This paper presents SimCSE, a simple con- coupled with pre-trained language models such as

trastive learning framework that greatly ad- BERT (Devlin et al., 2019) or RoBERTa (Liu et al.,

vances the state-of-the-art sentence embed-

arXiv:2104.08821v3 [cs.CL] 9 Sep 2021

2019). We present SimCSE, a simple contrastive

dings. We first describe an unsupervised ap-

proach, which takes an input sentence and sentence embedding framework, which can pro-

predicts itself in a contrastive objective, with duce superior sentence embeddings, from either

only standard dropout used as noise. This unlabeled or labeled data.

simple method works surprisingly well, per- Our unsupervised SimCSE simply predicts the

forming on par with previous supervised coun-

input sentence itself with only dropout (Srivastava

terparts. We find that dropout acts as mini-

mal data augmentation and removing it leads

et al., 2014) used as noise (Figure 1(a)). In other

to a representation collapse. Then, we pro- words, we pass the same sentence to the pre-trained

pose a supervised approach, which incorpo- encoder twice: by applying the standard dropout

rates annotated pairs from natural language twice, we can obtain two different embeddings as

inference datasets into our contrastive learn- “positive pairs”. Then we take other sentences in the

ing framework, by using “entailment” pairs same mini-batch as “negatives”, and the model pre-

as positives and “contradiction” pairs as hard dicts the positive one among negatives. Although it

negatives. We evaluate SimCSE on standard

may appear strikingly simple, this approach outper-

semantic textual similarity (STS) tasks, and

our unsupervised and supervised models using forms training objectives such as predicting next

BERTbase achieve an average of 76.3% and sentences (Logeswaran and Lee, 2018) and discrete

81.6% Spearman’s correlation respectively, a data augmentation (e.g., word deletion and replace-

4.2% and 2.2% improvement compared to ment) by a large margin, and even matches previous

previous best results. We also show—both supervised methods. Through careful analysis, we

theoretically and empirically—that contrastive find that dropout acts as minimal “data augmenta-

learning objective regularizes pre-trained em-

tion” of hidden representations, while removing it

beddings’ anisotropic space to be more uni-

form, and it better aligns positive pairs when

leads to a representation collapse.

supervised signals are available.1 Our supervised SimCSE builds upon the recent

success of using natural language inference (NLI)

1 Introduction datasets for sentence embeddings (Conneau et al.,

2017; Reimers and Gurevych, 2019) and incorpo-

Learning universal sentence embeddings is a fun-

rates annotated sentence pairs in contrastive learn-

damental problem in natural language process-

ing (Figure 1(b)). Unlike previous work that casts

ing and has been studied extensively in the litera-

it as a 3-way classification task (entailment, neu-

ture (Kiros et al., 2015; Hill et al., 2016; Conneau

tral and contradiction), we leverage the fact that

et al., 2017; Logeswaran and Lee, 2018; Cer et al.,

entailment pairs can be naturally used as positive

2018; Reimers and Gurevych, 2019, inter alia).

instances. We also find that adding correspond-

In this work, we advance state-of-the-art sentence

ing contradiction pairs as hard negatives further

* The first two authors contributed equally (listed in alpha- improves performance. This simple use of NLI

betical order). This work was done when Xingcheng visited datasets achieves a substantial improvement com-

the Princeton NLP group remotely.

1

Our code and pre-trained models are publicly available at pared to prior methods using the same datasets.

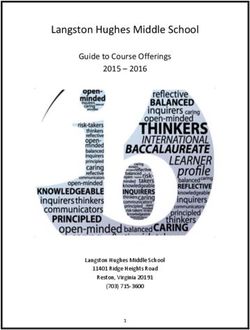

https://github.com/princeton-nlp/SimCSE. We also compare to other labeled sentence-pair(a) Unsupervised SimCSE (b) Supervised SimCSE

Different hidden dropout masks

in two forward passes

Two dogs are running. Two dogs There are animals outdoors.

label=entailment

are running.

A man surfing on the sea. E The pets are sitting on a couch.

label=contradiction

A kid is on a skateboard. A man surfing There is a man.

label=entailment

on the sea. E E

The man wears a business suit.

E Encoder label=contradiction

Positive instance A kid is on a A kid is skateboarding.

label=entailment

Negative instance skateboard.

A kit is inside the house.

label=contradiction

Figure 1: (a) Unsupervised SimCSE predicts the input sentence itself from in-batch negatives, with different hidden

dropout masks applied. (b) Supervised SimCSE leverages the NLI datasets and takes the entailment (premise-

hypothesis) pairs as positives, and contradiction pairs as well as other in-batch instances as negatives.

datasets and find that NLI datasets are especially effective for learning sentence embeddings.

To better understand the strong performance of SimCSE, we borrow the analysis tool from Wang and Isola (2020), which takes alignment between semantically-related positive pairs and uniformity of the whole representation space to measure the quality of learned embeddings. Through empirical analysis, we find that our unsupervised SimCSE essentially improves uniformity while avoiding degenerated alignment via dropout noise, thus improving the expressiveness of the representations. The same analysis shows that the NLI training signal can further improve alignment between positive pairs and produce better sentence embeddings. We also draw a connection to the recent findings that pre-trained word embeddings suffer from anisotropy (Ethayarajh, 2019; Li et al., 2020) and prove, through a spectrum perspective, that the contrastive learning objective “flattens” the singular value distribution of the sentence embedding space, hence improving uniformity.

We conduct a comprehensive evaluation of SimCSE on seven standard semantic textual similarity (STS) tasks (Agirre et al., 2012, 2013, 2014, 2015, 2016; Cer et al., 2017; Marelli et al., 2014) and seven transfer tasks (Conneau and Kiela, 2018). On the STS tasks, our unsupervised and supervised models achieve a 76.3% and 81.6% averaged Spearman’s correlation respectively using BERTbase, a 4.2% and 2.2% improvement compared to previous best results. We also achieve competitive performance on the transfer tasks. Finally, we identify an incoherent evaluation issue in the literature and consolidate results of different settings for future work in evaluation of sentence embeddings.

2 Background: Contrastive Learning

Contrastive learning aims to learn effective representation by pulling semantically close neighbors together and pushing apart non-neighbors (Hadsell et al., 2006). It assumes a set of paired examples $D = \{(x_i, x_i^+)\}_{i=1}^{m}$, where $x_i$ and $x_i^+$ are semantically related. We follow the contrastive framework in Chen et al. (2020) and take a cross-entropy objective with in-batch negatives (Chen et al., 2017; Henderson et al., 2017): let $h_i$ and $h_i^+$ denote the representations of $x_i$ and $x_i^+$, the training objective for $(x_i, x_i^+)$ with a mini-batch of $N$ pairs is:

$$\ell_i = -\log \frac{e^{\mathrm{sim}(h_i, h_i^+)/\tau}}{\sum_{j=1}^{N} e^{\mathrm{sim}(h_i, h_j^+)/\tau}}, \tag{1}$$

where $\tau$ is a temperature hyperparameter and $\mathrm{sim}(h_1, h_2)$ is the cosine similarity $\frac{h_1^\top h_2}{\|h_1\| \cdot \|h_2\|}$. In this work, we encode input sentences using a pre-trained language model such as BERT (Devlin et al., 2019) or RoBERTa (Liu et al., 2019): $h = f_\theta(x)$, and then fine-tune all the parameters using the contrastive learning objective (Eq. 1).

Positive instances. One critical question in contrastive learning is how to construct $(x_i, x_i^+)$ pairs. In visual representations, an effective solution is to take two random transformations of the same image (e.g., cropping, flipping, distortion and rotation) as $x_i$ and $x_i^+$ (Dosovitskiy et al., 2014). A similar approach has been recently adopted in language representations (Wu et al., 2020; Meng et al., 2021) by applying augmentation techniques such as word deletion, reordering, and substitution. However, data augmentation in NLP is inherently difficult because of its discrete nature. As we will see in §3, simply using standard dropout on intermediate representations outperforms these discrete operators.

In NLP, a similar contrastive learning objective has been explored in different contexts (Henderson et al., 2017; Gillick et al., 2019; Karpukhin et al., 2020). In these cases, $(x_i, x_i^+)$ are collected from supervised datasets such as question-passage pairs. Because of the distinct nature of $x_i$ and $x_i^+$, these approaches always use a dual-encoder framework, i.e., using two independent encoders $f_{\theta_1}$ and $f_{\theta_2}$ for $x_i$ and $x_i^+$. For sentence embeddings, Logeswaran and Lee (2018) also use contrastive learning with a dual-encoder approach, by forming current sentence and next sentence as $(x_i, x_i^+)$.

| Data augmentation | STS-B |
|---|---|
| None (unsup. SimCSE) | 82.5 |
| Crop 10% | 77.8 |
| Crop 20% | 71.4 |
| Crop 30% | 63.6 |
| Word deletion 10% | 75.9 |
| Word deletion 20% | 72.2 |
| Word deletion 30% | 68.2 |
| Delete one word | 75.9 |
| w/o dropout | 74.2 |
| Synonym replacement | 77.4 |
| MLM 15% | 62.2 |

Table 1: Comparison of data augmentations on STS-B development set (Spearman’s correlation). Crop k%: keep 100-k% of the length; word deletion k%: delete k% words; Synonym replacement: use nlpaug (Ma, 2019) to randomly replace one word with its synonym; MLM k%: use BERTbase to replace k% of words.

| Training objective | $f_\theta$ | $(f_{\theta_1}, f_{\theta_2})$ |
|---|---|---|
| Next sentence | 67.1 | 68.9 |
| Next 3 sentences | 67.4 | 68.8 |
| Delete one word | 75.9 | 73.1 |
| Unsupervised SimCSE | 82.5 | 80.7 |

Table 2: Comparison of different unsupervised objectives (STS-B development set, Spearman’s correlation). The two columns denote whether we use one encoder or two independent encoders. Next 3 sentences: randomly sample one from the next 3 sentences. Delete one word: delete one word randomly (see Table 1).

Alignment and uniformity. Recently, Wang and Isola (2020) identify two key properties related to contrastive learning, alignment and uniformity, and propose to use them to measure the quality of representations. Given a distribution of positive pairs $p_{\text{pos}}$, alignment calculates expected distance between embeddings of the paired instances (assuming representations are already normalized):

$$\ell_{\text{align}} \triangleq \mathbb{E}_{(x, x^+) \sim p_{\text{pos}}} \left\| f(x) - f(x^+) \right\|^2. \tag{2}$$

On the other hand, uniformity measures how well the embeddings are uniformly distributed:

$$\ell_{\text{uniform}} \triangleq \log \mathbb{E}_{x, y \,\overset{\text{i.i.d.}}{\sim}\, p_{\text{data}}} e^{-2 \left\| f(x) - f(y) \right\|^2}. \tag{3}$$
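As a rough illustration of Eqs. 1 to 3, the sketch below computes the in-batch contrastive loss, the alignment loss and the uniformity loss on small hand-written vectors. It is not the authors' implementation: the function names (`contrastive_loss`, `align_loss`, `uniform_loss`), the toy vectors, and the temperature value 0.05 are assumptions made only for this example, and the uniformity value is estimated over all distinct pairs of a finite sample rather than over i.i.d. draws.

```python
import math

def normalize(v):
    """Scale a vector to unit L2 norm."""
    n = math.sqrt(sum(a * a for a in v))
    return [a / n for a in v]

def cosine(u, v):
    """Cosine similarity h1^T h2 / (||h1|| * ||h2||)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def sq_dist(u, v):
    """Squared Euclidean distance."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def contrastive_loss(h, h_pos, tau=0.05):
    """Mean of Eq. 1 over a mini-batch: h[i] is paired with h_pos[i],
    and every other h_pos[j] acts as an in-batch negative."""
    total = 0.0
    for i, hi in enumerate(h):
        logits = [cosine(hi, hj) / tau for hj in h_pos]
        m = max(logits)  # subtract the max for numerical stability
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denominator - logits[i]
    return total / len(h)

def align_loss(pairs):
    """Eq. 2: mean squared distance between normalized positive pairs."""
    return sum(sq_dist(normalize(x), normalize(y)) for x, y in pairs) / len(pairs)

def uniform_loss(points):
    """Eq. 3: log of the mean of exp(-2 * ||x - y||^2) over distinct pairs."""
    pts = [normalize(p) for p in points]
    vals = [math.exp(-2.0 * sq_dist(pts[i], pts[j]))
            for i in range(len(pts)) for j in range(i + 1, len(pts))]
    return math.log(sum(vals) / len(vals))

# Toy batch: each positive is a slightly perturbed copy of its anchor.
anchors = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
positives = [[0.9, 0.1], [0.1, 0.9], [-0.9, -0.1]]
shuffled = [positives[1], positives[2], positives[0]]  # deliberately mismatched

loss_matched = contrastive_loss(anchors, positives)
loss_mismatched = contrastive_loss(anchors, shuffled)
alignment = align_loss(list(zip(anchors, positives)))
uniformity_spread = uniform_loss(anchors)
uniformity_collapsed = uniform_loss([[1.0, 0.0], [1.0, 0.01], [1.0, -0.01]])
```

In this toy setting the loss is lower when each anchor is matched with its own positive than when the pairing is shuffled, and the spread-out points obtain a lower (better) uniformity value than the nearly collapsed ones.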

Here, $p_{\text{data}}$ denotes the data distribution. These two metrics are well aligned with the objective of contrastive learning: positive instances should stay close and embeddings for random instances should scatter on the hypersphere. In the following sections, we will also use the two metrics to justify the inner workings of our approaches.

3 Unsupervised SimCSE

The idea of unsupervised SimCSE is extremely simple: we take a collection of sentences $\{x_i\}_{i=1}^{m}$ and use $x_i^+ = x_i$. The key ingredient to get this to work with identical positive pairs is through the use of independently sampled dropout masks for $x_i$ and $x_i^+$. In standard training of Transformers (Vaswani et al., 2017), there are dropout masks placed on fully-connected layers as well as attention probabilities (default $p = 0.1$). We denote $h_i^{z} = f_\theta(x_i, z)$ where $z$ is a random mask for dropout. We simply feed the same input to the encoder twice and get two embeddings with different dropout masks $z, z'$, and the training objective of SimCSE becomes:

$$\ell_i = -\log \frac{e^{\mathrm{sim}(h_i^{z_i}, h_i^{z'_i})/\tau}}{\sum_{j=1}^{N} e^{\mathrm{sim}(h_i^{z_i}, h_j^{z'_j})/\tau}}, \tag{4}$$

for a mini-batch of $N$ sentences. Note that $z$ is just the standard dropout mask in Transformers and we do not add any additional dropout.

Dropout noise as data augmentation. We view it as a minimal form of data augmentation: the positive pair takes exactly the same sentence, and their embeddings only differ in dropout masks. We compare this approach to other training objectives on the STS-B development set (Cer et al., 2017).²

² We randomly sample $10^6$ sentences from English Wikipedia and fine-tune BERTbase with learning rate = 3e-5, N = 64. In all our experiments, no STS training sets are used.

Table 1 compares our approach to common data augmentation techniques such as crop, word deletion and replacement, which can be viewed as

$h = f_\theta(g(x), z)$ and $g$ is a (random) discrete operator on $x$. We note that even deleting one word would hurt performance and none of the discrete augmentations outperforms dropout noise.

We also compare this self-prediction training objective to the next-sentence objective used in Logeswaran and Lee (2018), taking either one encoder or two independent encoders. As shown in Table 2, we find that SimCSE performs much better than the next-sentence objectives (82.5 vs 67.4 on STS-B) and using one encoder instead of two makes a significant difference in our approach.

Why does it work? To further understand the role of dropout noise in unsupervised SimCSE, we try out different dropout rates in Table 3 and observe that all the variants underperform the default dropout probability $p = 0.1$ from Transformers. We find two extreme cases particularly interesting: “no dropout” ($p = 0$) and “fixed 0.1” (using default dropout $p = 0.1$ but the same dropout masks for the pair). In both cases, the resulting embeddings for the pair are exactly the same, and it leads to a dramatic performance degradation. We take the checkpoints of these models every 10 steps during training and visualize the alignment and uniformity metrics³ in Figure 2, along with a simple data augmentation model “delete one word”. As clearly shown, starting from pre-trained checkpoints, all models greatly improve uniformity. However, the alignment of the two special variants also degrades drastically, while our unsupervised SimCSE keeps a steady alignment, thanks to the use of dropout noise. It also demonstrates that starting from a pre-trained checkpoint is crucial, for it provides good initial alignment. Finally, “delete one word” improves the alignment yet achieves a smaller gain on the uniformity metric, and eventually underperforms unsupervised SimCSE.

³ We take STS-B pairs with a score higher than 4 as $p_{\text{pos}}$ and all STS-B sentences as $p_{\text{data}}$.

| p | 0.0 | 0.01 | 0.05 | 0.1 | 0.15 | 0.2 | 0.5 | Fixed 0.1 |
|---|---|---|---|---|---|---|---|---|
| STS-B | 71.1 | 72.6 | 81.1 | 82.5 | 81.4 | 80.5 | 71.0 | 43.6 |

Table 3: Effects of different dropout probabilities $p$ on the STS-B development set (Spearman’s correlation, BERTbase). Fixed 0.1: default 0.1 dropout rate but apply the same dropout mask on both $x_i$ and $x_i^+$.

[Figure 2 plot: $\ell_{\text{align}}$ (vertical axis, 0.200 to 0.400) against $\ell_{\text{uniform}}$ (horizontal axis, −2.6 to −1.6) for “Fixed 0.1”, “No dropout”, “Delete one word”, and “Unsup. SimCSE”; arrows mark the training direction.]

Figure 2: $\ell_{\text{align}}$-$\ell_{\text{uniform}}$ plot for unsupervised SimCSE, “no dropout”, “fixed 0.1”, and “delete one word”. We visualize checkpoints every 10 training steps and the arrows indicate the training direction. For both $\ell_{\text{align}}$ and $\ell_{\text{uniform}}$, lower numbers are better.

4 Supervised SimCSE

We have demonstrated that adding dropout noise is able to keep a good alignment for positive pairs $(x, x^+) \sim p_{\text{pos}}$. In this section, we study whether we can leverage supervised datasets to provide better training signals for improving alignment of our approach. Prior work (Conneau et al., 2017; Reimers and Gurevych, 2019) has demonstrated that supervised natural language inference (NLI) datasets (Bowman et al., 2015; Williams et al., 2018) are effective for learning sentence embeddings, by predicting whether the relationship between two sentences is entailment, neutral or contradiction. In our contrastive learning framework, we instead directly take $(x_i, x_i^+)$ pairs from supervised datasets and use them to optimize Eq. 1.

Choices of labeled data. We first explore which supervised datasets are especially suitable for constructing positive pairs $(x_i, x_i^+)$. We experiment with a number of datasets with sentence-pair examples, including 1) QQP⁴: Quora question pairs; 2) Flickr30k (Young et al., 2014): each image is annotated with 5 human-written captions and we consider any two captions of the same image as a positive pair; 3) ParaNMT (Wieting and Gimpel, 2018): a large-scale back-translation paraphrase dataset⁵; and finally 4) NLI datasets: SNLI (Bowman et al., 2015) and MNLI (Williams et al., 2018).

We train the contrastive learning model (Eq. 1) with different datasets and compare the results in Table 4. For a fair comparison, we also run experiments with the same # of training pairs. Among all the options, using entailment pairs from the NLI (SNLI + MNLI) datasets performs the best. We think this is reasonable, as the NLI datasets consist of high-quality and crowd-sourced pairs. Also, human annotators are expected to write the hypotheses manually based on the premises and two sentences tend to have less lexical overlap. For instance, we find that the lexical overlap (F1 measured between two bags of words) for the entailment pairs (SNLI + MNLI) is 39%, while they are 60% and 55% for QQP and ParaNMT.

⁴ https://www.quora.com/q/quoradata/

⁵ ParaNMT is automatically constructed by machine translation systems. Strictly speaking, we should not call it “supervised”. It underperforms our unsupervised SimCSE though.

| Dataset | sample | full |
|---|---|---|
| Unsup. SimCSE (1m) | - | 82.5 |
| QQP (134k) | 81.8 | 81.8 |
| Flickr30k (318k) | 81.5 | 81.4 |
| ParaNMT (5m) | 79.7 | 78.7 |
| SNLI+MNLI: entailment (314k) | 84.1 | 84.9 |
| SNLI+MNLI: neutral (314k)⁸ | 82.6 | 82.9 |
| SNLI+MNLI: contradiction (314k) | 77.5 | 77.6 |
| SNLI+MNLI: all (942k) | 81.7 | 81.9 |
| SNLI+MNLI: entailment + hard neg. | - | 86.2 |
| SNLI+MNLI: entailment + hard neg. + ANLI (52k) | - | 85.0 |

Table 4: Comparisons of different supervised datasets as positive pairs. Results are Spearman’s correlations on the STS-B development set using BERTbase (we use the same hyperparameters as the final SimCSE model). Numbers in brackets denote the # of pairs. Sample: subsampling 134k positive pairs for a fair comparison among datasets; full: using the full dataset. In the last block, we use entailment pairs as positives and contradiction pairs as hard negatives (our final model).

⁸ Though our final model only takes entailment pairs as positive instances, here we also try taking neutral and contradiction pairs from the NLI datasets as positive pairs.

Contradiction as hard negatives. Finally, we further take advantage of the NLI datasets by using their contradiction pairs as hard negatives⁶. In NLI datasets, given one premise, annotators are required to manually write one sentence that is absolutely true (entailment), one that might be true (neutral), and one that is definitely false (contradiction). Therefore, for each premise and its entailment hypothesis, there is an accompanying contradiction hypothesis⁷ (see Figure 1 for an example).

Formally, we extend $(x_i, x_i^+)$ to $(x_i, x_i^+, x_i^-)$, where $x_i$ is the premise, $x_i^+$ and $x_i^-$ are entailment and contradiction hypotheses. The training objective $\ell_i$ is then defined by ($N$ is mini-batch size):

$$-\log \frac{e^{\mathrm{sim}(h_i, h_i^+)/\tau}}{\sum_{j=1}^{N} \left( e^{\mathrm{sim}(h_i, h_j^+)/\tau} + e^{\mathrm{sim}(h_i, h_j^-)/\tau} \right)}. \tag{5}$$

As shown in Table 4, adding hard negatives can further improve performance (84.9 → 86.2) and this is our final supervised SimCSE. We also tried to add the ANLI dataset (Nie et al., 2020) or combine it with our unsupervised SimCSE approach, but didn’t find a meaningful improvement. We also considered a dual encoder framework in supervised SimCSE and it hurt performance (86.2 → 84.2).

⁶ We also experimented with adding neutral hypotheses as hard negatives. See Section 6.3 for more discussion.

⁷ In fact, one premise can have multiple contradiction hypotheses. In our implementation, we only sample one as the hard negative and we did not find a difference by using more.

5 Connection to Anisotropy

Recent work identifies an anisotropy problem in language representations (Ethayarajh, 2019; Li et al., 2020), i.e., the learned embeddings occupy a narrow cone in the vector space, which severely limits their expressiveness. Gao et al. (2019) demonstrate that language models trained with tied input/output embeddings lead to anisotropic word embeddings, and this is further observed by Ethayarajh (2019) in pre-trained contextual representations. Wang et al. (2020) show that singular values of the word embedding matrix in a language model decay drastically: except for a few dominating singular values, all others are close to zero.

A simple way to alleviate the problem is post-processing, either to eliminate the dominant principal components (Arora et al., 2017; Mu and Viswanath, 2018), or to map embeddings to an isotropic distribution (Li et al., 2020; Su et al., 2021). Another common solution is to add regularization during training (Gao et al., 2019; Wang et al., 2020). In this work, we show, both theoretically and empirically, that the contrastive objective can also alleviate the anisotropy problem.

The anisotropy problem is naturally connected to uniformity (Wang and Isola, 2020), both highlighting that embeddings should be evenly distributed in the space. Intuitively, optimizing the contrastive learning objective can improve uniformity (or ease the anisotropy problem), as the objective pushes negative instances apart. Here, we take a singular spectrum perspective, which is a common practice in analyzing word embeddings (Mu and Viswanath, 2018; Gao et al., 2019; Wang et al., 2020), and show that the contrastive objective can “flatten” the singular value distribution of sentence embeddings and make the representations more isotropic.

Following Wang and Isola (2020), the asymptotics of the contrastive learning objective (Eq. 1) can be expressed by the following equation when the number of negative instances approaches infinity (assuming $f(x)$ is normalized):

$$-\frac{1}{\tau} \mathbb{E}_{(x, x^+) \sim p_{\text{pos}}} \left[ f(x)^\top f(x^+) \right] + \mathbb{E}_{x \sim p_{\text{data}}} \left[ \log \mathbb{E}_{x^- \sim p_{\text{data}}} \left[ e^{f(x)^\top f(x^-)/\tau} \right] \right], \tag{6}$$

where the first term keeps positive instances similar and the second pushes negative pairs apart. When $p_{\text{data}}$ is uniform over finite samples $\{x_i\}_{i=1}^{m}$, with $h_i = f(x_i)$, we can derive the following formula from the second term with Jensen’s inequality:

$$\mathbb{E}_{x \sim p_{\text{data}}} \left[ \log \mathbb{E}_{x^- \sim p_{\text{data}}} \left[ e^{f(x)^\top f(x^-)/\tau} \right] \right] = \frac{1}{m} \sum_{i=1}^{m} \log \left( \frac{1}{m} \sum_{j=1}^{m} e^{h_i^\top h_j/\tau} \right) \ge \frac{1}{\tau m^2} \sum_{i=1}^{m} \sum_{j=1}^{m} h_i^\top h_j. \tag{7}$$

Let $W$ be the sentence embedding matrix corresponding to $\{x_i\}_{i=1}^{m}$, i.e., the $i$-th row of $W$ is $h_i$. Optimizing the second term in Eq. 6 essentially minimizes an upper bound of the summation of all elements in $WW^\top$, i.e., $\mathrm{Sum}(WW^\top) = \sum_{i=1}^{m} \sum_{j=1}^{m} h_i^\top h_j$.

Since we normalize $h_i$, all elements on the diagonal of $WW^\top$ are 1 and then $\mathrm{tr}(WW^\top)$ (the sum of all eigenvalues) is a constant. According to Merikoski (1984), if all elements in $WW^\top$ are positive, which is the case most of the time according to Figure G.1, then $\mathrm{Sum}(WW^\top)$ is an upper bound for the largest eigenvalue of $WW^\top$. When minimizing the second term in Eq. 6, we reduce the top eigenvalue of $WW^\top$ and inherently “flatten” the singular spectrum of the embedding space. Therefore, contrastive learning is expected to alleviate the representation degeneration problem and improve uniformity of sentence embeddings.

Compared to post-processing methods in Li et al. (2020); Su et al. (2021), which only aim to encourage isotropic representations, contrastive learning also optimizes for aligning positive pairs by the first term in Eq. 6, which is the key to the success of SimCSE. A quantitative analysis is given in §7.

6 Experiment

6.1 Evaluation Setup

We conduct our experiments on 7 semantic textual similarity (STS) tasks. Note that all our STS experiments are fully unsupervised and no STS training sets are used. Even for supervised SimCSE, we simply mean that we take extra labeled datasets for training, following previous work (Conneau et al., 2017). We also evaluate 7 transfer learning tasks and provide detailed results in Appendix E. We share a similar sentiment with Reimers and Gurevych (2019) that the main goal of sentence embeddings is to cluster semantically similar sentences and hence take STS as the main result.

Semantic textual similarity tasks. We evaluate on 7 STS tasks: STS 2012–2016 (Agirre et al., 2012, 2013, 2014, 2015, 2016), STS Benchmark (Cer et al., 2017) and SICK-Relatedness (Marelli et al., 2014). When comparing to previous work, we identify invalid comparison patterns in published papers in the evaluation settings, including (a) whether to use an additional regressor, (b) Spearman’s vs Pearson’s correlation, and (c) how the results are aggregated (Table B.1). We discuss the detailed differences in Appendix B and choose to follow the setting of Reimers and Gurevych (2019) in our evaluation (no additional regressor, Spearman’s correlation, and “all” aggregation). We also report our replicated study of previous work as well as our results evaluated in a different setting in Table B.2 and Table B.3. We call for unifying the setting in evaluating sentence embeddings for future research.

Training details. We start from pre-trained checkpoints of BERT (Devlin et al., 2019) (uncased) or RoBERTa (Liu et al., 2019) (cased) and take the [CLS] representation as the sentence embedding⁹ (see §6.3 for comparison between different pooling methods). We train unsupervised SimCSE on $10^6$ randomly sampled sentences from English Wikipedia, and train supervised SimCSE on the combination of MNLI and SNLI datasets (314k). More training details can be found in Appendix A.

⁹ There is an MLP layer over [CLS] in BERT’s original implementation and we keep it with random initialization.

| Model | STS12 | STS13 | STS14 | STS15 | STS16 | STS-B | SICK-R | Avg. |
|---|---|---|---|---|---|---|---|---|
| Unsupervised models | | | | | | | | |
| GloVe embeddings (avg.)♣ | 55.14 | 70.66 | 59.73 | 68.25 | 63.66 | 58.02 | 53.76 | 61.32 |
| BERTbase (first-last avg.) | 39.70 | 59.38 | 49.67 | 66.03 | 66.19 | 53.87 | 62.06 | 56.70 |
| BERTbase-flow | 58.40 | 67.10 | 60.85 | 75.16 | 71.22 | 68.66 | 64.47 | 66.55 |
| BERTbase-whitening | 57.83 | 66.90 | 60.90 | 75.08 | 71.31 | 68.24 | 63.73 | 66.28 |
| IS-BERTbase♥ | 56.77 | 69.24 | 61.21 | 75.23 | 70.16 | 69.21 | 64.25 | 66.58 |
| CT-BERTbase | 61.63 | 76.80 | 68.47 | 77.50 | 76.48 | 74.31 | 69.19 | 72.05 |
| ∗ SimCSE-BERTbase | 68.40 | 82.41 | 74.38 | 80.91 | 78.56 | 76.85 | 72.23 | 76.25 |
| RoBERTabase (first-last avg.) | 40.88 | 58.74 | 49.07 | 65.63 | 61.48 | 58.55 | 61.63 | 56.57 |
| RoBERTabase-whitening | 46.99 | 63.24 | 57.23 | 71.36 | 68.99 | 61.36 | 62.91 | 61.73 |
| DeCLUTR-RoBERTabase | 52.41 | 75.19 | 65.52 | 77.12 | 78.63 | 72.41 | 68.62 | 69.99 |
| ∗ SimCSE-RoBERTabase | 70.16 | 81.77 | 73.24 | 81.36 | 80.65 | 80.22 | 68.56 | 76.57 |
| ∗ SimCSE-RoBERTalarge | 72.86 | 83.99 | 75.62 | 84.77 | 81.80 | 81.98 | 71.26 | 78.90 |
| Supervised models | | | | | | | | |
| InferSent-GloVe♣ | 52.86 | 66.75 | 62.15 | 72.77 | 66.87 | 68.03 | 65.65 | 65.01 |
| Universal Sentence Encoder♣ | 64.49 | 67.80 | 64.61 | 76.83 | 73.18 | 74.92 | 76.69 | 71.22 |
| SBERTbase♣ | 70.97 | 76.53 | 73.19 | 79.09 | 74.30 | 77.03 | 72.91 | 74.89 |
| SBERTbase-flow | 69.78 | 77.27 | 74.35 | 82.01 | 77.46 | 79.12 | 76.21 | 76.60 |
| SBERTbase-whitening | 69.65 | 77.57 | 74.66 | 82.27 | 78.39 | 79.52 | 76.91 | 77.00 |
| CT-SBERTbase | 74.84 | 83.20 | 78.07 | 83.84 | 77.93 | 81.46 | 76.42 | 79.39 |
| ∗ SimCSE-BERTbase | 75.30 | 84.67 | 80.19 | 85.40 | 80.82 | 84.25 | 80.39 | 81.57 |
| SRoBERTabase♣ | 71.54 | 72.49 | 70.80 | 78.74 | 73.69 | 77.77 | 74.46 | 74.21 |
| SRoBERTabase-whitening | 70.46 | 77.07 | 74.46 | 81.64 | 76.43 | 79.49 | 76.65 | 76.60 |
| ∗ SimCSE-RoBERTabase | 76.53 | 85.21 | 80.95 | 86.03 | 82.57 | 85.83 | 80.50 | 82.52 |
| ∗ SimCSE-RoBERTalarge | 77.46 | 87.27 | 82.36 | 86.66 | 83.93 | 86.70 | 81.95 | 83.76 |

Table 5: Sentence embedding performance on STS tasks (Spearman’s correlation, “all” setting). We highlight the highest numbers among models with the same pre-trained encoder. ♣: results from Reimers and Gurevych (2019); ♥: results from Zhang et al. (2020); all other results are reproduced or reevaluated by ourselves. For BERT-flow (Li et al., 2020) and whitening (Su et al., 2021), we only report the “NLI” setting (see Table C.1).
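The scores in Table 5 are Spearman's correlations computed without an additional regressor. A minimal sketch of that metric follows, assuming (as is common for STS evaluation) that each sentence pair is scored by the cosine similarity of its two embeddings. The helper names (`rank`, `pearson`, `spearman`), the toy embeddings and the gold ratings are hypothetical and serve only to illustrate the computation; this is not the authors' evaluation code.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def rank(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2.0 + 1.0
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def spearman(x, y):
    """Spearman's correlation is the Pearson correlation of the ranks."""
    return pearson(rank(x), rank(y))

# Hypothetical embeddings for four sentence pairs and their gold ratings (0 to 5).
pairs = [
    ([1.0, 0.0], [1.0, 0.1]),
    ([1.0, 0.0], [0.7, 0.7]),
    ([1.0, 0.0], [0.0, 1.0]),
    ([1.0, 0.0], [-1.0, 0.2]),
]
gold = [4.8, 3.0, 1.5, 0.2]

predicted = [cosine(u, v) for u, v in pairs]
rho = spearman(predicted, gold)
```

Because only the ordering of the predicted similarities matters, no regressor has to be fitted on top of the embeddings. Under the “all” aggregation named in the caption, the pairs from all subsets of a task would be pooled before the correlation is computed.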

6.2 Main Results

We compare unsupervised and supervised SimCSE to previous state-of-the-art sentence embedding methods on STS tasks. Unsupervised baselines include average GloVe embeddings (Pennington et al., 2014), average BERT or RoBERTa embeddings¹⁰, and post-processing methods such as BERT-flow (Li et al., 2020) and BERT-whitening (Su et al., 2021). We also compare to several recent methods using a contrastive objective, including 1) IS-BERT (Zhang et al., 2020), which maximizes the agreement between global and local features; 2) DeCLUTR (Giorgi et al., 2021), which takes different spans from the same document as positive pairs; 3) CT (Carlsson et al., 2021), which aligns embeddings of the same sentence from two different encoders.¹¹ Other supervised methods include InferSent (Conneau et al., 2017), Universal Sentence Encoder (Cer et al., 2018), and SBERT/SRoBERTa (Reimers and Gurevych, 2019) with post-processing methods (BERT-flow, whitening, and CT). We provide more details of these baselines in Appendix C.

¹⁰ Following Su et al. (2021), we take the average of the first and the last layers, which is better than only taking the last.

¹¹ We do not compare to CLEAR (Wu et al., 2020), because they use their own version of pre-trained models, and the numbers appear to be much lower. Also note that CT is a concurrent work to ours.

Table 5 shows the evaluation results on 7 STS tasks. SimCSE can substantially improve results on all the datasets with or without extra NLI supervision, greatly outperforming the previous state-of-the-art models. Specifically, our unsupervised SimCSE-BERTbase improves the previous best averaged Spearman’s correlation from 72.05% to 76.25%, even comparable to supervised baselines. When using NLI datasets, SimCSE-BERTbase further pushes the state-of-the-art results to 81.57%. The gains are more pronounced on RoBERTa encoders, and our supervised SimCSE achieves 83.76% with RoBERTalarge.

In Appendix E, we show that SimCSE also achieves on par or better transfer task performance compared to existing work, and an auxiliary MLM objective can further boost performance.

| Pooler | Unsup. | Sup. |
|---|---|---|
| [CLS] w/ MLP | 81.7 | 86.2 |
| [CLS] w/ MLP (train) | 82.5 | 85.8 |
| [CLS] w/o MLP | 80.9 | 86.2 |
| First-last avg. | 81.2 | 86.1 |

Table 6: Ablation studies of different pooling methods in unsupervised and supervised SimCSE. [CLS] w/ MLP (train): using MLP on [CLS] during training but removing it during testing. The results are based on the development set of STS-B using BERTbase.

| Hard neg | N/A | Contradiction | Contradiction | Contradiction | Contra.+Neutral |
|---|---|---|---|---|---|
| α | - | 0.5 | 1.0 | 2.0 | 1.0 |
| STS-B | 84.9 | 86.1 | 86.2 | 86.2 | 85.3 |

Table 7: STS-B development results with different hard negative policies. “N/A”: no hard negative.

6.3 Ablation Studies

We investigate the impact of different pooling methods and hard negatives. All reported results in this section are based on the STS-B development set. We provide more ablation studies (normalization, temperature, and MLM objectives) in Appendix D.

Pooling methods. Reimers and Gurevych (2019); Li et al. (2020) show that taking the average embeddings of pre-trained models (especially from both the first and last layers) leads to better performance than [CLS]. Table 6 shows the comparison between different pooling methods in both unsupervised and supervised SimCSE. For [CLS] representation, the original BERT implementation takes an extra MLP layer on top of it. Here, we consider three different settings for [CLS]: 1) keeping the MLP layer; 2) no MLP layer; 3) keeping MLP during training but removing it at testing time. We find that for unsupervised SimCSE, taking [CLS] rep-

incorporate weighting of different negatives:

$$-\log \frac{e^{\mathrm{sim}(h_i, h_i^+)/\tau}}{\sum_{j=1}^{N} \left( e^{\mathrm{sim}(h_i, h_j^+)/\tau} + \alpha^{\mathbb{1}_i^j} e^{\mathrm{sim}(h_i, h_j^-)/\tau} \right)}, \tag{8}$$

where $\mathbb{1}_i^j \in \{0, 1\}$ is an indicator that equals 1 if and only if $i = j$. We train SimCSE with different values of $\alpha$ and evaluate the trained models on the development set of STS-B. We also consider taking neutral hypotheses as hard negatives. As shown in Table 7, $\alpha = 1$ performs the best, and neutral hypotheses do not bring further gains.

7 Analysis

In this section, we conduct further analyses to understand the inner workings of SimCSE.

Uniformity and alignment. Figure 3 shows uniformity and alignment of different sentence embedding models along with their averaged STS results. In general, models which have both better alignment and uniformity achieve better performance, confirming the findings in Wang and Isola (2020). We also observe that (1) though pre-trained embeddings have good alignment, their uniformity is poor (i.e., the embeddings are highly anisotropic); (2) post-processing methods like BERT-flow and BERT-whitening greatly improve uniformity but also suffer a degeneration in alignment; (3) unsupervised SimCSE effectively improves uniformity of pre-trained embeddings while keeping a good alignment; (4) incorporating supervised data in SimCSE further amends alignment. In Appendix F, we further show that SimCSE can effectively flatten the singular value distribution of pre-trained embeddings. In Appendix G, we demonstrate that SimCSE provides more distinguishable cosine similarities between different sentence pairs.

Qualitative comparison. We conduct a small-scale retrieval experiment using SBERTbase and SimCSE-BERTbase. We use 150k captions from Flickr30k dataset and take any random sentence as

resentation with MLP only during training works query to retrieve similar sentences (based on cosine

the best; for supervised SimCSE, different pooling similarity). As several examples shown in Table 8,

methods do not matter much. By default, we take the retrieved sentences by SimCSE have a higher

[CLS]with MLP (train) for unsupervised SimCSE quality compared to those retrieved by SBERT.

and [CLS]with MLP for supervised SimCSE.

8 Related Work

Hard negatives. Intuitively, it may be beneficial

to differentiate hard negatives (contradiction exam- Early work in sentence embeddings builds upon the

ples) from other in-batch negatives. Therefore, we distributional hypothesis by predicting surrounding

extend our training objective defined in Eq. 5 to sentences of a given one (Kiros et al., 2015; HillSBERTbase Supervised SimCSE-BERTbase

Query: A man riding a small boat in a harbor.

#1 A group of men traveling over the ocean in a small boat. A man on a moored blue and white boat.

#2 Two men sit on the bow of a colorful boat. A man is riding in a boat on the water.

#3 A man wearing a life jacket is in a small boat on a lake. A man in a blue boat on the water.

Query: A dog runs on the green grass near a wooden fence.

#1 A dog runs on the green grass near a grove of trees. The dog by the fence is running on the grass.

#2 A brown and white dog runs through the green grass. Dog running through grass in fenced area.

#3 The dogs run in the green field. A dog runs on the green grass near a grove of trees.

Table 8: Retrieved top-3 examples by SBERT and supervised SimCSE from Flickr30k (150k sentences).

et al., 2016; Logeswaran and Lee, 2018). Pagliardini et al. (2018) show that simply augmenting the idea of word2vec (Mikolov et al., 2013) with n-gram embeddings leads to strong results. Several recent (and concurrent) approaches adopt contrastive objectives (Zhang et al., 2020; Giorgi et al., 2021; Wu et al., 2020; Meng et al., 2021; Carlsson et al., 2021; Kim et al., 2021; Yan et al., 2021) by taking different views (from data augmentation or different copies of models) of the same sentence or document. Compared to these works, SimCSE uses the simplest idea by taking different outputs of the same sentence from standard dropout, and performs the best on STS tasks.

[Figure 3: scatter plot with ℓ_uniform on the x-axis ("Uniformity") and ℓ_align on the y-axis ("Alignment"). Plotted models, with average STS in parentheses: Avg. BERT (56.7), Next3Sent (63.1), BERT-whitening (66.3), BERT-flow (66.6), SBERT (74.9), Unsup. SimCSE (76.3), SBERT-flow (76.6), SBERT-whitening (77.0), SimCSE (81.6).]

Figure 3: ℓ_align-ℓ_uniform plot of models based on BERT_base. Color of points and numbers in brackets represent average STS performance (Spearman’s correlation). Next3Sent: “next 3 sentences” from Table 2.

Supervised sentence embeddings are promised to have stronger performance compared to unsupervised counterparts. Conneau et al. (2017) propose to fine-tune a Siamese model on NLI datasets, which is further extended to other encoders or pre-trained models (Cer et al., 2018; Reimers and Gurevych, 2019). Furthermore, Wieting and Gimpel (2018); Wieting et al. (2020) demonstrate that bilingual and back-translation corpora provide useful supervision for learning semantic similarity. Another line of work focuses on regularizing embeddings (Li et al., 2020; Su et al., 2021; Huang et al., 2021) to alleviate the representation degeneration problem (as discussed in §5), and yields substantial improvement over pre-trained language models.

9 Conclusion

In this work, we propose SimCSE, a simple contrastive learning framework, which greatly improves state-of-the-art sentence embeddings on semantic textual similarity tasks. We present an unsupervised approach which predicts the input sentence itself with dropout noise and a supervised approach utilizing NLI datasets. We further justify the inner workings of our approach by analyzing alignment and uniformity of SimCSE along with other baseline models. We believe that our contrastive objective, especially the unsupervised one, may have a broader application in NLP. It provides a new perspective on data augmentation with text input, and can be extended to other continuous representations and integrated in language model pre-training.

Acknowledgements

We thank Tao Lei, Jason Lee, Zhengyan Zhang, Jinhyuk Lee, Alexander Wettig, Zexuan Zhong, and the members of the Princeton NLP group for helpful discussion and valuable feedback. This research is supported by a Graduate Fellowship at Princeton University and a gift award from Apple.

References

Eneko Agirre, Carmen Banea, Claire Cardie, Daniel Cer, Mona Diab, Aitor Gonzalez-Agirre, Weiwei Guo, Iñigo Lopez-Gazpio, Montse Maritxalar, Rada Mihalcea, German Rigau, Larraitz Uria, and Janyce Wiebe. 2015. SemEval-2015 task 2: Semantic textual similarity, English, Spanish and pilot on interpretability. In Proceedings of the 9th International Workshop on Semantic Evaluation (SemEval 2015), pages 252–263.

Eneko Agirre, Carmen Banea, Claire Cardie, Daniel Cer, Mona Diab, Aitor Gonzalez-Agirre, Weiwei Guo, Rada Mihalcea, German Rigau, and Janyce Wiebe. 2014. SemEval-2014 task 10: Multilingual semantic textual similarity. In Proceedings of the 8th International Workshop on Semantic Evaluation (SemEval 2014), pages 81–91.

Eneko Agirre, Carmen Banea, Daniel Cer, Mona Diab, Aitor Gonzalez-Agirre, Rada Mihalcea, German Rigau, and Janyce Wiebe. 2016. SemEval-2016 task 1: Semantic textual similarity, monolingual and cross-lingual evaluation. In Proceedings of the 10th International Workshop on Semantic Evaluation (SemEval-2016), pages 497–511. Association for Computational Linguistics.

Eneko Agirre, Daniel Cer, Mona Diab, and Aitor Gonzalez-Agirre. 2012. SemEval-2012 task 6: A pilot on semantic textual similarity. In *SEM 2012: The First Joint Conference on Lexical and Computational Semantics – Volume 1: Proceedings of the main conference and the shared task, and Volume 2: Proceedings of the Sixth International Workshop on Semantic Evaluation (SemEval 2012), pages 385–393.

Eneko Agirre, Daniel Cer, Mona Diab, Aitor Gonzalez-Agirre, and Weiwei Guo. 2013. *SEM 2013 shared task: Semantic textual similarity. In Second Joint Conference on Lexical and Computational Semantics (*SEM), Volume 1: Proceedings of the Main Conference and the Shared Task: Semantic Textual Similarity, pages 32–43.

Sanjeev Arora, Yingyu Liang, and Tengyu Ma. 2017. A simple but tough-to-beat baseline for sentence embeddings. In International Conference on Learning Representations (ICLR).

Samuel R. Bowman, Gabor Angeli, Christopher Potts, and Christopher D. Manning. 2015. A large annotated corpus for learning natural language inference. In Empirical Methods in Natural Language Processing (EMNLP), pages 632–642.

Fredrik Carlsson, Amaru Cuba Gyllensten, Evangelia Gogoulou, Erik Ylipää Hellqvist, and Magnus Sahlgren. 2021. Semantic re-tuning with contrastive tension. In International Conference on Learning Representations (ICLR).

Daniel Cer, Mona Diab, Eneko Agirre, Iñigo Lopez-Gazpio, and Lucia Specia. 2017. SemEval-2017 task 1: Semantic textual similarity multilingual and crosslingual focused evaluation. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), pages 1–14.

Daniel Cer, Yinfei Yang, Sheng-yi Kong, Nan Hua, Nicole Limtiaco, Rhomni St. John, Noah Constant, Mario Guajardo-Cespedes, Steve Yuan, Chris Tar, Brian Strope, and Ray Kurzweil. 2018. Universal sentence encoder for English. In Empirical Methods in Natural Language Processing (EMNLP): System Demonstrations, pages 169–174.

Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey Hinton. 2020. A simple framework for contrastive learning of visual representations. In International Conference on Machine Learning (ICML), pages 1597–1607.

Ting Chen, Yizhou Sun, Yue Shi, and Liangjie Hong. 2017. On sampling strategies for neural network-based collaborative filtering. In ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 767–776.

Alexis Conneau and Douwe Kiela. 2018. SentEval: An evaluation toolkit for universal sentence representations. In International Conference on Language Resources and Evaluation (LREC).

Alexis Conneau, Douwe Kiela, Holger Schwenk, Loïc Barrault, and Antoine Bordes. 2017. Supervised learning of universal sentence representations from natural language inference data. In Empirical Methods in Natural Language Processing (EMNLP), pages 670–680.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of deep bidirectional transformers for language understanding. In North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), pages 4171–4186.

William B. Dolan and Chris Brockett. 2005. Automatically constructing a corpus of sentential paraphrases. In Proceedings of the Third International Workshop on Paraphrasing (IWP2005).

Alexey Dosovitskiy, Jost Tobias Springenberg, Martin Riedmiller, and Thomas Brox. 2014. Discriminative unsupervised feature learning with convolutional neural networks. In Advances in Neural Information Processing Systems (NIPS), volume 27.

Kawin Ethayarajh. 2019. How contextual are contextualized word representations? Comparing the geometry of BERT, ELMo, and GPT-2 embeddings. In Empirical Methods in Natural Language Processing and International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pages 55–65.

Jun Gao, Di He, Xu Tan, Tao Qin, Liwei Wang, and Tieyan Liu. 2019. Representation degeneration problem in training natural language generation models. In International Conference on Learning Representations (ICLR).

Dan Gillick, Sayali Kulkarni, Larry Lansing, Alessandro Presta, Jason Baldridge, Eugene Ie, and Diego Garcia-Olano. 2019. Learning dense representations for entity retrieval. In Computational Natural Language Learning (CoNLL), pages 528–537.

John Giorgi, Osvald Nitski, Bo Wang, and Gary Bader. 2021. DeCLUTR: Deep contrastive learning for unsupervised textual representations. In Association for Computational Linguistics and International Joint Conference on Natural Language Processing (ACL-IJCNLP), pages 879–895.

Raia Hadsell, Sumit Chopra, and Yann LeCun. 2006. Dimensionality reduction by learning an invariant mapping. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), volume 2, pages 1735–1742. IEEE.

Matthew Henderson, Rami Al-Rfou, Brian Strope, Yun-Hsuan Sung, László Lukács, Ruiqi Guo, Sanjiv Kumar, Balint Miklos, and Ray Kurzweil. 2017. Efficient natural language response suggestion for smart reply. arXiv preprint arXiv:1705.00652.

Felix Hill, Kyunghyun Cho, and Anna Korhonen. 2016. Learning distributed representations of sentences from unlabelled data. In North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), pages 1367–1377.

Minqing Hu and Bing Liu. 2004. Mining and summarizing customer reviews. In ACM SIGKDD international conference on Knowledge discovery and data mining.

Junjie Huang, Duyu Tang, Wanjun Zhong, Shuai Lu, Linjun Shou, Ming Gong, Daxin Jiang, and Nan Duan. 2021. WhiteningBERT: An easy unsupervised sentence embedding approach. arXiv preprint arXiv:2104.01767.

Vladimir Karpukhin, Barlas Oguz, Sewon Min, Patrick Lewis, Ledell Wu, Sergey Edunov, Danqi Chen, and Wen-tau Yih. 2020. Dense passage retrieval for open-domain question answering. In Empirical Methods in Natural Language Processing (EMNLP), pages 6769–6781.

Taeuk Kim, Kang Min Yoo, and Sang-goo Lee. 2021. Self-guided contrastive learning for BERT sentence representations. In Association for Computational Linguistics and International Joint Conference on Natural Language Processing (ACL-IJCNLP), pages 2528–2540.

Ryan Kiros, Yukun Zhu, Ruslan Salakhutdinov, Richard S Zemel, Antonio Torralba, Raquel Urtasun, and Sanja Fidler. 2015. Skip-thought vectors. In Advances in Neural Information Processing Systems (NIPS), pages 3294–3302.

Bohan Li, Hao Zhou, Junxian He, Mingxuan Wang, Yiming Yang, and Lei Li. 2020. On the sentence embeddings from pre-trained language models. In Empirical Methods in Natural Language Processing (EMNLP), pages 9119–9130.

Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. 2019. RoBERTa: A robustly optimized BERT pretraining approach. arXiv preprint arXiv:1907.11692.

Lajanugen Logeswaran and Honglak Lee. 2018. An efficient framework for learning sentence representations. In International Conference on Learning Representations (ICLR).

Edward Ma. 2019. NLP augmentation. https://github.com/makcedward/nlpaug.

Marco Marelli, Stefano Menini, Marco Baroni, Luisa Bentivogli, Raffaella Bernardi, and Roberto Zamparelli. 2014. A SICK cure for the evaluation of compositional distributional semantic models. In International Conference on Language Resources and Evaluation (LREC), pages 216–223.

Yu Meng, Chenyan Xiong, Payal Bajaj, Saurabh Tiwary, Paul Bennett, Jiawei Han, and Xia Song. 2021. COCO-LM: Correcting and contrasting text sequences for language model pretraining. arXiv preprint arXiv:2102.08473.

Jorma Kaarlo Merikoski. 1984. On the trace and the sum of elements of a matrix. Linear Algebra and its Applications, 60:177–185.

Tomas Mikolov, Ilya Sutskever, Kai Chen, G. Corrado, and J. Dean. 2013. Distributed representations of words and phrases and their compositionality. In Advances in Neural Information Processing Systems (NIPS).

Jiaqi Mu and Pramod Viswanath. 2018. All-but-the-top: Simple and effective postprocessing for word representations. In International Conference on Learning Representations (ICLR).

Yixin Nie, Adina Williams, Emily Dinan, Mohit Bansal, Jason Weston, and Douwe Kiela. 2020. Adversarial NLI: A new benchmark for natural language understanding. In Association for Computational Linguistics (ACL), pages 4885–4901.

Matteo Pagliardini, Prakhar Gupta, and Martin Jaggi. 2018. Unsupervised learning of sentence embeddings using compositional n-gram features. In North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), pages 528–540.

Bo Pang and Lillian Lee. 2004. A sentimental education: Sentiment analysis using subjectivity summarization based on minimum cuts. In Association for Computational Linguistics (ACL), pages 271–278.

Bo Pang and Lillian Lee. 2005. Seeing stars: Exploiting class relationships for sentiment categorization with respect to rating scales. In Association for Computational Linguistics (ACL), pages 115–124.

Jeffrey Pennington, Richard Socher, and Christopher Manning. 2014. GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 1532–1543.

Nils Reimers, Philip Beyer, and Iryna Gurevych. 2016. Task-oriented intrinsic evaluation of semantic textual similarity. In International Conference on Computational Linguistics (COLING), pages 87–96.

Nils Reimers and Iryna Gurevych. 2019. Sentence-BERT: Sentence embeddings using Siamese BERT-networks. In Empirical Methods in Natural Language Processing and International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pages 3982–3992.

Richard Socher, Alex Perelygin, Jean Wu, Jason Chuang, Christopher D. Manning, Andrew Ng, and Christopher Potts. 2013. Recursive deep models for semantic compositionality over a sentiment treebank. In Empirical Methods in Natural Language Processing (EMNLP), pages 1631–1642.

Nitish Srivastava, Geoffrey Hinton, Alex Krizhevsky, Ilya Sutskever, and Ruslan Salakhutdinov. 2014. Dropout: a simple way to prevent neural networks from overfitting. The Journal of Machine Learning Research (JMLR), 15(1):1929–1958.

Jianlin Su, Jiarun Cao, Weijie Liu, and Yangyiwen Ou. 2021. Whitening sentence representations for better semantics and faster retrieval. arXiv preprint arXiv:2103.15316.

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. In Advances in Neural Information Processing Systems (NIPS), pages 6000–6010.

Ellen M Voorhees and Dawn M Tice. 2000. Building a question answering test collection. In the 23rd annual international ACM SIGIR conference on Research and development in information retrieval, pages 200–207.

Lingxiao Wang, Jing Huang, Kevin Huang, Ziniu Hu, Guangtao Wang, and Quanquan Gu. 2020. Improving neural language generation with spectrum control. In International Conference on Learning Representations (ICLR).

Tongzhou Wang and Phillip Isola. 2020. Understanding contrastive representation learning through alignment and uniformity on the hypersphere. In International Conference on Machine Learning (ICML), pages 9929–9939.

Janyce Wiebe, Theresa Wilson, and Claire Cardie. 2005. Annotating expressions of opinions and emotions in language. Language resources and evaluation, 39(2-3):165–210.

John Wieting and Kevin Gimpel. 2018. ParaNMT-50M: Pushing the limits of paraphrastic sentence embeddings with millions of machine translations. In Association for Computational Linguistics (ACL), pages 451–462.

John Wieting, Graham Neubig, and Taylor Berg-Kirkpatrick. 2020. A bilingual generative transformer for semantic sentence embedding. In Empirical Methods in Natural Language Processing (EMNLP), pages 1581–1594.

Adina Williams, Nikita Nangia, and Samuel Bowman. 2018. A broad-coverage challenge corpus for sentence understanding through inference. In North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), pages 1112–1122.

Thomas Wolf, Lysandre Debut, Victor Sanh, Julien Chaumond, Clement Delangue, Anthony Moi, Pierric Cistac, Tim Rault, Remi Louf, Morgan Funtowicz, Joe Davison, Sam Shleifer, Patrick von Platen, Clara Ma, Yacine Jernite, Julien Plu, Canwen Xu, Teven Le Scao, Sylvain Gugger, Mariama Drame, Quentin Lhoest, and Alexander Rush. 2020. Transformers: State-of-the-art natural language processing. In Empirical Methods in Natural Language Processing (EMNLP): System Demonstrations, pages 38–45.

Zhuofeng Wu, Sinong Wang, Jiatao Gu, Madian Khabsa, Fei Sun, and Hao Ma. 2020. CLEAR: Contrastive learning for sentence representation. arXiv preprint arXiv:2012.15466.

Yuanmeng Yan, Rumei Li, Sirui Wang, Fuzheng Zhang, Wei Wu, and Weiran Xu. 2021. ConSERT: A contrastive framework for self-supervised sentence representation transfer. In Association for Computational Linguistics and International Joint Conference on Natural Language Processing (ACL-IJCNLP), pages 5065–5075.

Peter Young, Alice Lai, Micah Hodosh, and Julia Hockenmaier. 2014. From image descriptions to visual denotations: New similarity metrics for semantic inference over event descriptions. Transactions of the Association for Computational Linguistics, 2:67–78.

Yan Zhang, Ruidan He, Zuozhu Liu, Kwan Hui Lim, and Lidong Bing. 2020. An unsupervised sentence embedding method by mutual information maximization. In Empirical Methods in Natural Language Processing (EMNLP), pages 1601–1610.

A Training Details

We implement SimCSE with the transformers package (Wolf et al., 2020). For supervised SimCSE, we train our models for 3 epochs, evaluate the model every 250 training steps on the development set of STS-B and keep the best checkpoint for the final evaluation on test sets. We do the same for the unsupervised SimCSE, except that we train the model for one epoch. We carry out grid-search of batch size ∈ {64, 128, 256, 512} and learning rate ∈ {1e-5, 3e-5, 5e-5} on the STS-B development set and adopt the hyperparameter settings in Table A.1. We find that SimCSE is not sensitive to batch sizes as long as the learning rate is tuned accordingly, which contradicts the finding that contrastive learning requires large batch sizes (Chen et al., 2020). This is probably because all SimCSE models start from pre-trained checkpoints, which already provide a good set of initial parameters.

                 Unsupervised                       Supervised
                 BERT            RoBERTa
                 base    large   base    large      base    large
Batch size       64      64      512     512        512     512
Learning rate    3e-5    1e-5    1e-5    3e-5       5e-5    1e-5

Table A.1: Batch sizes and learning rates for SimCSE.

For both unsupervised and supervised SimCSE, we take the [CLS] representation with an MLP layer on top of it as the sentence representation. Specifically, for unsupervised SimCSE, we discard the MLP layer and only use the [CLS] output at test time, since we find that it leads to better performance (ablation study in §6.3).

Finally, we introduce one more optional variant which adds a masked language modeling (MLM) objective (Devlin et al., 2019) as an auxiliary loss to Eq. 1: $\ell + \lambda \cdot \ell^{\mathrm{mlm}}$ (λ is a hyperparameter). This helps SimCSE avoid catastrophic forgetting of token-level knowledge. As we will show in Table D.2, we find that adding this term can help improve performance on transfer tasks (not on sentence-level STS tasks).

B Different Settings for STS Evaluation

We elaborate the differences in STS evaluation settings in previous work in terms of (a) whether to use additional regressors; (b) reported metrics; (c) different ways to aggregate results.

Paper                          Reg.   Metric     Aggr.
Hill et al. (2016)             no     Both       all
Conneau et al. (2017)          yes    Pearson    mean
Conneau and Kiela (2018)       yes    Pearson    mean
Reimers and Gurevych (2019)    no     Spearman   all
Zhang et al. (2020)            no     Spearman   all
Li et al. (2020)               no     Spearman   wmean
Su et al. (2021)               no     Spearman   wmean
Wieting et al. (2020)          no     Pearson    mean
Giorgi et al. (2021)           no     Spearman   mean
Ours                           no     Spearman   all

Table B.1: STS evaluation protocols used in different papers. “Reg.”: whether an additional regressor is used; “Aggr.”: methods to aggregate different subset results.

Additional regressors. The default SentEval implementation applies a linear regressor on top of frozen sentence embeddings for STS-B and SICK-R, and trains the regressor on the training sets of the two tasks, while most sentence representation papers take the raw embeddings and evaluate in an unsupervised way. In our experiments, we do not apply any additional regressors and directly take cosine similarities for all STS tasks.

Metrics. Both Pearson’s and Spearman’s correlation coefficients are used in the literature. Reimers et al. (2016) argue that Spearman correlation, which measures the rankings instead of the actual scores, better suits the need of evaluating sentence embeddings. For all of our experiments, we report Spearman’s rank correlation.

Aggregation methods. Given that each year’s STS challenge contains several subsets, there are different choices to gather results from them: one way is to concatenate all the topics and report the overall Spearman’s correlation (denoted as “all”), and the other is to calculate results for different subsets separately and average them (denoted as “mean” if it is a simple average or “wmean” if weighted by the subset sizes). However, most papers do not state which method they use, making a fair comparison challenging. We take some of the most recent work: SBERT (Reimers and Gurevych, 2019), BERT-flow (Li et al., 2020) and BERT-whitening (Su et al., 2021)[12] as an example: In Table B.2, we compare our reproduced results to reported results of SBERT and BERT-whitening, and find that Reimers and Gurevych (2019) take the “all” setting but Li et al. (2020); Su et al. (2021) take the “wmean” setting, even though Li et al. (2020) claim that they take the same setting as Reimers

[12] Li et al. (2020) and Su et al. (2021) have consistent results, so we assume that they take the same evaluation and just take BERT-whitening in experiments here.
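The three aggregation settings named in Appendix B ("all", "mean", "wmean") can give noticeably different numbers on the same predictions. The following is a minimal sketch of that difference, not the authors' evaluation code: the function names (`spearman`, `aggregate`) and the toy scores are hypothetical, and the pure-Python Spearman routine is a stand-in for a library implementation. It assumes neither input is constant, since the correlation is undefined in that case.

```python
from typing import Dict, List, Sequence, Tuple


def _ranks(values: Sequence[float]) -> List[float]:
    """Return 1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    rx, ry = _ranks(x), _ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


def aggregate(
    subsets: Dict[str, Tuple[Sequence[float], Sequence[float]]]
) -> Dict[str, float]:
    """Aggregate per-subset (predicted, gold) scores in the three ways
    described in Appendix B."""
    per_subset = {name: spearman(p, g) for name, (p, g) in subsets.items()}
    sizes = {name: len(g) for name, (_, g) in subsets.items()}
    all_pred = [v for p, _ in subsets.values() for v in p]
    all_gold = [v for _, g in subsets.values() for v in g]
    return {
        # "all": concatenate every subset, then compute one correlation.
        "all": spearman(all_pred, all_gold),
        # "mean": simple average of the per-subset correlations.
        "mean": sum(per_subset.values()) / len(per_subset),
        # "wmean": average weighted by the number of pairs in each subset.
        "wmean": sum(per_subset[n] * sizes[n] for n in per_subset)
        / sum(sizes.values()),
    }


if __name__ == "__main__":
    # Toy (cosine similarity, gold score) lists for two made-up subsets.
    toy = {
        "subset_a": ([0.1, 0.4, 0.9], [1.0, 2.0, 5.0]),
        "subset_b": ([0.8, 0.2, 0.5, 0.6], [0.0, 1.0, 2.0, 3.0]),
    }
    for setting, value in aggregate(toy).items():
        print(f"{setting}: {value:.4f}")
```

Because "all" ranks pairs across subsets while "mean" and "wmean" rank within each subset, numbers reported under different settings are not directly comparable, which is the concern Appendix B raises.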