Samanantar: The Largest Publicly Available

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Samanantar: The Largest Publicly Available

Parallel Corpora Collection for 11 Indic Languages

Gowtham Ramesh1∗ Sumanth Doddapaneni1∗ Aravinth Bheemaraj2,5

Mayank Jobanputra3 Raghavan AK4 Ajitesh Sharma2,5 Sujit Sahoo2,5

Harshita Diddee4 Mahalakshmi J4 Divyanshu Kakwani3,4 Navneet Kumar2,5

Aswin Pradeep2,5 Kumar Deepak2,5 Vivek Raghavan5 Anoop Kunchukuttan4,6

Pratyush Kumar1,3,4 Mitesh Shantadevi† Khapra1,3,4‡

1

Robert Bosch Center for Data Science and Artificial Intelligence,

2

Tarento Technologies, 3 Indian Institute of Technology, Madras

4

AI4Bharat, 5 EkStep Foundation, 6 Microsoft

Abstract 1 Introduction

arXiv:2104.05596v2 [cs.CL] 29 Apr 2021

We present Samanantar, the largest publicly Deep Learning (DL) has revolutionized the field

available parallel corpora collection for Indic of Natural Language Processing, establishing new

languages. The collection contains a total of state of the art results on a wide variety of

46.9 million sentence pairs between English NLU (Wang et al., 2018, 2019) and NLG tasks

and 11 Indic languages (from two language (Gehrmann et al., 2021). Across languages and

families). In particular, we compile 12.4 mil-

tasks, a proven recipe for high performance is

lion sentence pairs from existing, publicly-

available parallel corpora, and we addition-

to pretrain and/or finetune large models on mas-

ally mine 34.6 million sentence pairs from the sive amounts of data. Particularly, significant

web, resulting in a 2.8× increase in publicly progress has been made in machine translation due

available sentence pairs. We mine the par- to encoder-decoder based models (Bahdanau et al.,

allel sentences from the web by combining 2015; Wu et al., 2016; Sennrich et al., 2016b,a;

many corpora, tools, and methods. In particu- Vaswani et al., 2017). While this has been favorable

lar, we use (a) web-crawled monolingual cor- for resource-rich languages, there has been limited

pora, (b) document OCR for extracting sen-

benefit for resource-poor languages which lack par-

tences from scanned documents (c) multilin-

gual representation models for aligning sen- allel corpora, monolingual corpora and evaluation

tences, and (d) approximate nearest neighbor benchmarks (Koehn and Knowles, 2017). One ef-

search for searching in a large collection of fort to close this gap is training multilingual models

sentences. Human evaluation of samples from with the hope that performance on resource-poor

the newly mined corpora validate the high languages improves from supervision on resource-

quality of the parallel sentences across 11 lan- rich languages (Firat et al., 2016; Johnson et al.,

guage pairs. Further, we extracted 82.7 mil-

2017b). Such transfer learning works best when

lion sentence pairs between all 55 Indic lan-

guage pairs from the English-centric parallel

the resource-rich languages are related to the low-

corpus using English as the pivot language. resource languages (Nguyen and Chiang, 2017;

We trained multilingual NMT models span- Dabre et al., 2017) and it is difficult to achieve high

ning all these languages on Samanantar and quality translation with limited in-language data

compared with other baselines and previously (Guzmán et al., 2019). The situation is particularly

reported results on publicly available bench- dire when an entire group of related languages is

marks. Our models outperform existing mod- low-resource making transfer-learning infeasible.

els on these benchmarks, establishing the util-

ity of Samanantar. Our data and models will This disparity across languages is exemplified by

be available publicly1 and we hope they will the limited progress made in translation involving

help advance research in Indic NMT and mul- Indic languages. Given the very large collective

tilingual NLP for Indic languages. speaker base of over 1 billion speakers, the pref-

∗

erence for Indic languages and increasing digital

* The first two authors have contributed equally.

†

† Dedicated to the loving memory of my grandmother.

penetration, a good translation system is a necessity

‡

‡ Corresponding author: miteshk@cse.iitm.ac.in to provide equitable access to information and con-

1

https://indicnlp.ai4bharat.org/samanantar tent. For example, educational videos for primary,secondary and higher education should be available ·107

1

in different Indic languages. Similarly, various gov-

Newly Mined

ernment advisories, policy announcements, high

Existing Sources

court judgments, etc. should be disseminated in all 0.8

major regional languages. Despite this fundamen-

tal need, the accuracy of machine translation (MT) 0.6

systems to and from Indic languages are poorer

compared to those for several European languages

(Bojar et al., 2014; Barrault et al., 2019, 2020). The 0.4

primary reason for this is the lack of large-scale

parallel data between Indic languages and English. 0.2

Consequently, Indic languages have a poor repre-

sentation in WMT shared tasks on translation and

0

allied problems (post-editing, MT evaluation, etc.), hi bn ml ta te kn mr gu pa or as

further affecting attention by researchers. Thus, de-

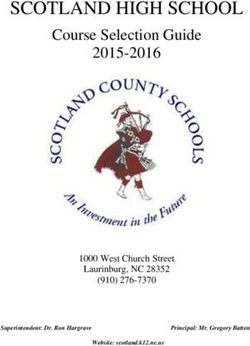

Figure 1: Total number of En-X parallel sentences in

spite the huge practical need, Indic MT has signifi-

Samanantar for different Indic languages that are com-

cantly lagged while other resource-rich languages piled from existing sources and newly mined. With

have made rapid advances with deep learning. the newly mined data, the number of parallel sentences

What does it take to improve MT on the large across all En-X pairs increases by a ratio of 2.8×.

set of related low-resource Indic languages? The

answer is straightforward: create large parallel

datasets and then train proven DL models. How- sentence aligner, and (c) IndicCorp (Kakwani et al.,

ever, collecting new data with manual translations 2020), the largest corpus of monolingual data for

at the scale necessary to train large DL models Indic languages which requires approximate near-

would be slow and expensive. Instead, several est neighbour search using FAISS followed by a

recent works have proposed mining parallel sen- more accurate alignment using LaBSE. In sum-

tences from the web (Schwenk et al., 2019a, 2020; mary, we propose a series of pipelines to collect

El-Kishky et al., 2020). The representation of parallel data from publicly available data sources.

Indic languages in these works is however poor Combining existing datasets and the new

(e.g., CCMatrix contains parallel data for only 2 datasets that we collect from different sources, we

Indic languages). In this work, we aim to signifi- present Samanantar3 - the largest publicly avail-

cantly increase the amount of parallel data on Indic able parallel corpora collection for Indic languages.

languages by combining the benefits of many re- Samanantar contains ∼ 46.9M parallel sentences

cent contributions: large Indic monolingual corpora between English and 11 Indic languages, rang-

(Kakwani et al., 2020; Ortiz Suarez et al., 2019), ing from 142K pairs between English-Assamese

accurate multilingual representation learning (Feng to 8.6M pairs between English-Hindi. Of these

et al., 2020; Artetxe and Schwenk, 2019), scal- 34.6M pairs are newly mined as a part of this work

able approximate nearest neighbor search (Johnson whereas 12.4M are compiled from existing sources.

et al., 2017a; Subramanya et al., 2019; Guo et al., The language-wise statistics are shown in Figure 1.

2020), and open-source tools for optical charac- In addition, we mine 82.7M parallel sentences be-

ter recognition of Indic scripts in rich text doc- tween the 11 2 Indic language pairs using English

uments2 . By combining these methods, we pro- as the pivot. To evaluate the quality of the mined

pose different pipelines to collect parallel data from sentences we collect human judgments from 38 an-

three different types of sources : (a) scanned par- notators for a total of about 10,000 sentence pairs

allel documents which require Optical Character across the 11 language pairs. The results show that

Recognition followed by sentence alignment us- the parallel sentences mined from the corpus are

ing a multilingual representation model, such as of high quality and validate the adopted thresholds

LaBSE (Feng et al., 2020), (b) news websites with for alignment using LaBSE representations. The

multilingual content which require a crawler, an results also show the potential for further improv-

article aligner based on date ranges followed by a ing LaBSE-based alignment, especially for low-

2 3

https://anuvaad.org Samanantar in Sanskrit means semantically similarresource languages and for longer sentences. This chine readable format. Examples of such sources

parallel data along with the human judgments will include some news websites which publish articles

be made publicly available as a benchmark on in multiple languages. Next, we consider sources

cross-lingual semantic similarity. which contain (almost) parallel documents which

To evaluate if Samanantar advances the state are not in machine readable format. Examples of

of the art for Indic NMT, we train a model us- such sources include PDF documents such as In-

ing Samanantar and compare it with existing mod- dian parliamentary proceedings. The text in these

els. We make a practical choice of training a joint documents is not always machine readable as it

model which can leverage lexical and syntactic may have been encoded using legacy proprietary

similarities between Indic languages. We com- encodings (not UTF-8). Lastly, we consider Indic-

pare our joint model, called IndicTrans, trained Corp which is the largest collection of monolingual

on Samanantar with (a) commercial translation sentences for 11 Indic languages mined from the

systems (Google, Microsoft), (b) publicly available web. These sentences are collected from monolin-

translation systems (OPUS-MT (Tiedemann and gual documents in multiple languages but may still

Thottingal, 2020), mBART50 (Tang et al., 2020), contain parallel sentences as the content is India-

CVIT-ILMulti (Philip et al., 2020), and (c) mod- centric. The pipelines required for mining each

els trained on all existing sources of parallel data of these sources may have some common compo-

between Indic languages. Across 44 publicly avail- nents (e.g., sentence pair scorer) and some unique

able test sets spanning 10 Indic languages, we ob- components (e.g., OCR for non-machine readable

serve that IndicTrans performs better than all exist- documents, annoy index4 for web scale monolin-

ing models on 37 datasets. On several benchmarks, gual documents, etc.). We describe these pipelines

IndicTrans trained on Samanantar outperforms in detail in the following subsections.

all existing models by a significant margin, estab-

2.1 Existing sources

lishing the utility of our corpus.

The three main contributions of this work viz. We first briefly describe the existing sources of

(i) Samanantar, the largest collection of parallel parallel sentences for Indic languages which are

corpora for Indic languages, (ii) IndicTrans, a joint enumerated in Table 1. The Indic NLP Catalog6

model for translating from En-Indic and Indic-En, helped identify many of these sources. Recently,

and (iii) human judgments on cross-lingual textual the WAT 2021 shared task also compiled many

similarity for about 10,000 sentence pairs will be existing Indic language parallel corpora.

made publicly available. The following sentence aligned corpora were

available from OPUS (Tiedemann, 2012). We

2 Samanantar: A Parallel Corpus for downloaded the latest available versions of these

Indic Languages corpora on 21 March 2021:

ELRC_29227 : Parallel text between English and

In this section, we describe Samanantar, the largest 5 Indic languages collected from Wikipedia on

publicly available parallel corpora collection for health and COVID-19 domain.

Indic languages. It contains parallel sentences be- GNOME8 , KDE49 , Ubuntu10 : Parallel text

tween English and 11 Indic languages, viz., As- between English and 11 Indic languages from the

samese (as), Bengali (bn), Gujarati (gu), Hindi (hi), localization files of GNOME, KDE4 and Ubuntu.

Kannada (kn), Malayalam (ml), Marathi (mr), Odia Global Voices11 : Parallel text between English

(or), Punjabi (pa), Tamil (ta) and Telugu (te). In ad- and 4 Indic languages extracted from news

dition, it also contains parallel sentences between articles published on Global Voices which is an

the 55 Indic language pairs obtained by pivoting international, multilingual community of writers,

through English (en). To build this corpus, we first translators, academics, and digital rights activists.

collated all existing public sources of parallel data 4

https://github.com/spotify/annoy

for Indic languages that have been released over https://github.com/facebookresearch/faiss

6

the years, as described in Section 2.1. We then https://github.com/AI4Bharat/indicnlp_catalog

7

expand this corpus further by mining parallel sen- https://elrc-share.eu

8

https://l10n.gnome.org

tences from three types of sources from the web. 9

https://l10n.kde.org

First, we consider sources which contain (almost) 10

https://translations.launchpad.net

11

parallel or comparable documents available in ma- https://globalvoices.orgen-as en-bn en-gu en-hi en-kn en-ml en-mr en-or en-pa en-ta en-te Total

JW300 46 269 305 510 316 371 289 - 374 718 203 3400

banglanmt - 2380 - - - - - - - - - 2380

iitb - - - 1603 - - - - - - - 1603

cvit-pib - 92 58 267 - 43 114 94 101 116 45 930

5

wikimatrix - 281 - 231 - 72 124 - - 95 92 895

OpenSubtitles - 372 - 81 - 357 - - - 28 23 862

Tanzil - 185 - 185 - 185 - - - 92 - 647

KDE4 6 35 31 85 13 39 12 8 78 79 14 402

PMIndia V1 7 23 42 50 29 27 29 32 28 33 33 333

GNOME 29 40 38 30 24 23 26 21 33 31 37 332

bible-uedin - - 16 62 61 61 60 - - - 62 321

Ubuntu 21 28 27 25 22 22 26 20 29 25 24 269

ufal - - - - - - - - - 167 - 167

sipc - 21 - 38 - 30 - - - 35 43 166

GlobalVoices - 138 - 2 - - - 326 1 - - 142

TED2020Wiki-Matrix (Schwenk et al., 2019a): This tions of English Wikipedia documents into 5 Indic

corpus contains parallel text from Wikimedia. languages covering a diverse set of topics.

We download this corpus from OPUS which is TICO19 (Anastasopoulos et al., 2020): Parallel

filtered with a LASER (Artetxe and Schwenk, text between English and 9 Indic languages

2019) margin score of 1.04 for all language pairs containing CoViD-19 related information from

as recommended in the original Wiki-Matrix paper. Pubmed, Wikipedia, Wikinews, etc. The dataset

It has parallel text between English and 6 Indic provides CoViD-19 terminologies and benchmark

languages. (dev and test sets) to train and benchmark transla-

tion systems.

The following datasets are collected from UFAL (Ramasamy et al., 2012): Parallel text

sources which are not included in OPUS: between English and Tamil collected from news,

ALT (Riza et al., 2016): Parallel text between cinema and Bible websites.

English and 2 Indic languages created by manually URST (Shah and Bakrola, 2019): Parallel text

translating sentences from English Wikinews. obtained by translating English sentences from the

BanglaNMT (Hasan et al., 2020): Parallel text MSCOCO captioning dataset (Lin et al., 2015) to

between English and Bengali created by collating Gujarati.

and mining data from various English-Bengali WMT-2019-wiki23 , WMT-2019-govin24 : Paral-

parallel and comparable sources. lel text between English and Gujarati provided as

CVIT-PIB (Philip et al., 2020): Parallel text training data for the WMT-2019 Gujarati–English

between English and 9 Indic languages extracted news translation shared task.

by aligning and mining parallel sentences from

press releases of the Press Information Bureau17 of As shown in Table 1, these sources25 collated

India. together result in a total of 12.4M parallel sentences

IITB (Kunchukuttan et al., 2018): Parallel text (after removing duplicates) between English and 11

between English and Hindi mined from various Indic languages. It is interesting to note that there

English-Hindi parallel sources. is no publicly available system which has been

MTEnglish2Odia18 : Parallel text between trained using parallel data from all these existing

English and Odia created by collating various sources.

sources like Wikipedia, TDIL19 , Global Voices,

etc. 2.2 Mining parallel sentences from machine

NLPC20 (Fernando et al., 2021): Parallel text readable comparable corpora

between English and Tamil extracted from We identified 12 news websites which publish ar-

publicly available government resources such as ticles in multiple Indic languages. For a given

annual reports, procurement reports, circulars website, the articles across languages are not nec-

and websites by National Languages Processing essarily translations of each other. However, con-

Center, University of Moratuwa. tent within a given date range is often similar as

OdiEnCorp 2.0 (Parida et al., 2020): Parallel the sources are India-centric with a focus on local

text between English and Odia mined from events, personalities, advisories, etc. For example,

Wikipedia, online websites and non-machine news about guidelines for CoViD-19 vaccination

readable documents. get published in multiple Indic languages. Even

PMIndia V1 (Haddow and Kirefu, 2020): Parallel if such a news article in Hindi is not a sentence-

text between English and 11 Indic languages by-sentence translation, it may contain some sen-

collected by crawling and extracting the PMIndia tences which are accidentally or intentionally par-

website21 . 23

http://data.statmt.org/wmt19/translation-

SIPC22 (Post et al., 2012): Crowdsourced transla- task/wikipedia.gu-en.tsv.gz

24

17 http://data.statmt.org/wmt19/translation-

https://pib.gov.in task/govinraw.gu-en.tsv.gz

18

https://soumendrak.github.io/MTEnglish2Odia 25

We have not included CCMatrix (Schwenk et al., 2020)

19

http://tdil-dc.in/index.php and CCAligned (El-Kishky et al., 2020) in the current version

20

https://github.com/nlpcuom/English-Tamil-Parallel- of Samanantar. CCMatrix is not publicly available at the

Corpus time of writing. Some initial models trained with CCAligned

21

https://www. pmindia.gov.in/en/news-updates showed performance degradation on some benchmarks, and

22

https://github.com/joshua-decoder/indian-parallel- we will include it in a subsequent version if further analysis

corpora and cleanup shows it is beneficial for training MT models.en-as en-bn en-gu en-hi en-kn en-ml en-mr en-or en-pa en-ta en-te Total

IndicParCorp 55 4885 2424 4846 3507 4590 2600 835 1819 3403 4119 33081

Wikipedia 4 331 50 222 89 102 24 - 70 162 84 1138

PIB - 74 74 402 51 28 74 - 205 105 66 1078

PIB_archives 6 27 29 289 21 13 29 - 31 23 16 484

Nouns_dictionary - - - 72 54 66 57 - 54 63 64 430

Prothomalo - 284 - - - - - - - - - 284

Drivespark - - - 40 57 50 - - - 66 68 280

General_corpus - 224 - - - - - - - - - 224

Oneindia - 5 12 91 14 10 - - - 38 32 203

NPTEL - 24 21 73 8 5 15 - - 18 22 187

OCR - 14 - - - - - - - 169 2 185

Nativeplanet - - - 32 32 27 - - - 25 41 156

Mykhel - 24 - 16 30 27 - - - 35 21 153

Newsonair - - - 111 - - - - - - - 111

DW - 23 - 56 - - - - - - - 79

Timesofindia - - 3 31 - - 25 - - - - 59

Indianexpress - - - 41 - 13 - - - - - 55

Goodreturns - - - 13 8 11 - - - 7 9 47

Catchnews - - - 36 - - - - - - - 36

DD_National - - - 33 - - - - - - - 33

Khan_academy - 4 2 6allel to sentences from a corresponding English a sentence break is not inserted when we encounter

article. Hence, we consider such news websites to common Indian titles such as Shri. (equivalent to

be a good source of parallel sentences. Mr. in English) which are followed by a period.

We also identified two sources from educa- Parallel Sentence Extraction. At the end of the

tion domain - NPTEL26 and Khan Academy27 above step we have sentence tokenised articles in

which provide educational videos (also available English and a target language (say, Hindi). Fur-

on youtube) with parallel human translated subti- ther, all these websites contain metadata based on

tles in different languages including English and which we can cluster the articles according to the

Indic languages. month in which they were published (say, January

We use the following steps to extract parallel 2021). We assume that to find a match for a given

sentences from the above sources: Hindi sentence we only need to consider all En-

Article Extraction. For every news website, we glish sentences which belong to articles published

build custom extractors using BeautifulSoup28 or in the same month as the article containing the

Selenium29 . BeautifulSoup is a Python library for Hindi sentence. This is a reasonable assumption

parsing HTML/XML documents and is suitable for as content of news articles is temporal in nature.

websites where the content is largely static. How- Let S = {s1 , s2 , . . . , sm } be the set of all sen-

ever, many websites have dynamic content which tences across all English articles in a particular

gets loaded from a data source (a database or file) month. Similarly, let T = {t1 , t2 , . . . , tn } be the

and requires additional action events to be triggered set of all sentences across all Hindi articles in that

by the user. This requires the extractor to interact same month. Let f (s, t) be a scoring function

with the browser and perform repetitive tasks such which assigns a score indicating how likely it is

as scrolling down, clicking, etc. Selenium allows that s ∈ S, t ∈ T form a translation pair. For a

automation of such web browser interactions and is given Hindi sentence ti ∈ T , the matching English

used for scraping content from such dynamic sites. sentence can be found as:

For NPTEL, we programmatically gather all the

youtube video links of courses mentioned on the s∗ = arg max f (s, ti )

s∈S

NPTEL translation page30 . We then collect Indic

and English subtitles for every video using youtube- We chose f to be the cosine similarity function

dl31 . For Khan Academy, we use youtube-dl to on vectorial embeddings of s and t. We compute

search the entire channel for videos containing sub- these embeddings using LaBSE (Feng et al., 2020)

titles for English and any of the 11 Indic languages which is a multilingual embedding model that en-

and download them. We skip the auto-generated codes text from different languages into a shared

youtube captions to ensure that we only get high embedding space. LaBSE is trained on 17 billion

quality translations. We collect subtitles for all monolingual sentences and 6 billion bilingual sen-

available courses/videos on March 7th, 2021 tence pairs using the Masked Language Model-

Tokenisation. Once the main content of the arti- ing (Devlin et al., 2019) and Translation Language

cle is extracted in the above step, we split it into Modeling objectives (Conneau and Lample, 2019).

sentences and tokenize the sentences. We used The authors have shown that it produces state of the

the tokenisers available in the Indic NLP Library32 art results on multiple parallel text retrieval tasks

(Kunchukuttan, 2020) and added some more heuris- and is effective even for low-resource languages.

tics to account for Indic punctuation characters and Hereon, we refer to the cosine similarity between

sentence delimiters. For example, we ensured that the LaBSE embeddings of s, t as the LaBSE Align-

26

ment Score (LAS).

https://nptel.ac.in

27

https://www.khanacademy.org Post Processing. Using the above described pro-

28

https://www.crummy.com/software/BeautifulSoup cess, we find the top matching English sentence,

29

https://pypi.org/project/selenium s∗ , for every Hindi sentence, ti . We now apply

30

https://nptel.ac.in/Translation a threshold and select only those pairs for which

31

https://github.com/tpikonen/youtube-dl: We use a fork

of youtube-dl that lets us download multiple subtitles per the cosine similarity is greater than a threshold t.

language if available. This was necessary for some NPTEL Across different sources we found 0.75 to be a good

youtube videos which had one erroneous and one correct threshold. We refer to this as the LAS threshold.

subtitle file for english. We heuristically remove the incorrect

one after download. Next, we remove duplicates in the data. We con-

32

https://github.com/anoopkunchukuttan/indic_nlp_library sider two pairs (si , ti ) and (sj , tj ) to be duplicate ifsi = sj and ti = tj . We also remove any sentence Language Number of sentences

pair where the English sentence was less than 4 as 2.38

words. Lastly, we use a language identifier33 and bn 77.7

eliminate pairs where the language identified for si en 100.6

or ti does not match the intended language. gu 46.6

hi 77.3

2.3 Mining parallel sentences from kn 56.5

non-machine readable comparable ml 67.9

corpora mr 41.6

While web sources are machine readable, there are or 10.1

official documents that are generated which are pa 35.3

not always machine readable. This includes pro- ta 47.8

ceedings of the legislative assembly of different te 60.5

states in India that are published in English as well

as the local language of the state. For example, Table 4: Number of sentences (in millions) in the mono-

lingual corpora from IndicCorp for English and 11 In-

in the state of Tamil Nadu, the proceedings get dic languages. IndicCorp is the largest available such

published in English as well as Tamil. These doc- corpus and contributes the largest fraction of parallel

uments are often translated sentence-by-sentence sentences (IndicParCorp) to Samanantar.

by human translators and thus the translation pairs

are of high quality. In this work, we considered

3 such sources: (a) documents from Tamil Nadu

of a page which may overflow on to the next

government34 which get published in English and

page. Since each page is independently passed to

Tamil, (b) speeches from Bangladesh Parliament35

Google’s Vision API, we ensure that an incomplete

and West Bengal Legislative Assembly36 which get

sentence at the bottom of one page is merged with

published in English and Bengali, and (c) speeches

an incomplete sentence at the top of the next page.

from Andhra Pradesh37 and Telangana Legislative

Parallel Sentence Extraction. Unlike the

Assemblies38 which get published in English and

previous section, here we have the exact

Telugu. The documents available on these sources

information about which documents are

are public and have a regular URL format. Most of

parallel. This information is typically en-

these documents either contained scanned images

coded in the URL of the document itself (e.g.,

of the original document or contain proprietary en-

https://www.tn.gov.in/en/budget2020.pdf and

codings (non-UTF8) due to legacy issues. As a

https://www.tn.gov.in/ta/budget2020.pdf). Hence,

result, standard PDF parsers cannot be used to ex-

for a given Tamil sentence, ti we only need to

tract text from them. We use the following pipeline

consider the sentences S = {s1 , s2 , . . . , sm }

for extracting parallel sentences from such sources.

which appear in the corresponding English article.

Optical Character Recognition (OCR). We used The search space is thus much smaller than that

Google’s Vision API which supports OCR in in the previous section, where we considered S to

English as well as all the 11 Indic languages that be a collection of all sentences from all articles

we consider in this work. Specifically, we pass published in the same month. Once this set S

each document through Google’s OCR service has been identified, for a give ti , we identify the

which returns the text contained in the document. matching sentence, s∗ , using LAS as described in

Tokenisation. Once the text is extracted from the the previous subsection.

PDFs, we use the same tokenisation process as Post-Processing. We use the same post-processing

described in the previous section. Here, we apply as described in the previous subsection, viz., (a)

extra heuristics to handle sentences at the bottom filtering based on a threshold on LAS, (b) removing

33

https://github.com/aboSamoor/polyglot duplicates, (c) filtering short sentences, and (d)

34

https://www.tn.gov.in/documents/deptname filtering sentence pairs where either the source of

35

http://www.parliament.gov.bd target text is not in the desired language.

36

http://www.wbassembly.gov.in

37

https://www.aplegislature.org,

https://www.apfinance.gov.in

38 38

https://finance.telangana.gov.in margin threshold of 1.042.4 Mining parallel sentences from web scale

monolingual corpora

Recent works have shown that it is possible to

mine parallel sentences from web scale monolin-

gual corpora. For example, both Schwenk et al.

(2019b) and Feng et al. (2020) align sentences in

large monolingual corpora (e.g., CommonCrawl)

by computing the similarity between them in a

shared multilingual embedding space. In this work,

we consider IndicCorp, the largest collection of

monolingual corpora for Indic languages. Table 4

shows the number of sentences in version 2.0 of

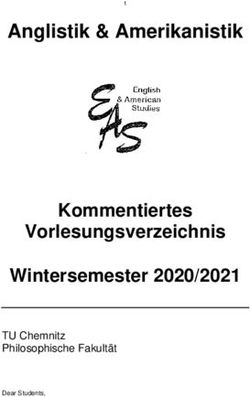

Figure 2: Histogram of the cosine similarity between

IndicCorp for each of the languages that we consid- the product quantised representations in FAISS of sen-

ered. It should be obvious that a brute force search tence pairs known to be parallel. The wide distribution

where every sentence in the source language is com- implies that applying a threshold on the approximated

pared to every sentence in the target language to similarity would not be effective.

find the best matching sentence is infeasible. How-

ever, unlike the previous two subsections where we

could restrict the set of sentences, S, to include so that computing the inner product is the same as

only those sentences which were published in a computing the cosine similarity. FAISS first finds

given month or belonged to a known parallel docu- the top-p clusters by computing the distance be-

ment, there is no easy way of restricting the search tween each of the cluster centroids and the given

space for IndicCorp. In particular, IndicCorp only Hindi sentence. We set the value of p to 1024.

contains a list of sentences with no meta-data about Within each of these clusters, FAISS then searches

the month or article to which each sentence belongs. for the nearest neighbors. This retrieval is highly

The only option then is to iterate over all target sen- optimized to scale. In our implementation, on av-

tences to find a match. To do this efficiently, we erage we were able to process 1100 sentences per

use FAISS39 (Johnson et al., 2017a) which does second, i.e., we were able to retrieve the nearest

efficient indexing, clustering, semantic matching, neighbors for 1,100 sentences per second when

and retrieval of dense vectors as explained below. mining from the index of the entire IndicCorp.

Indexing. We first compute the sentence embed-

Recomputing cosine similarity. Notice that

ding using LaBSE for all English sentences in In-

FAISS computes cosine similarity on the quantized

dicCorp. Note that IndicCorp is a sentence level

vector, in our case of dimension 64. For a given

corpus (one sentence per line) so we do not need

pair of sentences, how do these approximate sim-

any pre-processing or sentence splitting to extract

ilarity scores compare with the cosine similarity

sentences. Once the embeddings are computed,

on the LaBSE embeddings (LAS)? We found that

we create a FAISS index where these embeddings

while the relative ranking produced by FAISS is

are stored in 100k clusters. Since LaBSE embed-

good, the similarity scores on the quantized vectors

dings are very high dimensional, we use the Prod-

vary widely. In other words, while FAISS identi-

uct Quantisation of FAISS to reduce the amount of

fies sentence pairs which are likely to have large

space required to store these embeddings. In par-

LAS, the similarity scores on the quantized vec-

ticular, each 786 dimensional LaBSE embedding

tors vary widely. To study this better, we collected

is quantized into a m dimensional vector (m = 64)

100 gold standard en-hi sentence pairs and com-

where each dimension is represented using an 8-bit

puted the similarity score on the quantized vectors

integer value.

- the scores that would be used by FAISS. The his-

Retrieval. For every Indic sentence (say, Hindi sen-

togram of these scores are shown in in Figure 2. We

tence) we first compute the LaBSE embedding and

found that the scores vary widely from 0.42 to 0.78

then query the FAISS index for its nearest neighbor

and hence it is difficult to choose an appropriate

based on inner product. Note that we normalise

threshold on the similarity of the quantized vector.

both the English index and the Hindi embeddings

However, the relative ranking provided by FAISS

39

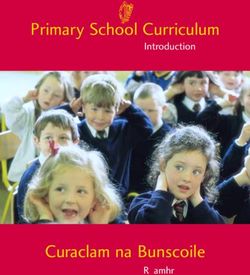

https://github.com/facebookresearch/faiss is still good, i.e., for all the 100 query Hindi sen-tences that we analysed FAISS retrieved the correct ·107

1

matching English sentence from an index of 100.6

Monolingual Corpus - IndicCorp

M sentences at the top-1 position. Based on this ob-

Non Machine Readable Sources

servation, we follow a two-step approach: First, we 0.8

Machine Readable Sources

retrieve the top-1 matching sentence from FAISS

using the quantized vector. Then, we compute the 0.6

LAS between the full LaBSE embeddings of the

retreived sentence pair. On this computed LAS,

we apply a LAS threshold of 0.80 (slightly higher 0.4

than that described in the previous subsection) for

filtering. This modified FAISS mining, combining 0.2

quantized vectors for efficient searching and full

embeddings from LaBSE for accurate thresholding,

0 as bn gu hi kn ml mr or pa ta te

was an essential innovation to mine a large number

of parallel sentences.

Figure 3: Total number of newly mined En-X par-

Post-processing. We follow the same post- allel sentences in Samanantar from different sources.

processing steps as described in Section 2.2. Across languages, mining from IndicCorp is the domi-

nant source accounting for 85% of all newly identified

We used the same process as described above to parallel sentences.

extract parallel sentences from Wikipedia. More

specifically, we treated Wikipedia as a collection

of monolingual sentences in different languages. then we extract (thi , tta ) as a Hindi-Tamil parallel

We then created a FAISS index of all sentences sentence pair. Further, we use a very strict de-

from English Wikipedia. Next, for every source duplication criterion to avoid the creation of very

sentence from the corresponding Indic language similar parallel sentences. For example, if an en

Wikipedia (say, Hindi Wikipedia) we retrieved the sentence is aligned to m hi sentences and n ta sen-

nearest neighbor from this index. We followed the tences, then we would get mn hi-ta pairs. However,

exact same steps as above with the only difference these pairs would be very similar and not contribute

that the sentences from IndicCorp were replaced much to the training process. Hence, we retain only

by sentences from Wikipedia. We found that we 1 randomly chosen pair out of these mn pairs.

were able to mine more parallel sentences using

this approach as opposed to aligning bilingual arti- 2.6 Statistics of Samanantar

cles using Wikipedia’s interlanguage links and then

mining parallel sentences only from these aligned Table 2 summarizes the number of parallel sen-

articles. tences obtained from each of these sources between

English and the 11 Indic languages that we con-

sidered. Overall, we mined 34.6M parallel sen-

2.5 Mining Corpora between Indic

tences in addition to the 12.4M parallel sentences

languages.

already available between English and Indic lan-

So far, we have discussed mining parallel corpora guages. We thus contribute 2.8× more data over

between English and Indic languages. To sup- existing sources. Figure 3 shows the relative con-

port translation between Indic languages, we also tribution of different sources in mining new paral-

need direct parallel corpus between these languages lel sentences. The dominant source is IndicCorp

since zero-shot translation is insufficient to address contributing over 85% of the sentence pairs. How-

translation between non-English languages (Ari- ever, we do note that there is the possibility of care-

vazhagan et al., 2019a). Following Freitag and fully collating more high quality machine-readable

Firat (2020) and Rios et al. (2020), we use English and non-machine-readable sources. Table 3 sum-

as a pivot to mine parallel sentences between In- marises the number of parallel sentences that we

dic languages from all the English-centric corpora mined between the 11 2 = 55 Indic language pairs.

described earlier in this section. For example, let We mined 82.7M parallel sentences resulting in a

(sen , thi ) and (ŝen , tta ) be mined parallel sentences 5.29× increase in publicly available sentence pairs

between en-hi and en-ta respective. If sen = ŝen between these languages.3 Analysis of the Quality of the Mined There, the STS of two given sentences is character-

Parallel Corpus ized as an ordinal scale of six levels ranging from

complete semantic equivalence (5) to complete se-

In Samanantar, parallel sentences have been mined mantic dissimilarity (0). We follow the same guide-

using a series of pipelines at different content scales lines in defining six ordinal levels as exemplified

with multiple tools and models such as LaBSE, in Table 1 of Agirre et al. (2016). These guidelines

FAISS, and Anuvaad. Further, the parallel sen- were explained to 38 annotators across 11 Indic

tences have been mined for different languages languages with a minimum of 3 annotators per lan-

from different sources. It is thus important to char- guage. Each of the annotators was a native speaker

acterize the quality of these mined sentences across in the language assigned to them and was also flu-

pipelines, languages, and sources. One way to do ent in English. The annotators have experience

so is to quantify the improvement in performance ranging from 1-20 years in working on language

of NMT models when trained with the additional and related tasks, with a mean of 5 years. The

mined parallel sentences, which we discuss in the annotation task was performed on Google forms

next section. However, to inform further research in the following manner: Each form consisted of

and development, it also valuable to intrinsically 30 parallel sentences coming from an annotation

and manually evaluate the cross-lingual Semantic batch as defined earlier. Annotators were shown

Textual Similarity (STS) of pairs of sentences. In one pair of sentences at a time and were asked to

this section, we describe the task and results of score it in the range of 0 to 5. The SemEval-2016

such an evaluation for the English-Indic parallel guidelines for each ordinal value were visible to the

sentences in Samanantar. annotators at all times. After annotating 30 parallel

3.1 Annotation Task and Setup sentences, the annotators submitted the form and

then resumed again with a new form. The anno-

We sampled 10,069 sentence pairs (English and tators were compensated for the work at the rate

Indic sentences) across 11 Indic languages and of Rs 100 to Rs 150 (1.38 to 2.06 USD) per 100

sources. To understand the sensitivity of the STS words read.

scores assigned by the annotators with the align-

ment quality as estimated with LAS, we sampled 3.2 Annotation Results and Discussion

sentences equally in three sets: The results of the annotation of semantic textual

similarity (STS) of over 9,500 sentence pairs with

• sentences which were definitely accepted over 30,000 annotations are shown language-wise

(LAS greater than 0.1 of the chosen thresh- in Table 5. We make the following key observations

old), from the data.

• sentences which were marginally accepted Sentence pairs included in Samanantar have

(LAS greater than but within 0.1 of the chosen high semantic similarity. Overall, the mined

threshold), and parallel sentences (the ‘All accept’ column) have a

mean STS score of 4.17 and a median STS score of

• sentences which were rejected (LAS lower 5. On a scale of 0 to 5, where 5 represents perfect

than but within 0.1 of the chosen threshold). semantic similarity, a mean score of 4.17 indicates

that the annotators rate the parallel sentences to

Thus, for every source and language pair, we per-

be of high quality. Furthermore, the chosen LAS

form a stratified sampling with equal number of

thresholds sensitively filter out sentence pairs - the

sentences in each of the above three sets. After

definitely accept sentence pairs have a high aver-

all sentences are sampled, we randomly shuffled

age STS score of 4.53, which reduces to 3.8 with

the language-wise sentence pairs such that there is

marginally accept, and significantly falls to 2.9 with

no ordering preserved across sources or LAS. We

the reject sets.

then divided the language-wise sentence pairs into

annotation batches of 30 parallel sentences each. Mean STS scores depend on the resource-size of

For defining the annotation scores, we base our corresponding Indic language. The mean STS

work on the SemEval-2016 Task 1 (Agirre et al., scores are a function of the resource-size of the

2016), wherein semantic textual similarity was corresponding Indic language, at least at the ex-

studied for mono-lingual and cross-lingual tasks. treme ends. The two languages with the smallest re-Annotation data Semantic Textual Similarity score Spearman correlation coefficient

Language

STS,

# Bitext # Anno- All Definitely Marginally LAS, LAS,

Reject Sentence

pairs tations accept accept accept STS Sentence len

len

Assamese 689 1,973 3.48 3.83 3.06 2.14 0.25 -0.39 0.15

Bengali 957 3,814 4.53 4.82 4.23 3.51 0.41 -0.42 -0.14

Gujarati 779 2,333 3.94 4.41 3.46 2.56 0.44 -0.30 -0.07

Hindi 1,277 4,679 4.38 4.75 3.99 3.03 0.44 -0.18 -0.12

Kannada 957 2,839 4.08 4.51 3.66 2.62 0.37 -0.38 -0.08

Malayalam 917 2,781 3.94 4.40 3.49 2.30 0.36 -0.33 0.02

Marathi 779 2,324 4.14 4.56 3.66 2.76 0.39 -0.37 -0.01

Odia 500 1,497 3.97 4.07 3.86 4.18 0.08 -0.41 -0.02

Punjabi 689 2,265 4.16 4.58 3.71 2.27 0.34 -0.25 0.13

Tamil 1,044 3,123 4.11 4.48 3.74 2.42 0.36 -0.40 -0.17

Telugu 951 2,968 4.51 4.76 4.25 3.60 0.32 -0.40 -0.08

Overall 9,570 30,596 4.17 4.53 3.8 2.9 0.33 -0.35 -0.03

Table 5: Results of the annotation task to evaluate the semantic similarity between sentence pairs across 11 lan-

guages. Human judgments confirm that the mined sentences (All accept) have a high semantic similarity and with

a moderately high correlation between the human judgments and LAS.

source sizes (As, Or) have the the lowest mean STS across languages, the sentence length is computed

scores, while the two languages with the highest for the English sentences in each pair. We find that

resource sizes (Hi, Bn) are in the top-3 mean STS sentence length is negatively correlated with LAS

scores. This indicates that the mining of parallel with a Spearman correlation coefficient of -0.35,

sentences with multilingual representation models while sentence length is almost uncorrelated with

such as LaBSE is more accurate for resource-rich STS with a Separaman correlation coefficient of

languages. -0.03. In other words, sentence pairs with longer

sentences are unlikely to have high alignment on

LAS and STS are moderately correlated across LaBSE representations and thus be included in

languages. The Spearman correlation coefficient Samanantar. This is also evidenced by the average

between LAS and STS is a moderately positive sentence length in the three sets of definitely accept,

value of 0.33. This suggests that sentence pairs marginally accept, and reject of 11.36, 13.4, 12.9,

which have a higher LAS are likely to be rated respectively. However, this preference for shorter

to be semantically similar by annotators. How- sentences seems to be an accident of the LaBSE

ever, the correlation coefficient is also not very representation rather than reflecting semantic simi-

high (say > 0.5) indicating potential for further larity as shown by lack of any correlation between

improvement in learning multilingual representa- sentence length and STS.

tions with LaBSE-like models. Further, for the low-

resource languages such as Assamese and Odia the

correlation values are lower, indicating potential In summary, the annotation task established that

for improvement in alignment. the parallel sentences in Samanantar are of high

quality and the chosen thresholds are validated.

LAS is negatively correlated with sentence The task also established that LaBSE-based align-

length, while STS is not. In the above two points ment should be further improved for low-resource

we have highlighted the potential for improving the languages (such as, As, Or) and for longer sen-

LaBSE model in aligning sentences. We identified tences. We will release this parallel dataset and

one specific opportunity to do this by analyzing the human judgments on the over 9,500 sentence pairs

correlation between sentence length and LAS, and as a dataset for evaluating cross-lingual semantic

sentence length and STS score. To be consistent similarity between English and Indic languages.4 IndicTrans: Multingual, single Indic pre-processing done on the data are Unicode nor-

script models malization and tokenization. When the target lan-

guage is Indic, the output in Devanagari script is

The languages in the Indian subcontinent exhibit converted back to the corresponding Indic script.

many lexical and syntactic similarities on account All the text processing is done using the Indic NLP

of genetic and contact relatedness (Abbi, 2012; library.

Subbārāo, 2012). Genetic relatedness manifests

in the two major language groups considered in Training Data For all models, we use all the

this work: the Indo-Aryan branch of the Indo- available parallel data between English and Indic

European family and the Dravidian family. Owing languages. We then remove any overlaps with any

to the long history of contact between these lan- test or validation data that we use. We use a very

guage groups, the Indian subcontinent is a linguis- strict criteria for identifying such overlaps. In par-

tic area (Emeneau, 1956) exhibiting convergence ticular, while finding overlaps, we remove all punc-

of many linguistic properties between languages tuation characters and lower case all strings in the

of these groups. Hence, we explore multilingual training and validation/test data. We then remove

models spanning all these Indic languages to en- any translation pair, (en, t), from the training data

able transfer from high resource languages to low if (i) the English sentence en appears in the vali-

resource languages on account of genetic related- dation/test data of any En-X language pair or (ii)

ness (Nguyen and Chiang, 2017) or contact relat- the Indic sentence t appears in the validation/test

edness (Goyal et al., 2020). More specifically, we data of the corresponding En-X language pair. Note

explored 2 types of multilingual models for trans- that, since we train a joint model it is important to

lation involving Indic languages: (i) One to Many ensure that no en sentence in the test/validation

for English to Indic language translation (O2M: data appears in any of the En-X training sets. In

11 pairs) (ii) Many to One for Indic language to particular, if there is en sentence in the En-Hi vali-

English translation (M2O: 11 pairs). dation/test data then any pair containing this data

should not be in any of the En-X training sets. We

Data Representation The first major design do not use any data sampling while training and

choice we made was to represent all the Indic leave the exploration of these strategies for future

language data in a single script. The scripts for work (Arivazhagan et al., 2019b).

these languages are all derived from the ancient

Brahmi script. Though each of these scripts have Validation Data For both the models, we used

their own Unicode codepoint range, it is possible all the validation data from the benchmarks de-

to get a 1-1 mapping between characters in these scribed in Section 5.1.

different scripts since the Unicode standard takes

Vocabulary We use a subword vocabulary learnt

into account the similarities between these scripts.

using subword-nmt (Sennrich et al., 2016b) for

Hence, we convert all the Indic data to the Devana-

building our models. For both the models, we learn

gari script (we could have chosen any of the other

separate vocabularies for the English and Indic lan-

scripts as the common script, except Tamil). This

guages from the English-centric training data using

allows better lexical sharing between languages

32k BPE merge operations each.

for transfer learning, prevents fragmentation of the

subword vocabulary between Indic languages and Network & Training We use transformer-based

allows using a smaller subword vocabulary. models (Vaswani et al., 2017) for training our NMT

The first token of the source sentence is a special models. Table 6 shows the model configuration

token indicating the source language (Tan et al., details.

2019; Tang et al., 2020). The model can make The models were trained using fairseq (Ott et al.,

a decision on the transfer learning between these 2019) on 8 V-100 GPUs. We optimized the cross

languages based on both the source language tag entropy loss using the Adam optimizer with a

and the similarity of representations. When mul- label-smoothing of 0.1 and gradient clipping of

tiple target languages are involved, we follow the 1.0. We use mixed precision training with Nvidia

standard approach of using a special token in the Apex40 . We use an initial learning rate of 5e-4,

input sequence to indicate the target language to

40

generate (Johnson et al., 2017b). Other standard https://github.com/NVIDIA/apexAttribute Value tions of news articles.

Encoder layers 6 UFAL EnTam: This benchmark is part of the

Decoder layers 6 UFAL EnTam corpus (Ramasamy et al., 2012). The

Embedding size 1536 dataset contains parallel sentences in English-Tamil

Number of heads 16 with sentences from the Bible, cinema and news

Feed-forward dim 4096 domain.

Model parameters (in million) 400 PMI: We create this benchmark from PMIndia

corpus(Haddow and Kirefu, 2020) to test English-

Table 6: Model configuration for the IndicTrans Model Assamese systems. The dataset consists of 1000

validation and 2000 test samples and we ensure

that the dataset does not have very short sentences

4000 warmup steps and the same learning rate an- (80 words).

nealing schedule as proposed in (Vaswani et al.,

2017). We use a global batch size of 64k tokens. 5.2 Evaluation Metrics

We train each model on 8 V100 GPUs and use early We use BLEU scores for the evaluation of the

stopping with the patience set to 5 epochs. models. To ensure consistency and reproducibility

Decoding We use beam search with a beam size across the models, we provide SacreBLEU sig-

of 5 and length penalty set to 1. natures in the footnote for Indic-English41 and

English-Indic42 evaluations. For Indic-English, we

5 Experimental Setup use the the in-built, default mteval-v13a tokenizer.

For En-Indic, we first tokenize using the IndicNLP

We evaluate the usefulness of the parallel data re- tokenizer before running sacreBleu. The evaluation

leased as a part of this work, by comparing the script will be made available for reproducibility.

performance of a translation system trained using

this data with existing state of the art models on a 5.3 Models

wide variety of benchmarks. Below, we describe We compare the performance of the following mod-

the models and benchmarks used for this compari- els:

son. Commercial MT systems. We use the translation

APIs provided by Google Cloud Platform (v2) and

5.1 Benchmarks

Microsoft Azure Cognitive Services (v3) to trans-

We use the following publicly available bench- late all the sentences in the test set of the bench-

marks for evaluating all the models: marks described above.

Publicly available MT systems. We consider the

WAT2020 (Nakazawa et al., 2020): This bench- following publicly available NMT systems:

mark is part of the “Indic tasks” track of WAT2020. OPUS-MT 43 : As a part of their ongoing work

The dev and test sets are a subset of the Mann on NLP for morphologically rich languages, the

Ki Baat test set (Siripragada et al., 2020) which Helsinki-NLP group has released translation mod-

consists of Indian Prime Minister’s speeches els for bn-en, hi-en, ml-en, mr-en, pa-en, en-hi,

translated to 8 Indic languages. en-ml and en-mr. These models were trained us-

ing all parallel sources available from OPUS as

WAT2021: This benchmark is part of the Multi- described in section 2.1. We refer the readers to the

IndicMT track of WAT2021. The dataset is sourced URL mentioned in the footnote for further details

from the PMIndia corpus (Haddow and Kirefu, about the training data. For now, it suffices to know

2020). It is a multi-parallel test set for 11 Indic that these models were trained using lesser amount

languages containing sentences from the news do- of data as compared to the total data released in

main. this work.

WMT test sets: Dev and test sets from the News mBART5044 : This is a multilingial many-to-many

track of WMT 2014 English-Hindi shared task model which can translate between any pair of 50

(Bojar et al., 2014), WMT 2019 English-Gujarati 41

BLEU+1+smooth.exp+tok.13a+version.1.5.1

shared task (Barrault et al., 2019) and WMT 2020 42

BLEU+case.mixed+numrefs.1+smooth.exp+tok.none+version.1.5.1

English-Tamil shared task (Barrault et al., 2020). 43

https://huggingface.co/Helsinki-NLP

44

All these benchmarks consists of human transla- https://huggingface.co/transformers/model_doc/mbart.htmlPMI UFAL EnTam WAT2020 WAT2021 WMT News

en-as 1000 / 2000 - - - -

en-bn - - 2000 / 3522 1000 / 2390 -

en-gu - - 2000 / 4463 1000 / 2390 - / 1016

en-hi - - 2000 / 3169 1000 / 2390 520 / 2506

en-kn - - - 1000 / 2390 -

en-ml - - 2000 / 2886 1000 / 2390 -

en-mr - - 2000 / 3760 1000 / 2390 -

en-or - - - 1000 / 2390 -

en-pa - - - 1000 / 2390 -

en-ta - 1000 / 2000 2000 / 1000 1000 / 2390 1989 / 1000

en-te - - 2000 / 3049 1000 / 2390 -

Table 7: List of all available benchmarks with sizes of validation and test sets across languages.

Commercial MT Systems Public MT Systems Trained on existing data Trained on Samanantar

Google Microsoft CVIT OPUS-MT mBART50 Transformer mT5 IndicTrans

bn 20.6 21.8 - 11.4 4.7 24.2 24.8 28.4

gu 32.9 34.5 - - 6. 33.1 34.6 39.5

hi 36.7 38. - 13.3 33.1 38.8 39.2 43.2

kn 24.6 23.4 - - - 23.5 27.8 34.9

ml 27.2 27.4 - 5.7 19.1 26.3 26.8 33.4

WAT2021

mr 26.1 27.7 - .4 11.7 26.7 27.6 32.4

or 23.7 27.4 - - - 23.7 - 33.4

pa 35.9 35.9 - 8.6 - 36. 37.1 42.

ta 23.5 24.8 - - 26.8 28.4 26.8 32.

te 25.9 25.4 - - 4.3 26.8 28.5 35.1

bn 17. 17.2 18.1 9. 6.2 16.3 16.4 19.2

gu 21. 22. 23.4 - 3. 16.6 18.9 23.0

hi 22.6 21.3 23. 8.6 19. 21.7 21.5 23.5

WAT2020 ml 17.3 16.5 18.9 5.8 13.5 14.4 15.4 19.6

mr 18.1 18.6 19.5 .5 9.2 15.3 16.8 19.6

ta 14.6 15.4 17.1 - 16.1 15.3 14.9 17.9

te 15.6 15.1 13.7 - 5.1 12.1 14.2 17.8

hi 31.3 30.1 24.6 13.1 25.7 25.3 26. 29.4

WMT gu 30.4 29.9 24.2 - 5.6 16.8 21.9 23.4

ta 27.5 27.4 17.1 - 20.7 16.6 17.5 24.3

UFAL ta 25.1 25.5 19.9 - 24.7 26.3 25.6 30.1

pmi as - 16.7 - - - 7.4 - 28.7

Table 8: BLEU scores for translation from Indian languages to English acorss different available benchmarks.

Excepting for the WMT benchmark, IndicTrans trained on Samanantar outperforms all other models (including

commercial MT systems).

languages. In particular, it supports the following data between multiple language pairs. We refer

language pairs which are relevant for our work: en- the readers to the original paper for details of the

bn, en-gu, en-hi, en-ml, en-mr, en-ta, en-te and the monolingual pre-training data and the bilingual

reverse directions. This model is first pre-trained on fine-tuning data (Tang et al., 2020). Once again,

large amounts of monolingual data from all the 50 we note that these models use much lesser bilingual

languages and then jointly fine-tuned using parallel data in Indic languages as compared to the amountof data released in this work. 6 Results and Discussion

Models trained on all existing parallel data. To

The results of our experiments on Indic-En and

evaluate the usefulness of the parallel sentences in

En-Indic tranlslation are reported in Tables 8 and

Samanantar, we train a few well studied models

9 respectively. Similarly, Figures 4a and 4b give a

using all parallel data available prior to this work.

quick comparison of the performance of different

Transformer: We train one transformer model each models averaged over all the benchmarks that we

for every en-Indic language pair and one for every used per language. In particular, it compares (i) cur-

Indic-en language pair (22 models in all). Each rent best existing MT systems for every language

model contains 6 encoder layers, 6 decoder layers pair (this system could be different for different

and 8 attention heads per layer. The input embed- language pairs), (ii) current best model trained on

dings are of size 512, the output of each attention all existing data, and (iii) IndicTrans trained on

head is of size 64 and the feedforward layer in the Samanantar. Below, we list down the main obser-

transformer block has 2048 neurons. The overall vations from our experiments.

architecture is the same as the TransformerBASE Compilation of existing resources was a fruitful

model described in (Vaswani et al., 2017). We use exercise. From Figures 4a and 4b we observe that

byte pair encoding (BPE) with a vocabulary size current state-of-the-art models trained on all exist-

of ≈32K for every language. We use Adam as the ing parallel data (curated as a subset of Samanan-

optimizer, an initial learning rate of 5e-4 and the tar) perform competitively with commercial MT

same learning rate annealing schedule as proposed systems. In particular, in 11 of the 22 bars (11

in (Vaswani et al., 2017). We train each model languages in either direction) shown in the two fig-

on 8 V100 GPUs and use early stopping with the ures, models trained on existing data outperform

patience set to 5 epochs. commercial MT systems.

mT5: We use Multilingual T5 (mT5) which is a IndicTrans trained on Samanantar leads to state

massively multilingual pretrained text-to-text trans- of the art performance. Again, referring to Fig-

former model for NLG (Xue et al., 2021). This ures 4a and 4b, we observed that IndicTrans trained

model supports 9 out of the 11 Indic languages on Samanantar outperforms all existing models for

that we consider in the work. However, it is not all the languages in both the directions (except,

a translation model but pre-trained using monolin- Gujarati-English). The absolute gain in BLEU

gual data in multiple languages for predicting a score is higher for the Indic-En direction as com-

corrupted/dropped span in the input sequence. We pared to the En-Indic direction. This is on account

take this pre-trained model and finetune it for the of better transfer in many to one settings compared

translation task using all existing sources of paral- to one-to-many settings (Aharoni et al., 2019) and

lel data. We finetune one model for every language better language model on the target side.

pair of interest (18 pairs). We use the mT5BASE Performance gains are higher for low resource

model which has 6 encoder layers, 6 decoder lay- languages. We observe significant gains for ex-

ers and 12 attention heads per layer. The input isting low resource languages such as, or, as, and

embeddings are of size 768, the output of each at- kn, especially in the Indic-En direction. We hy-

tention head is of size 64 and the feedforward layer pothesise that these languages benefit from other

in the transformer block has 2048 neurons. We use related languages with more resources during the

AdaFactor as the optimizer, with a constant learn- joint-training on a common script.

ing rate of 1e-3. We train each model on 1 v3 TPU Pre-training needs further investigation. mT5

and use early stopping with the patience set to 25K which is pre-trained on large amounts of monolin-

steps. gual corpora from multiple languages does not al-

Models trained using Samanantar. We train ways outperform a TransformerBASE model which

the proposed IndicTrans model using the entire is just trained on existing parallel data without

Samanantar corpus. any pre-training. While this does not invalidate

Note that for all the models that we have trained the value of pre-training, it does suggest that pre-

or finetuned as a part of this work, we have ensured training needs to be optimized for the specific lan-

that there is no overlap between the training set guages. As future work, we would like to explore

and any of the test sets or development sets that we pre-training using the monolingual corpora on In-

have used. dic languages available from IndicCorp. Further,You can also read