Multi-Vehicle Control in Roundabouts using Decentralized Game-Theoretic Planning

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Multi-Vehicle Control in Roundabouts using

Decentralized Game-Theoretic Planning

Arec Jamgochian , Kunal Menda and Mykel J. Kochenderfer

Department of Aeronautics and Astronautics, Stanford University

{arec, kmenda, mykel}@stanford.edu

Abstract

arXiv:2201.02718v1 [cs.RO] 8 Jan 2022

Safe navigation in dense, urban driving environ-

ments remains an open problem and an active

area of research. Unlike typical predict-then-

plan approaches, game-theoretic planning consid-

ers how one vehicle’s plan will affect the ac-

tions of another. Recent work has demonstrated

significant improvements in the time required to

find local Nash equilibria in general-sum games

with nonlinear objectives and constraints. When

applied trivially to driving, these works assume

all vehicles in a scene play a game together,

which can result in intractable computation times

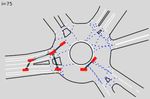

for dense traffic. We formulate a decentralized Figure 1: The DR USA Roundabout FT roundabout (Zhan et al.,

2019). Planning in a dense traffic environment requires negotiating

approach to game-theoretic planning by assum- with multiple agents, but not with all agents in a scene.

ing that agents only play games within their ob-

servational vicinity, which we believe to be a

more reasonable assumption for human driving. A general approach is to assume every driver acts to opti-

Games are played in parallel for all strongly con- mize their own objective, as is the case in a Markov Game.

nected components of an interaction graph, signif- If no agent has any incentive for unilaterally switching its

icantly reducing the number of players and con- policy, then the resulting joint policy is a Nash equilib-

straints in each game, and therefore the time re- rium (Kochenderfer et al., 2022). While typical policies may

quired for planning. We demonstrate that our ap- be formed using predictions about other vehicles, Nash equi-

proach can achieve collision-free, efficient driv- librium policies have the benefit of considering how agents

ing in urban environments by comparing perfor- may respond to the actions of others. Nash equilibrium (and

mance against an adaptation of the Intelligent other game-theoretic) policies therefore can more closely

Driver Model and centralized game-theoretic plan- capture human driving, where drivers reason about how their

ning when navigating roundabouts in the INTER- driving decisions will affect the plans of other drivers and ne-

ACTION dataset. Our implementation is available gotiate accordingly.

at http://github.com/sisl/DecNashPlanning. Nash equilibrium strategies for finite-horizon linear-

quadratic games obey coupled Riccati differential equations,

and can be solved for in discrete time using dynamic pro-

1 Introduction gramming (Başar and Olsder, 1998; Engwerda, 2007). More

A better understanding of human driver behavior could yield recent work has explored efficiently finding Nash equilibria

better simulators to stress test autonomous driving policies. in games with non-linear dynamics by iteratively linearizing

A well-studied line of work attempts to build interpretable them (Fridovich-Keil et al., 2020) or by searching for roots

longitudinal or lateral motion models parameterized by only of the gradients of augmented Lagrangians (Le Cleac’h et al.,

a few parameters. For example, the Intelligent Driver Model 2020). Both kinds of approaches have been applied to urban

(IDM) control law has five parameters that govern longitudi- driving scenarios. However, they focus on scenarios where

nal acceleration (Treiber et al., 2000; Kesting et al., 2010). the number of agents is low, and the methods are empirically

There are different ways to infer model parameters from shown in the latter work to scale poorly as the number of

data (Liebner et al., 2012; Buyer et al., 2019; Bhattacharyya, agents is increased.

Senanayake, et al., 2020). However, one can typically only In dense, urban driving environments, the number of agents

use these models in well-defined, simple scenarios (e.g. high- in a particular scene can be quite large. Navigating a round-

way driving). Building models from data in complicated sce- about, such as the one shown in Figure 1, can require success-

narios (e.g. roundabouts) remains an open area of research. fully negotiating with many other drivers. Luckily, modelingmulti-agent driving in dense traffic as one large, coordinated The literature on multi-agent planning is rich and exten-

game of negotiations is unrealistic. Drivers typically perform sive. A common assumption is cooperation, which can be

negotiations with the only vehicles closest to them. Further- modeled with one single reward function that is shared by all

more, they cannot negotiate with drivers who do not see them. agents.

In this work, we formulate multi-vehicle decentralized, Multi-agent cooperative planning is surveyed recently

game-theoretic control under the latter assumption that games by Torreno et al., 2017. In real driving, agents do not coop-

are only played when drivers see one another. We use a erate, but rather optimize their individual reward functions.

time-dependent interaction graph to encodes agents’ observa- One approach is to model real driving as a general-sum dy-

tions of other agents, and we delegate game-theoretic control namic game. In non-cooperative games, different solution

to strongly connected components (SCCs) within that graph. concepts have been proposed. Nash equilibria are solutions in

Furthermore, we make the assumption any time a driver ob- which no player can reduce their cost by changing their strat-

serves a vehicle that does not observe them in turn, that driver egy alone (Kochenderfer et al., 2022). The problem of find-

must use forecasts of the other vehicle’s movements in their ing Nash equilibria for multi-player dynamic games in which

plan. Thus, the game of an SCC of agents has additional col- player strategies are coupled through joint state constraints

lision constraints imposed by outgoing edges from that SCC. (e.g. for avoiding collisions) is referred to as a Generalized

We demonstrate our method on a roundabout simulator de- Nash Equilibrium Problem (GNEP) (Facchinei and Kanzow,

rived from the INTERACTION dataset (Zhan et al., 2019). 2007).

We observe that a decentralized model based on IDM is un- Nash equilibrium strategies for finite-horizon linear-

tenable for safe control, and that our decentralized approach quadratic games obey coupled Riccati differential equations,

to game-theoretic planning can generate safe, efficient be- and can be solved for in discrete time using dynamic pro-

havior. We observe that our approach provides significant gramming (Başar and Olsder, 1998; Engwerda, 2007). A

speedup to centralized game-theoretic planning, though it is recent approach, iLQGames, can solve more general dif-

still not fast enough for real-time control. ferential dynamic games by iteratively making and solving

In summary, our contributions are to: linear-quadratic approximations until convergence to a lo-

cal, closed-loop Nash equilibrium strategy (Fridovich-Keil

• Introduce a method for achieving (partially) decentral- et al., 2020). An alternative method, ALGAMES, uses a

ized game-theoretic control, and quasi-Newton root-finding algorithm to solve a general-sum

• Apply that method to demonstrate human-like driving in dynamic game for a local open-loop Nash equilibrium (Le

roundabouts using real data. Cleac’h et al., 2020). Unlike iLQGames, ALGAMES allows

The remainder of this paper is organized as follows: Section 2 for hard constraints in the joint state and action space, but

outlines background material, Section 3 describes our ap- it must be wrapped in model-predictive control to be used

proach and methodologies, Section 4 highlights experiments practically. We note that neither method finds global Nash

and results, and Section 5 concludes. equilibria, and the same game can result in different equilib-

ria depending on the initialization. The problem of inferring

which Nash equilibrium most closely matches observations

2 Background of other vehicles is considered by Peters et al., 2020. Since

Research to model and predict human driver behavior was re- the reward functions of other vehicles may be unknown, ad-

cently surveyed by Brown et al. (2020). The field of imitation ditional work formulates an extension in which an Unscented

learning (IL) seeks to learn policies that mimic expert demon- Kalman Filter is used to update beliefs over parameters in the

strations. One class of IL algorithms requires actively query- other vehicle’s reward functions (Le Cleac’h et al., 2021).

ing an expert to obtain feedback on the policy while learn- In this paper, we adopt the convention presented in AL-

ing. Naturally, this method does not scale (Ross and Bagnell, GAMES. We denote the state and control of player i at time t

2010; Ross, Gordon, et al., 2011). as xit and uit respectively. We denote the full trajectory of all

Other methods use fixed expert data and alternate between agents x1:N i

1:T as X for shorthand. X indicates agent i’s full

optimizing a reward function, and re-solving a Markov de- trajectory, Xt indicates the joint state at time t. Similarly, we

cision process for the optimal policy, which can be expen- use U to indicate the joint action plan u1:N i

1:T , U to indicate

sive (Abbeel and Ng, 2004; Ziebart et al., 2008). More re- agent i’s full plan, and Ut to indicates the joint action at time

cent methods attempt to do these steps progressively while t. The cost function of each player is denoted J i (X, U i ).

using neural network policies and policy gradients (Finn et The objective of each player is to choose actions that mini-

al., 2016; Ho, Gupta, et al., 2016). Generative Adversarial mize the cost of their objective function subject to constraints

Imitation Learning (GAIL) treats IL as a two-player game imposed by the joint dynamics f , and by external constraints

in which a generator is trying to generate human-like behav- C (e.g. collision avoidance constraints, action bounds, etc.):

ior to fool a discriminator, which is, in turn, trying to dif- mini J i (X, U i )

ferentiate between artificial and expert data (Ho and Ermon, X,U

2016). GAIL was expanded to perform multi-agent imitation s.t. Xt+1 = f (t, Xt , Ut ), t = 1, . . . , T − 1, (1)

learning in simulated environments (Song et al., 2018). GAIL

has recently been tested with mixed levels of success on real- C(X, U ) ≤ 0

world highway driving scenarios (Bhattacharyya, Phillips, et A joint plan U optimizes the GNEP and is, therefore, an open-

al., 2018; Bhattacharyya, Wulfe, et al., 2020). GAIL has not loop Nash equilibrium if each plan U i is optimal to its respec-

yet been implemented in more complex driving environments tive problem when the remaining agents plans U −i are fixed.

(e.g. roundabouts), where success will likely require signifi- That is to say, no agent can improve their objective by only

cant innovation. changing their own plan.served agents maintain their velocities, however, our method

is amenable to more complex forecasting methods. An exam-

ple interaction graph is shown in Figure 2.

To control the vehicles, this decentralized planning is

wrapped in model predictive control. Once each planner de-

termines the plan of all the agents in each sub-component,

the first action of each plan is taken, a new interaction graph

is generated, and new game-theoretic planners are instanti-

ated. Decentralized game-theoretic planning is summarized

in Algorithm 1.

Algorithm 1 Decentralized Game-Theoretic Planning

1: procedure D EC NASH(X1 )

2: for t ← 1 : T do

Figure 2: Sample roundabout frame from the INTERACTION 3: gt ← InteractionGraph(Xt )

dataset (Zhan et al., 2019). Observation edges are denoted with ar- 4: SCCs ← StronglyConnectedComponents(gt )

rows, and strongly connected components are drawn with circles. 5: for SCC ∈ SCCs do

Each strongly connected component must make a game-theoretic

plan while respecting forecasts of agents from edges leaving that

6: game ← Game(SCC, Xt )

component. 7: U [SCC.nodes] ← NashSolver(game)

8: Xt+1 ← ExecuteAction(Xt , U )

3 Methodology

3.1 Decentralized Game-Theoretic Planning We believe that this model of human driving more real-

We note that in dense traffic, the assumption that all agents istically captures real-life driving negotiations, where each

play a game with all other agents is unrealistic. More realisti- driver makes plans based only on a few observed neighboring

cally, agents only negotiate with a subset of the agents in the vehicles, and where game-theoretic negotiations only occur

scene at any one given point in time. If there are many agents between vehicles that actively observe one other. For exam-

in the scene, then agents typically negotiate with the ones in ple, if a driver observes a vehicle they know is not observing

their immediate vicinity. them, then that driver must plan using forecasts of the ob-

Furthermore, this game-theoretic negotiation exists only served vehicle’s motion, rather than play a game to commu-

when there is two-way communication between agents. If nicate their intent. Furthermore, this decentralized approach

two drivers can observe one another, they can negotiate, but is much more scalable since the number of players, as well

if a trailing vehicle is in a leading driver’s blind spot, then as the O(n2 ) safety constraints within each game, stays rela-

the leading driver is agnostic to the trailing vehicle’s actions tively small even when there are many vehicles in the driving

while the trailing driver must plan around predictions about scenario. The dependence on the number of players was ob-

the behavior of the leading vehicle. served empirically by Le Cleac’h et al. (2020), who saw a ten-

We encode this information flow using a directed graph to twenty-fold increase in computation time for their method

G = (V, E) where node i ∈ V captures information about when moving from two to four agents per game.

agent i and an edge (i, j) ∈ E captures whether agent i

can observe agent j. We define the agent i’s neighbor set 3.2 Roundabout Game

Ni = {j | (i, j) ∈ E} as the set of all agents that agent i We formulate the local negotiations between agents in a

actively observes. roundabout as separate GNEPs to be solved in parallel. Each

At each point in time, we can generate the interaction graph problem can be thought of to have N -players, Nc of which

Gt based on the agents each agent actively observes. We as- are controlled, and No = N − Nc of which are observed. Nc

sume that a human driver can only reason about a small sub- is the size of the SCC being considered, and No is the num-

set of agents that are within its field of view, so we add those ber of outgoing edges from that SCC. Since we only control

observed agents to the ego vehicle’s neighbor set. The cardi- Nc agents in our decentralized plan, we only need to consider

nality of each neighbor set |Ni | can therefore be much smaller the first Nc ≤ N optimization problems in the dynamic game

than the total number of agents in a scene. We then instanti- in Eq. (1).

ate one game for each of the strongly connected components In our roundabout game, we assume that vehicle trajec-

(SCCs) of Gt . tories are determined beforehand (e.g. by a trajectory plan-

Within each SCC, agents must compute a Nash equilib- ner), and the goal of our planner is to prescribe accelerations

rium. Agents within the SCC negotiate with one another, but of vehicles along their respective trajectories. We do so by

are constrained by the predictions of observed agents who do parametrizing a vehicle’s trajectory using a polynomial map-

not observe them (e.g. the outgoing edges of each strongly ping a distance travelled along the path to the x and y posi-

connected component). If two agents observe one another, tions of the vehicle. A vehicle modulates acceleration along

and one of those two agents observed a third leading car, then this pre-determined path in order to safely and efficiently nav-

the agents must make a joint plan subject to predictions about igate to its goal. We think it is valid to assume that trajecto-

the third car. This can be done by instantiating a game with all ries can be computed a priori in roundabouts, but this may be

three cars and fixing the actions of the third car to be those of a less-valid assumption in highway traffic, where agents can

its forecasted plan. In our experiments, we forecast that ob- plan their paths to react to other agents. Our decentralizedmethod can, however, be extended to other driving environ- in the scene, thereby controlling agents along paths gener-

ments by reformulating the game setup. ated from real-world data. Our code is made available at

With our assumptions, each vehicle’s state can be written: github.com/sisl/DecNashPlanning.

i

pxt = p(sit ; θxi )

We analyze behavior generated from a fully decentral-

|θ|−1 ized policy in which vehicles follow an adaptation of

i i

i pyt = p(st ; θyi ) X

xt = i , where p(s; θ) = θi si . (2) IDM (Treiber et al., 2000), a centralized game-theoretic pol-

st icy, and our decentralized game-theoretic method. We discuss

i=0

vti observed behavior and compare collision rates, average speed

Here, s and v encode the vehicle position and velocity along shortfalls, planning times, and agents in each game.

its path and are the minimal state representation. To simplify

collision constraints, the first two elements in the state ex- 4.1 IDM Adaptation

pand the path position to x and y locations using coefficients Given speed v(t) and relative speed r(t) to a followed vehi-

θxi and θyi . Each vehicle directly controls its own accel- cle, the Intelligent Driver Model (IDM) for longitudinal ac-

eration, leading to the following continuous-time dynamics, celeration predicts acceleration as

which are discretized at solve time:

v(t) · r(t)

ddes = dmin + τ · v(t) − p (8)

i i

ṗ(st , vt ; θxi )

2 amax · bpref

|θ|−1

i i

ṗ(s , v ; θ i ) X " 4 2 #

ẋit = t ti y , where ṗ(s, v; θ) = v θi isi−1 . a(t) = amax 1 −

v(t)

−

ddes

, (9)

vt

i=1 vtarget d(t)

uit

(3) where ddes is the desired distance to the lead vehicle, dmin is

To incentivize vehicles to reach their velocity targets the minimum distance to the lead vehicle that the driver will

while penalizing unnecessary accelerations, we use a linear- tolerate, τ is the desired time headway, amax is the controlled

quadratic objective: vehicle’s maximum acceleration, bpref is the preferred decel-

eration, and vtarget is the driver’s desired free-flow speed.

T

X Though IDM is intended for modeling longitudinal driv-

J i (X i , U i ) = q i kvti − vtarget

i

k22 + ri kuit k22 , (4) ing, we adapt it for use in roundabout driving by designating

t=1 the closest vehicle within a 20◦ half-angle cone from the con-

where qi and ri are vehicle-dependent objective weighting trolled vehicle’s heading as the “follow” vehicle, and calcu-

i

parameters, and vtarget is the vehicle’s desired free-flow speed. lating the relevant parameters by casting the “follow” vehicle

Controls are constrained to be within the control limits to the controlled vehicle’s path.

of each controlled vehicle, and to be equivalent to the pre- 4.2 Game-Theoretic Planning

dicted controls for the observed vehicles. That is, for all

t ∈ {1, ..., T }, we have We implement our decentralized game-theoretic planning ap-

proach as described in Section 3.1. To generate an interaction

uimin ≤ uit ≤ uimin ∀i ∈ {1, ..., Nc } (5) graph, we say agent i observes agent j (e.g. (i, j) ∈ E) if j is

uit = ûit ∀i ∈ {Nc + 1, ..., N } (6) within a 20 meter, 120◦ half-angle cone from i’s heading. To

compute strongly connected components and their outgoing

ûit

where is the predicted control of observed vehicle i at time edges, we use Kosaraju’s algorithm (Nuutila and Soisalon-

t. Soininen, 1994), which uses two depth-first search traversals

Finally, we impose collision constraints between each pair to compute strongly connected components in linear time.

of (both observed and unobserved) vehicles at every point in We implement the one-dimensional dynamic roundabout

time. That is, for every pair of agents i and j at every time in game setup as described in Section 3.2. For predictions of the

the horizon, we introduce constraints outgoing edges of each strongly connected component, we

(pixt − pjxt )2 + (piyt − pjyt )2 ≥ rsafe

2

, (7) assume each observed uncontrolled vehicle will maintain its

velocity throughout the planning horizon (i.e. ûit from Eq. (6)

where rsafe is a safety radius. is 0 for all t). We use Algames.jl (Le Cleac’h et al., 2020)

to solve for Nash equilibria by optimizing an augmented La-

4 Experiments grangian with the objective gradients. At each point in time,

In our experiments, we empirically demonstrate our ap- we plan a four-second horizon, where decisions are made ev-

proach’s ability to generate human-like behavior in real- ery 0.2 seconds.

world, complicated driving scenarios. We use the IN- We also compare our method against a fully centralized

TERACTION dataset (Zhan et al., 2019), which consists approach—that is, one where all agents in a frame play a sin-

of drone-collected motion data from scenes with compli- gle game together.

cated driving interactions. We focus on control in a round-

about, a traditionally difficult driving scenario that requires 4.3 Results

negotiating with potentially many agents. From the data, As a baseline, our IDM adaptation performs surprisingly well

we extract vehicle spawn times and approximate true paths even though IDM is designed to model single-lane driving.

with twentieth-order polynomials. In our simulator, we We observe successful navigation within the first few hun-

fix these values and allow for different methods to pro- dred frames, with collisions happening rarely when two ve-

vide (one-dimensional) acceleration control for all agents hicles do not observe one another while merging. As timeprogresses, we observe severe slowdowns as more agents en-

ter the scene, and an eventual standstill. During standstill,

the number of collisions increases dramatically as new vehi-

cles enter the scene before space is cleared. One could imag-

ine designing sets of rules to circumvent problems from the

IDM-based approach, but rule design for such a complex en-

vironment can be very difficult because of the edge cases that

must be considered. Regardless, this approach highlights the

lack of safety in simple systems.

On the contrary, decentralized game-theoretic planning

generates human-like driving without explicitly encoding

rules. We observe agents accelerating and decelerating natu-

rally with the flow of locally observed traffic. We also observe

instances of agents safely resolving complex traffic conflicts

which would be difficult to encode in rules-based planners.

Although our quantitative experiments focus on evaluating

performance at a single, more difficult roundabout, we see

human-like, safe driving emerge across roundabout environ- (a) Interaction Graph

ments. That is, we observe collision-free generalization to

different roundabouts without doing any additional parame-

ter tuning. For videos of resulting behavior please refer to

supplementary material.

A sample decentralized open-loop, Nash equilibrium plan

can be seen in Figure 3. In it, one highlighted strongly con-

nected component consists of three vehicles that observe one

another, and observe one other vehicle that does not observe

them in turn. The optimal plan for this strongly connected

component has the three vehicles in the component safely in-

teracting to proceed towards their goals, while the other ob-

served but non-controlled vehicle maintains its velocity. Vehicle 1

Figure 4 depicts the safe resolution of a traffic conflict by Vehicle 2

our method that would be otherwise hard to resolve. In it, the Vehicle 3

vehicles resolve who has right-of-way and merge safely be- Vehicle 4

hind one another without wholly disrupting the flow of traffic,

which happens often in the IDM adaptation baseline. (b) Planned Trajectory for SCC

In Table 1, we compare the performance of the IDM adap-

tation, centralized game-theoretic planning, and decentral-

ized game-theoretic planning in some key metrics averaged 12

over five 100-second roundabout simulations where decisions

are made at 10 Hz. Namely, we compare the number of col-

lisions per 100 seconds, the average shortfall below target

Velocity (m/s)

speed, the number of players per game given our choice of 10

interaction graph, and the time to generate a joint policy. For

our decentralized approach, we define the number of play-

ers per game and the time to generate a joint policy using 8

the largest values across strongly connected components at

each time step, as the games can be parallelized. We note that

our solution times are slow because we are approximating the 6 vtarget Vehicle 1 Vehicle 2

paths as twentieth-order polynomials, and the times are meant

to highlight relative improvement when using a decentralized Vehicle 3 Vehicle 4

approach. 0 0.2 0.4 0.6 0.8 1 1.2 1.4 1.6

We observe that though generating a joint policy is quick in Time (s)

the IDM adaptation, it leads to unsafe behavior that is signifi-

cantly reduced in the game-theoretic approaches. We observe (c) Planned Velocity Profile for SCC

that the game-theoretic approaches are both a) much safer in

terms of collision rate and b) much more efficient in terms Figure 3: A snapshot of an interaction graph, with a particular

of vehicle speed. We see an average of 55.8, 4.8, and 0.2 strongly connected component highlighted (a). There are three

collisions per 100 seconds when using the IDM, centralized agents in the SCC and a fourth one which is observed but not con-

game-theoretic, and decentralized game-theoretic policies re- trolled. The three vehicles inside the SCC safely negotiate with one

spectively. Similarly, we see that the mean vehicle shortfall another en route their goals. The planned Nash equilibrium trajec-

tory is shown in (b) with points for every 0.2 seconds. The planned

velocity improves from 8.7 m/s with IDM, to 3.2 m/s with velocity profile is depicted in (c).

the centralized game-theoretic policy, to 2.8 m/s with ourFigure 4: A set of snapshots of traffic conflicts which gets resolved safely through decentralized game-theoretic planning. Snapshots are

shown at one second intervals (ten decision frames). The vehicles quickly resolve right-of-way and merge safely without the need for a

complex set of rules.

Table 1: A comparison of performance metrics with the IDM adap- gotiations which drivers undergo to resolve conflicts. Game-

tation, centralized game-theoretic planning (C-Nash), and decentral- theoretic planning shows promise in allowing multiple agents

ized game-theoretic planning (Dec-Nash) models, performed over

five scenes of 100 seconds of driving. We compare the mean number to safely navigate a dense environment because it enables

of collisions per 100 seconds (collisions), the mean vehicle shortfall agents to reason about how their plans affect other agents’

below target speed (vtarget −vavg ), the number of players in the largest plans. Though finding a Nash equilibrium in a general-

game at each time step (# players), and the time to generate a joint sum Markov game can be quite expensive, recent work has

policy. demonstrated significant improvements in optimization ef-

ficiency for games with nonlinear dynamics and objectives

Model Collisions vtarget − vavg # Players Time (s) (Fridovich-Keil et al., 2020; Le Cleac’h et al., 2020). These

methods, however, are shown empirically to scale poorly with

IDM 55.80±11.53 8.68±0.13 1 0.005±0.001 the number of agents in the game.

C-Nash 4.80±1.88 3.22±0.16 6.23±2.34 6.43±5.74

Dec-Nash 0.20±0.20 2.79±0.12 1.83±1.00 2.24±1.97 In this project, we take a decentralized approach to game-

theoretic planning for urban navigation, in which we assume

that planning games are only played between vehicles that

method. are mutually interacting (e.g. who are visible to one another

We notice that using a centralized game-theoretic approach within a strongly connected graph component). This assump-

leads to more driving hesitancy, which ultimately results in tion significantly reduces the number of agents in each game,

slower average velocities when compared to our approach. and therefore collision constraints, allowing reduced Nash

We observe collisions in the centralized approach being equilibrium optimization time. We demonstrate our approach

caused by a) the solver being unable to find adequate solu- in roundabouts, where we control agent accelerations assum-

tions when the games are too large, and b) vehicles spawning ing paths are known a priori (e.g. from a trajectory planner).

before their path has been cleared. With the decentralized We observe the emergence of human-like driving behavior

game-theoretic policy, we only observed one collision in the which is safe and efficient when compared to a baseline IDM

500 total seconds of driving, which we believe to have also adaptation, and which is quick when compared to centralized

been caused by the game in question being too large. game-theoretic planning.

Furthermore, we observe that our decentralized approach

can generate plans much more quickly than a centralized one. Our approach has the limitation that it assumes shared

We also expect that in larger urban intersections and round- knowledge of other agents’ objective functions within a

abouts with much denser traffic, the performance gaps would strongly connected component, and is therefore not fully de-

become even more profound, and our method will be a neces- centralized. Future work involves relaxing this assumption.

sity to keep game-theoretic plan generation tractable. Recent work tries to infer parameters of other players’ un-

known objective functions (Le Cleac’h et al., 2021), but this

presents challenges in the decentralized case when other play-

5 Conclusion ers may only stay in a strongly connected component for a

Generating safe, human-like behavior in dense traffic is chal- short period of time. Future work also involves designing ob-

lenging for rule-based planners because of the complex ne- jectives to better imitate human behavior.Acknowledgments Ho, J., J. Gupta, and S. Ermon (2016). “Model-free imitation

This material is based upon work supported by the National learning with policy optimization”. In: International Con-

Science Foundation Graduate Research Fellowship Program ference on Machine Learning (ICML), pp. 2760–2769.

under Grant No. DGE-1656518. Any opinions, findings, and Kesting, A., M. Treiber, and D. Helbing (2010). “Enhanced

conclusions or recommendations expressed in this material intelligent driver model to access the impact of driving

are those of the author(s) and do not necessarily reflect the strategies on traffic capacity”. In: Philosophical Transac-

views of the National Science Foundation. This work is also tions of the Royal Society A: Mathematical, Physical and

supported by the COMET K2—Competence Centers for Ex- Engineering Sciences 368.1928, pp. 4585–4605.

cellent Technologies Programme of the Federal Ministry for Kochenderfer, M. J., T. A. Wheeler, and K. H. Wray (2022).

Transport, Innovation and Technology (bmvit), the Federal Algorithms for Decision Making. MIT Press.

Ministry for Digital, Business and Enterprise (bmdw), the Le Cleac’h, S., M. Schwager, and Z. Manchester (2020). “AL-

Austrian Research Promotion Agency (FFG), the Province of GAMES: A fast solver for constrained dynamic games”.

Styria, and the Styrian Business Promotion Agency (SFG). In: Robotics: Science and Systems.

The authors thank Simon Le Cleac’h, Brian Jackson, Lasse Le Cleac’h, S., M. Schwager, and Z. Manchester (2021).

Peters, and Mac Schwager for their insightful feedback. “LUCIDGames: online unscented inverse dynamic games

for adaptive trajectory prediction and planning”. In: IEEE

Robotics and Automation Letters, pp. 1–1.

References Liebner, M., M. Baumann, F. Klanner, and C. Stiller (2012).

Abbeel, P. and A. Y. Ng (2004). “Apprenticeship learning via “Driver intent inference at urban intersections using the in-

inverse reinforcement learning”. In: International Confer- telligent driver model”. In: IEEE Intelligent Vehicles Sym-

ence on Machine Learning (ICML). posium (IV), pp. 1162–1167.

Başar, T. and G. J. Olsder (1998). Dynamic noncooperative Nuutila, E. and E. Soisalon-Soininen (1994). “On finding the

game theory. SIAM. strongly connected components in a directed graph”. In:

Bhattacharyya, R., D. Phillips, B. Wulfe, J. Morton, A. Kue- Information Processing Letters 49.1, pp. 9–14.

fler, and M. J. Kochenderfer (2018). “Multi-agent imita- Peters, L., D. Fridovich-Keil, C. J. Tomlin, and Z. N. Sunberg

tion learning for driving simulation”. In: IEEE/RSJ In- (2020). “Inference-based strategy alignment for general-

ternational Conference on Intelligent Robots and Systems sum differential games”. In: International Conference on

(IROS), pp. 1534–1539. Autonomous Agents and Multiagent Systems (AAMAS),

Bhattacharyya, R., R. Senanayake, K. Brown, and M. J. pp. 1037–1045.

Kochenderfer (2020). “Online parameter estimation for Ross, S. and D. Bagnell (2010). “Efficient reductions for imi-

human driver behavior prediction”. In: American Control tation learning”. In: International Conference on Artificial

Conference (ACC), pp. 301–306. Intelligence and Statistics (AISTATS), pp. 661–668.

Bhattacharyya, R., B. Wulfe, D. Phillips, A. Kuefler, J. Ross, S., G. Gordon, and D. Bagnell (2011). “A reduction

Morton, R. Senanayake, and M. Kochenderfer (2020). of imitation learning and structured prediction to no-regret

“Modeling Human Driving Behavior through Genera- online learning”. In: International Conference on Artificial

tive Adversarial Imitation Learning”. In: arXiv preprint Intelligence and Statistics (AISTATS), pp. 627–635.

arXiv:2006.06412. Song, J., H. Ren, D. Sadigh, and S. Ermon (2018).

Brown, K., K. Driggs-Campbell, and M. J. Kochenderfer “Multi-agent generative adversarial imitation learning”.

(2020). “Modeling and prediction of human driver behav- In: Advances in Neural Information Processing Systems

ior: A survey”. In: arXiv preprint arXiv:2006.08832. (NeurIPS), pp. 7472–7483.

Buyer, J., D. Waldenmayer, N. Sußmann, R. Zöllner, and Torreno, A., E. Onaindia, A. Komenda, and M. Štolba (2017).

J. M. Zöllner (2019). “Interaction-aware approach for on- “Cooperative multi-agent planning: a survey”. In: ACM

line parameter estimation of a multi-lane intelligent driver Computing Surveys (CSUR) 50.6, pp. 1–32.

model”. In: IEEE International Conference on Intelligent Treiber, M., A. Hennecke, and D. Helbing (2000). “Con-

Transportation Systems (ITSC), pp. 3967–3973. gested traffic states in empirical observations and micro-

Engwerda, J. (2007). “Algorithms for computing Nash equi- scopic simulations”. In: Physical Review E 62.2, p. 1805.

libria in deterministic LQ games”. In: Computational Man- Zhan, W. et al. (2019). “INTERACTION Dataset: An

agement Science 4.2, pp. 113–140. INTERnational, Adversarial and Cooperative moTION

Facchinei, F. and C. Kanzow (2007). “Generalized Nash equi- Dataset in Interactive Driving Scenarios with Semantic

librium problems”. In: 4OR 5.3, pp. 173–210. Maps”. In: arXiv:1910.03088 [cs, eess].

Finn, C., S. Levine, and P. Abbeel (2016). “Guided cost Ziebart, B. D., A. L. Maas, J. A. Bagnell, and A. K.

learning: Deep inverse optimal control via policy optimiza- Dey (2008). “Maximum entropy inverse reinforcement

tion”. In: International Conference on Machine Learning learning.” In: AAAI Conference on Artificial Intelligence

(ICML), pp. 49–58. (AAAI), pp. 1433–1438.

Fridovich-Keil, D., E. Ratner, L. Peters, A. D. Dragan, and

C. J. Tomlin (2020). “Efficient iterative linear-quadratic ap-

proximations for nonlinear multi-player general-sum dif-

ferential games”. In: IEEE International Conference on

Robotics and Automation (ICRA), pp. 1475–1481.

Ho, J. and S. Ermon (2016). “Generative adversarial imitation

learning”. In: Advances in Neural Information Processing

Systems (NeurIPS), pp. 4572–4580.You can also read