Meta-Reinforcement Learning for Mastering Multiple Skills and Generalizing across Environments in Text-based Games

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Meta-Reinforcement Learning for Mastering Multiple Skills and

Generalizing across Environments in Text-based Games

Zhenjie Zhao Mingfei Sun

Nanjing University of Information University of Oxford

Science and Technology mingfei.sun@cs.ox.ac.uk

zzhaoao@nuist.edu.cn

Xiaojuan Ma

The Hong Kong University of Scinece and Technology

mxj@cse.ust.hk

Abstract text-based games requires non-trivial natural lan-

guage understanding/generalization and sequential

Text-based games can be used to develop task- decision making. Developing agents that can play

oriented text agents for accomplishing tasks text-based games automatically is promising for en-

with high-level language instructions, which

abling task-oriented, language-based human-robot

has potential applications in domains such as

human-robot interaction. Given a text instruc- interaction (HRI) experience (Scheutz et al., 2011).

tion, reinforcement learning is commonly used Supposing that a text agent can reason a given com-

to train agents to complete the intended task mand and generate a sequence of text actions for

owing to its convenience of learning policies accomplishing the task, we can then use text as a

automatically. However, because of the large proxy and connect text inputs and outputs of the

space of combinatorial text actions, learning a agent with multi-modal signals, such as vision and

policy network that generates an action word

physical actions, to allow a physical robot operate

by word with reinforcement learning is chal-

lenging. Recent research works show that

in the physical space (Shridhar et al., 2021).

imitation learning provides an effective way Given a text instruction or goal, reinforcement

of training a generation-based policy network. learning (RL) (Sutton and Barto, 2018) is com-

However, trained agents with imitation learn- monly used to train agents to finish the intended

ing are hard to master a wide spectrum of task task automatically. In general, there are two ap-

types or skills, and it is also difficult for them proaches to train a policy network to obtain the

to generalize to new environments. In this pa-

corresponding text action: generation-based meth-

per, we propose a meta-reinforcement learn-

ing based method to train text agents through ods that generate a text action word by word and

learning-to-explore. In particular, the text choice-based methods that select the optimal ac-

agent first explores the environment to gather tion from a list of candidates (Côté et al., 2018).

task-specific information and then adapts the The list of action candidates in a choice-based

execution policy for solving the task with this method may be limited by pre-defined rules and

information. On the publicly available testbed hard to generalize to a new environment. In con-

ALFWorld, we conducted a comparison study trast, generation-based methods can generate more

with imitation learning and show the superior-

possibilities and potentially have a better general-

ity of our method.

ization ability. Therefore, to allow a text agent to

1 Introduction fully explore in an environment and obtain best

performance, a generation-based method is needed

A text-based game, such as Zork (Infocom, 1980), (Yao et al., 2020). However, the combinatorial ac-

is a text-based simulation environment that a player tion space precludes reinforcement learning from

uses text commands to interact with. For example, working well on a generation-based policy network.

given the current text description of a game envi- Recent research shows that imitation learning (Ross

ronment, users need to change the environmental et al., 2011) provides an effective way to train

state by inputting a text action, and the environment a generation-based policy network using demon-

returns a text description of the next environmental strations or dense reinforcement signals (Shridhar

state. Users have to take text actions to change the et al., 2021). However, it is still difficult for the

environmental state iteratively until an expected trained policy to master multiple task types or skills

final state is achieved (Côté et al., 2018). Solving and generalize across environments (Shridhar et al.,

1

Proceedings of the 1st Workshop on Meta Learning and Its Applications to Natural Language Processing (MetaNLP 2021), pages 1–10

Bangkok, Thailand (online), August 5, 2021. ©2021 Association for Computational Linguistics2021). For example, an agent trained on the task 2 Related Work

type of slicing an apple cannot work on a task of

2.1 Language-based Human-Robot

pouring water. Such lack of the ability to gener-

Interaction

alize precludes the agent from working on a real

interaction scenario. To achieve real-world HRI ex- Enabling a robot to accomplish tasks with language

perience with text agents, two requirements should goals is a long-term study of human-robot interac-

be fulfilled: 1) a trained agent should master multi- tion (Scheutz et al., 2011), where the core problem

ple skills simultaneously and work on any task type is to ground language goals with multi-modal sig-

that it has seen during training; 2) a trained agent nals and generate an action sequence for the robot

should also generalize to unseen environments. to accomplish the task. Because of the character-

Meta-reinforcement learning (meta-RL) is a istic of sequential decision making, reinforcement

commonly used technique to train an agent that learning (Sutton and Barto, 2018) is commonly

generalizes across multiple tasks through summa- used. Previous research works using reinforcement

rizing experience over those tasks. The underlying learning have studied the problem on simplified

idea of meta-RL is to incorporate meta-learning block worlds (Janner et al., 2018; Bisk et al., 2018),

into reinforcement learning training, such that the which could be far from being realistic. The re-

trained agent, e.g., text-based agents, could master cent interests on embodied artificial intelligence

multiple skills and generalize across different envi- (embodied AI) have contributed to several realistic

ronments (Finn et al., 2017; Liu et al., 2020). In simulation environments, such as Gibson (Xia et al.,

this paper, we propose a meta-RL based method 2018), Habitat (Savva et al., 2019), RoboTHOR

to train text agents through learning-to-explore. In (Deitke et al., 2020), and ALFRED (Shridhar et al.,

particular, a text agent first explores an environment 2020). However, because of physical constraints in

to gather task-specific information. It then updates a real environment, gap between a simulation envi-

the agent’s policy towards solving the task with ronment and a real world still exists (Deitke et al.,

this task-specific information for better generaliza- 2020; Shridhar et al., 2021). Researchers have also

tion performance. On a publicly available testbed, explored the idea of finding a mapping between

ALFWorld (Shridhar et al., 2021), we conducted ex- vision signals of a real robot and language signals

periments on all its six task types (i.e., pick & place, directly (Blukis et al., 2020), but this mapping re-

examine in light, clean & place, heat & place, cool quires detailed annotated data and it is usually ex-

& place, and pick two & place), where for each pensive to obtain physical interaction data. An

task type, there is a set of unique environments alternative method of deploying an agent on a real

sampled from the distribution defined by their task robot is to train the agent on abstract text space,

type (see Section 5.1 for statistics). Results suggest such as TextWorld (Côté et al., 2018), and then

that our method generally masters multiple skills connect text with multi-modal signals of the robot

and enables better generalization performance on (Shridhar et al., 2021). For example, by connect-

new environments compared to ALFWorld (Shrid- ing text with the simulated environment ALFRED

har et al., 2021). We provide further analysis and (Shridhar et al., 2020), researchers have shown that

discussion to show the importance of task diversity the trained text agent has better generalization abil-

for meta-RL. The contributions of this paper are: ity than training an embodied agent end-to-end

directly (Shridhar et al., 2021). However, how to

make a text agent generalize across different tasks

• From the perspective of human-robot interac- so that one robot can work on tasks of different

tion, we identify the generalization problem of types and in unseen environments is still a chal-

training an agent to master multiple skills and lenging problem, which is the focus of this paper.

generalize on new environments. We propose 2.2 Text-based Games

to use meta-RL methods to achieve it.

The success of deep reinforcement learning (RL)

on Atari games (Mnih et al., 2015) inspires the

• We design an efficient learning-to-explore use of RL on text-based games. There are a va-

approach which enables a generation-based riety of ways to use deep reinforcement learn-

agent to master multiple skills and generalize ing on text-based games. For example, using the

across a wide spectrum of environments. deep Q-learning (DQN) framework, Narasimhan

2et al. (2015) leverage the Long Short-Term Mem- learning-to-explore. For memory-based methods,

ory (LSTM) as the policy network to predict action researchers have proposed RL2 (Duan et al., 2016),

for each state. In (He et al., 2016), researchers which uses a recurrent neural network (RNN) to

propose the deep reinforcement relevance network encode a “fast” RL algorithm, and the RNN mod-

(DRRN), which encodes states and actions sepa- ule is trained with another “slow” RL algorithm.

rately and then calculates Q-values by integrating Memory-based methods are usually hard to opti-

the information of the two channels. However, the mize and suffer from the sample efficiency problem

compositional and combinatorial properties of nat- (Duan et al., 2016). For optimization-based meth-

ural language lead to large state and action spaces, ods, in (Finn et al., 2017), researchers propose a

which makes solving text-based games with deep model-agnostic meta-reinforcement learning algo-

reinforcement learning very challenging. To deal rithm that uses a nested optimization procedure to

with this problem, in fiction-style text games, Ad- obtain maximal rewards with limited number of

hikari et al. (2020) use a graph-aided transformer sample trajectories. Optimization-based methods

(GATA) to capture game dynamics so that it can usually require on-policy reinforcement learning

plan well and select text actions more effectively. algorithms and are hard to use value-based meth-

Ammanabrolu and Riedl (2019) learn a knowledge ods (Finn et al., 2017), which also leads to the

graph during the exploration of an agent, and use sample efficiency problem. Learning-to-explore

it to prune the action space. Furthermore, Muruge- is a newly proposed meta-reinforcement learning

san et al. (2021) show that incorporating common approach that can potentially leverage any rein-

sense knowledge also helps reduce the action space forcement learning method with good optimiza-

and allows an agent to choose an action more effec- tion properties by decoupling an episode into two

tively. Recently, Yao et al. (2020) show that given stages: exploration and execution (Rakelly et al.,

a text state, a fine-tuned language model GPT can 2019; Liu et al., 2020). The exploration stage is

generate a corresponding text action set, which used to recognize task-specific information, which

significantly reduces the action space and also im- could be useful for the execution stage for fast and

proves the performance. Previous research works efficient adaptation.

mainly focus on learning an agent to solve one text For embodied AI, using meta-reinforcement

game effectively. However, in reality, we usually learning, researchers have explored to improve

hope an agent can learn a wide spectrum of tasks generalization ability of an agent to unseen envi-

and generalize well to unseen environments. In ronments (Wortsman et al., 2019). However, as

(Adolphs and Hofmann, 2020), in terms of environ- aforementioned, deploying such an agent on a real

ments and task descriptions, researchers show that robot is still a challenging problem owing to the

an actor-critic framework with action space pruning domain gap between a simulation environment and

can learn an agent to generalize to unseen games a physical environment. In this paper, we instead

that belongs to the same family when training. In try to use the learning-to-explore method of meta-

this paper, with meta-reinforcement learning, we reinforcement learning to increase the generaliza-

investigate if an agent can master multiple task tion ability of a text agent so that it can master mul-

types and generalize to unseen environments. tiple skills and work on new environments, which

can potentially facilitate real-world human-robot

2.3 Meta-reinforcement Learning interaction applications.

Meta-learning is a machine learning paradigm that 3 Problem Formulation

tries to leverage common knowledge among tasks

to generalize to new data (Thrun and Pratt, 1998; 3.1 Text-based Game Preliminary

Vilalta and Drissi, 2002). Meta-reinforcement Given a language goal g, playing a text-based game

learning, in particular, augments Markov decision can be modeled as a partially observable Markov

processes with particular task labels, and tries to decision process (POMDP) (S, P, A, Ω, O, R, γ)

use shared experience of interacting with differ- (Côté et al., 2018), where S is the set of environ-

ent tasks to adapt to a new task efficiently (Liu mental states, P is the set of transition probabilities,

et al., 2020). In general, there are three ways of A is the set of actions, Ω is the set of observations,

conducting meta-reinforcement learning: memory- O is the set of observation probabilities, R is the

based methods, optimization-based methods, and reward function, and γ is the discount factor. If

3we input an action at to the environment, it will -. (() |/)

/ ()

transition from the current state st to a new state Task Identifier

st+1 with probability P (st+1 |st , at ), output an ob-

servation ot+1 based on the new state with proba- 12 (%& |!, +& ) Environment

! 12 (() |!, +& )

bility O(ot+1 |st+1 ), and get a reward R(g, at , st ) ()0

depending on the goal g, the current action at , Exploration Policy

%&

and the current state st . Given the initial environ- "# (%& |() , !, +& )

Execution Policy

ment state s0 and a goal g, we want to learn a pol-

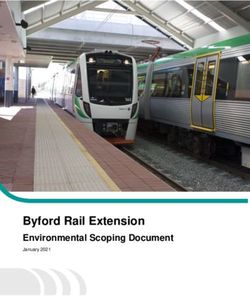

icy π(a|o, g) that can generate an action sequence Figure 1: Overview of our method, where g is the lan-

(a0 , a1 , . . . , aT ) to accomplishPthe task and obtain guage goal, µ denotes a task index, zµ and zµ0 are hid-

maximal discounted reward Tt=0 γ t R(g, at , st ). den feature vectors of a task, and at is the generated

In text-based games, o and a refer to text sentences. text action. The dotted line box is only used during

training. For simplicity, we did not draw the inputs of

3.2 Learning-to-Explore in Text-based roll-out trajectories.

Games

In meta-reinforcement learning, we consider a fam-

ily of POMDPs {(Sµ , Aµ , Ωµ , γ, Oµ , R, Pµ )} in- policy neural network πψ , a task identifier neural

dexed by µ, where µ ∈ M denotes a task and network qθ , and an exploration policy neural net-

M denotes the family of POMDPs or tasks. Here, work pφ , where ψ, θ, and φ denote parameters of

we consider that the reward function is indepen- the three neural networks, respectively. As shown

dent of tasks and can be applied for all POMDPs. in Figure 1, an exploration policy pφ is trained to

The tasks in the family have task-dependent set of generate a task-specific feature vector zµ0 , which is

states Sµ , actions Aµ , observations Ωµ , observa- then input to an execution policy πψ for generating

tion probabilities Oµ , and dynamics Pµ . Following actions. During training, a task identifier is used

the setting in (Liu et al., 2020), given a goal g, a to generate supervised signals zµ of zµ0 , and is not

task-based meta-reinforcement learning problem used during testing. Because of zµ0 , πψ can adapt

consists of sampling a task µ ∼ p(µ) and running a quickly and generalize well in a new task.

trial, where a trial contains an exploration episode, The πψ , qθ and pφ are all encoder-decoder

followed by several execution episodes. We also architectures. For πψ , it takes a goal

call a goal as a task type or a skill because it usu- g and a K-step roll-out trajectory τt =

ally constrains how an agent solves a task µ. We (o0 , at−K , ot−K+1 , . . . , at−1 , ot ) from time t−K+

call a POMDP without the reward function as an 1 to time t as inputs, and outputs the current ac-

environment, contextualized with the task speci- tion at , where o0 is obtained by executing the

fier µ, since it defines a game environment that an “look” action at the beginning. o0 is used be-

agent can interact with. A task denoted by µ then cause it is the only observation that lists the dif-

contains a task type, an environment, and a reward ferent areas of the room. qθ takes a task in-

function. Given a set of training tasks Mtrain , we dex µ as an input and outputs the task-specific

want to train a policy π(a|o, g) that can generalize feature zµ , which is only used during training.

well across a set of testing tasks Mtest . For training, pφ takes a goal and a K-step roll-out trajectory

we first fit a task-specific feature vector zµ0 using τt = (o0 , at−K , ot−K+1 , . . . , at−1 , ot ) as inputs

the exploration episode, and then use it to adapt to and outputs an estimated task-specific feature zµ0 .

the task quickly during execution. The task-specific

adaptation helps the policy π to recognize which Our goal is to make an execution policy

task type it works on and generalize well on a new π(at |g, τt ) generalizable across tasks. If we train

unseen environment. π using imitation learning, it is critical to have

enough training samples of {(g, τt , at )} following

4 Method some distributions to have good generalization per-

formance. But because of the combinatorial com-

We use neural networks to map observations plexity of τt , it is hard to obtain enough data of at .

to actions. Given the general setting of meta- Learning from conditional variational auto-encoder

reinforcement learning through learning-to-explore, (CVAE) (Sohn et al., 2015), we factorize π with a

our method contains three modules: an execution task-specific hidden variable z and use z to facili-

4tate the generation of at , namely, where ⊕ denotes the concatenation operation,

W ∈ Rde ×2de is a weight matrix, b ∈ Rde is a

Z bias vector, hRNN ∈ Rde , ht ∈ Rdh , de is the di-

π(at |g, τt ) = p(z|g, τt )π(at |z, g, τt )dz, mension of zµ , dh is the dimension of ht , GRU

z∈Z

(1) denotes a gated recurrent unit, and ReLU denotes

where Z ∼ N (zµ , σ 2 I) is assumed to follow a a ReLU activation function. Compared to selecting

Gaussian distribution, the aforementioned task- text actions from a set of valid actions, generat-

specific feature vector zµ is the mean vector and σ 2 ing text actions word by word is more likely to

is the variance. During testing, we can then gener- explore multiple possibilities for performing ac-

ate actions by first generating a task-specific hidden tions to achieve higher rewards (Yao et al., 2020).

variable z with p(z|g, τt ) and then generating the However, Shridhar et al. (2021) show that when

action with π(at |z, g, τt ). Because z encodes task- trained from a sparse reinforcement learning sig-

specific features, it helps π generate more proper nal in ALFWorld, generation-based methods are

actions for the current task µ. hard to get good performance. Because it is rela-

Optimizing (1) amounts to maximise evidence tively easy to get demonstrations from a text-based

lower bound (ELBO) (Sohn et al., 2015): game, similar to (Shridhar et al., 2021), the imita-

tion learning method DAgger (Ross et al., 2011) is

used to train a generation-based execution policy

ELBO(at , g, τt ) = Eq(z|at ,g,τt ) [log π(at |z, g, τt )]

πψ . In this case, optimizing the execution policy

− KL(q(z|at , g, τt ))||p(z|g, τt )), network is to optimize the first term of (3).

(2)

where q(z|at , g, τt )) is the approximate posterior 4.2 Task Identifier

probability of z and p(z|g, τt ) is the prior proba- We use a task identifier qθ (zµ |µ) to approximate

bility of z. To implement (2), we use the execu- the approximate posterior q(z|at , g, τt ). The task

tion policy network πψ (at |zµ , τt ), the task identi- identifier is used to generate task-specific features

fier qθ (zµ |µ), and the exploration policy network during training. We implement it as a simple two-

pφ (zµ0 |g, τt ) to approximate the execution policy, layer fully connected network as:

the posterior, and the prior, respectively, and as-

sume that both qθ (zµ |µ) and pφ (zµ0 |g, τt ) are Gaus-

sian. It is easy to show that the new objective is: zµ = ReLU(W2 ReLU(W1 e(µ) + b1 ) + b2 ),

||zµ − zµ0 ||22 where e(µ) is the one-hot encoding of the task

Eqθ (zµ |at ,g,τt ) [log πψ (at |zµ , g, τt )] − , index µ, W2 ∈ Rde ×de , W1 ∈ Rde ×N , b1 , b2 ∈

2σ 2

(3) Rde , de is the dimension of the task embedding zµ ,

2

where we assume σ is the same for both the poste- N is the number of training game environments.

rior and prior. In the following, we introduce the

details of the execution policy network, the task 4.3 Exploration Policy

identifier, and the exploration policy network. We use an exploration policy network pφ (zµ0 |g, τt )

to approximate the prior p(z|g, τt ). The explo-

4.1 Execution Policy

ration policy needs to explore the environment to

The architecture of the execution policy network gather task-specific trajectory within T exp . Be-

is similar to the policy network in (Shridhar et al., cause we train the model end-to-end, it will opti-

2021). In particular, a QANet (Yu et al., 2018) mize the agent to explore the environment in this

is used to first encode g, τt as a recurrent hidden fixed number of steps, which also saves time. The

state ht and then decode ht to get at . Different architecture is similar to the execution policy net-

from (Shridhar et al., 2021), during encoding, we work. An encoder takes g, τt as inputs and gener-

concatenate the initial encoding hRNN and zµ as an ates a hidden state ht , and the hidden state is then

input to obtain ht , namely, used to obtain zµ0 via a fully connected layer:

hRNN = Encode(g, τt ), zµ0 = ReLU(Wht + b),

ht = GRU(ReLU(W(hRNN ⊕ zµ ) + b), ht−1 ), where W ∈ Rde ×dh , b ∈ Rde .

5Algorithm 1: The training procedure DQN is an off-policy method that can leverage

Input: training tasks Mtrain replay buffer to deal with the sample efficiency

Output: execution policies πψ , exploration policy pφ problem. Here, we use DQN for its simplicity, but

initialize hyper-parameters Mstep , B, T exp , T exec it is possible to use other more sophisticated off-

initialize πψ , pφ , and qφ policy methods. Because it is generally difficult

to train a generation-based text agent with only

i←0

while True do the sparse rewards provided by the environment,

if i > Mstep then we adopt the choice-based method to train the text

break

end agent. We empirically turn the reward function to

be dense by adding the second term in Eq(3) to the

randomly sample B games MB from Mtrain reward function: Rnew = 0.5 × Rold + 0.5 × ||zµ −

// Evaluate the task identifier zµ0 ||22 to encourage per-step optimization, where

calculate zµ with qφ Rold is the reward provided by the environment.

// Exploration The training procedure of the proposed method,

execute “look” and get o0 as presented in Algorithm 1, runs as follows: first,

for t=1:T exp do

at ← pφ (at |g, τt )

we randomly samples a batch of tasks MB from

compose τt by adding at and ot Mtrain ; second, with task indices, we evaluates qθ

evaluate MB with at and get ot+1 to obtain the task-specific features zµ ; third, the

zµ0 ← pφ (zµ0 |g, τt )

calculate (4) and update exploration agent explores MB by taking actions

end with pφ , and updates pφ according to Eq(4) through

a DQN learning. zµ0 is also obtained by pφ during

// Execution

execute “look” and get o0 exploring; fourth, the execution agent takes actions

for t=1:T exec do with πψ and we update the likelihood (the first

at ← πψ (at |zµ , g, τt )

compose τt by adding at and ot

term in Eq(3)) with demonstrations of the training

get demonstrations from MB data. The end-to-end training runs iteratively up to

calculate likelihood of at using a maximal step Mstep . In Algorithm 1, B denotes

demonstrations and update

if done then the sampling size of tasks, T exp is the step number

break of exploration, and T exec is the step number of

end execution.

end

i←i+B 5 Experiments

end

To demonstrate the generalization ability of our

meta-reinforcement learning algorithm across

For the exploration policy, in addition to obtain tasks, we conducted a set of experiments with the

zµ0 ,we also decode ht to get an exploration ac- ALFWorld platform (Shridhar et al., 2021). Text

tion at : pφ (at |g, τt ). In other words, we adopt environments of ALFWorld are aligned with 3D

a multi-task learning method to train the explo- simulated environments from ALFRED (Shridhar

ration network. In this way, the exploration policy et al., 2020), which makes ALFWorld a good proxy

also learns how to solve the problem, which could for our human-robot interaction scenario.

help the learning of zµ0 . We optimize the following

multi-task objective: 5.1 Dataset

The ALFWorld dataset (Shridhar et al., 2021) con-

L = Lµ + Ldqn , (4)

tains six task types, including pick & place, ex-

where Lµ is the task embedding loss and Ldqn is amine in light, clean & place, heat & place, cool

the DQN loss. In particular, Lµ is the second term & place, and pick two & place. While all the task

in (3), except that we do not consider the coefficient types require some basic common sub-tasks such as

1/2σ 2 . For the DQN loss Ldqn , we use the deep finding an object, picking it up, and placing it to a

Q-learning (DQN) method to train the exploration particular place; some task types require more com-

policy. Unlike the execution policy network, we do plex interactions with certain objects (e.g., heating

not use demonstrations here because we want the an object with a heat source). Each task type con-

policy network to explore the environment more. tains a set of training environments, and two sets of

6test environments. The first test set (seen) contains being evaluated, if an agent can finish S tasks, then

environments that are different, but sampled from the success rate of the agent is sr = |MStest | . Similar

the same game distributions as the training set (e.g., to (Shridhar et al., 2021), we evaluate three times

same rooms but with different scene layouts). The on the testing data and report averaged scores.

second test set (unseen) contains environments that

ALFWorld Ours

do not appear in the training set (i.e., unseen rooms task type seen unseen seen unseen

with different receptacles and scene layouts). The pick & place 46.7 34.7 51.4 50.0

statistics of the dataset is shown in Table 1. The examine in light 25.7 22.2 38.5 22.2

clean & place 44.4 39.8 48.1 54.8

task types pick & place and pick two & place have heat & place 58.3 44.9 50.0 56.5

more training environments than others. Our gener- cool & place 38.7 47.6 44.0 76.2

alization goal is to train a text agent on the training pick two & place 23.6 27.4 12.5 23.5

all tasks 39.3 37.6 41.4 49.3

set of all tasks simultaneously, and during testing,

given any task type, the agent can have good perfor- Table 2: Experiment results of the generalization ability

mance on both seen and unseen environments, i.e., on each individual task type and the union of them.

the agent masters all the six task types and general-

izes well on both seen and unseen environments.

5.4 Results and Analysis

task type train seen unseen

pick & place 790 35 24

We show the performance of our model on both

examine in light 308 13 18 seen and unseen test sets in Table 2, compared

clean & place 650 27 31 with numbers computed using the code and model

heat & place 459 16 23 checkpoint provided by ALFWorld (Shridhar et al.,

cool & place 533 25 21

pick two & place 813 24 17 2021). We observe that in most experiment set-

all tasks 3553 140 134 tings, our method outperforms ALFWorld. This is

especially obvious in the unseen setting, where the

Table 1: The statistics of the ALFWorld dataset.

testing environments contain unseen rooms with

different receptacles and scene layouts, our method

outperforms ALFWorld by a significant margin.

5.2 Baseline and Implementation Details

This suggests that the task-specific features gener-

We compare our method (denoted as Ours) with the ated by our agent indeed enable the agent learning

state-of-the-art generation-based agent (denoted as from a wide spectrum of task types. The larger

ALFWorld). Transfer learning is another way to performance gap between our method and ALF-

improve the generalization ability of an agent, but World on the unseen test set (e.g., 49.3 vs 37.6

it usually considers transferring knowledge from when testing on the union of all task types) further

a source task to a target task without the setting advocates that the task-specific features generated

of multiple tasks (Zhuang et al., 2021). We leave by our method are useful when tackling with com-

it as a future direction to investigate. We adopt pletely unfamiliar environments.

the implementation of ALFWorld from the origi- On the other hand, we observe that our method’s

nal paper (Shridhar et al., 2021) and use their pre- performance on the pick two & place tasks are

trained model for conducting all comparison ex- lower than ALFWorld. As mentioned in (Shrid-

periments. For the hyper-parameters in Algorithm har et al., 2021), the pick two & place task type is

1, T exp is set as 10 empirically, Mstep = 500, 000 unique and is considerably more difficult compared

(50K), B = 10, T exec = 50 are kept as the default to other tasks, in the sense that it is the only task

values of ALFWorld. The trajectory length K is set type which requires an agent to grasp and operate

as 3 empirically. Following ALFWorld (Shridhar more than one object. Intuitively, this aligns with

et al., 2021), we use beam search with width 10 for the common sense that a person who has learned

decoding. We ran all experiments on a server with to ride all kinds of bicycles can easily ride a new

Intel(R) Xeon(R) Silver 4216 CPU @ 2.10GHz, bicycle, but does not necessarily know how to drive

32G Memory, Nvidia GPU 2080Ti, Ubuntu 16.04. a car. We suspect that the decrease in performance

may be caused by the agent being overfitting to

5.3 Evaluation Metric the majority of training data in which only single

We use success rate as the evaluation metric for our object is picked up. Namely, the current developed

experiment. In particular, for |Mtest | text games method could work better on scenarios where a

7text-based game has the same difficulty level. In 6 Conclusion

other words, the current developed method can

We study the generalization issue of text-based

only work on scenarios where a text-based game

games, and develop a meta-reinforcement learn-

has the same difficulty level as the majority of train-

ing method with a learning-to-explore approach. In

ing games, and it is still hard to generalize to tasks

particular, we first use an exploration policy net-

with a higher difficulty level. As a future direc-

work to learn a task-specific feature vector, and

tion, we plan to investigate the explainability of

use this feature vector to help another execution

why an end-to-end trained agent works on certain

policy network adapt to a new task. To train the

tasks through counterfactuals (Pearl and Macken-

exploration and execution policy network, we use a

zie, 2018), and improve our method to specifically

task identifier to embed a task index, and maximize

tackle such problems where a certain dimension of

the likelihood of the execution policy network end-

task representations is significantly different from

to-end. To demonstrate the generalization ability

and unbalanced in the majority of training data.

of our method, we conducted a set of experiments

Finally, compared to a dedicated model trained on the publicly available testbed ALFWorld. In

specifically on one task type (Table 2 left in (Shrid- general, we find that our method has better gener-

har et al., 2021)), the performance of our method alization performance on a wide spectrum of task

is generally 10% ∼ 20% behind, and there is still types and environments. We leave the investiga-

a lot of room for improvement to achieve human- tion of explanability, the unbalance problem of task

level intelligence. However, our method shows types, and the training speed as the future research

that learning task-specific features through meta- directions.

reinforcement learning help an agent generalize

across a wide spectrum of task types, which is vi- Acknowledgments

tal towards real-world applications of human-robot

interaction. The authors thank Mohit Shridhar for clarifying

the experiment section in ALFWorld, Xingdi (Eric)

Yuan and Marc-Alexandre Côté for their insightful

5.5 Discussion discussions and editing of the initial version of this

paper, as well as constructive suggestions from all

To investigate whether different task types help im-

anonymous reviewers. Zhenjie Zhao is supported

prove performance of each other, we experimented

by the Startup Foundation for Introducing Talent of

with a setting where an agent is trained on the six

NUIST. This work is also supported by the Hong

task types separately with our method. The results

Kong General Research Fund (GRF) with grant No.

are shown in Table 3. Compared to the setting

16204420.

where the agent is trained on the union of all task

types (Table 2), the performance shows a signif-

icant drop in most of the task types. This trend References

is especially clear in the pick two & place tasks.

Ashutosh Adhikari, Xingdi Yuan, Marc-Alexandre

When trained solely on this type of tasks, our agent Côté, Mikuláš Zelinka, Marc-Antoine Rondeau, Ro-

produces a zero success rate. This suggests that main Laroche, Pascal Poupart, Jian Tang, Adam

for a meta-reinforcement learning based method Trischler, and Will Hamilton. 2020. Learning dy-

like ours, it is essential to have a diverse set of task namic belief graphs to generalize on text-based

games. In Advances in Neural Information Process-

types as well as a large enough training dataset. ing Systems, volume 33, pages 3045–3057. Curran

Associates, Inc.

task type seen unseen

pick & place 57.1 25.0 Leonard Adolphs and Thomas Hofmann. 2020.

examine in light 23.1 11.1 LeDeepChef deep reinforcement learning agent

clean & place 51.9 58.1 for families of text-based games. Proceedings

heat & place 31.3 30.4 of the AAAI Conference on Artificial Intelligence,

cool & place 12.0 9.5 34(05):7342–7349.

pick two & place 0.0 0.0

Prithviraj Ammanabrolu and Mark Riedl. 2019. Play-

Table 3: Testing results of training a separate agent on ing text-adventure games with graph-based deep re-

each of the six task types. inforcement learning. In Proceedings of the 2019

Conference of the North American Chapter of the

Association for Computational Linguistics: Human

8Language Technologies, Volume 1 (Long and Short Volodymyr Mnih, Koray Kavukcuoglu, David Silver,

Papers), pages 3557–3565, Minneapolis, Minnesota. Andrei A. Rusu, Joel Veness, Marc G. Bellemare,

Association for Computational Linguistics. Alex Graves, Martin Riedmiller, Andreas K. Fid-

jeland, Georg Ostrovski, Stig Petersen, Charles

Yonatan Bisk, K. Shih, Yejin Choi, and Daniel Marcu. Beattie, Amir Sadik, Ioannis Antonoglou, Helen

2018. Learning interpretable spatial operations in a King, Dharshan Kumaran, Daan Wierstra, Shane

rich 3D blocks world. In Proceedings of the AAAI Legg, and Demis Hassabis. 2015. Human-level con-

Conference on Artificial Intelligence (AAAI). trol through deep reinforcement learning. Nature,

518(7540):529–533.

Valts Blukis, Ross A. Knepper, and Yoav Artzi. 2020.

Few-shot object grounding and mapping for natural Keerthiram Murugesan, Mattia Atzeni, Pavan Kapani-

language robot instruction following. In Proceed- pathi, Pushkar Shukla, Sadhana Kumaravel, Gerald

ings of the Conference on Robot Learning. Tesauro, Kartik Talamadupula, Mrinmaya Sachan,

and Murray Campbell. 2021. Text-based RL agents

with commonsense knowledge: New challenges, en-

Marc-Alexandre Côté, Ákos Kádár, Xingdi Yuan, Ben vironments and baselines. Proceedings of the AAAI

Kybartas, Tavian Barnes, Emery Fine, J. Moore, Conference on Artificial Intelligence, 35(10):9018–

Matthew J. Hausknecht, Layla El Asri, Mah- 9027.

moud Adada, Wendy Tay, and Adam Trischler.

2018. Textworld: A learning environment for text- Karthik Narasimhan, Tejas Kulkarni, and Regina Barzi-

based games. In Computer Games Workshop at lay. 2015. Language understanding for text-based

ICML/IJCAI 2018. games using deep reinforcement learning. In Pro-

ceedings of the 2015 Conference on Empirical Meth-

Matt Deitke, Winson Han, Alvaro Herrasti, Anirud- ods in Natural Language Processing, pages 1–11,

dha Kembhavi, Eric Kolve, Roozbeh Mottaghi, Jordi Lisbon, Portugal. Association for Computational

Salvador, Dustin Schwenk, Eli VanderBilt, Matthew Linguistics.

Wallingford, Luca Weihs, Mark Yatskar, and Ali

Farhadi. 2020. RoboTHOR: An open simulation-to- Judea Pearl and Dana Mackenzie. 2018. The book of

real embodied AI platform. In Proceedings of the why. Basic Books, New York.

IEEE/CVF Conference on Computer Vision and Pat-

Kate Rakelly, Aurick Zhou, Chelsea Finn, Sergey

tern Recognition (CVPR).

Levine, and Deirdre Quillen. 2019. Efficient off-

policy meta-reinforcement learning via probabilistic

Yan Duan, John Schulman, Xi Chen, Peter L Bartlett, context variables. In Proceedings of the 36th In-

Ilya Sutskever, and Pieter Abbeel. 2016. RL2 : Fast ternational Conference on Machine Learning, vol-

reinforcement learning via slow reinforcement learn- ume 97 of Proceedings of Machine Learning Re-

ing. arXiv:1611.02779. search, pages 5331–5340. PMLR.

Chelsea Finn, Pieter Abbeel, and Sergey Levine. 2017. Stephane Ross, Geoffrey Gordon, and Drew Bagnell.

Model-agnostic meta-learning for fast adaptation of 2011. A reduction of imitation learning and struc-

deep networks. In Proceedings of the 34th In- tured prediction to no-regret online learning. In Pro-

ternational Conference on Machine Learning, vol- ceedings of the Fourteenth International Conference

ume 70 of Proceedings of Machine Learning Re- on Artificial Intelligence and Statistics, volume 15 of

search, pages 1126–1135. PMLR. Proceedings of Machine Learning Research, pages

627–635, Fort Lauderdale, FL, USA. PMLR.

Ji He, Jianshu Chen, Xiaodong He, Jianfeng Gao, Li-

hong Li, Li Deng, and Mari Ostendorf. 2016. Deep Manolis Savva, Abhishek Kadian, Oleksandr

reinforcement learning with a natural language ac- Maksymets, Yili Zhao, Erik Wijmans, Bha-

tion space. In Proceedings of the 54th Annual Meet- vana Jain, Julian Straub, Jia Liu, Vladlen Koltun,

ing of the Association for Computational Linguistics, Jitendra Malik, Devi Parikh, and Dhruv Batra. 2019.

pages 1621–1630, Berlin, Germany. Association for Habitat: A platform for embodied AI research.

Computational Linguistics. In 2019 IEEE/CVF International Conference on

Computer Vision (ICCV), pages 9338–9346.

Infocom. 1980. Zork I. http://ifdb.tads.org/viewgame? Matthias Scheutz, Rehj Cantrell, and Paul Schermer-

id=0dbnusxunq7fw5ro. horn. 2011. Toward humanlike task-based dialogue

processing for human robot interaction. AI Maga-

Michael Janner, Karthik Narasimhan, and Regina zine, 32(4):77–84.

Barzilay. 2018. Representation learning for

grounded spatial reasoning. Transactions of the As- Mohit Shridhar, Jesse Thomason, Daniel Gordon,

sociation for Computational Linguistics, 6:49–61. Yonatan Bisk, Winson Han, Roozbeh Mottaghi,

Luke Zettlemoyer, and Dieter Fox. 2020. ALFRED:

Evan Zheran Liu, Aditi Raghunathan, Percy Liang, A benchmark for interpreting grounded instructions

and Chelsea Finn. 2020. Explore then exe- for everyday tasks. In Proceedings of the IEEE/CVF

cute: Adapting without rewards via factorized meta- Conference on Computer Vision and Pattern Recog-

reinforcement learning. arXiv:2008.02790. nition (CVPR), pages 10740–10749.

9Mohit Shridhar, Xingdi Yuan, Marc-Alexandre Côté,

Yonatan Bisk, Adam Trischler, and Matthew

Hausknecht. 2021. ALFWorld: Aligning Text and

Embodied Environments for Interactive Learning.

In Proceedings of the International Conference on

Learning Representations (ICLR).

Kihyuk Sohn, Honglak Lee, and Xinchen Yan. 2015.

Learning structured output representation using

deep conditional generative models. In Advances in

Neural Information Processing Systems, volume 28.

Curran Associates, Inc.

Richard S Sutton and Andrew G Barto. 2018. Rein-

forcement learning: An introduction. MIT press.

Sebastian Thrun and Lorien Pratt, editors. 1998. Learn-

ing to learn. Kluwer Academic Publishers.

Ricardo Vilalta and Youssef Drissi. 2002. A perspec-

tive view and survey of meta-learning. Artificial In-

telligence Review, 18(2):77–95.

Mitchell Wortsman, Kiana Ehsani, Mohammad Raste-

gari, Ali Farhadi, and Roozbeh Mottaghi. 2019.

Learning to learn how to learn: Self-adaptive visual

navigation using meta-learning. In Proceedings of

the IEEE/CVF Conference on Computer Vision and

Pattern Recognition (CVPR), pages 6750–6759.

Fei Xia, Amir R. Zamir, Zhiyang He, Alexander Sax,

Jitendra Malik, and Silvio Savarese. 2018. Gibson

env: Real-world perception for embodied agents.

In Proceedings of the IEEE Conference on Com-

puter Vision and Pattern Recognition (CVPR), pages

9068–9079.

Shunyu Yao, Rohan Rao, Matthew Hausknecht, and

Karthik Narasimhan. 2020. Keep CALM and ex-

plore: Language models for action generation in

text-based games. In Proceedings of the 2020 Con-

ference on Empirical Methods in Natural Language

Processing (EMNLP), pages 8736–8754, Online. As-

sociation for Computational Linguistics.

Adams Wei Yu, David Dohan, Quoc Le, Thang Luong,

Rui Zhao, and Kai Chen. 2018. Fast and accurate

reading comprehension by combining self-attention

and convolution. In International Conference on

Learning Representations.

Fuzhen Zhuang, Zhiyuan Qi, Keyu Duan, Dongbo Xi,

Yongchun Zhu, Hengshu Zhu, Hui Xiong, and Qing

He. 2021. A comprehensive survey on transfer learn-

ing. Proceedings of the IEEE, 109(1):43–76.

10You can also read