CONNECTING LATENT RELATIONSHIPS OVER HETEROGENEOUS ATTRIBUTED NETWORK FOR RECOMMENDATION

Connecting Latent ReLationships over Heterogeneous Attributed Network

for Recommendation

Ziheng Duan1, 2 Yueyang Wang2 *

Weihao Ye1 Zixuan Feng2 Qilin Fan1 Xiuhua Li1

1 School of Big Data and Software Engineering, Chongqing University, Chongqing 401331, China

2 Department of Control Science and Technology, Zhejiang University, Hangzhou 310027, China

duanziheng@zju.edu.cn, yueyangw@cqu.edu.cn

yeweihao@cqu.edu.cnn, fengzixuan121@gmail.com, fanqilin@cqu.edu.cn, lixiuhua1099@gmail.com

arXiv:2103.05749v1 [cs.SI] 9 Mar 2021

Abstract As the data describing users and items in the recom-

mendation system can be naturally organized as a graph

Recently, deep neural network models for graph- structure. The graph-based deep learning methods (Kipf and

structured data have been demonstrating to be influen-

Welling 2016) have been applied in recommendation fields

tial in recommendation systems. Graph Neural Network

(GNN), which can generate high-quality embeddings (Fan et al. 2019a) and alleviate the enormous amount and the

by capturing graph-structured information, is conve- sparsity problem to some extent. Meanwhile, heterogeneous

nient for the recommendation. However, most existing attributed network (HAN) (Wang et al. 2019b), consisting

GNN models mainly focus on the homogeneous graph. of different types of nodes representing objects, edges de-

They cannot characterize heterogeneous and complex noting the relationships, and various attributes, is a unique

data in the recommendation system. Meanwhile, it is form of graph and is powerful to model heterogeneous and

challenging to develop effective methods to mine the complex data in the recommendation system (Zhu et al.

heterogeneity and latent correlations in the graph. In 2021). Therefore, designing a graph-based recommendation

this paper, we adopt Heterogeneous Attributed Network method to mine the information in HAN is a promising di-

(HAN), which involves different node types as well as

rection (Zhong et al. 2020).

rich node attributes, to model data in the recommen-

dation system. Furthermore, we propose a novel graph Recently, Graph Neural Network (GNN) (Defferrard,

neural network-based model to deal with HAN for Rec- Bresson, and Vandergheynst 2016), a kind of deep learning-

ommendation, called HANRec. In particular, we design based method for processing graphs, has become a widely

a component connecting potential neighbors to explore used graph analysis method due to its high performance and

the influence between neighbors and provide two dif- interpretability. GNN based recommendation methods have

ferent strategies with the attention mechanism to aggre- proved to be successful to some extent because they could

gate neighbors’ information. The experimental results

simultaneously encode the graph structure and the attributes

on two real-world datasets prove that HANRec outper-

forms other state-of-the-arts methods1 . of nodes (Derr, Ma, and Tang 2018). However, the advances

of GNNs are primarily concentrated on homogenous graphs,

so they still encounter limitations to utilize rich information

Introduction in HAN. The major challenge is caused by the heterogeneity

of the recommendation graph. The recommendation meth-

As it becomes more convenient for users to generate data

ods should be able to measure similarities between users and

than before, mass data appeared on the Internet are no

items from various aspects (Fan et al. 2019a). On the one

longer with simple structure but usually with more complex

hand, different types of nodes have various attributes that

types and structures. This makes the recommendation sys-

cannot be directly applied due to the different dimensions of

tem (Ricci, Rokach, and Shapira 2011), which helps users

node attributes (Wang et al. 2019b). For example, in movie

discover items of interest, attract widespread attention, and

rating graphs, a ”user” is associated with attributes like inter-

face more challenges (Lekakos and Caravelas 2008). Tra-

ests and the number of watched movies, while a ”movie” has

ditional recommendation methods, such as matrix factor-

attributes like genres and years. On the other hand, the influ-

ization (Ma et al. 2008), are designed to explore the user-

ence of distinct relationships is different, which should not

item interactions and generate an efficient recommendation.

be treated equally (Chen et al. 2020). For instance, the cor-

However, because of calculating each pair of interactions,

relation between ”user-Rating-movie” is intuitively stronger

they have difficulty processing sparse and high-volume data.

than ”movie-SameGenres-movie.” Hence, how to deal with

Furthermore, it is more challenging to deal with heteroge-

different feature spaces of various objects’ attributes and

neous and complex data derived from different sources (Wu

how to make full use of heterogeneity to distinguish the im-

et al. 2015; Kefalas, Symeonidis, and Manolopoulos 2018).

pact of different types of entities are challenging.

* Corresponding Author. Furthermore, GNNs are based on the connected neigh-

1

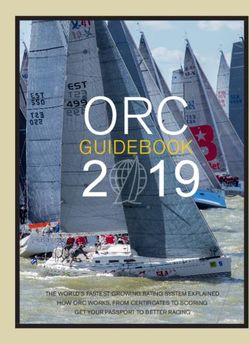

Code will be available when this paper is published. bor aggregation strategy to update the entity’s representationUser 1, id: 1

[Figure 1 diagram: User 1 (id: 1) rates Movie 2 (id: 216, Billy Madison (1995), Comedy) 5, Movie 1 (id: 1, Toy Story (1995), Adventure|Animation|Children|Comedy|Fantasy) 4, and Movie 3 (id: 3176, Talented Mr. Ripley, The (1999), Drama|Mystery|Thriller) 1. User 2 (id: 5) rates Movie 1 4. After connecting, the ratings of User 2 for Movie 2 ("High Rating?") and Movie 3 ("Low Rating?") are to be predicted.]

Figure 1: An example of connecting users and movies from the real-world MovieLens dataset. The solid black lines represent the rating scores that users give to movies. The blue dashed line indicates the potential relationship between users, and the red dashed lines indicate the potential relationships between movies, both found through the connecting module. The black dotted lines are the rating scores of user 2 for movies 2 and 3, which we want to predict.

(Zhou et al. 2018). However, in reality some potential relationships between entities are not directly connected but implicit (Wu et al. 2020). For example, as shown in Fig. 1, both user 1 and user 2 give movie 1 the same rating, 4. That partly reflects that the interests of the two users are similar. Analogously, movie 1 and movie 2 share a genre. This suggests that there is some potential relationship between the two movies. Nevertheless, these implicit relationships are hardly captured by GNNs. Meanwhile, the rating level can reflect the preferences of users. For instance, user 1 rates movie 2 as 5 but movie 3 as 1. This may suggest that user 2, whose interests resemble those of user 1, also prefers movie 2 to movie 3. Therefore, how to explicitly model entities with potential relationships so that they provide references for each other's recommendations, and how to distinguish the rating weights, are significant questions.

To better overcome the challenges mentioned above, we propose a neural network model to deal with Heterogeneous Attributed Network for Recommendation, called HANRec, which can make full use of the heterogeneity of graphs and encode the latent relationships more deeply. Specifically, we first design a component connecting potential neighbors to explore the influence between neighbors with potential connections. The connecting component also assigns different weights to users' rating information and integrates them into the potential relationships. Next, we design homogeneous aggregation and heterogeneous aggregation strategies to aggregate feature information of both connected neighbors and potential neighbors. It is worth mentioning that we introduce the attention mechanism (Vaswani et al. 2017) to measure the different impacts of heterogeneous nodes. The learned parameters in the two aggregations are different so that they can model different patterns of the heterogeneous graph structure. Finally, we use the entities' high-quality embeddings to make corresponding recommendations through the recommendation generation component. To summarize, we make the following contributions:

• We propose a new method to connect the users, thus providing a potential reference for recommendations that existing methods usually miss.

• We present a novel framework for recommendation that makes full use of the heterogeneity in graphs, and we design strategies for gathering information for entities of different types.

• We design an attention mechanism to characterize the different influences among entities.

• Our model outperforms previous state-of-the-art methods in two recommendation tasks.

Related Work

This section briefly introduces some related work on recommendation algorithms, covering traditional methods and deep learning methods.

In the past years, the use of social connections for recommendation has attracted great attention (Tang, Aggarwal, and Liu 2016; Yang et al. 2017). Traditional methods for recommendation problems mainly include content-based recommendation algorithms and collaborative filtering algorithms. The basic idea of Content-Based Recommendation (CB) (Pazzani and Billsus 2007) is to extract product features from known user preference records and recommend to the user the product that is most similar to his/her known preferences. The recommendation process of CB can be divided into three steps: Item Representation, Profile Learning, and Recommendation Generation. A further complication in generating a product representation is that the information for the product is unstructured. The most typical unstructured product information is text. The weight of words can be calculated by using Term Frequency (TF) (Ramos 2003) and Inverse Document Frequency (IDF) (Ramos 2003) to vectorize text. Feature learning is the process of modeling user interests. The training process of all user interest models is a supervised classification problem that can be solved using traditional machine learning algorithms, for example, the decision tree algorithm (Safavian and Landgrebe 1991), the k-nearest neighbor algorithm (Keller, Gray, and Givens 1985), the Naive Bayes algorithm (Vazquez et al. 2003), the support vector machine (Baesens et al. 2000), etc. CB uses different ways to generate recommendations depending on the recommendation task scenario. This strategy works well in situations where the product's attributes can be easily identified. Nevertheless, it is easy to over-specialize the user's interest, and it is challenging to find out the user's potential interest. The idea of Collaborative Filtering (CF) (Herlocker et al. 2004) is to find some similarity through the behaviors of groups and make recommendations for users based on this similarity. The recommendation process is: establishing a user behavior matrix, calculating similarity, and generating recommendations. Collaborative filtering based on matrix decomposition decomposes the user rating matrix into a user matrix U and a product matrix V. It then recombines U and V to get a new user rating matrix to predict unknown ratings. Matrix decomposition includes the Funk-SVD model (Koren, Bell, and Volinsky 2009), the PMF model (Mnih and Salakhutdinov 2007), etc.

Deep learning, as an emerging method, also has many achievements in learning on graph-structured data (Bronstein et al. 2017). The purpose of graph representation learning is to find a mapping function that can transform each node in the graph into a low-dimensional latent factor. The low-dimensional latent factors are more efficient in calculations and can also filter some noise. Based on the nodes' latent factors in the graph, machine learning algorithms can complete downstream tasks more efficiently, such as recommendation tasks and link prediction tasks. Graphs can easily represent data on the Internet, making graph representation learning more and more popular. Graph representation learning includes random walk-based methods and graph neural network-based methods. The random walk-based methods sample paths in the graph, and the structure information near the nodes can be obtained through these random paths. For example, DeepWalk, proposed by Perozzi et al. (Perozzi, Al-Rfou, and Skiena 2014a), applies the ideas of Natural Language Processing (NLP) to network embedding. DeepWalk treats the relationship between the user and the product as a graph, generating a series of random walks. The user vector and the product vector are represented in a continuous space of lower dimension. In this graph representation space, traditional machine learning methods, such as Logistic Regression (LR), can predict users' ratings of products to obtain more accurate results. Collaborative Deep Learning (CDL), proposed by Wang et al. (Wang, Wang, and Yeung 2015), jointly deals with the representation of product content information and users' rating matrix for products. CDL relies on user reviews of the product and information about the product itself.

Some researchers have also used graph neural networks (GNNs) to complete recommendation tasks in recent years. The Graph Convolutional Network (GCN) (Kipf and Welling 2016) is one of the representative methods. GCN uses convolution operators on the graph to iteratively aggregate the neighbor embeddings of nodes. It utilizes the Laplacian matrix of the graph to implement the convolution operation on the topological graph. In multi-layer GCNs, each convolutional layer processes the graph's first-order neighborhood information. Superimposing multiple convolutional layers can realize information transfer over the multi-level neighborhood. On this basis, some GNN-based frameworks for recommendation tasks have been proposed. According to whether the order of items is considered, recommendation systems can be divided into general recommendation tasks and sequential recommendation tasks (Adomavicius and Tuzhilin 2005; Kang and McAuley 2018). General recommendation considers users to have static interest preferences and models the degree of matching between users and items based on implicit or explicit feedback. GNN can capture user-item interactions and learn user and item representations. Sequential recommendation captures the sequential pattern in the item sequence and recommends the next item of interest to the user. There are mainly methods based on the Markov chain (MC) (Rendle, Freudenthaler, and Schmidt-Thieme 2010; He and McAuley 2016), based on RNN (Hidasi and Karatzoglou 2018; Tan, Xu, and Liu 2016), and based on attention and self-attention mechanisms (Liu et al. 2018; Li et al. 2017). With the advent of GNN, some works convert the item sequence into a graph structure and use GNN to capture the transition pattern. Since this paper focuses on the former, we mainly introduce work on general recommendation.

General recommendation uses user-item interaction to model user preferences. For example, GC-MC (Berg, Kipf, and Welling 2017) deals with rating score prediction, and the interaction data is represented as a bipartite graph with labeled edges. GC-MC only uses interacted items to model user nodes and ignores the user's representation. So the limitations of GC-MC are: 1) it uses mean-pooling to aggregate neighbor nodes, assuming that different neighbors are equally important; 2) it only considers first-order neighbors and cannot make full use of the graph structure to spread information. Online social networks have also developed rapidly in recent years. Recommendation algorithms that use neighbors' preferences to portray users' profiles have been proposed, which can better solve the problem of data sparsity and generate high-quality embeddings for users. These methods use different strategies for influence modeling or preference integration. For instance, DiffNet (Wu et al. 2019) models user preferences based on users' social relationships and historical behaviors, and it uses the GraphSAGE framework to model the social diffusion process. DiffNet uses mean-pooling to aggregate friend representations and mean-pooling to aggregate historical item representations to obtain the user's representation in the item space. DiffNet can use GNN to capture a more in-depth social diffusion process. However, this model's limitations include: 1) the assumption of the same influence is not suitable for real scenarios; 2) the model ignores the representation of the items, which could be enhanced by the interacting users. GraphRec, proposed by Fan et al. (Fan et al. 2019b), learns the low-dimensional representation of users and products through graph neural networks. GraphRec uses the mean square error of predicted ratings to guide the neural network's parameter optimization and introduces users' social relationships and attention mechanisms to describe user preferences better. With the emergence of heterogeneous and complex data in the recommendation network, some work has introduced knowledge graphs (KG) into recommendation algorithms. The challenge of applying the KG to recommendation algorithms comes from the complex graph structure and the multiple types of entities and relationships in the KG. Previous work used KG embedding to learn the representation of entities and relationships, or designed meta-paths to aggregate neighbor information. Recent work uses GNN to capture item-item relationships. For example, KGCN (Wang et al. 2019a) uses a user-specific relation-aware GNN to aggregate neighbors' entity information and uses knowledge graphs to obtain semantically aware item representations. Different users may place different importance on different relationships. Therefore, the model weights neighbors according to the relationship and the user, which can characterize the semantic information in the KG and the user's interest in a specific relationship. The item's overall representation is distinguished for different users, and semantic information in the KG is introduced. Finally, predictions are made based on user preferences and item representation. IntentGC (Zhao et al. 2019) reconstructs the user-to-user relationship and the item-to-item relationship based on the multi-entity knowledge graph, greatly simplifying the graph structure. The multi-relationship graph is transformed into two homogeneous graphs, and the user and item embeddings are learned from the two graphs, respectively. This work also proposes a more efficient vector-wise convolution operation instead of the splicing operation. IntentGC uses local filters to avoid learning meaningless feature interactions (such as the age of one's node and neighbor nodes' rating).

Although previous work has achieved remarkable success, the exploration of potential relationships in social recommendation has not received enough attention. In this paper, we propose a graph neural network framework that can connect potential relationships in the network to fill this gap.

Proposed Framework

In this section, we present the proposed HANRec in detail. First, we define the heterogeneous attributed network, the formulation of the recommendation and link prediction problems, and the symbols used in this paper. Later, we give an overview of the proposed framework and the details of each component. Finally, we introduce the optimization objectives of HANRec. The schematic of connecting the users and the model structure is shown in Fig. 2.

Problem Formulation and Symbols Definition

Definition 1 Heterogeneous Attributed Network

A heterogeneous attributed network can be represented as $G = (V, E, A)$. $V$ is a set of nodes representing different types of objects, $E$ is a set of edges representing relationships between two objects, and $A$ denotes the attributes of objects. The heterogeneity of the graph is reflected in the number of node types plus the number of edge types exceeding 2: type(nodes) + type(edges) > 2. On the other hand, when type(nodes) = type(edges) = 1, we call it a homogeneous graph (Cen et al. 2019). Attributed graphs are graphs in which each node has corresponding attributes. Entities with attributes are widespread in the real world. For example, in a movie recommendation network, each movie has its genres and users have their preferences; in an author-paper network, each author and paper has related research topics. Therefore, most networks in the real world exist as heterogeneous attributed graphs, which are the focus of this paper.

Definition 2 Recommendation

This paper focuses on the recommendation task. For a graph $G = (V, E)$, let $v_i$ and $v_j$ be two entities in this graph. In the triple $(r, v_i, v_j)$, $r$ represents the relationship between $v_i$ and $v_j$. A recommendation model learns a score function and outputs the relationship $r_{i,j}$ between $v_i$ and $v_j$: $r_{i,j} = \mathrm{RecommendationModel}(v_i, v_j)$. For example, in the user-movie-rating recommendation network, the main focus is to recommend movies that users might be interested in. This requires the recommendation model to accurately predict the ratings users would give these movies (commonly a 5-point rating, etc.). So in most cases, this is a regression problem.

Definition 3 Link Prediction

We also discuss link prediction in this paper. For a graph $G = (V, E)$, let $v_i$ and $v_j$ be two entities in this graph. In the triple $(r, v_i, v_j)$, $r$ represents the relationship between $v_i$ and $v_j$. A link prediction model learns a score function and outputs the relationship $r_{i,j}$ between $v_i$ and $v_j$: $r_{i,j} = \mathrm{LinkPredictionModel}(v_i, v_j)$. In most cases, the value of $r$ is 0 or 1, meaning that there is no edge or there is an edge between the two entities, which makes this a binary classification problem.

The purpose of link prediction is to infer missing links or predict future relations based on the currently observed part of the network. This is a fundamental problem with a large number of practical applications in network science. The recommendation problem can also be regarded as a kind of link prediction problem. The difference is that recommendation is a regression task that needs to predict specific relationships (such as the ratings of movies by people in a movie recommendation network), whereas link prediction is a binary classification task, where we need to determine whether there is a link between the two entities.

The mathematical notations used in this paper are summarized in Table 1.

Table 1: Symbols' Definition and Description

| Symbol | Definition |
|---|---|
| $B(v_i)$ | The set of entities of the same type as entity $v_i$. |
| $C(v_i)$ | The set of entities of a different type from entity $v_i$. |
| $N(v_i)$ | The set of neighbor entities of entity $v_i$. |
| $N'(v_i)$ | The set of entities that have potential connections with entity $v_i$. |
| $r_{i,j}$ | The relationship between entity $v_i$ and $v_j$. |
| $p_i$ | The embedding vector of entity $v_i$. |
| $e_{i,j}$ | The rating embedding for the rating level (entity $v_i$ to $v_j$). |
| $d$ | The embedding dimension. |
| $h_i^0$ | The initial representation vector of entity $v_i$. |
| $h_i^B$ | The influence embedding among entity $v_i$ and entities of the same type. |
| $h_i^C$ | The influence embedding among entity $v_i$ and entities of different types. |
| $f_{i,j}$ | The opinion-aware interaction representation between entity $v_i$ and $v_j$. |
| $\alpha^*_{i,j}$ | Attention parameter between entities of the same type ($v_j$ to $v_i$). |
| $\beta^*_{i,j}$ | Attention parameter between entities of different types ($v_j$ to $v_i$). |
| $\alpha_{i,j}$ | The normalized attention parameter between entities of the same type ($v_j$ to $v_i$). |
| $\beta_{i,j}$ | The normalized attention parameter between entities of different types ($v_j$ to $v_i$). |
| $W, b$ | The weight and bias in neural networks. |
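To make the set notation of Table 1 and the heterogeneity condition of Definition 1 concrete, the following is a minimal Python sketch on a toy user-movie graph. The node ids, the ratings, and the helper names (`B`, `C`, `potential_neighbors`, `is_heterogeneous`) are illustrative assumptions, not the authors' implementation. In particular, $N'(v)$ is assumed here to contain same-type entities that share at least one neighbor of another type, in line with the second-order connection described for the connecting component, and $B(v)$ is assumed to exclude $v$ itself.

```python
from collections import defaultdict

# Toy user-movie rating graph (ids and ratings are made up for illustration).
node_type = {"u1": "user", "u2": "user", "m1": "movie", "m2": "movie", "m3": "movie"}
ratings = {("u1", "m1"): 4, ("u1", "m2"): 5, ("u1", "m3"): 1, ("u2", "m1"): 4}

# N(v): entities directly connected to v by an observed edge.
N = defaultdict(set)
for (u, m) in ratings:
    N[u].add(m)
    N[m].add(u)

def B(v):
    """Entities of the same type as v (assumption: v itself is excluded)."""
    return {x for x, t in node_type.items() if t == node_type[v] and x != v}

def C(v):
    """Entities whose type differs from the type of v."""
    return {x for x, t in node_type.items() if t != node_type[v]}

def potential_neighbors(v):
    """N'(v): same-type entities that share a different-type neighbor with v."""
    found = set()
    for k in N[v] & C(v):
        found |= N[k] & B(v)
    return found

def is_heterogeneous(node_types, edge_types):
    """Definition 1: number of node types + number of edge types > 2."""
    return len(set(node_types)) + len(set(edge_types)) > 2

users_linked_to_u1 = potential_neighbors("u1")    # users sharing a rated movie with u1
movies_linked_to_m1 = potential_neighbors("m1")   # movies rated by a user who rated m1
```

In this toy graph, user u2 becomes a potential neighbor of u1 because both rated m1, even though no user-user edge is observed.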

An Overview of HANRec

HANRec consists of four components: Connecting Potential Neighbors, Homogeneous Network Aggregation, Heterogeneous Network Aggregation, and Recommendation Generation. Connecting Potential Neighbors aims to fully explore the influence between neighbors with potential connections. In the common user-movie-rating network, there is a lot of data on users' ratings of movies, but there is little or no interaction between users. However, the interaction between users is important, and there may be potential influences between users. Users with the same interests will have a high probability of giving similar ratings to the same movie. This component uses shared entities to generate a connection path, which utilizes scores and features to model the potential influence and generate high-quality entity representations.

Homogeneous Network Aggregation is responsible for aggregating information of entities of the same type and quantifying the influence of different neighbors on the entity through an attention mechanism. This component mainly portrays the influence between entities of the same type. Heterogeneous Network Aggregation is responsible for aggregating information of entities of different types. We again use the user-movie-rating network to illustrate. Different movie genres and the user's ratings describe the user's preferences from a particular perspective. Heterogeneous Network Aggregation focuses on describing an entity's characteristics from the perspective of entities of different types. Recommendation Generation gathers all the information related to the entity and generates its high-quality embedding. This component also matches the embeddings of other entities to make the final recommendations.

As shown in Fig. 2, in the movie recommendation network, we connect different users/movies through the designed connection method (as indicated by the orange arrow). Then we use homogeneous and heterogeneous aggregation to combine the users'/movies' own characteristics and generate the final representation embedding. Finally, the user's embedding is compared with the embedding of the movie to be evaluated, and the final rating score is generated. Next, we introduce the details of each component.

Connecting Potential Neighbors

We design this component to fully explore the influence between neighbors with potential connections. Note that in the common user-movie-rating network, there may not be a direct link between users or between movies. In this case, we can connect the users and movies through second-order neighbors. Suppose that $v_j \in B(v_i) \cap N(v_i)$, $v_k \in C(v_i) \cap N(v_i)$, $v_k \in C(v_j) \cap N(v_j)$, where $B(v_i)$ is the set of entities of the same type as $v_i$, $C(v_i)$ is the set of entities of a different type from $v_i$, and $N(v_i)$ represents the set of neighbors of $v_i$. We can infer the influence

[Figure 2 diagram. Panels: User Model, Movie Model. Labels: initial representation vector, Connecting, Homogeneous Network Aggregation, Heterogeneous Network Aggregation, latent embedding, Recommendation, Rating Score (High Rating / Low Rating).]

Figure 2: The architecture of HANRec. It contains four major components: connecting potential neighbors, homogeneous

aggregation, heterogeneous aggregation and rating generation.

of $v_j$ on $v_i$ from the common neighbor $v_k$:

$$f_{i,j} = \mathrm{MLP}(h_k^0 \oplus e_{i,k} \oplus h_j^0 \oplus e_{k,j}), \tag{1}$$

where $f_{i,j}$ is the opinion-aware interaction representation between entity $v_i$ and $v_j$; MLP means Multilayer Perceptron (Pal and Mitra 1992); $\oplus$ represents the concatenation operator of two vectors; $h_k^0$ and $h_j^0$ represent the initial features of $v_k$ and $v_j$, respectively; $e_{i,k}$ and $e_{k,j}$ represent the rating embeddings for the rating level between $v_i$, $v_k$ and between $v_k$, $v_j$, respectively. Through this connection, we use $f_{i,j}$ to represent the potential relationship between $v_i$ and $v_j$. Then $v_j$ is added to $N'(v_i)$, which represents the set of entities that have potential connections with entity $v_i$. The connection method we designed can fully integrate the characteristics of entities and the relationships between entities in the path. For example, there may not be a direct connection between users in a common movie recommendation network. In this way, two users can be connected through the movies they have both watched. The movie genres and the users' ratings of the movie can provide an essential reference for the users' potential relationship.

Homogeneous Network Aggregation

Homogeneous Network Aggregation aims to learn the influence embedding $h_i^B$, representing the relationship among entity $v_i$ and entities of the same type. Given the initial representation of entity $v_j$ and the rating embedding $e_{i,j}$, the opinion-aware interaction representation $f_{i,j}$ can be expressed as:

$$f_{i,j} = \mathrm{MLP}(h_j^0 \oplus e_{i,j}). \tag{2}$$

The attention parameter $\alpha^*_{i,j}$, reflecting the influence between entities $v_i$ and $v_j$, is designed as:

$$\alpha^*_{i,j} = w_2^T \cdot \sigma(W_1 \cdot [h_i^0 \oplus h_j^0] + b_1) + b_2, \tag{3}$$

where $W$ and $b$ represent the weight and bias in neural networks. The normalized attention parameter $\alpha_{i,j}$ is as follows:

$$\alpha_{i,j} = \frac{\exp(\alpha^*_{i,j})}{\sum_{k \in B(v_i) \cap (N(v_i) \cup N'(v_i))} \exp(\alpha^*_{i,k})}. \tag{4}$$

The design of the attention parameters is based on the assumption that entities with similar characteristics should have greater influence on each other, and we use a neural network to learn this influence adaptively. In this way, we get the information gathered by entity $v_i$ from its homogeneous graph:

$$h_i^B = \sum_{j \in B(v_i) \cap (N(v_i) \cup N'(v_i))} (\alpha_{i,j} \ast f_{i,j}). \tag{5}$$

It is worth mentioning that when we aggregate neighbor information, we consider not only neighbors with edge connections but also neighbors with potential relationships discovered through our connection method, and we aggregate their influence on entity $v_i$ at the same time. Subsequent experiments proved the superiority of this design.

Heterogeneous Network Aggregation

Heterogeneous Network Aggregation aims to learn the influence embedding $h_i^C$, representing the relationship among entity $v_i$ and entities of different types. Given the initial representation of entity $v_j$ and the rating embedding $e_{i,j}$, the opinion-aware interaction representation $f_{i,j}$ can be expressed as:

$$f_{i,j} = \mathrm{MLP}(h_j^0 \oplus e_{i,j}). \tag{6}$$

The attention parameter $\beta^*_{i,j}$, reflecting the influence between entities $v_i$ and $v_j$, is designed as:

$$\beta^*_{i,j} = w_4^T \cdot \sigma(W_3 \cdot [h_i^0 \oplus h_j^0] + b_3) + b_4. \tag{7}$$

The normalized attention parameter $\beta_{i,j}$ is as follows:

$$\beta_{i,j} = \frac{\exp(\beta^*_{i,j})}{\sum_{k \in C(v_i) \cap (N(v_i) \cup N'(v_i))} \exp(\beta^*_{i,k})}. \tag{8}$$

In this way, we get the information gathered by entity $v_i$ from its heterogeneous graph:

$$h_i^C = \sum_{j \in C(v_i) \cap (N(v_i) \cup N'(v_i))} (\beta_{i,j} \ast f_{i,j}). \tag{9}$$

According to the different types of neighbors, we designed two aggregation strategies to highlight the impact of different types of entities on $v_i$. For example, in a common movie recommendation network, the influence of movies and of other people on a person should be different, and mixing them cannot make full use of the graph's heterogeneity. The subsequent experimental part also proved our conjecture.
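The attention-weighted aggregation used in both strategies (unnormalized score, softmax over the neighbor set, weighted sum of interaction representations) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: the nonlinearity σ is taken to be ReLU (the text does not specify it), the weights are hand-set rather than learned, the neighbor list is assumed non-empty, and the function names are hypothetical.

```python
import math

def dense(x, W, b):
    """Affine map W x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def relu(x):
    return [max(0.0, v) for v in x]

def attention_aggregate(h_i, neighbors, W1, b1, w2, b2):
    """neighbors: non-empty list of (h_j, f_ij) pairs.

    Returns the normalized attention weights and the aggregated vector.
    """
    # Unnormalized score per neighbor: w2^T * sigma(W1 [h_i ; h_j] + b1) + b2.
    scores = []
    for h_j, _ in neighbors:
        hidden = relu(dense(h_i + h_j, W1, b1))  # list concatenation plays the role of the ⊕ operator
        scores.append(sum(w * v for w, v in zip(w2, hidden)) + b2)
    # Softmax over the neighbor set; subtracting the max keeps exp() stable.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum of the interaction representations f_ij.
    dim = len(neighbors[0][1])
    agg = [sum(a * f[d] for a, (_, f) in zip(weights, neighbors)) for d in range(dim)]
    return weights, agg

# Toy example: one target entity and two neighbors with 2-dimensional features.
h_i = [1.0, 0.0]
neighbors = [([1.0, 0.0], [1.0, 2.0]),   # neighbor whose features match h_i
             ([0.0, 1.0], [3.0, 4.0])]   # neighbor with different features
W1 = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
b1 = [0.0, 0.0]
w2 = [1.0, 0.0]
b2 = 0.0
weights, h_B = attention_aggregate(h_i, neighbors, W1, b1, w2, b2)
```

With these hand-set weights the neighbor whose features match `h_i` receives the larger attention weight, which mirrors the stated assumption that similar entities should influence each other more. Calling the same function with a second, separately parameterized set of weights on different-type neighbors would correspond to the heterogeneous case.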

# Edges

Recommendation Generation

After gathering information from entities of the same and different types, we can obtain the latent representation of entity $v_i$:

$$p_i = \mathrm{MLP}(h_i^0 \oplus h_i^B \oplus h_i^C), \tag{10}$$

where $h_i^0$ is the initial representation of entity $v_i$, such as the user's preferences in the user-movie-rating network or the movie genres. $h_i^B$ and $h_i^C$ are the influence embeddings that capture the relationships between entity $v_i$ and entities of the same type and of different types, respectively. For $v_i$ and $v_j$, after obtaining the embeddings that aggregate this information ($p_i$ and $p_j$), their relationship can be measured as:

$$r_{i,j} = \mathrm{MLP}(p_i \oplus p_j). \tag{11}$$

This completes the end-to-end recommendation prediction process.
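Eqs. (10) and (11) amount to two concatenate-then-MLP steps. The sketch below shows the data flow only; the helper names (`mlp`, `init_layers`, `represent`, `predict`), the layer sizes, the random initialization, and the fixed PReLU slope of 0.25 are assumptions for illustration, not values taken from the paper.

```python
import random

def prelu(x, slope=0.25):
    """PReLU with a fixed (not learned) negative slope, for illustration only."""
    return x if x >= 0 else slope * x

def mlp(x, layers):
    """Apply linear layers [(W, b), ...] with PReLU between them and none after the last."""
    for idx, (W, b) in enumerate(layers):
        x = [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]
        if idx < len(layers) - 1:
            x = [prelu(v) for v in x]
    return x

def init_layers(sizes, rng):
    """Small random weights and zero biases for consecutive layer sizes."""
    return [([[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)],
             [0.0] * n_out)
            for n_in, n_out in zip(sizes, sizes[1:])]

def represent(h0, hB, hC, fuse_layers):
    """Eq. (10): fuse the initial, homogeneous and heterogeneous embeddings."""
    return mlp(h0 + hB + hC, fuse_layers)

def predict(p_i, p_j, pred_layers):
    """Eq. (11): score the relationship between two fused representations."""
    return mlp(p_i + p_j, pred_layers)[0]

rng = random.Random(0)
d = 4                                    # toy embedding size
fuse = init_layers([3 * d, 8, d], rng)   # input is three concatenated d-vectors
pred = init_layers([2 * d, 8, 1], rng)   # input is two concatenated d-vectors

p_1 = represent([0.1] * d, [0.2] * d, [0.3] * d, fuse)
p_2 = represent([0.3] * d, [0.2] * d, [0.1] * d, fuse)
r_12 = predict(p_1, p_2, pred)
```

In a trained model the weights would of course be learned end to end; here they only demonstrate that the shapes compose.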

Figure 3: Characteristics of the MovieLens and the AMiner dataset. (a) MovieLens: # Edges versus Rating Score. (b) AMiner: # Nodes versus # Degree.

Objective Function

To optimize the model parameters, we need to specify an objective function. Since the tasks we focus on in this work are rating prediction and link prediction, we use the following loss function, following (Fan et al. 2019a):

$$\mathrm{Loss} = \frac{1}{2|T|} \sum_{i,j \in T} (r'_{i,j} - r_{i,j})^2, \tag{12}$$

where $|T|$ is the number of entity pairs in the training set $T$, $r'_{i,j}$ is the relationship between entities $v_i$ and $v_j$ predicted by the model, and $r_{i,j}$ is the ground truth.
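As a worked instance of Eq. (12), the following sketch computes the loss and its gradient with respect to each prediction. The function names are hypothetical and the two ratings are made-up toy values.

```python
def squared_loss(predicted, actual):
    """Eq. (12): sum of squared errors over the training pairs, divided by 2|T|."""
    if not predicted or len(predicted) != len(actual):
        raise ValueError("predicted and actual must be non-empty and of equal length")
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / (2 * len(predicted))

def squared_loss_grad(predicted, actual):
    """Derivative of Eq. (12) with respect to each prediction: (r' - r) / |T|."""
    n = len(predicted)
    return [(p - a) / n for p, a in zip(predicted, actual)]

# Two training pairs: one prediction is off by one star, the other is exact.
loss = squared_loss([4.0, 3.0], [5.0, 3.0])
grad = squared_loss_grad([4.0, 3.0], [5.0, 3.0])
```

Here the loss is (1 + 0) / (2 · 2) = 0.25, and only the first prediction receives a non-zero gradient. The factor 1/2 is the usual convenience that cancels the 2 produced by differentiating the square.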

To optimize the objective function, we use Adam (Kingma and Ba 2014) as the optimizer in our implementation. Each time, it randomly selects a training instance and updates each model parameter in the direction of its negative gradient. Overfitting is a persistent problem when optimizing deep neural network models. To alleviate it, we adopted a dropout strategy (Srivastava et al. 2014) in the model. The idea of dropout is to randomly discard some neurons during training, so that only part of the parameters are updated at each step. Since dropout is disabled at test time, the entire network is used for prediction. The whole algorithm framework is shown in Algorithm 1.

Algorithm 1: HANRec algorithm framework

Input: The heterogeneous attributed network G = (V, E, A); two entities v_1 and v_2 whose relationship needs to be evaluated
Output: The relationship r_{1,2} between the two entities v_1 and v_2

P = {}    // P records the representation embedding of each entity v
for v_i in {v_1, v_2} do
    // Connecting Potential Neighbors
    N'(v_i) = {}    // N'(v_i) records the entities that have potential connections with entity v_i
    for v_m in N(v_i) do
        f_{i,m} = MLP(h_m^0 ⊕ e_{i,m})    // opinion-aware interaction representation between v_i and v_m
        for v_n in N(v_m) do
            if v_n ≠ v_i then
                N'(v_i) ← v_n    // add v_n to N'(v_i)
                f_{i,n} = MLP(h_m^0 ⊕ e_{i,m} ⊕ h_n^0 ⊕ e_{m,n})    // opinion-aware interaction representation between v_i and v_n
            end
        end
    end
    // Attention parameters in homogeneous aggregation
    α*_{i,j} = w_2^T · σ(W_1 · [h_i^0 ⊕ h_j^0] + b_1) + b_2
    α_{i,j} = exp(α*_{i,j}) / Σ_{k ∈ B(v_i) ∩ (N(v_i) ∪ N'(v_i))} exp(α*_{i,k})
    // Homogeneous Aggregation
    h_i^B = Σ_{v_k ∈ B(v_i) ∩ (N(v_i) ∪ N'(v_i))} (α_{i,k} * f_{i,k})
    // Attention parameters in heterogeneous aggregation
    β*_{i,j} = w_4^T · σ(W_3 · [h_i^0 ⊕ h_j^0] + b_3) + b_4
    β_{i,j} = exp(β*_{i,j}) / Σ_{k ∈ C(v_i) ∩ (N(v_i) ∪ N'(v_i))} exp(β*_{i,k})
    // Heterogeneous Aggregation
    h_i^C = Σ_{v_k ∈ C(v_i) ∩ (N(v_i) ∪ N'(v_i))} (β_{i,k} * f_{i,k})
    // Fuse v_i's initial feature and the information aggregated from homogeneous
    // and heterogeneous neighbors to obtain the final representation embedding p_i
    p_i = MLP(h_i^0 ⊕ h_i^B ⊕ h_i^C)
    P ← p_i    // add p_i to P
end
r_{1,2} = MLP(p_1 ⊕ p_2)    // compute the relationship r_{1,2} between the two entities v_1 and v_2
return r_{1,2}

Experiments

Datasets

In order to verify the effectiveness of HANRec, we performed two tasks: recommendation and link prediction.

For the recommendation task, we used MovieLens 100k (https://grouplens.org/datasets/movielens/100k/), a common dataset in the field of movie recommendation. It consists of 10,334 nodes, including 610 users and 9,724 movies, and 100,836 edges. All edges represent a user's rating of a movie; there are no edges between users or between movies. MovieLens also includes each movie's genre attributes and the timestamp of each rating. There are 18 movie categories, such as Action, Drama, and Fantasy, and each movie can have multiple category attributes. For example, Batman (1989) has three category attributes: Action, Crime, and Thriller. We initialize a representation embedding for each of these 18 genres. The initial embedding of each movie is the average of the embeddings of its genres. For the MovieLens dataset, our model focuses on three kinds of embeddings, including user embedding $h_i^0$, where $i$ belongs to

the user index, movie embedding h0j , which is composed of u

u1 X m

the embedding of movie genres, and j belongs to the movie RM SE = t (pi − ai )2 (14)

index, and opinion embedding ei,j . They are initialized ran- m i=1

domly and learn together during the training phase. Since the

original features are extensive and sparse, we do not use one- a = actual target, p = predict target

key vectors to represent each user and item. By embedding Smaller values of MAE and RMSE indicate better pre-

high-dimensional sparse features into the low-dimensional dictive accuracy. For the link prediction task, Accuracy and

latent space, the model can be easily trained (He et al. 2017). AUC (Area Under the Curve) are used to quantify the ac-

The opinion embedding matrix ei,j depends on the system’s curacy. Higher values of Accuracy and AUC indicate better

rating range. For example, for the MovieLen dataset, which predictive accuracy.

is a 5-star rating system and 0.5 as an interval, the opinion

embedding matrix e contains nine different embedding vec- Baselines

tors to represent {0.5, 1, 1.5, 2, 2.5, 3, 3.5, 4, 4.5, 5} in the The methods in our comparative evaluation are as follows:

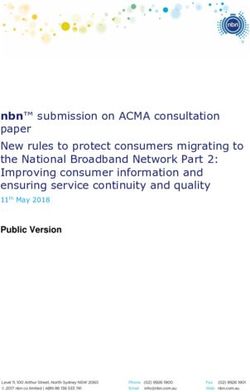

score. Fig. 3(a) shows the rating score distribution of edges

in the MovieLen dataset. We can clearly see fewer edges • Doc2Vec (Le and Mikolov 2014) is the Paragraph Vec-

with lower rating scores, and there are more edges with rat- tors algorithm that embeds the text describing objects in a

ing scores from 3 to 4. distributed vector using neural network models. Here we

For the link prediction task, we use the AMiner dataset3 use Doc2Vec to process the text describing authors and

(Tang et al. 2008). We construct the relationship between an papers’ research interests in the link prediction task to ob-

author and one paper when the author published this paper. tain the initialization embedding of authors and papers.

We also generate the relationships between authors when • DeepWalk (Perozzi, Al-Rfou, and Skiena 2014b) uses

they are co-authors and generate the relationships between random walk to sample nodes in the graph to get the node

papers when there are citation relationships. The authors’ embedding. As for the relevant parameters, we refer to

initial attribute contains research interests, published papers, the original paper. We set num-walks as 80, walk-length

and the total number of citations. (Stallings et al. 2013). The as 40, and window-size as 10.

title and abstract can represent the initial attribute of papers.

• LINE (Tang et al. 2015) minimizes a loss function

In this author-paper-network, we treat the weight of edges as

to learn embedding while preserving the first and the

binary. In the experiment part, we selected all papers from

second-order neighbors’ proximity among nodes. We use

the famous venue4 in eight research topics (Dong, Chawla,

LINE (1st+2nd) as overall embeddings. The number of

and Swami 2017) and all the relative authors who published

negative samples is set as 5, just the same as the original

these papers. Based on this, we derive a heterogeneous at-

paper.

tributed network from the academic network of the AMiner

dataset. It consists of 29,059 nodes, including 16,604 au- • Node2Vec (Grover and Leskovec 2016) adopts a biased

thors and 12,455 papers with eight labels, and 124,626 edges random walk strategy and applies Skip-Gram to learn

representing 62,115 coauthor, 31,251 citation, and 31,263 node embedding. We set p is 0.25 and q as 4 here.

author-paper relationships. We treat authors’ and papers’ • SoRec (Ma et al. 2008) performs co-factorization on the

text descriptions as node attributes and transform them into user-item rating matrix and user-user social relations ma-

vectors by Doc2vec in the experiments. Fig. 3(b) shows the trix. We set the parameters as the same as the original

node distribution of the AMiner dataset. We can find that paper. λC = 10, and λU = λV = λZ = 0.001.

most nodes have few neighbors, but there are still some su-

per nodes whose number of neighbors exceeds 100. In this • GATNE (Cen et al. 2019) provides an embedding method

case, it is more important to distinguish the influence of dif- for large heterogeneous network. In the two datasets used

ferent neighbors effectively. here, the edge type is 1, so the edge embedding type in

GATNE is set to 1.

Metrics • GraphRec (Fan et al. 2019c) jointly captures interactions

We apply two conventional evaluation metrics to evaluate and opinions in the user-item graph. For the MovieLen

the performance of different models for recommendation: dataset here, there are no user-to-user and movie-to-movie

Mean Absolute Error (MAE) and Root Mean Squared Error interactions, so only item aggregation and user aggrega-

(RMSE): tion of the original paper are used.

• HANRec is our proposed framework, which makes full

m use of the heterogeneity and attribute of the network and

1 X

M AE = (pi − ai ) (13) uses the attention mechanism to provide better recom-

m i=1

mendations.

3

https://www.aminer.cn/aminernetwork

4 Training Details

1. IEEE Trans. Parallel Distrib. Syst; 2. STOC; 3. IEEE Com-

munications Magazine; 4. ACM Trans. Graph; 5.CHI; 6. ACL; 7. For the task of recommendation, we use the MovieLen

CVPR; 8. WWW dataset. The recommendation task’s goal is to predict theTable 2: Results comparison of the recommendation. MAE/RMSE are evaluation metrics.

Train Edges DeepWalk LINE Node2Vec SoRec GATNE GraphRec HANRec

30% 0.8514/1.0578 0.8614/1.0687 0.8519/1.0317 0.8278/1.0324 0.7923/0.9848 0.7824/0.9748 0.7751/0.9624

40% 0.8258/1.0014 0.8413/1.0342 0.8202/0.9832 0.7891/0.9936 0.7774/0.9607 0.7528/0.9555 0.7419/0.9355

50% 0.7869/0.9817 0.8017/0.9959 0.7928/0.9707 0.7417/0.9615 0.7319/0.9487 0.7347/0.9379 0.7128/0.9132

60% 0.7521/0.9678 0.7758/0.9816 0.7498/0.9601 0.7314/0.9504 0.7217/0.9215 0.7191/0.9189 0.6981/0.9047

70% 0.7331/0.9497 0.7525/0.9607 0.7345/0.9427 0.7257/0.9407 0.7047/0.9158 0.7055/0.9107 0.6823/0.8925

80% 0.7250/0.9418 0.7332/0.9511 0.7273/0.9395 0.7192/0.9339 0.6954/0.9057 0.6966/0.9071 0.6753/0.8855

90% 0.7148/0.9375 0.7241/0.9403 0.7068/0.9189 0.6957/0.9048 0.6824/0.8907 0.6807/0.8827 0.6673/0.8681

Table 3: Results comparison of the link prediction. AUC/Accuracy are evaluation metrics.

Train Edges Doc2Vec DeepWalk LINE Node2Vec SoRec GraphRec HANRec

30% 0.8074/0.7335 0.8693/0.8206 0.7911/0.7214 0.8808/0.8341 0.9228/0.8547 0.9241/0.8613 0.9612/0.8982

40% 0.8158/0.7407 0.8873/0.8345 0.8017/0.7275 0.8879/0.8410 0.9317/0.8602 0.9333/0.8752 0.9647/0.9032

50% 0.8243/0.7505 0.8948/0.8415 0.8155/0.7357 0.9023/0.8531 0.9394/0.8739 0.9517/0.8907 0.9679/0.9101

60% 0.8395/0.7612 0.9105/0.8631 0.8209/0.7398 0.9201/0.8617 0.9534/0.8907 0.9571/0.8988 0.9758/0.9223

70% 0.8548/0.7765 0.9358/0.8758 0.8288/0.7451 0.9393/0.8826 0.9627/0.9015 0.9673/0.9056 0.9807/0.9409

80% 0.8702/0.7893 0.9501/0.8921 0.8371/0.7473 0.9513/0.8901 0.9708/0.9077 0.9728/0.9099 0.9881/0.9514

90% 0.8771/0.7973 0.9573/0.8967 0.8429/0.7506 0.9584/0.8972 0.9782/0.9103 0.9792/0.9156 0.9912/0.9611

rating score of one user to one movie. x% of the rating records are used for training, and the remaining (100-x)% are used for testing. In this task, HANRec outputs a value between 0.5 and 5.0, indicating the user's likely rating of the movie. The closer the score is to the ground truth, the better the performance of the model. For the task of link prediction, we use the AMiner dataset. The goal of the link prediction task is to predict whether there is an edge between two given nodes. First, we randomly hide (100-x)% of the edges in the original graph to form the positive samples of the test set. The test set also contains an equal number of randomly selected disconnected links that serve as negative samples. We then use the remaining x% of connected links and randomly selected disconnected ones to form the training set. HANRec outputs a value from 0 to 1, indicating the probability that there is an edge between the two entities. For both tasks, x ranges from 30 to 90 in steps of 10. When x is low, we call it a cold start problem (Schafer et al. 2007), which is discussed in detail in the Cold Start Problem section.

The proposed HANRec is implemented in PyTorch 1.4.0 (https://pytorch.org/). By default, all multilayer perceptrons consist of a three-layer linear network with the PReLU activation function. The embedding size d used in the model and the batch size are both set to 128. The learning rate is searched in [0.001, 0.0001, 0.00001], and the Adam algorithm (Kingma and Ba 2014) is used to optimize the parameters of HANRec. The performance results were recorded on the test set after 2000 iterations on the MovieLens dataset and 20000 iterations on the AMiner dataset. The parameters of the baseline algorithms were initialized as in the corresponding papers and were then carefully tuned to achieve optimal performance.

Figure 4: Cold Start Problem analysis on the MovieLens and AMiner datasets. (a) Cold-Start: MovieLens-MAE. (b) Cold-Start: AMiner-AUC.

Main Results

We can first see that, for both tasks, the performance of HANRec on the test set improves steadily as the proportion of training data increases. We then focus on the recommendation performance of all methods. Table 2 shows the overall rating prediction results (MAE and RMSE) of the different recommendation methods on the MovieLens dataset. Regardless of the value of x, the performance of DeepWalk, LINE, and Node2Vec, which are based on walks in the graph, is relatively low. As a matrix factorization method that leverages both rating and social network information, SoRec is slightly better than these three. GATNE's embedding of edge heterogeneity and GraphRec's social aggregation cannot be fully utilized in this case. Nevertheless, the performance of these two methods is generally better than that of the previous methods, which shows the effectiveness of deep neural networks on the recommendation task. Our proposed model, HANRec, can fully connect users and thus provide more guidance for the recommendation, which yields the best performance on the recommendation task.

We then evaluate HANRec's performance on link prediction and compare it with the other link prediction algorithms mentioned above. Table 3 demonstrates that the performance of Doc2Vec, which does not use graph information and only uses the initial information of each node, is the worst. This shows that the graph structure is essential in the link prediction task, and that the initial features of the nodes alone hardly provide enough reference for it. Methods that use graph structure information, such as DeepWalk, LINE, Node2Vec, and SoRec, have higher performance than Doc2Vec, indicating that in this case the graph structure information is more important than the initial information of nodes. The effect of GATNE, which uses graph heterogeneity, is better than that of the previously mentioned methods, which shows that graph heterogeneity can also provide some reference for the link prediction task. HANRec outperforms all other methods at every training ratio in Table 3, which further shows that the framework can fully consider the homogeneity and heterogeneity of the graph and use the attention mechanism to generate high-quality embeddings for nodes. Further investigations are conducted in the following subsections to better understand the contribution of each component of HANRec.

In conclusion, these results show that: (1) graph neural networks can boost the performance in the recommendation and the link prediction tasks; (2) using the heterogeneity of graphs can make full use of the information of the network, thereby improving the performance on these two tasks; (3) our proposed HANRec achieves state-of-the-art performance on the MovieLens and the AMiner datasets for the two tasks.

Cold Start Problem

The cold start problem (Schafer et al. 2007) refers to the difficulty a recommendation system has in giving high-quality recommendations when there is insufficient data. According to (Bobadilla et al. 2012), the cold start problem can be divided into three categories: (1) User cold start: how to make personalized recommendations for new users; (2) Item cold start: how to recommend a new item to users who may be interested in it; (3) System cold start: how to design a personalized recommendation system on a newly developed website (no users, no user behavior, only partial item information), so that users can experience personalized recommendations when the website is released.

In this paper, the cold start problem belongs to the third category, which can be defined as how to make high-quality recommendations based on the information of a small number of edges (rating scores or relationships). We varied the proportion of available information in the two datasets, that is, the proportion of the training set x, from 5% to 25%, and obtained the performance results of a series of methods. Fig. 4(a) and 4(b) show the performance of the different models on the MovieLens and the AMiner datasets for training ratios from 5% to 25%. Since the other methods based on random walks, such as Node2Vec and LINE, perform similarly to DeepWalk, we only report the results of DeepWalk here.

Both Fig. 4(a) and Fig. 4(b) show that HANRec performs the best in all cases. Specifically, when the proportion of training edges is 5%, HANRec outperforms the other methods by the largest margin. This reflects the advantage of the connecting method we designed: when there are very few known edges, the entity's neighbor information is incomplete, and the embeddings that other methods obtain by aggregating neighbor information do not fully integrate this information. By connecting potential neighbors, HANRec overcomes the disadvantage of few known edges to a certain extent and is suitable for alleviating the difficulty of generating high-quality recommendations during cold start. It is worth noticing that, as shown in Fig. 4(b), Doc2Vec, the method based on entity features, is at first better than DeepWalk, which is based on random walks. As the proportion of training edges increases, DeepWalk gradually overtakes Doc2Vec. This is intuitive: because Doc2Vec does not use the graph's structural information, it only makes recommendations based on each entity's features, so an increase in the number of training edges has relatively little effect on its performance. DeepWalk, in contrast, can capture more neighbor information through random walks, which improves the quality of the generated embeddings and leads to better recommendations.

Figure 5: Effect of each component on the MovieLens and the AMiner dataset. (a) Ablation Study: MovieLens-MAE. (b) Ablation Study: MovieLens-RMSE. (c) Ablation Study: AMiner-AUC. (d) Ablation Study: AMiner-Accuracy.

Figure 6: The performance of different connecting methods on the MovieLens and the AMiner dataset. (a) Connecting Strategies: MovieLens-MAE. (b) Connecting Strategies: MovieLens-RMSE. (c) Connecting Strategies: AMiner-AUC. (d) Connecting Strategies: AMiner-Accuracy.

Figure 7: Effect of dimension d on the MovieLens and the AMiner dataset. (a) Parameter Analysis: MovieLens-MAE. (b) Parameter Analysis: MovieLens-RMSE. (c) Parameter Analysis: AMiner-AUC. (d) Parameter Analysis: AMiner-Accuracy.

Ablation Study

We evaluated how each component of HANRec affects the results by reporting the results on the two datasets after removing a specific part. HANRec-C, HANRec-Homo, HANRec-Hete, and HANRec-Att respectively denote our model without connecting potential neighbors, without homogeneous aggregation, without heterogeneous aggregation, and without the attention mechanism. HANRec is the complete model we proposed. Fig. 5 shows that removing any one of the connecting component, homogeneous aggregation, heterogeneous aggregation, or the attention mechanism degrades the performance of HANRec, and that removing the attention mechanism has less impact on performance than removing any of the other three. This makes intuitive sense: the other three cases lose much of the information in the graph, which is not conducive to high-quality entity embeddings. It is worth noting that after removing the connecting component, the performance of the model drops considerably, indicating that the connecting method we designed provides an essential reference for recommendations. This further shows that the connecting component, the aggregation methods, and the attention mechanism are all effective. With their joint contribution, HANRec performs well in the recommendation task.
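To make the connecting component concrete, the two-hop neighbor search in the nested loops of Algorithm 1 can be sketched as follows. This is an illustrative reading of the pseudocode, not the authors' code; the function name, the adjacency-dictionary layout, and the toy graph are assumptions.

```python
def potential_neighbors(adjacency, v):
    """Collect N'(v): entities reached through one intermediate neighbor, excluding v.

    Returns a dict mapping each potential neighbor to the list of intermediate
    entities that connect it to v, since the interaction representation in
    Algorithm 1 is built from the entities and edges along that path.
    """
    found = {}
    for mid in adjacency.get(v, ()):
        for far in adjacency.get(mid, ()):
            if far != v:
                found.setdefault(far, []).append(mid)
    return found

# Toy user-movie graph (hypothetical): u1 and u2 both rated m1; only u2 rated m2.
adjacency = {
    "u1": ["m1"],
    "u2": ["m1", "m2"],
    "m1": ["u1", "u2"],
    "m2": ["u2"],
}

users_near_u1 = potential_neighbors(adjacency, "u1")
movies_near_m2 = potential_neighbors(adjacency, "m2")
```

In this bipartite toy graph, the search links `u1` to `u2` through the shared movie `m1`, and `m2` to `m1` through the shared user `u2`, which is how same-type neighbors arise even though the raw data contains only user-movie edges.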

We also explored the performance of different connecting strategies. Connecting-FB denotes the feature-based connecting method. When exploring neighbors with potential relationships, Connecting-FB only uses the feature information of the entities on the path, not the relationship information between entities. In this case, Eq. (1) can be rewritten as $f_{i,j} = \mathrm{MLP}(h_k^0 \oplus h_j^0)$. Connecting-RB denotes the relation-based connecting method. Connecting-RB only uses the relationship information between the entities on the path, not the feature information of the entities, so Eq. (1) can be rewritten as $f_{i,j} = \mathrm{MLP}(e_{i,k} \oplus e_{k,j})$. Connecting is the complete connecting method we proposed. As shown in Fig. 6, neither the feature-based nor the relation-based connection performs as well as the complete connecting method. This is intuitive: for example, in a movie recommendation network, if two users have watched the same movie, then both their rating scores for the movie and the characteristics of the movie and the users provide a vital reference for the relationship between the users.

Parameter Analysis

In this part, we analyze the effect of the embedding dimension d of the entity's latent representation $p_i$ on the performance of HANRec. Fig. 7 compares the performance for different embedding dimensions on the MovieLens and the AMiner dataset. In general, as the embedding dimension increases, the performance first increases and then decreases. Expanding the embedding dimension from 32 to 128 improves the performance significantly. However, with an embedding dimension of 256, the performance of HANRec degrades. This suggests that a larger embedding dimension provides more representational power. Nevertheless, if the embedding dimension is too large, the complexity of our model increases significantly. Therefore, we need to find a proper embedding length to balance the trade-off between performance and complexity.

Figure 8: Performance with respect to the number of iterations on the MovieLens and the AMiner dataset. (a) MovieLens-Loss. (b) AMiner-Loss.

We also study how the performance changes with the number of iterations when the learning rate is 0.001 and the training ratio is 90%. As shown in Fig. 8, the proposed model converges quickly: about 400 iterations are required for the MovieLens dataset, and about 8000 iterations for the AMiner dataset.

Conclusion

In this paper, we propose a heterogeneous attributed network framework with a connecting method, called HANRec, to address the recommendation task in heterogeneous graphs. By gathering multiple types of neighbor information and using the attention mechanism, HANRec efficiently generates embeddings of each entity for downstream tasks. The experimental results on two real-world datasets show that HANRec outperforms state-of-the-art models on the recommendation and link prediction tasks.

References

Adomavicius, G.; and Tuzhilin, A. 2005. Toward the next generation of recommender systems: A survey of the state-of-the-art and possible extensions. IEEE transactions on knowledge and data engineering 17(6): 734–749.

Baesens, B.; Viaene, S.; Van Gestel, T.; Suykens, J. A. K.; Dedene, G.; De Moor, B.; and Vanthienen, J. 2000. An empirical assessment of kernel type performance for least squares support vector machine classifiers. In KES'2000. Fourth International Conference on Knowledge-Based Intelligent Engineering Systems and Allied Technologies. Proceedings (Cat. No.00TH8516), volume 1, 313–316.

Berg, R. v. d.; Kipf, T. N.; and Welling, M. 2017. Graph convolutional matrix completion. arXiv preprint arXiv:1706.02263.

Bobadilla, J.; Ortega, F.; Hernando, A.; and Bernal, J. 2012. A collaborative filtering approach to mitigate the new user cold start problem. Knowledge-based systems 26: 225–238.

Bronstein, M. M.; Bruna, J.; LeCun, Y.; Szlam, A.; and Vandergheynst, P. 2017. Geometric Deep Learning: Going beyond Euclidean data. IEEE Signal Processing Magazine 34(4): 18–42.

Cen, Y.; Zou, X.; Zhang, J.; Yang, H.; Zhou, J.; and Tang, J. 2019. Representation learning for attributed multiplex heterogeneous network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 1358–1368.

Chen, X.; Liu, D.; Xiong, Z.; and Zha, Z.-J. 2020. Learning and fusing multiple user interest representations for micro-video and movie recommendations. IEEE Transactions on Multimedia 23: 484–496.

Defferrard, M.; Bresson, X.; and Vandergheynst, P. 2016. Convolutional neural networks on graphs with fast localized spectral filtering. arXiv preprint arXiv:1606.09375.

Derr, T.; Ma, Y.; and Tang, J. 2018. Signed graph convolutional networks. In 2018 IEEE International Conference on Data Mining (ICDM), 929–934. IEEE.

Dong, Y.; Chawla, N. V.; and Swami, A. 2017. metapath2vec: Scalable representation learning for heterogeneous networks. In Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining, 135–144.

Fan, W.; Ma, Y.; Li, Q.; He, Y.; Zhao, E.; Tang, J.; and Yin, D. 2019a. Graph Neural Networks for Social Recommendation. 417–426. doi:10.1145/3308558.3313488.

Fan, W.; Ma, Y.; Li, Q.; He, Y.; Zhao, E.; Tang, J.; and Yin, D. 2019b. Graph Neural Networks for Social Recommendation. In The World Wide Web Conference, 417–426. New York, NY, USA: Association for Computing Machinery.

Fan, W.; Ma, Y.; Li, Q.; He, Y.; Zhao, E.; Tang, J.; and Yin, D. 2019c. Graph neural networks for social recommendation. In The World Wide Web Conference, 417–426.

Grover, A.; and Leskovec, J. 2016. node2vec: Scalable feature learning for networks. In Proceedings of the 22nd ACM SIGKDD international conference on Knowledge discovery and data mining, 855–864.

He, R.; and McAuley, J. 2016. Fusing similarity models with markov chains for sparse sequential recommendation. In 2016 IEEE 16th International Conference on Data Mining (ICDM), 191–200. IEEE.

He, X.; Liao, L.; Zhang, H.; Nie, L.; Hu, X.; and Chua, T.-S. 2017. Neural collaborative filtering. In Proceedings of the 26th international conference on world wide web, 173–182.

Herlocker, J. L.; Konstan, J. A.; Terveen, L. G.; and Riedl, J. T. 2004. Evaluating Collaborative Filtering Recommender Systems. 22(1): 5–53. ISSN 1046-8188.

Hidasi, B.; and Karatzoglou, A. 2018. Recurrent neural networks with top-k gains for session-based recommendations. In Proceedings of the 27th ACM international conference on information and knowledge management, 843–852.

Kang, W.-C.; and McAuley, J. 2018. Self-attentive sequential recommendation. In 2018 IEEE International Conference on Data Mining (ICDM), 197–206. IEEE.

Kefalas, P.; Symeonidis, P.; and Manolopoulos, Y. 2018. Recommendations based on a heterogeneous spatio-temporal social network. World Wide Web 21(2): 345–371.

Keller, J. M.; Gray, M. R.; and Givens, J. A. 1985. A fuzzy K-nearest neighbor algorithm. IEEE Transactions on Systems, Man, and Cybernetics SMC-15(4): 580–585.

Kingma, D. P.; and Ba, J. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

Kipf, T. N.; and Welling, M. 2016. Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907.

Koren, Y.; Bell, R.; and Volinsky, C. 2009. Matrix factorization techniques for recommender systems. Computer 42(8): 30–37.

Le, Q.; and Mikolov, T. 2014. Distributed representations of sentences and documents. In International conference on machine learning, 1188–1196. PMLR.

Lekakos, G.; and Caravelas, P. 2008. A hybrid approach for movie recommendation. Multimedia tools and applications 36(1): 55–70.

Li, J.; Ren, P.; Chen, Z.; Ren, Z.; Lian, T.; and Ma, J. 2017. Neural attentive session-based recommendation. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, 1419–1428.

Liu, Q.; Zeng, Y.; Mokhosi, R.; and Zhang, H. 2018. STAMP: short-term attention/memory priority model for session-based recommendation. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 1831–1839.

Ma, H.; Yang, H.; Lyu, M. R.; and King, I. 2008. Sorec: social recommendation using probabilistic matrix factorization. In Proceedings of the 17th ACM conference on Information and knowledge management, 931–940.