Societal Biases in Language Generation: Progress and Challenges - ACL Anthology


Societal Biases in Language Generation: Progress and Challenges

Emily Sheng¹, Kai-Wei Chang², Premkumar Natarajan¹, Nanyun Peng¹,²

¹ Information Sciences Institute, University of Southern California

² Computer Science Department, University of California, Los Angeles

{ewsheng,pnataraj}@isi.edu, {kwchang,violetpeng}@cs.ucla.edu

Abstract into developing NLG techniques.

We emphasize the importance of better under-

Technology for language generation has ad-

standing how societal biases manifest in NLG tech-

vanced rapidly, spurred by advancements in

pre-training large models on massive amounts

niques, because NLG applications directly inter-

of data and the need for intelligent agents to act with many different users to generate novel

communicate in a natural manner. While tech- content in various domains (e.g., chat bots for

niques can effectively generate fluent text, they health, education, and customer support). However,

can also produce undesirable societal biases when techniques are less effective or detrimental

that can have a disproportionately negative im- for marginalized populations, these techniques can

pact on marginalized populations. Language inadvertently become gatekeepers of those popula-

generation presents unique challenges for bi-

tions for generation and associated language tech-

ases in terms of direct user interaction and

the structure of decoding techniques. To bet- nologies. For example, an educational chat bot that

ter understand these challenges, we present a produces more negative responses for topics about

survey on societal biases in language gener- a specific ethnicity will discourage users of that eth-

ation, focusing on how data and techniques nicity from interacting with the chat bot. While it

contribute to biases and progress towards re- is generally important to study the societal impact

ducing biases. Motivated by a lack of studies of NLP and AI techniques, we argue that the direct

on biases from decoding techniques, we also

user impact of NLG techniques makes it especially

conduct experiments to quantify the effects

of these techniques. By further discussing

important to carefully quantify the impact.

general trends and open challenges, we call Motivated by the importance of fairness in lan-

to attention promising directions for research guage generation, we present the first comprehen-

and the importance of fairness and inclusivity sive survey on societal biases in language genera-

considerations for language generation appli- tion. By enumerating how NLG techniques con-

cations. tribute to biases and examining progress towards

bias analysis and mitigation, we contextualize the

1 Introduction

discussion of broader trends and challenges. Specif-

Natural language generation (NLG) is a suite of ically, we focus on techniques for NLG tasks, i.e.,

techniques that enables the generation of human- tasks that generate a sequence of text.1 Finding a

readable language for different goals. These tech- lack of studies on biases from decoding techniques,

niques are the core components of applications we additionally present an experimental study to

such as virtual assistants, chat bots, automatic trans- quantify the effects of various decoding techniques.

lators, summarizers, and creative language com- Before we delve into the details of biases in lan-

posers. Recent advances in techniques for language guage generation, we first position our survey in

generation (e.g., GPT (Radford et al., 2018), GPT-2 the context of other relevant surveys and position

(Radford et al., 2019), GPT-3 (Brown et al., 2020), papers. Sun et al. (2019) present a focused survey

TransformerXL (Dai et al., 2019), XLNet (Yang 1

Although bi-directional language models like BERT (De-

et al., 2019)) powered by Transformers (Vaswani vlin et al., 2019) can also be used for auto-regressive gener-

et al., 2017) and an increasing repository of avail- ation (Wang and Cho, 2019; Chen et al., 2020), traditional

auto-regressive models are still typically of better quality and

able data have created more capable applications. more widely used for generation (Shwartz et al., 2020). Thus,

This has, in turn, channeled more interest and effort we limit the scope of this survey to the latter models.

4275

Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics

and the 11th International Joint Conference on Natural Language Processing, pages 4275–4293

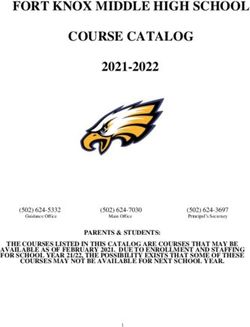

August 1–6, 2021. ©2021 Association for Computational LinguisticsDemo. Dim. NLG Task Works

Gender Autocomplete Bordia and Bowman (2019); Qian et al. (2019); Solaiman et al. (2019); Sheng et al. (2019,

2020); Vig et al. (2020); Yeo and Chen (2020); Brown et al. (2020); Dhamala et al. (2021);

Schick et al. (2021); Nozza et al. (2021); Kirk et al. (2021)

Dialogue Henderson et al. (2018); Dinan et al. (2020a); Liu et al. (2020a,b); Cercas Curry et al. (2020);

Sheng et al. (2021a,b)

MT Vanmassenhove et al. (2018); Elaraby et al. (2018); Prates et al. (2019); Stanovsky et al.

(2019); Escudé Font and Costa-jussà (2019); Cho et al. (2019); Moryossef et al. (2019);

Saunders and Byrne (2020); Saunders et al. (2020); Kocmi et al. (2020); Costa-jussà and

de Jorge (2020); Costa-jussà et al. (2020); Basta et al. (2020); Farkas and Németh (2020);

Stafanovičs et al. (2020); Gonen and Webster (2020); Hovy et al. (2020); Roberts et al.

(2020); Cho et al. (2021); Savoldi et al. (2021); Renduchintala and Williams (2021); Choubey

et al. (2021); Saunders et al. (2021); Tomalin et al. (2021)

Re-writing Habash et al. (2019); Zmigrod et al. (2019); Alhafni et al. (2020); Sun et al. (2021)

Profession Autocomplete Huang et al. (2020); Dhamala et al. (2021)

Race Autocomplete Solaiman et al. (2019); Sheng et al. (2019, 2020); Groenwold et al. (2020); Brown et al.

(2020); Dhamala et al. (2021); Schick et al. (2021); Kirk et al. (2021)

Dialogue Sheng et al. (2021a,b)

Religion Autocomplete Solaiman et al. (2019); Brown et al. (2020); Dhamala et al. (2021); Kirk et al. (2021); Abid

et al. (2021)

Sexuality Autocomplete Sheng et al. (2019, 2020); Kirk et al. (2021)

Dialogue Sheng et al. (2021a)

Other Autocomplete Shwartz et al. (2020); Peng et al. (2020); Huang et al. (2020); Dhamala et al. (2021); Kirk

et al. (2021)

Dialogue Sheng et al. (2021a)

Re-writing Pryzant et al. (2020); Ma et al. (2020)

Table 1: Existing bias studies on different demographic dimensions in various NLG tasks: autocomplete genera-

tion, dialogue generation, machine translation (MT), and text re-writing.

on mitigating gender biases and Shah et al. (2020) that generate text continuations conditioned on

categorize sources of biases—both largely focus some prompt and those that transform text from

on natural language understanding (NLU) tasks, one form to another. Table 1 organizes various

while we examine biases in NLG tasks. Addition- bias-related works for NLG tasks.

ally, Blodgett et al. (2020) urge for more explicitly

tying “biases” in NLP to societal normative defi- 2.1 Continuation Generation Tasks

nitions of biases and social hierarchies; with their The continuation class includes autocomplete and

recommendations in mind, we discuss the negative dialogue generation, where the goal is to generate

impacts of biases in NLG techniques. text that is coherent and relevant to a prompt.

Our contributions are a comprehensive survey

Autocomplete Generation We use the term au-

on societal biases in language generation and an

tocomplete generation to refer to conditional gen-

experimental study on biases from decoding tech-

eration directly from language models. Language

niques. To start, we describe classes of NLG tasks

models are the core components for many NLG

(Sec. 2) and subsequently examine examples of bi-

and NLU tasks, and this task enables directly quan-

ases and harms in NLG (Sec. 3). We then discuss

tifying biases in large, pre-trained language models

NLG techniques that facilitate biases, including a

(Bordia and Bowman, 2019; Sheng et al., 2019;

study of decoding techniques (Sec. 4). Sec. 5 high-

Solaiman et al., 2019; Brown et al., 2020). Exist-

lights progress and challenges, and Sec. 6 presents

ing works analyzing biases in autocomplete gen-

open problems and proposals. We hope this survey

eration have mostly examined Transformer-based

brings more visibility to the importance of carefully

models, including GPT (Shwartz et al., 2020), GPT-

considering different components of NLG pipelines

2 (Solaiman et al., 2019; Sheng et al., 2019, 2020;

for potential biases and mitigation methods.

Shwartz et al., 2020; Vig et al., 2020; Yeo and Chen,

2020; Huang et al., 2020; Dhamala et al., 2021;

2 Language Generation Tasks

Schick et al., 2021), GPT-3 (Brown et al., 2020),

To begin, we categorize generation tasks and in- CTRL (Dhamala et al., 2021), TransformerXL

troduce existing bias studies relevant to each task. (Shwartz et al., 2020; Vig et al., 2020; Huang et al.,

NLG tasks broadly fall into two categories: those 2020), and XLNet (Shwartz et al., 2020; Vig et al.,

42762020; Yeo and Chen, 2020), though Bordia and study Microsoft Translator,4 Amazon Translate,5

Bowman (2019); Qian et al. (2019) also look at and SYSTRAN;6 Cho et al. (2019) additionally

LSTM-based models. look at Naver Papago7 and Kakao Translator,8 and

Dialogue Generation Dialogue generation is Cho et al. (2021) also examine Yandex.9

conditioned on user inputs and can be for spe- Re-writing We use the term re-writing to refer

cific domains (e.g., health, customer service) and to tasks of revising specific words and phrases in

tasks (e.g., behavior intervention, booking flights) the original text to be more aligned with a targeted

or general chit-chat. These dialogue applications attribute. Specifically, there have been studies on

directly interact with users, and any propagated re-inflection (Habash et al., 2019; Zmigrod et al.,

biases directly affect user behavior and actions. 2019; Alhafni et al., 2020) and re-writing text to use

In terms of recurrent dialogue models, Henderson neutral viewpoints (Pryzant et al., 2020), gender-

et al. (2018) analyze biases in hierarchical recur- neutral English (Sun et al., 2021), or more agency

rent encoder-decoder architectures and Liu et al. (Ma et al., 2020). These tasks typically rely on

(2020a,b) analyze LSTM-based encoder-decoder custom encoder-decoder models.

models. Other works on dialogue biases (Dinan

2.3 Other Tasks

et al., 2020a; Sheng et al., 2020, 2021b) focus

on Transformer-based models such as DialoGPT There are other NLG tasks, such as the continua-

(Zhang et al., 2020) and other custom architectures. tion tasks of story and poetry generation, and the

transformation tasks of abstractive summarization

2.2 Transformation Generation Tasks and paraphrase generation. However, these other

NLG tasks are not yet well-studied in the context

The transformation class includes machine trans-

of societal biases.10

lation and various formulations of text re-writing.

The general goal of these tasks is to transform text 3 Biases and their Negative Impacts

into a form with targeted properties.

Machine Translation Translation is the task of In this section, we introduce how existing studies

transforming text between languages while pre- of biases in NLG tasks commonly quantify biases

serving the meaning. Existing works on biases and their negative impacts.

in machine translation have almost exclusively fo- 3.1 Bias Definitions and Metrics

cused on issues of gender biases2 in a variety of

In the context of AI fairness, the term “bias” com-

academic and commercial systems. The use of

monly refers to skews that result in undesirable

grammatical gender in some languages and not in

impacts (Crawford, 2017) and is quantifiable with

others can expose unwanted gender associations

some metric. There are relatively more existing

(e.g., for different occupations) through translation

studies on biases in NLU tasks, where it is arguably

(Prates et al., 2019). Earlier works by Vanmassen-

simpler to define bias metrics, since we can intu-

hove et al. (2018) and Elaraby et al. (2018) study

itively compare the accuracy of the task (e.g., coref-

LSTM-based encoder-decoder translation systems,

erence resolution, hate speech detection) for differ-

and more recent works examine Transformer-based

ent demographics. Language generation tasks often

architectures (Escudé Font and Costa-jussà, 2019;

involve stochastic generation of open-ended and

Stanovsky et al., 2019; Saunders and Byrne, 2020;

lengthy texts, traits that are not directly compatible

Saunders et al., 2020; Costa-jussà and de Jorge,

with traditional algorithmic bias definitions (e.g.,

2020; Basta et al., 2020; Stafanovičs et al., 2020;

4

Renduchintala and Williams, 2021; Choubey et al., https://www.bing.com/translator

5

2021; Saunders et al., 2021; Tomalin et al., 2021). https://aws.amazon.com/translate

6

https://www.systransoft.com

While Google Translate3 has been the most pop- 7

https://papago.naver.com

ular commercial system to analyze for gender bi- 8

https://translate.kakao.com

9

ases (Prates et al., 2019; Moryossef et al., 2019; https://translate.yandex.com

10

Stanovsky et al., 2019; Cho et al., 2019; Farkas Lucy and Bamman (2021) is an exception that analyzes

gender in generated stories. While there are studies of bi-

and Németh, 2020), Stanovsky et al. (2019) also ases in poetry generation and summarization, they focus on

non-NLG biases: Sheng and Uthus (2020) investigate biases

2

For a detailed survey of gender bias in machine transla- in a poetry composition system, but in the context of infor-

tion, we refer readers to Savoldi et al. (2021). mation retrieval; Celis and Keswani (2020) analyze biases in

3

https://translate.google.com extractive summarization.

4277equalized odds, equal opportunity, demographic pacts. We survey detrimental representational11

parity (Dwork et al., 2012; Hardt et al., 2016)). and allocational12 impacts (Crawford, 2017; Baro-

Because of the difficulty in defining metrics, ex- cas et al., 2017; Blodgett et al., 2020) used to moti-

isting works define bias loosely as demographic vate existing studies of bias in NLG tasks, finding

inequality and use intermediate proxy metrics to limited examples. While representational impacts

comparatively measure bias. Examples include: are sometimes cited, it is difficult to measure the

• Regard Ratio: negative-neutral-positive regard extent of the impacts. Additionally, techniques

score ratios of text generated from bias-inducing for effective NLG are relatively new, and existing

prompts (Sheng et al., 2019) studies have limited knowledge of potential alloca-

• Sentiment Ratio: negative-neutral-positive sen- tional impacts. Finally, biases in NLG tasks give

timent score ratios of text generated from African rise to a third type of negative impacts, which we

American English (AAE) versus White-Aligned call vulnerability impacts.

English (WAE) prompts (Groenwold et al., 2020) Representational Impacts The works in Ta-

• Individual and Group Fairness through Sen- ble 1 motivate (to varying degrees) studying bi-

timent: comparisons of the sentiment distribu- ases in NLG through potential negative representa-

tions of generated text across demographics and tional impacts, in the form of propagating stereo-

prompts (Huang et al., 2020) types, misrepresentations, or denigrations of social

• Gendered Word Co-occurrence Score: mean groups. For example, Sheng et al. (2019) enumer-

and standard deviations of the absolute log ra- ate how generated text can propagate varying social

tio of probabilities: P(word|female terms) to perceptions of different demographics, and Prates

P(word|male terms) across all words in gener- et al. (2019) discuss how occupation-related gender

ated text (Bordia and Bowman, 2019) biases could propagate stereotypes in translation.

There are also metrics for other bias evaluation However, it is difficult to quantify the effects of rep-

setups in continuation generation tasks involving resentational impacts;13 while such impacts may be

sentiment (Shwartz et al., 2020), the ratio of gen- measured indirectly (e.g. by analyzing allocational

dered words (Solaiman et al., 2019; Vig et al., 2020; impacts), we suggest long-term, interdisciplinary

Dinan et al., 2020a), and other novel metrics (Peng collaborations to explore the direct effects of these

et al., 2020; Yeo and Chen, 2020). Studies of biases representational impacts.

in transformation generation tasks favor metrics of Allocational Impacts Harmful allocational im-

accuracy in terms of successfully transforming text pacts result from an unequal allocation of resources

to have a desired property. We present a more thor- across groups. Since effective NLG techniques

ough comparison of metrics in Section 5.4. based on large Transformer models (Vaswani et al.,

Bias metrics can also be categorized by how they 2017) are relatively new, most of the existing works

define associations between demographic group at- on biases in NLG that list possible impacts only

tributes and text. Biases can be towards people analyze direct representational consequences. A

described in text, people who produce the text, real example of a negative allocational impact is

or people to whom the text is addressed (Dinan when machine translation errors lead to arrests

et al., 2020b). Most existing works define bias (Ong, 2017). In general, technologies that are less

metrics through the first association—these biases effective or detrimental for certain populations be-

are relatively easier to analyze, since both the de- come barriers that actively prevent those popula-

mographic and the textual signals of bias are en- tions from using the technology, leading to dimin-

capsulated within the text. There are also works ished opportunities in jobs, education, health, etc.

that define biases towards people who produce the We discuss more details in Section 4.5. With contin-

text (Groenwold et al., 2020) or people to whom uous technological advances, more organizations

the text is addressed (Sheng et al., 2021b), though will turn to effective NLG techniques, making it

there are relatively fewer works that study these imperative to start setting norms to reduce harmful

latter associations. allocational impacts (Tamkin et al., 2021).

3.2 Negative Impacts 11

Unfair representations of different groups

12

Unfair allocation of resources

Biases in NLG techniques are important to study 13

Kay et al. (2015) is a rare example that explicitly studies

because they can result in harmful, negative im- the effect of representational impacts in image search.
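As a concrete illustration of the gendered word co-occurrence score listed among the metrics in Section 3.1, the following is a minimal sketch rather than the implementation of Bordia and Bowman (2019). It assumes whitespace-tokenized, lowercased text, a fixed symmetric context window, tiny illustrative word lists, and add-one smoothing; the names `FEMALE_TERMS`, `MALE_TERMS`, `cooccurrence_counts`, and `gendered_cooccurrence_score` are hypothetical and chosen only for this example.

```python
import math
from collections import Counter
from statistics import mean, pstdev

# Illustrative word lists (assumption): real studies use much larger lexicons.
FEMALE_TERMS = {"she", "her", "woman", "women"}
MALE_TERMS = {"he", "his", "him", "man", "men"}


def cooccurrence_counts(sentences, targets, window=5):
    """Count how often each non-gendered word appears within `window`
    tokens of any word in `targets`."""
    gendered = FEMALE_TERMS | MALE_TERMS
    counts = Counter()
    for tokens in sentences:
        for i, tok in enumerate(tokens):
            if tok not in targets:
                continue
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i and tokens[j] not in gendered:
                    counts[tokens[j]] += 1
    return counts


def gendered_cooccurrence_score(sentences, window=5, smoothing=1.0):
    """Return (mean, standard deviation) of |log(P(w|female) / P(w|male))|
    over all non-gendered words that co-occur with a gendered term."""
    f = cooccurrence_counts(sentences, FEMALE_TERMS, window)
    m = cooccurrence_counts(sentences, MALE_TERMS, window)
    vocab = set(f) | set(m)
    if not vocab:
        return 0.0, 0.0
    # Add-one smoothing (assumption) keeps the log ratio finite when a word
    # co-occurs with only one of the two groups.
    f_total = sum(f.values()) + smoothing * len(vocab)
    m_total = sum(m.values()) + smoothing * len(vocab)
    scores = []
    for w in vocab:
        p_f = (f[w] + smoothing) / f_total
        p_m = (m[w] + smoothing) / m_total
        scores.append(abs(math.log(p_f / p_m)))
    return mean(scores), pstdev(scores)
```

Under these assumptions, a score of zero means every word co-occurs equally often with female and male terms, which matches the description of this metric as an absolute score with a target value of zero. The choice of window size and smoothing constant changes the numbers, so scores are only comparable across systems when both are held fixed.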

Vulnerability Impacts. Open-domain generation tasks can amplify a group’s vulnerability to manipulation and harm, which is an intermediate impact that makes a group more susceptible to representational and allocational impacts. For example, privacy-related issues (Carlini et al., 2020), misinformation (Levy et al., 2021), or radicalizing views in generated text could make a group more likely to be attributed to specific stereotypes (e.g., through action guided by misinformation) or end up with diminished opportunities (e.g., by having personal data exposed and misused). Separately identifying vulnerability impacts could help facilitate recognition of other negative impacts.

4 Contributors to NLG Biases

In a pipeline from data collection to evaluation for an NLG task, each component could propagate biases.¹⁴ We emphasize the ways in which data, model architecture, decoding, evaluation, and deployment uniquely exacerbate biases in generation tasks. Additionally, we present an empirical study to show how measured biases in generated text can vary based on decoding technique.

¹⁴ Task formulation and application deployment are also part of NLG task pipelines (Kiritchenko et al., 2020), though we do not focus on biases in these areas.

4.1 Biases from Data

Modern NLP models often rely on large pre-trained language models, which in turn rely on a large collection of data to learn explicit and implicit associations. Several recent pre-trained language models used for NLG tasks, e.g., T5 (Raffel et al., 2020) and GPT-3 (Brown et al., 2020), are trained on the largest datasets used for any models. These large models for generation are commonly trained on web data, which is known to contain biased language (e.g., Ferrer et al. (2021) discover gender, religion, and ethnic biases in Reddit communities). While preprocessing is often included to filter out malformatted data and explicitly negative content (e.g., bad words and offensive phrases), those are generally the only efforts to reduce biases and associated impacts. Furthermore, by filtering out all words deemed “bad”, Bender et al. (2021) warn that we remove the discourse of marginalized populations. Paullada et al. (2020), Bender and Friedman (2018), and Gebru et al. (2018) provide more comprehensive surveys and frameworks that focus on aspects of data creation and management that could lead to biases, and we refer readers to their works for more discussion. In the context of translation, Cho et al. (2021) find that more data can increase translation fluency but may also make the system more biased.

4.2 Biases from Model Architecture

There are relatively few studies that examine model architectural properties that could lead to biases. We discuss the few efforts towards understanding model biases in NLG tasks and emphasize the need for more to generalize. For autocomplete generation, Vig et al. (2020) analyze GPT-2 variants through a causal mediation analysis, finding that larger models contain more gender bias, and bias tends to be concentrated in a small number of neurons and attention heads. Silva et al. (2021) observe amplified biases in distilled versus original models. For machine translation, Costa-jussà et al. (2020) note that language-specific architectures are less biased because they encode more gender information than shared language encoder-decoder architectures. Studies like the aforementioned are useful for designing targeted bias mitigation methods (e.g., controlled generation to target specific attention heads or regularization to retain gender information). However, more evidence would be needed to generalize findings across models.¹⁵

¹⁵ We also refer the reader to the work of Park et al. (2018) that discusses biases in NLU tasks from model components that “attend” to specific words (e.g., through attention or pooling), which could be applicable to NLG tasks as well.

4.3 Biases from Decoding

While NLU and NLG models have structural similarities, NLG tasks uniquely use search or sampling techniques at inference time to generate text. Popular techniques include:

• Greedy Search: at each time step, choose the word with the highest probability.

• Beam Search: at each time step, keep the top b hypotheses with highest probabilities; eventually pick the hypothesis with the highest probability.

• Top-k sampling (Fan et al., 2018): at each time step, re-distribute the probability mass of the top k words with highest probabilities and sample.

• Nucleus sampling (Holtzman et al., 2019): at each time step, re-distribute the probability mass of the smallest set of words with a cumulative probability exceeding p and sample.

More constrained forms of generation such as machine translation generally use variations of beam search; however, preferred decoding techniques are more varied for open-domain generation. Despite variations in fluency and diversity between deterministic versus stochastic, search versus sampling procedures, there are limited studies (Roberts et al., 2020) on how different decoding properties affect biases in generation.

A Study on Biases from Decoding. To study how decoding techniques affect biases in generation, we use existing NLG bias metrics to evaluate text generated from different decoding methods.¹⁶ We examine autocomplete generations from GPT, GPT-2, and XLNet, using the decoding techniques from Section 4.3. We evaluate with the following bias metrics: regard ratios (Sheng et al., 2019), sentiment ratios (Groenwold et al., 2020), individual and group fairness through sentiment scores (Huang et al., 2020), and gendered word co-occurrence scores (Bordia and Bowman, 2019) (as introduced in Section 3). More experimental details can be found in the Appendix.

¹⁶ Code at https://github.com/ewsheng/decoding-biases.

In Section 5.4, we distinguish between relative and absolute score metrics to examine evaluation differences between NLG tasks. Here, we organize our results into these categories to generalize trends about decoding techniques. The ratio-based metrics are relative score metrics, since evaluation relies on comparing ratios between demographics. The latter three metrics are absolute score metrics that have target values of zero indicating no bias.

For the relative score metrics, search and sampling techniques generate similar outcomes. An interesting result between sampling techniques for the regard metric is that nucleus sampling is less biased yet more negative than top-k sampling. For the absolute score metrics, we find that beam search is the most unbiased technique, closely followed by greedy search and then top-k and nucleus sampling. Through our study, we discover that text diversity is not accounted for in any of the bias metrics, yet diversity can be a confounding factor. Specifically, beam search is the least diverse,¹⁷ followed by greedy search, top-k sampling, then nucleus sampling. Results indicate that the less diverse search techniques lead to better scores for individual fairness, group fairness, and gendered word co-occurrence ratios.

¹⁷ We report average generated text length and vocabulary sizes to estimate diversity in Appendix Table 4.

We hope these experimental results will encourage researchers to document sampling techniques, consider how metrics can be formulated to evaluate both bias and other factors of generation quality, and inspire more comprehensive studies.¹⁸

¹⁸ Results are summarized in Appendix Tables 2, 3, and 5.

4.4 Biases from Evaluation

Biases can arise from both general evaluations and bias evaluations for NLG tasks.

General Evaluations. Current standards for NLG evaluation can reinforce certain types of language and penalize others. For example, using perplexity as measured by models pre-trained on datasets largely containing non-AAE text leads to an unfair evaluation of AAE text. Additionally, the subjectivity of generation tasks means that much of NLG evaluation depends on human labels. Since humans from different backgrounds are accustomed to different societal norms and linguistic variations, the choice of human annotators could drastically influence the evaluation standards for generated text.

Bias Evaluations. It is difficult to evaluate societal biases in NLG tasks because NLG can be open-domain, and there are many different notions of biases from various backgrounds and cultures (Sambasivan et al., 2021). These factors lead to the use of a variety of metrics to evaluate biases (Section 3). To avoid experimental bias in evaluation, we recommend using multiple metrics to cover many types of biases at various granularities. We identify three points to emphasize the need for more comprehensive evaluations. First, most existing works on biases in generation center around one demographic dimension (often gender and from a Western perspective, e.g., using standard Western occupations). While there has been no comprehensive study on whether mitigating biases for one demographic dimension (e.g., gender) may exacerbate biases for others (e.g., race, intersectional identities), this is a possibility we must consider. Second, most works only evaluate bias through a single intermediate proxy; however, different metrics are defined at different granularities (e.g., sentiment is sentence-level, gendered word ratio is word-level). Finally, different evaluation datasets test for specific types of biases and are influenced by the backgrounds of the curators. Collectively evaluating biases across demographic dimensions and granularities can thus help reduce experimentally-biased evaluations.
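To make the four decoding techniques listed in Section 4.3 concrete, the sketch below implements one step of greedy search, top-k sampling, and nucleus sampling over a plain list of next-word probabilities, plus a small beam search loop. It is an illustration under stated assumptions, not the code used in the study: all function names are hypothetical, words are represented by integer indices, and `next_probs(prefix)` stands in for a language model that returns a probability list for the next word.

```python
import math
import random


def greedy(probs):
    """Greedy search step: return the index of the most probable word."""
    return max(range(len(probs)), key=probs.__getitem__)


def top_k_filter(probs, k):
    """Top-k step: keep the k most probable words and renormalize."""
    keep = sorted(range(len(probs)), key=probs.__getitem__, reverse=True)[:k]
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}


def nucleus_filter(probs, p):
    """Nucleus step: keep the smallest set of most probable words whose
    cumulative probability reaches p, then renormalize."""
    order = sorted(range(len(probs)), key=probs.__getitem__, reverse=True)
    keep, cumulative = [], 0.0
    for i in order:
        keep.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}


def sample(dist, rng):
    """Draw one word index from a {index: probability} distribution."""
    indices = list(dist)
    return rng.choices(indices, weights=[dist[i] for i in indices], k=1)[0]


def beam_search(next_probs, steps, beam_width):
    """Keep the `beam_width` highest-scoring prefixes at every step and
    return the best one. `next_probs(prefix)` is a hypothetical model
    interface returning a list of next-word probabilities."""
    beams = [((), 0.0)]  # (prefix as a tuple of indices, log-probability)
    for _ in range(steps):
        candidates = []
        for prefix, score in beams:
            for idx, prob in enumerate(next_probs(prefix)):
                if prob > 0.0:
                    candidates.append((prefix + (idx,), score + math.log(prob)))
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
    return beams[0][0]
```

Greedy and beam search are deterministic given the model, while the two sampling variants draw from a truncated and renormalized distribution, so repeated runs with different seeds produce different text. That difference in output diversity is the property the study above identifies as a possible confounding factor for the bias metrics.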

42804.5 Biases from Deploying Systems Curated Datasets Existing datasets to study bi-

ases in translation include parallel sentences tagged

In terms of deploying NLG systems, there is a

with speaker or subject gender information (Van-

feedback loop that benefits some communities and

massenhove et al., 2018; Habash et al., 2019) and

further disadvantages others. While this feedback

datasets to study gender biases when translating

loop is not unique to NLG systems, these systems

from neutral references of a person (e.g., nurse in

that directly interact with users make good caution-

English, gender-neutral pronouns) to gendered in-

ary examples.

stances (e.g., enfermera or enfermero in Spanish,

First, many deployed language technologies re-

gendered pronouns) (Cho et al., 2019; Stanovsky

quire internet access both to use and contribute

et al., 2019; Gonen and Webster, 2020; Kocmi et al.,

feedback, thus favoring the views and languages of

2020). Renduchintala and Williams (2021) addi-

those privileged with this access. For example, any-

tionally provide a dataset to study translation of

one can contribute feedback to Google Translate, but if contributions and subsequent improvements are focused on high-resource languages, this further increases the accuracy gap between the high- and low-resource languages, diminishing opportunities for speakers of the low-resource languages, i.e., representation disparity (Hashimoto et al., 2018).

Second, those who are unable to achieve their goals from using these language technologies (e.g., unsuccessful translation, unhelpful or offensive chat bot) are less likely to continue using the technology. This means that there is less feedback and data to improve the technologies, reinforcing the decreased effectiveness for certain populations, i.e., disparity amplification (Hashimoto et al., 2018).

One way we might intervene is to follow a more targeted approach for data and feedback collection, e.g., from excluded populations. However, we acknowledge that this remains a difficult task and that it is also necessary to be aware of “community goals” and other factors in order to co-design language technologies without inflicting additional harm on marginalized populations (Bird, 2020).

5 Progress, Trends, and Challenges

Following the discussion of contributors to biases, we survey trends and challenges for reducing biases in NLG.

5.1 Data Methods

Data-based methods for both bias analysis and mitigation use the general idea of counterfactual data augmentation (CDA) (Lu et al., 2020) to curate sets of counterfactual prompts. A common method for analysis is using targeted prompts to induce NLG models to reveal biases. For data-based mitigation, existing works focus on fine-tuning large models or training smaller models with datasets that are balanced with respect to targeted demographics.

neutral references in unambiguous contexts. Other works present parallel corpora of biased versus unbiased framings and presuppositions (Pryzant et al., 2020) and AAE versus WAE equivalents (Groenwold et al., 2020). Sheng et al. (2019), Huang et al. (2020), and Dhamala et al. (2021) additionally curate sets of prompts that can be used to evaluate biases in autocomplete generation.

Bias Analysis. Most bias analyses of NLG tasks use prompts to probe for different biases in generated text, e.g., regarding social perception (Sheng et al., 2019), gender in translation (Prates et al., 2019), names (Shwartz et al., 2020), sentiment distribution (Huang et al., 2020), dialects (Groenwold et al., 2020), dialogue personas (Sheng et al., 2021a), or other notions of similarity across demographics (Yeo and Chen, 2020; Henderson et al., 2018). Vig et al. (2020) also use prompts to investigate gender biases, though they do so in the context of a causal mediation analysis. Furthermore, Prates et al. (2019) and Farkas and Németh (2020) compare pronoun gender biases in translations (induced with prompts) to real-world statistics.

Bias Mitigation. Methods can broadly be classified into two categories based on the type of data applied. The first category encompasses methods that fine-tune or train on a balanced dataset to lessen the effects of the model relying on spurious correlations between imbalanced data and task performance. CDA has been applied to datasets used for continued or fresh training in dialogue generation (Dinan et al., 2020a; Liu et al., 2020a) as well as machine translation (Saunders and Byrne, 2020; Costa-jussà and de Jorge, 2020; Stafanovičs et al., 2020). The second category is methods that attach a short prefix at training time (Vanmassenhove et al., 2018; Basta et al., 2020; Alhafni et al., 2020) or inference time (Moryossef et al., 2019).

Challenges. The size of state-of-the-art pre-trained models and varying definitions of biases
in generation present difficulties for creating standardized datasets that are generally effective across biases and demographics. Moreover, it remains to be seen whether data-based mitigation is as effective for open-domain NLG tasks as it is for more constrained settings.

5.2 Training Methods

In addition to data-based mitigation, training-based mitigation is another popular class of methods to reduce biases in generation.

Bias Mitigation. Several works that use training-based mitigation techniques rely on regularization (Bordia and Bowman, 2019; Qian et al., 2019; Huang et al., 2020; Liu et al., 2020a; Saunders and Byrne, 2020). There are also works that induce control by incorporating a bias control code through conditional training (Dinan et al., 2020a), by appending a target value to inputs during training (Ma et al., 2020), by using a normative classifier to produce reward values for backpropagation (Peng et al., 2020), or through adversarial training (Liu et al., 2020b). Other techniques include using debiased word embeddings (Escudé Font and Costa-jussà, 2019), identifying and editing out subjective words (Pryzant et al., 2020), and using Markov random fields to preserve morpho-syntactic agreement during reinflection (Zmigrod et al., 2019).

Challenges. The main challenge of bias mitigation through training methods is that it is costly and impractical to re-train models for new biases encountered. In fact, most of the techniques that rely on training from scratch use smaller architectures (exceptions are from larger institutions).

5.3 Inference Methods

While the existing literature on inference-time methods for bias mitigation is sparse, decoding-based methods are a promising alternative to data- and training-based methods. Specifically, these methods are compatible with any pre-trained language model for generation without additional training. Given recent development of inference-time methods for control that can reduce toxicity (e.g., PPLM (Dathathri et al., 2019), GeDi (Krause et al., 2020), DExperts (Liu et al., 2021)), there is potential for extending these methods to bias mitigation.

Bias Mitigation. For autocomplete and dialogue generation, Sheng et al. (2020) formulate bias triggers using gradient-based methods of Wallace et al. (2019). These triggers are appended to prompts during inference time to control text generation to be more equalized towards different demographics. For translation, Saunders and Byrne (2020) present a lattice rescoring procedure that creates gender-inflected search spaces to rescore text for more accurate translations, and Saunders et al. (2021) subsequently use this lattice structure to present more gendered options during beam search and rerank translation hypotheses according to gender criteria. For dialogue generation, Sheng et al. (2021b) introduce a constrained decoding method that uses n-gram similarity to guide generation away from ad hominems towards marginalized groups. For autocomplete generation, Schick et al. (2021) present a self-debiasing scheme that re-weights word probabilities to generate fewer undesirable words.

Challenges. Control methods at inference time could potentially steer the model into degenerate spaces, so it is important to also evaluate these methods for coherence, fluency, and task relevance.

5.4 Evaluation Methods

There are two types of evaluations: those that rely on absolute scores and those that rely on relative scores. Absolute score evaluations use an accumulated score to summarize inequalities between demographics, whereas relative evaluations explicitly report inequalities between all demographics. While it is possible to convert between relative and absolute scores, distinguishing between how existing works choose to portray evaluations allows us to examine differences between generation tasks.

Absolute Evaluations. We find that the transformation class of generation tasks favors bias evaluation through absolute metrics, which is possible because these tasks involve relatively more constrained forms of generation. Examples of evaluation objectives through absolute scores include Peng et al. (2020) reducing non-normative generations, Ma et al. (2020) increasing the accuracy of the change in agency, Zmigrod et al. (2019) increasing the number of correct inflections, Huang et al. (2020) reducing individual and group fairness scores, and Sheng et al. (2021b) reducing the amount of ad hominems towards marginalized groups. Studies of gender bias in machine translation are well-suited to evaluations using absolute scores: many use BLEU and its variants to evaluate correct gender inflections and translations (Moryossef et al., 2019; Escudé Font and Costa-jussà, 2019; Elaraby et al., 2018; Habash et al., 2019; Alhafni et al., 2020) or accuracy on WinoMT (Saunders and Byrne, 2020; Saunders et al., 2020;
Kocmi et al., 2020; Costa-jussà and de Jorge, 2020; Costa-jussà et al., 2020; Basta et al., 2020; Choubey et al., 2021; Saunders et al., 2021).

Relative Evaluations. In terms of evaluation through relative scores, examples from existing works are mainly from continuation generation tasks. We infer that the less constrained, open-domain nature of continuation generation tasks makes it preferable to evaluate mitigation through more flexible comparisons rather than absolute scores. For autocomplete generation, Sheng et al. (2019, 2020) and Groenwold et al. (2020) compare regard or sentiment scores across demographics, Shwartz et al. (2020) compare names across various intermediate metrics, Vig et al. (2020) measure proportional differences between the amount of bias under a gendered versus ambiguous reading, and Yeo and Chen (2020) compare occupations generated for different genders. Bias studies in dialogue generation use relative scores by comparing sentiment and offensive language discrepancies (Henderson et al., 2018; Liu et al., 2020a,b) and the percentage of gendered words (Dinan et al., 2020a).

Challenges. A trade-off between framing biases as a relative or absolute metric is that relative metrics can be more flexibly aligned to normative concerns like social perception. Absolute metrics that look for ratios of gendered words or other indicator words assume that there is a set of words that captures all the differences between demographic groups, regardless of whether these differences are related to normative definitions of harm. There are also absolute metrics such as those of Huang et al. (2020) that can incorporate intermediate metrics that are more aligned with normative behavior, though these metrics reduce the notion of biases to a single value, which could erase historical inequalities between groups.

6 Open Problems and Proposals

As a fairly nascent area of exploration, the study of biases in language generation still poses many challenges. Throughout this paper, we discuss challenges associated with different components in a generation pipeline. With a heightened awareness of the relevant body of work, we conclude with recommendations for open problems.

Bias-Aware Data Curation. Many works have highlighted the harms and problems when collecting training datasets with limited awareness of potential harms. Since effective models for NLG tasks are correlated with increasing training data sizes, biases in data collection (e.g., English-centric, drawn from popular Western media) remain a major contributor to biases that manifest in generation. Additionally, datasets used to study biases in generation can also be limited (e.g., only for binary gender classes). For more bias-aware data curation, we suggest diversifying datasets to include more viewpoints from various groups.

Understanding Trade-Offs. Different methods for analysis, mitigation, and evaluation have unique trade-offs. Existing works have been relatively small-scale and limited to a small number of biases for specific tasks. Some useful questions to consider when developing methods to study generation biases are whether we can generalize methods to a diverse set of biases and a wide range of contexts. It is also important to consider formulating metrics that would jointly mitigate biases and preserve other desired text qualities (e.g., diversity, fluency).

Interactive and Continuous Learning. The difficulties of measuring and mitigating biases in generation can be reduced with a general framework for interactive and continuous learning. Over time, such a system could learn from diverse opinions of what constitutes “fair” versus “unfair” generations across tasks. A unified framework would centralize and highlight the importance of studying biases in generation, as well as fuel the development of a more comprehensive set of evaluations that may be useful for large-scale studies of impact.

Focusing on Negative Impacts. Section 3 discusses how there are very few existing works on biases that explicitly and meaningfully engage with resulting negative impacts, even though these impacts are what motivate reducing biases. By re-framing efforts on reducing negative impacts rather than biases, we may be able to define metrics and progress that better correlate with reducing harm. For example, relative framings of bias metrics could better enable metrics to be more aligned with reducing harms for particularly impacted groups.

Acknowledgments

We would like to thank Seraphina Goldfarb-Tarrant, Sunipa Dev, Jason Teoh, members of the Plus Lab, and our anonymous reviewers for the many helpful suggestions that went into this paper.

Ethics and Broader Implications

In this work, we present a survey and commentary on the progress and challenges for studying societal biases in language generation.

Data. We do not check the quality of the datasets used to train popular language generation models (due to limited availability and size), though we do briefly mention problems that other works have found regarding using large datasets that have been minimally filtered. Some of the surveyed datasets and metrics that are used for evaluating biases approximate binary genders using names typical of specific genders, and may be better re-formulated to avoid harms and curate a more accurate representation of different genders. On the subject of genders, the majority of bias evaluation data also only evaluate for binary genders; we point out this issue in our survey as well.

Techniques. Most of the techniques surveyed in this work are trained with or bias-tested with data drawn from Western sources or culture, since that is largely the focus of the existing body of work. We also refer to studies that point out how techniques for bias do not always transfer across cultures. Our decoding experiments could potentially fuel misuse by giving those with adversarial interests a better understanding of how decoding algorithms could thwart bias metrics, though we believe transparency around these results outweighs the potential for misuse.

References

Abubakar Abid, Maheen Farooqi, and James Zou. 2021. Persistent anti-Muslim bias in large language models. arXiv preprint arXiv:2101.05783.

Bashar Alhafni, Nizar Habash, and Houda Bouamor. 2020. Gender-aware reinflection using linguistically enhanced neural models. In Proceedings of the Second Workshop on Gender Bias in Natural Language Processing, pages 139–150, Barcelona, Spain (Online). Association for Computational Linguistics.

Solon Barocas, Kate Crawford, Aaron Shapiro, and Hanna Wallach. 2017. The problem with bias: Allocative versus representational harms in machine learning. In 9th Annual Conference of the Special Interest Group for Computing, Information and Society.

Christine Basta, Marta R. Costa-jussà, and José A. R. Fonollosa. 2020. Towards mitigating gender bias in a decoder-based neural machine translation model by adding contextual information. In Proceedings of the Fourth Widening Natural Language Processing Workshop, pages 99–102, Seattle, USA. Association for Computational Linguistics.

Emily M. Bender and Batya Friedman. 2018. Data statements for natural language processing: Toward mitigating system bias and enabling better science. Transactions of the Association for Computational Linguistics, 6:587–604.

Emily M Bender, Timnit Gebru, Angelina McMillan-Major, and Shmargaret Shmitchell. 2021. On the dangers of stochastic parrots: Can language models be too big? Proceedings of FAccT.

Steven Bird. 2020. Decolonising speech and language technology. In Proceedings of the 28th International Conference on Computational Linguistics, pages 3504–3519, Barcelona, Spain (Online). International Committee on Computational Linguistics.

Su Lin Blodgett, Solon Barocas, Hal Daumé III, and Hanna Wallach. 2020. Language (technology) is power: A critical survey of “bias” in NLP. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 5454–5476, Online. Association for Computational Linguistics.

Shikha Bordia and Samuel R. Bowman. 2019. Identifying and reducing gender bias in word-level language models. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Student Research Workshop, pages 7–15, Minneapolis, Minnesota. Association for Computational Linguistics.

Tom B Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, et al. 2020. Language models are few-shot learners. arXiv preprint arXiv:2005.14165.

Nicholas Carlini, Florian Tramer, Eric Wallace, Matthew Jagielski, Ariel Herbert-Voss, Katherine Lee, Adam Roberts, Tom Brown, Dawn Song, Ulfar Erlingsson, et al. 2020. Extracting training data from large language models. arXiv preprint arXiv:2012.07805.

L Elisa Celis and Vijay Keswani. 2020. Dialect diversity in text summarization on Twitter. arXiv preprint arXiv:2007.07860.

Amanda Cercas Curry, Judy Robertson, and Verena Rieser. 2020. Conversational assistants and gender stereotypes: Public perceptions and desiderata for voice personas. In Proceedings of the Second Workshop on Gender Bias in Natural Language Processing, pages 72–78, Barcelona, Spain (Online). Association for Computational Linguistics.

Yen-Chun Chen, Zhe Gan, Yu Cheng, Jingzhou Liu, and Jingjing Liu. 2020. Distilling knowledge learned in BERT for text generation. In Proceedings of the 58th Annual Meeting of the Association for
Computational Linguistics, pages 7893–7905, Online. Association for Computational Linguistics.

Won Ik Cho, Ji Won Kim, Seok Min Kim, and Nam Soo Kim. 2019. On measuring gender bias in translation of gender-neutral pronouns. In Proceedings of the First Workshop on Gender Bias in Natural Language Processing, pages 173–181, Florence, Italy. Association for Computational Linguistics.

Won Ik Cho, Jiwon Kim, Jaeyeong Yang, and Nam Soo Kim. 2021. Towards cross-lingual generalization of translation gender bias. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, pages 449–457.

Prafulla Kumar Choubey, Anna Currey, Prashant Mathur, and Georgiana Dinu. 2021. Improving gender translation accuracy with filtered self-training. arXiv preprint arXiv:2104.07695.

Marta R Costa-jussà, Carlos Escolano, Christine Basta, Javier Ferrando, Roser Batlle, and Ksenia Kharitonova. 2020. Gender bias in multilingual neural machine translation: The architecture matters. arXiv preprint arXiv:2012.13176.

Marta R. Costa-jussà and Adrià de Jorge. 2020. Fine-tuning neural machine translation on gender-balanced datasets. In Proceedings of the Second Workshop on Gender Bias in Natural Language Processing, pages 26–34, Barcelona, Spain (Online). Association for Computational Linguistics.

Kate Crawford. 2017. The trouble with bias. Keynote at NeurIPS.

Zihang Dai, Zhilin Yang, Yiming Yang, Jaime Carbonell, Quoc Le, and Ruslan Salakhutdinov. 2019. Transformer-XL: Attentive language models beyond a fixed-length context. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 2978–2988, Florence, Italy. Association for Computational Linguistics.

Sumanth Dathathri, Andrea Madotto, Janice Lan, Jane Hung, Eric Frank, Piero Molino, Jason Yosinski, and Rosanne Liu. 2019. Plug and play language models: A simple approach to controlled text generation. In International Conference on Learning Representations.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186, Minneapolis, Minnesota. Association for Computational Linguistics.

Jwala Dhamala, Tony Sun, Varun Kumar, Satyapriya Krishna, Yada Pruksachatkun, Kai-Wei Chang, and Rahul Gupta. 2021. BOLD: Dataset and metrics for measuring biases in open-ended language generation. Proceedings of FAccT.

Emily Dinan, Angela Fan, Adina Williams, Jack Urbanek, Douwe Kiela, and Jason Weston. 2020a. Queens are powerful too: Mitigating gender bias in dialogue generation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 8173–8188, Online. Association for Computational Linguistics.

Emily Dinan, Angela Fan, Ledell Wu, Jason Weston, Douwe Kiela, and Adina Williams. 2020b. Multi-dimensional gender bias classification. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 314–331, Online. Association for Computational Linguistics.

Cynthia Dwork, Moritz Hardt, Toniann Pitassi, Omer Reingold, and Richard Zemel. 2012. Fairness through awareness. In Proceedings of the 3rd Innovations in Theoretical Computer Science Conference, pages 214–226.

Mostafa Elaraby, Ahmed Y Tawfik, Mahmoud Khaled, Hany Hassan, and Aly Osama. 2018. Gender aware spoken language translation applied to English-Arabic. In 2018 2nd International Conference on Natural Language and Speech Processing (ICNLSP), pages 1–6. IEEE.

Joel Escudé Font and Marta R. Costa-jussà. 2019. Equalizing gender bias in neural machine translation with word embeddings techniques. In Proceedings of the First Workshop on Gender Bias in Natural Language Processing, pages 147–154, Florence, Italy. Association for Computational Linguistics.

Angela Fan, Mike Lewis, and Yann Dauphin. 2018. Hierarchical neural story generation. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 889–898.

Anna Farkas and Renáta Németh. 2020. How to measure gender bias in machine translation: Optimal translators, multiple reference points. arXiv preprint arXiv:2011.06445.

Xavier Ferrer, Tom van Nuenen, Jose M Such, and Natalia Criado. 2021. Discovering and categorising language biases in Reddit. In Proceedings of the International AAAI Conference on Web and Social Media, volume 15.

Timnit Gebru, Jamie Morgenstern, Briana Vecchione, Jennifer Wortman Vaughan, Hanna Wallach, Hal Daumé III, and Kate Crawford. 2018. Datasheets for datasets. arXiv preprint arXiv:1803.09010.

Hila Gonen and Kellie Webster. 2020. Automatically identifying gender issues in machine translation using perturbations. In Findings of the Association for Computational Linguistics: EMNLP 2020, pages 1991–1995, Online. Association for Computational Linguistics.

Sophie Groenwold, Lily Ou, Aesha Parekh, Samhita Honnavalli, Sharon Levy, Diba Mirza, and William Yang Wang. 2020. Investigating African-American Vernacular English in transformer-based text generation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 5877–5883, Online. Association for Computational Linguistics.

Nizar Habash, Houda Bouamor, and Christine Chung. 2019. Automatic gender identification and reinflection in Arabic. In Proceedings of the First Workshop on Gender Bias in Natural Language Processing, pages 155–165, Florence, Italy. Association for Computational Linguistics.

Moritz Hardt, Eric Price, and Nati Srebro. 2016. Equality of opportunity in supervised learning. In Advances in Neural Information Processing Systems, pages 3315–3323.

Tatsunori Hashimoto, Megha Srivastava, Hongseok Namkoong, and Percy Liang. 2018. Fairness without demographics in repeated loss minimization. In International Conference on Machine Learning, pages 1929–1938. PMLR.

Peter Henderson, Koustuv Sinha, Nicolas Angelard-Gontier, Nan Rosemary Ke, Genevieve Fried, Ryan Lowe, and Joelle Pineau. 2018. Ethical challenges in data-driven dialogue systems. In Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society, pages 123–129.

Ari Holtzman, Jan Buys, Li Du, Maxwell Forbes, and Yejin Choi. 2019. The curious case of neural text degeneration. In International Conference on Learning Representations.

Dirk Hovy, Federico Bianchi, and Tommaso Fornaciari. 2020. “You sound just like your father” Commercial machine translation systems include stylistic biases. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 1686–1690, Online. Association for Computational Linguistics.

Po-Sen Huang, Huan Zhang, Ray Jiang, Robert Stanforth, Johannes Welbl, Jack Rae, Vishal Maini, Dani Yogatama, and Pushmeet Kohli. 2020. Reducing sentiment bias in language models via counterfactual evaluation. In Findings of the Association for Computational Linguistics: EMNLP 2020, pages 65–83, Online. Association for Computational Linguistics.

Clayton Hutto and Eric Gilbert. 2014. VADER: A parsimonious rule-based model for sentiment analysis of social media text. In Proceedings of the International AAAI Conference on Web and Social Media, volume 8.

Matthew Kay, Cynthia Matuszek, and Sean A Munson. 2015. Unequal representation and gender stereotypes in image search results for occupations. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, pages 3819–3828.

Svetlana Kiritchenko and Saif Mohammad. 2018. Examining gender and race bias in two hundred sentiment analysis systems. In Proceedings of the Seventh Joint Conference on Lexical and Computational Semantics, pages 43–53.

Svetlana Kiritchenko, Isar Nejadgholi, and Kathleen C Fraser. 2020. Confronting abusive language online: A survey from the ethical and human rights perspective. arXiv preprint arXiv:2012.12305.

Hannah Kirk, Yennie Jun, Haider Iqbal, Elias Benussi, Filippo Volpin, Frederic A Dreyer, Aleksandar Shtedritski, and Yuki M Asano. 2021. How true is GPT-2? An empirical analysis of intersectional occupational biases. arXiv preprint arXiv:2102.04130.

Tom Kocmi, Tomasz Limisiewicz, and Gabriel Stanovsky. 2020. Gender coreference and bias evaluation at WMT 2020. In Proceedings of the Fifth Conference on Machine Translation, pages 357–364, Online. Association for Computational Linguistics.

Ben Krause, Akhilesh Deepak Gotmare, Bryan McCann, Nitish Shirish Keskar, Shafiq Joty, Richard Socher, and Nazneen Fatema Rajani. 2020. GeDi: Generative discriminator guided sequence generation. arXiv preprint arXiv:2009.06367.

Sharon Levy, Michael Saxon, and William Yang Wang. 2021. The truth is out there: Investigating conspiracy theories in text generation. In Findings of The Joint Conference of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing.

Alisa Liu, Maarten Sap, Ximing Lu, Swabha Swayamdipta, Chandra Bhagavatula, Noah A Smith, and Yejin Choi. 2021. On-the-fly controlled text generation with experts and anti-experts. In The Joint Conference of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing.

Haochen Liu, Jamell Dacon, Wenqi Fan, Hui Liu, Zitao Liu, and Jiliang Tang. 2020a. Does gender matter? Towards fairness in dialogue systems. In Proceedings of the 28th International Conference on Computational Linguistics, pages 4403–4416, Barcelona, Spain (Online). International Committee on Computational Linguistics.

Haochen Liu, Wentao Wang, Yiqi Wang, Hui Liu, Zitao Liu, and Jiliang Tang. 2020b. Mitigating gender bias for neural dialogue generation with adversarial learning. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 893–903, Online. Association for Computational Linguistics.
