N15News: A New Dataset for Multimodal News Classification

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

N15News: A New Dataset for Multimodal News Classification

Zhen Wang1 , Xu Shan2 , Jie Yang3

1,3

EEMCS, Delft University of Technology, Netherlands

2

CEG, Delft University of Technology, Netherlands

1

z.wang-42@student.tudelft.nl

{ x.shan-2, 3 j.yang-3}@tudelft.nl

2

Abstract choose to ignore the images and merely pay atten-

tion to the text. This is not in line with the actual

Current news datasets merely focus on text fea-

situation, especially when almost all the news to-

tures on the news and rarely leverage the fea-

day has images. In this work, we aim to use both

arXiv:2108.13327v1 [cs.CL] 30 Aug 2021

ture of images, excluding numerous essential

features for news classification. In this paper, images and text to achieve better news classifica-

we propose a new dataset, N15News, which is tion.

generated from New York Times with 15 cat- In order to combine heterogeneous information

egories and contains both text and image in- extracted from images and texts, multimodal meth-

formation in each news. We design a novel ods are needed. Multimodal can process various

multitask multimodal network with different

types of information simultaneously and has been

fusion methods, and experiments show multi-

modal news classification performs better than used in news studies before. For example, in the

text-only news classification. Depending on fake news dataset Fakeddit (Nakamura et al., 2019),

the length of the text, the classification accu- the authors propose a hybrid text+image model to

racy can be increased by up to 5.8%. Our classifier fake news. However, currently, there is

research reveals the relationship between the no valid public multimodal news dataset containing

performance of a multimodal classifier and its enough real news with both images and texts that

sub-classifiers, and also the possible improve- can do news classification. We thus use the New

ments when applying multimodal in news clas-

sification. N15News is shown to have great po-

York Times to build a new dataset called N15News

tential to prompt the multimodal news studies. which contains massive text-image pairs of news.

The dataset can be found here. In this work, we focus on using N15News to

improve the news classification using multimodal

1 Introduction methods. Specifically, we want to answer the ques-

People have tried to use different carriers to record tion: whether and how can image enhance the news

news. Ancient people first drew images by hands- classification. To the best of our knowledge, multi-

on walls to record important things. After language modal classification applied to real news has rarely

was invented, words became the main tools for been studied before. The main contributions of our

recording. Thanks to parchment preserved to this work are as follows:

day, we can study people who lived a long time ago.

• We introduce N15News, a large-scale multi-

Later, with the invention of the camera, images are

modal news dataset comprising 200K image-

widely used in news. Compared with text, images

text pairs and 15 categories, which exceeding

can bring us more intuitive information, even if we

the previous news dataset in both the number

cannot understand the language used in the news.

of categories and samples.

It is safe to say that images and text play an equally

important role in news. • We use a multitask multimodal network to

News classification is one of the essential tasks do multimodal classification, and the exper-

in news research (Katari and Myneni, 2020). We iments show the multimodal method can

use the information provided by the news to group achieve higher accuracy than text-only news

them into different categories.There is already classification.

some research about news classification, for exam-

ple, news datasets, such as 20NEWS (Lang, 1995) • Our error analysis reveals the relationship be-

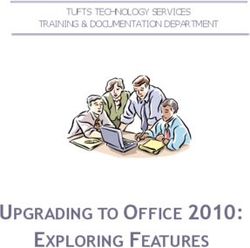

and AG News (Zhang et al., 2015). However, they tween the performance of a multimodal classi-Figure 1: Image examples of 15 categories

fier and its sub-classifiers, and also the possi- Category # samples Category # samples

ble improvements when applying multimodal

in news classification. Arts 37,135 Politics 21,906

Books 7,528 Realestate 3,854

2 Related Work Business 27,834 Science 4,898

In news studies, the most commonly used datasets Dining 5,551 Sports 35,048

are 20NEWS and AG NEWS. 20NEWS is a col- Fashion 7,111 Technology 5,758

lection of approximately 20,000 newsgroup doc- Health 3,314 Theater 7,709

uments across 20 different newsgroups, and AG Movies 13,177 Travel 5,071

News contains 1 million news articles gathered Opinion 20,592

from more than 2000 news sources and grouped

Table 1: Category distribution.

into four categories. These two datasets are now

used as benchmarks for testing text classification

models, such as BERT (Devlin et al., 2018) and

are also used in many fusion scenarios, which can

XLNet (Yang et al., 2019b).

find the most important pieces of information in

Multimodal deep learning (Ngiam et al., 2011)

each feature and finally integrate them. Addition-

is able to leverage different types of features, such

ally, attention-based fusion methods (Zhang et al.,

as voice, image, and text, to achieve better perfor-

2020b; Shih et al., 2016), which using the attention

mance. Nowadays, multimodal methods have been

mechanism to let the model learn to automatically

used in lots of tasks, for example, multimodal senti-

find the most crucial part of the feature through

ment analysis (Soleymani et al., 2017), multimodal

training, are playing an increasingly important role

translation (Sanabria et al., 2018), multimodal emo-

in multimodal deep learning tasks.

tion recognition (Tzirakis et al., 2017), and multi-

modal question answering (Yagcioglu et al., 2018). Multimodal methods are also commonly used

One common multimodal architecture is to use in news studies. Previous multimodal news re-

different types of models to process the correspond- searches mainly focus on fake news detection.

ing input data, such as first using an image classifier Nakamura et al. (2019) propose a multimodal fake

to obtain image features, a text classifier to obtain news dataset from Reddit with six categories ac-

text features, and then combining these features cording to the degree and type of fake news in the

before subsequent processing. In multimodal deep news. Giachanou et al. (2020) use word2vec to ex-

learning, the most critical part is feature fusion. tract the news text features and five different image

Recent researches have proposed various feature models to extract news image features. Wang et al.

fusion methods (Zhang et al., 2020a). Concatena- (2018) use an adversarial neural network to identify

tion (Nojavanasghari et al., 2016; Anastasopoulos fake news on newly emerged events in online social

et al., 2019) is the most commonly used method. platforms. Fake news detection is a variant of news

It splices different features directly along a certain classification, which mostly has only two major

dimension. Further, other fusion methods, for ex- categories (true or false), making the task is not so

ample, weighted-sum and pooling, are also able to difficult. Furthermore, there are few studies on the

achieve good results. Weighted-sum (Vielzeuf et al., application of multimodal classification focus on

2018) assigns different weights to each feature and real news.

sum them up. Pooling (Chao et al., 2015) meth- In that case, we collect and apply multimodal

ods, including max-pooling and average-pooling, methods on our dataset N15News, which containingCategory: Movies

Headline: A Man’s Death, a Career’s Birth

Image:

Caption: Ryan Coogler on the BART platform

at Fruitvale, where Oscar Grant III was killed.

His film of that story, “Fruitvale Station,” opens

next month.

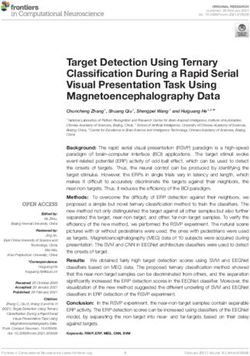

Abstract:A killing at a Bay Area rapid-transit Figure 2: Length distribution of each type of text.

station has inspired Ryan Coogler’s feature-film

debut, a movie already honored at the Sundance

and Cannes film festivals. and news, we only choose one image for each news

Body: OAKLAND — It had been nearly a year and drop out the news which does not contain any

since Ryan Coogler last stood on the arrival images. All news belongs to 15 different categories.

platform on the upper-level of the Fruitvale Bay We do not merge similar categories, such as science

Area Rapid Transit Station, where 22-year-old and technology, arts and theater. 200k news articles

Oscar Grant III, unarmed and physically re- are collected in total. The amount of each category

strained, was shot in the back by a BART transit is shown in Table 1. Each article sample contains

officer... one category tag, one headline, one abstract, one

article body, one image, and one corresponding

Table 2: A sample from N15News. image caption. An example is shown in Table 2.

Finally, the news is randomly split into 160k for

training, 20k for validation, and 20k for testing.

massive news images and texts, as well as many

Compared with other multimodal research such

different categories, to facilitate the research of

as fake news detection, since our dataset comes

multimodal news classification applied in real news

from a professional news website, which ensures

study.

the correctness of the dataset, thus no additional

3 The N15News Dataset manual annotation work is needed.

3.1 Dataset Collection 3.2 Dataset Statistics

The N15News is extracted from the New York In Table 3, we show some information about

Times. New York Times is an American daily news- N15News and other news-related datasets in pre-

paper that was founded in 1851. It publishes world- vious researches. Compare to other datasets,

wide news on various topics every day. Starting N15News has some unique advantages. Firstly,

from the 2000s, the New York Times fully turned to N15News has 15 categories, which exceeds most

digitization (Pérez-Peña, 2008), and previous news of the previous news datasets except for 20NEWS

was transferred to the Internet to facilitate people’s and Yahoo News (Yang et al., 2019a). However,

reading and provide internet API for scientific re- when taking the dataset size into account, N15News

search purposes. has much more samples than 20NEWS and Yahoo

To build the N15News dataset, we use the API News. Moreover, there is no valid multimodal

provided by New York Times to obtain all the links news dataset that can be used to do real news clas-

published from 2010 to 2020. Then we use these sification before N15News. Previous multimodal

links to retrieve all the actual web pages in the researches in news classification mainly focus on

past decade. After analyzing those web pages, we fake news detection. The length distribution of

exclude video news, and only the news articles in each type of text is shown in Figure 2. From the

text form are retained. While most news has only headline, caption, abstract to the article body, av-

one image, to better balance the number of images erage lengths are progressively increasing. ThisDataset Size Classes Type Source Topic

20NEWS 20,000 20 text Newsgroup real news

AG NEWS 1,000,000 4 text AG News real news

BreakingNews 110,000 none text, image RSS Feeds real news

Yahoo News 160,515 31 text Yahoo real news

BBC News 2,225 5 text BBC real news

TREC Washington Post 728,626 none text, image The Washington Post real news

Guardian News 52,900 4 text Guardian News real news

Fauxtography 1,233 2 text,image Snopes, Reuters fake news

Image-verification-corpus 17,806 2 text,image Twitter fake news

Fakeddit 1,063,106 2,3,6 text,image Reddit fake news

N15News (Ours) 206,486 15 text, image New York Times real news

Table 3: Comparison of various news datasets.

news images by current image classification mod-

els, we use a Faster-RCNN model trained on Visual

Genome (Krishna et al., 2016), a dataset aiming at

providing semantic information from images. We

also use a Resnet101 trained on N15News to reveal

the critical part of news images. Two examples

are shown in Figure 3. Faster-RCNN extracts the

important semantic information in the images, such

as ball and books, and Resnet focuses on salient

objects: player and book cover. In Figure 3, it

is hard to recognize the topic only given the two

headlines. The one on the left-hand side may be

related to many topics, while the right-hand side

one is closer to the topic of opinion. However, with

the information obtained by images, we can eas-

ily guess that the left one is about a sports-related

topic, while the right one is about a book. Figure

3 shows that the information in the image can be

used to strengthen news classification.

Figure 3: Visualization of critical parts in images. The

news headlines lacks keywords that can be used for

Moreover, The information provided by images

classification, but there are in the images. can also help to distinguish similar categories.

There are limited similar categories in previous

news datasets. However, in N15News, there are

allows us to study the gain effect of images on some similar categories, for example, theater and

different lengths and different types of text classifi- movies. Only with text, it is difficult to tell the story

cation tasks with N15News. is happening in a theater or on a screen, but images

make things much easier. Theater-related images

3.3 Multimodal Analysis

always happened on a stage, but movies not, as

Text feature in classification task has been well shown in Figure 1. This makes a huge difference,

studied before, so we will focus on what the news and if we can make good use of image information,

images in N15News are able to provide to improve the classification accuracy will be much higher.

the classification results. In Figure 1, we present

some image examples of each category. It is obvi-

3.4 Challenges

ous that news images are usually closely related to

the category they belong to. While multimodal can introduce lots of new in-

To better understand what can be learned from formation to facilitate the news classification,Figure 4: Breaking Point: How Mark Zuckerberg and

Tim Cook Became Foes

Figure 5: Overview of our multitask multimodal net-

N15News also releases some new challenges. The work.

biggest challenge is how to better understand news

images. Current image classification models are

able to extract the features of objects and the rela- The pre-trained BERT is also a base version and

tionships between them in images. However, di- consists 12 layers transformer encoder.

rectly using those models to classify news images We firstly use ViT and BERT to obtain the

cannot achieve a strong result because they are image feature and text feature separately, where

mainly designed to classify specific objects, such embedding is the embeddings extracted from the

as cats or dogs, while a news image is more likely original text and image, CLS is a 1D embedding

to reflect an event. An identical object may have containing the information of its corresponding

different meanings in different scenarios. For ex- image or text. Then we use the most common-

ample, the two people in Figure 4 are Facebook and used feature fusion methods - add, concatenate,

Apple’s CEO, and it seems they dislike each other dot-production, max-pooling - to combine those

in the image. This image comes from a news re- two kinds of features together. After obtaining the

lated to technology. However, existing image mod- fused feature, we then use three multilayer percep-

els only recognize there are two people but cannot trons (MLPs) to predict the label for image feature,

obtain more meaningful information. Therefore, text feature, fusion feature separately. Finally, the

the features obtained through those models cannot cross-entropy is used to calculate the Loss for each

fully reflect the hidden contextual information in prediction. The final T otalLoss will be the sum

news images. We hope N15News can also prompt of all three types of Loss. When testing on the test

the research in event image classification, which is set, we only use the output of the fusion feature to

a new and challenging field. calculate the final prediction result.

4 Model 5 Experiments

5.1 Experimental Settings

To figure out how images can enhance the news

classification and the potential challenges when ap- We trained all the models in the N15News train-

plying the multimodal methods, we propose a mul- ing set and the accuracy is tested on the test set.

titask multimodal network as the baseline model to Batch size is set to 32 and the learning rate is 1e-5

provide a strong benchmark in N15News. As illus- with an Adam (Kingma and Ba, 2014) optimizer.

trated in Figure 5, our model consists of two kinds Each input image is resized to 348 × 348 and the

of feature extraction models. On the bottom is Vi- maximum length of each input text is set to 512.

sion Transformer (ViT) (Dosovitskiy et al., 2020), Training device is an NVIDIA Tesla V100 with 16

one of the current state-of-the-art image classifica- GB RAM.

tion models. Above it is BERT, one of the current

state-of-the-art text classification models. 5.2 Results

The ViT we use is pre-trained on imagenet2012, All the experiment results are shown in Table 4.

consists of a Resnet-50 and a base version of vision We firstly classified the images and texts using ViT

transformer with 12 layers transformer encoder. and BERT respectively. In image classification,Multimodal(ViT+BERT)

Task ViT BERT

Cat Add Dot Max

Image - 0.6065 - - - - -

- Headline - 0.7727 - - - -

- Caption - 0.7792 - - - -

- Abstract - 0.8471 - - - -

- Body - 0.9203 - - - -

Image Headline - - 0.8183 0.8126 0.8202 0.8148

Image Caption - - 0.7951 0.7928 0.7899 0.7916

Image Abstract - - 0.8590 0.8592 0.8610 0.8545

Image Body - - 0.9227 0.9233 0.9249 0.9209

Average 0.6065 0.8298 0.8488 0.8470 0.8490 0.8455

Table 4: The evaluation results on the N15News dataset. The top section: image-only and text-only tasks. The

bottom: multimodal tasks.

Prediction Result Example

Count Percent

Multi Image Text Image Headline Label

A Clarinetist Who Stacks

True True True 10258 49.7% Arts

His Dates

Valentina Sampaio Is the

True False False 295 1.4% First Transgender Model Business

for Sports Illustrated

Microsoft Plumbs Ocean’s

True False True 5131 24.9% Depths to Test Underwater Technology

Data Center

A Friendly but Willful Old

True True False 1248 6.0% Theater

Spirit, Pouring Tea

Bob Dylan, More Than a

False True True 21 0.1% Arts

Songwriter

Review: A Paranormal

False False True 449 2.2% Sleuth Investigates Three Movies

‘Ghost Stories’

At Festival, Footfalls of

False True False 892 4.3% Arts

Farewell

A Plan to Talk About

False False False 246 11.4% Jobs, Elbowed Aside by Politics

Health Care

Table 5: The experiment results with three types of network. True means the classification is correct and False

means the classification is incorrect. For each type of prediction, an example of corresponding image-headline pair

and its ground truth label is provided.ViT achieves an accuracy of only 0.6065. Com- thing useful after the fusion of image and text,

paring to ViT, BERT behaves much better at the which may not be discovered if we process image

news text classification, reaching an average accu- and text separately.

racy of 0.8298. At the same time, there is a direct Things are much more complex when only one

correlation between BERT classification accuracy of the image and the text classifiers is correct. The

and text length. From headline, caption, abstract to correct-to-incorrect ratio of multimodal classifier

body, the longer the text length, the higher classifi- is (5131+1248=6739):(449+892=1341) in this sit-

cation accuracy can be achieved. This is because uation. This shows that after proper training, the

BERT can better understand the text with more multimodal network will be more affected by the

meaningful words. It is found that our baseline sub-network which can correctly perform the clas-

model is better than either the image classifier or sification task. And this explains why multimodal

the text classifier. Even the lowest average accu- is useful and able to outperform image-only and

racy reaches 0.8455. This is powerful proof that text-only networks.

multimodal learning combining image features and

This experiment results can be better understood

text features benefits news classification. And the

by the examples in Table 5. In the third and fourth

result also shows that the shorter the text (from

rows, the multimodal prediction is correct and only

body to headline), the more obvious the gain effect

one of image and text predictions is True. In the

of adding image information. In other words, when

third row, two men are looking at an oval-shaped

text contains insufficient information, image is a

object. It is hard to categorize the news article con-

perfect supplement.

sidering only images without texts. Luckily, by

Moreover, experiments show that concatenate the headline we know that this news is about Mi-

achieves the best result in the Image-Caption task crosoft, and Microsoft is a well-known technology

comparing to other fusion methods, and in other company. This news is related to technology. On

tasks, dot-production is the best one. Based on the contrary, in the fourth row, the topic of news is

the experiments of different fusion methods, we quite vague if only considered the keywords spirit

can get a conclusion that when image feature and and tea in text. But when take image into consider-

text feature are directly related, concatenate should ation, the scene described in this image obviously

be used as the fusion method, such as image and takes place on a stage. Thus our multimodal net-

its caption, they both talk about exactly one same work correctly predicts this news is about a theater.

thing. As for other situations, for example, im-

Then are the cases in sixth and seventh rows

age and headline are not so directly related, dot-

where only one of image and text predictions is

production is a better fusion way.

True and the multimodal prediction is False. In the

sixth row, from headline, it is easy to know this

5.3 Error Analysis

news is related to a movie about a sleuth. However,

To explore why the multimodal method surpasses the corresponding image only contains a photo of

the text-only method, we separate the trained base- a man, and this photo may be relevant to various

line model into three types: original multimodal news topics. The seventh row is quite similar. From

network, image classification network with only the image we know this news is about the arts,

the ViT, and text classification network with only but limited art-related information can be obtained

the BERT. And then we test them in the validation through its headline. Those wrong multimodal pre-

dataset using image-headline pairs. The experi- dictions show our multimodal network lacks the

ment results are shown in Table 5. classifying ability in some situations when two clas-

It is evident that when image and text are both sifiers are inconsistent. Therefore, to improve the

correctly classified, the multimodal network can multimodal approach performance, the multimodal

almost classify news correctly. The correct-to- networks must make good use of the features ex-

incorrect ratio is 10258:21. Additionally, when tracted from the correct classifier and get rid of the

image and text are both wrongly classified, the mul- adverse effects from the wrong one.

timodal network also tends to be incorrect, but the Based on the above analysis, there are two main

correct-to-incorrect ratio now is 2346:295, much methods to further improve the performance of mul-

lower than the previous Three True situation. This timodal classification networks. The first one is to

shows that multimodal network can learn some- improve the behavior of each sub-network. If theaccuracy (error) of sub-models is higher (lower), Alexey Dosovitskiy, Lucas Beyer, Alexander

the multimodal prediction will be improved. Our Kolesnikov, Dirk Weissenborn, Xiaohua Zhai,

Thomas Unterthiner, Mostafa Dehghani, Matthias

experiments show that current state-of-the-art im-

Minderer, Georg Heigold, Sylvain Gelly, et al. 2020.

age classification models still have a long way to An image is worth 16x16 words: Transformers

classify news images correctly. The second method for image recognition at scale. arXiv preprint

is to let the multimodal classifier be able to deter- arXiv:2010.11929.

mine which sub-classifier extracts the more valu-

Anastasia Giachanou, Guobiao Zhang, and Paolo

able feature. A more effective fusion network is Rosso. 2020. Multimodal fake news detection with

needed to better combine image and text features. textual, visual and semantic information. In Inter-

national Conference on Text, Speech, and Dialogue,

6 Conclusion pages 30–38. Springer.

Rohan Katari and Madhu Bala Myneni. 2020. A survey

In this paper, we introduce a multimodal news on news classification techniques. In 2020 Interna-

dataset N15News. N15News is collected from the tional Conference on Computer Science, Engineer-

New York Times containing both images and texts, ing and Applications (ICCSEA), pages 1–5. IEEE.

which enables the research of multimodal appli-

Diederik P Kingma and Jimmy Ba. 2014. Adam: A

cation in news classification. Compared to pre- method for stochastic optimization. arXiv preprint

vious datasets, it covers almost all the essential arXiv:1412.6980.

news categories in our daily life, making the re-

search on it can be more widely applied to the real Ranjay Krishna, Yuke Zhu, Oliver Groth, Justin John-

son, Kenji Hata, Joshua Kravitz, Stephanie Chen,

world. Based on N15News, we propose a multitask

Yannis Kalantidis, Li-Jia Li, David A Shamma,

multimodal network, which leverages the current Michael Bernstein, and Li Fei-Fei. 2016. Visual

state-of-the-art image classification model and text genome: Connecting language and vision using

classification model. Experiment results show that crowdsourced dense image annotations.

combining image features and text features can

Ken Lang. 1995. Newsweeder: Learning to filter

achieve better classification accuracy comparing to netnews. In Machine Learning Proceedings 1995,

the previous text-only methods. Our error analysis pages 331–339. Elsevier.

explains multimodal is helpful because the infor-

mation of images and texts can complement each Kai Nakamura, Sharon Levy, and William Yang Wang.

2019. r/fakeddit: A new multimodal benchmark

other. Accordingly, future work on improving the dataset for fine-grained fake news detection. arXiv

multimodal classification accuracy could include preprint arXiv:1911.03854.

two main aspects: 1) improving image and text

classification accuracy separately, especially the Jiquan Ngiam, Aditya Khosla, Mingyu Kim, Juhan

Nam, Honglak Lee, and Andrew Y Ng. 2011. Mul-

news image classification; 2) designing a more ef- timodal deep learning. In ICML.

fective fusion network to better combine image and

text features. Behnaz Nojavanasghari, Deepak Gopinath, Jayanth

Koushik, Tadas Baltrušaitis, and Louis-Philippe

Morency. 2016. Deep multimodal fusion for per-

suasiveness prediction. In Proceedings of the 18th

References ACM International Conference on Multimodal Inter-

action, pages 284–288.

Antonios Anastasopoulos, Shankar Kumar, and Hank

Liao. 2019. Neural language modeling with visual Richard Pérez-Peña. 2008. Times plans to combine

features. arXiv preprint arXiv:1903.02930. sections of the paper. The New York Times. New.

Linlin Chao, Jianhua Tao, Minghao Yang, Ya Li, and Ramon Sanabria, Ozan Caglayan, Shruti Palaskar,

Zhengqi Wen. 2015. Long short term memory recur- Desmond Elliott, Loïc Barrault, Lucia Specia, and

rent neural network based multimodal dimensional Florian Metze. 2018. How2: a large-scale dataset

emotion recognition. In Proceedings of the 5th in- for multimodal language understanding. arXiv

ternational workshop on audio/visual emotion chal- preprint arXiv:1811.00347.

lenge, pages 65–72.

Kevin J Shih, Saurabh Singh, and Derek Hoiem. 2016.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Where to look: Focus regions for visual question

Kristina Toutanova. 2018. Bert: Pre-training of deep answering. In Proceedings of the IEEE conference

bidirectional transformers for language understand- on computer vision and pattern recognition, pages

ing. arXiv preprint arXiv:1810.04805. 4613–4621.Mohammad Soleymani, David Garcia, Brendan Jou, Björn Schuller, Shih-Fu Chang, and Maja Pantic. 2017. A survey of multimodal sentiment analysis. Image and Vision Computing, 65:3–14. Panagiotis Tzirakis, George Trigeorgis, Mihalis A Nicolaou, Björn W Schuller, and Stefanos Zafeiriou. 2017. End-to-end multimodal emotion recogni- tion using deep neural networks. IEEE Journal of Selected Topics in Signal Processing, 11(8):1301– 1309. Valentin Vielzeuf, Alexis Lechervy, Stéphane Pateux, and Frédéric Jurie. 2018. Centralnet: a multilayer approach for multimodal fusion. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, pages 0–0. Yaqing Wang, Fenglong Ma, Zhiwei Jin, Ye Yuan, Guangxu Xun, Kishlay Jha, Lu Su, and Jing Gao. 2018. Eann: Event adversarial neural networks for multi-modal fake news detection. In Proceed- ings of the 24th acm sigkdd international conference on knowledge discovery & data mining, pages 849– 857. Semih Yagcioglu, Aykut Erdem, Erkut Erdem, and Na- zli Ikizler-Cinbis. 2018. Recipeqa: A challenge dataset for multimodal comprehension of cooking recipes. arXiv preprint arXiv:1809.00812. Ze Yang, Can Xu, Wei Wu, and Zhoujun Li. 2019a. Read, attend and comment: A deep architecture for automatic news comment generation. In Proceed- ings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th Inter- national Joint Conference on Natural Language Pro- cessing (EMNLP-IJCNLP), pages 5076–5088, Hong Kong, China. Association for Computational Lin- guistics. Zhilin Yang, Zihang Dai, Yiming Yang, Jaime Car- bonell, Ruslan Salakhutdinov, and Quoc V Le. 2019b. Xlnet: Generalized autoregressive pretrain- ing for language understanding. arXiv preprint arXiv:1906.08237. Chao Zhang, Zichao Yang, Xiaodong He, and Li Deng. 2020a. Multimodal intelligence: Representa- tion learning, information fusion, and applications. IEEE Journal of Selected Topics in Signal Process- ing, 14(3):478–493. Wenqiao Zhang, Siliang Tang, Jiajie Su, Jun Xiao, and Yueting Zhuang. 2020b. Tell and guess: cooperative learning for natural image caption generation with hierarchical refined attention. Multimedia Tools and Applications, pages 1–16. Xiang Zhang, Junbo Zhao, and Yann LeCun. 2015. Character-level convolutional networks for text clas- sification. arXiv preprint arXiv:1509.01626.

You can also read