Integrating large-scale web data and curated corpus data in a search engine supporting German literacy education

Sabrina Dittrich (a), Zarah Weiss (a), Hannes Schröter (c), Detmar Meurers (a, b)

(a) Department of Linguistics, University of Tübingen
(b) LEAD Graduate School and Research Network, University of Tübingen
(c) German Institute for Adult Education – Leibniz Centre for Lifelong Learning

{dittrich,zweiss,dm}@sfs.uni-tuebingen.de, schroeter@die-bonn.de

Sabrina Dittrich, Zarah Weiss, Hannes Schröter and Detmar Meurers 2019. Integrating large-scale web data and curated corpus data in a search engine supporting German literacy education. Proceedings of the 8th Workshop on Natural Language Processing for Computer Assisted Language Learning (NLP4CALL 2019). Linköping Electronic Conference Proceedings 164: 41–56.

This work is licensed under a Creative Commons Attribution 4.0 International Licence. Licence details: http://creativecommons.org/licenses/by/4.0

Abstract

Reading material that is of interest and at the right level for learners is an essential component of effective language education. The web has long been identified as a valuable source of reading material due to the abundance and variability of materials it offers and its broad range of attractive and current topics. Yet, the web as source of reading material can be problematic in low literacy contexts.

We present ongoing work on a hybrid approach to text retrieval that combines the strengths of web search with retrieval from a high-quality, curated corpus resource. Our system, KANSAS Suche 2.0, supports retrieval and reranking based on criteria relevant for language learning in three different search modes: unrestricted web search, filtered web search, and corpus search. We demonstrate their complementary strengths and weaknesses with regard to coverage, readability, and suitability of the retrieved material for adult literacy and basic education. We show that their combination results in a very versatile and suitable text retrieval approach for education in the language arts.

1 Introduction

Low literacy skills are an important challenge for modern societies. In Germany, 12.1% of the German-speaking working age population (18 to 64 years), approximately 6.2 million people, cannot read and write even short coherent texts; another 20.5% cannot read or write coherent texts of medium length (Grotlüschen et al., 2019), falling short of the literacy rate expected after nine years of schooling. While these figures are lower than those reported in previous years (Grotlüschen and Riekmann, 2011), there seems to be no significant change in the proportion of adults with low literacy skills when taking into account demographic changes in the composition of the population from 2010 to 2018 (Grotlüschen and Solga, 2019, p. 34), such as the risen employment rate and average level of education.

Literacy skills at such a low level impair the ability to live independently, to participate freely in society, and to compete in the job market. To address this issue, the German federal and state governments launched the National Decade for Literacy and Basic Skills (AlphaDekade) 2016–2026.[1] One major concern in the efforts to promote literacy and basic education in Germany is the support of teachers of adult literacy and basic education classes, who face particular challenges in ensuring the learning success of their students. Content-wise, teaching materials should be of personal interest to them and closely aligned with the demands learners face in their everyday and working life (BMBF and KMK, 2016, p. 6). Language-wise, reading materials for low literacy and for language learning in general should be authentic (Gilmore, 2007) and match the individual reading skills of the learners so that they are challenged but not overtaxed (Krashen, 1985; Swain, 1985; Gilmore, 2007). This demand for authentic, high-quality learning materials is currently not met by publishers, making it difficult for teachers to address the needs of their diverse literacy classes. Relatedly, there is a lack of standardized didactic concepts and scientifically evaluated materials, despite first efforts to address this shortage (Löffler and Weis, 2016). What complicates matters further is that literacy and basic education classes are comprised of learners with highly heterogeneous biographic and education backgrounds. This includes native and non-native speakers, the latter of whom may or may not be literate in their native language. Low literacy skills sometimes are also associated with neuro-atypicalities such as intellectual disorders, dyslexia, or Autism Spectrum Disorders (ASD; Friedman and Bryen, 2007; Huenerfauth et al., 2009).

[1] https://www.alphadekade.de

Given the shortage of appropriate reading materials provided by publishers, the web is an attractive alternative source for teachers seeking reading materials for their literacy and basic education classes. There is an exceptional coverage of current topics on the web, and a standard web search engine provides English texts at a broad range of reading levels (Vajjala and Meurers, 2013), though the average reading level of the texts is quite high. For German, most online reading materials appear to target native speakers with a medium to high level of literacy. Offers for low literate readers are restricted to a few specialized web pages presenting information in simple language or simplified language for language learners or children. These may or may not be suited in content and presentation style for low literate adults. Web materials specifically designed for literacy and basic education do not follow a general standard indicating how the appropriateness of the material for this context was assessed. This makes it difficult for teachers of adult literacy and basic education classes to verify the suitability of the materials. As we argued in Weiss et al. (2018), this challenge extends beyond the narrow context of literacy classes, as it also pertains to the question of web accessibility for low literate readers who perform their own web queries.

We address this issue by presenting our ongoing work on KANSAS Suche 2.0, a hybrid search engine for low literacy contexts that offers three search modes: free web search, filtered web search exclusively on domains providing reading materials for low levels of literacy (henceforth: alpha sites), and a corpus of curated, high-quality literacy and basic education materials we are currently compiling. The corpus will come with a copyright allowing teachers to adjust and distribute the materials for their classes. We thus considerably extend the original KANSAS Suche system (Weiss et al., 2018), which only supported web search. Different from previous text retrieval systems for language learning, focusing on either web search or compiled text repositories (Heilman et al., 2010; Collins-Thompson et al., 2011; Walmsley, 2015; Chinkina et al., 2016), our approach instantiates a hybrid architecture in the spectrum of potential strategies (Chinkina and Meurers, 2016, Figure 4) by combining the strengths of focused, high-quality text databases with large-scale, more or less parameterized web search.

The remainder of the article is structured as follows: First, we briefly review research on readability and low literacy and compare previous approaches to text retrieval systems for education contexts (section 2). Then, we describe our system in section 3, before providing a quantitative and qualitative comparison of the three different search modes supported by our system in section 4. Section 5 closes with some final remarks on future work.

2 Related Work

Text retrieval for low literate readers or language learners at its core consists of two tasks: text retrieval, and readability assessment of the retrieved texts. We here provide some background on previous work on these two tasks as well as on the German debate on how to characterize low literacy skills. We start by reviewing work on readability analysis for language learning and low literacy contexts (section 2.1), before discussing the characterization of low literacy skills (section 2.2), and ending with an overview of text retrieval approaches for language learning (section 2.3).

2.1 Readability Assessment

Automatic readability assessment matches texts to readers with a certain literacy skill such that they can fulfill a predefined reading goal or task such as extracting information from a text. Early work on readability assessment started with readability formulas (Kincaid et al., 1975; Chall and Dale, 1995) which are still used in some studies (Grootens-Wiegers et al., 2015; Esfahani et al., 2016) despite having been widely criticized for being too simplistic and unreliable (Feng et al., 2009; Benjamin, 2012). In answer to this criticism, more advanced methods supporting broader linguistic modeling using Natural Language Processing (NLP) were established. For example, Vajjala and Meurers (2012) showed that measures

of language complexity originally devised in Second Language Acquisition (SLA) research can successfully be adopted to the task of readability classification. An increasing amount of NLP-based research is being dedicated to the assessment of readability for different contexts, in particular for English (Feng et al., 2010; Crossley et al., 2011; Xia et al., 2016; Chen and Meurers, 2017b), with much less work on other languages, such as French, German, Italian, and Swedish (François and Fairon, 2012; Weiss and Meurers, 2018; Dell’Orletta et al., 2011; Pilán et al., 2015).

Automatic approaches to readability assessment at low literacy levels are less common, arguably also due to the lack of labeled training data for the highly heterogeneous group of adults with low literacy in their native language (Yaneva et al., 2016b). But there is research in this domain bringing in eye-tracking evidence to identify challenges and reading strategies for neuro-atypical readers with low literacy skills, such as people with dyslexia (Rello et al., 2013a,b) or ASD (Yaneva et al., 2016a; Eraslan et al., 2017). Two approaches should be mentioned that overcome the lack of available training data by implementing rules determined in previously developed guidelines for low literacy contexts. Yaneva (2015) presents a binary classification approach to determine the adherence of texts to Easy-to-read guidelines. Easy-to-read guidelines are designed to promote accessibility of reading materials for readers with cognitive disabilities such as ‘Make It Simple’ by Freyhoff et al. (1998) and ‘Guidelines for Easy-to-read Materials’ by Nomura et al. (2010). Yaneva (2015) applies this algorithm to web materials labeled as Easy-to-Read to investigate their compliance to the guidelines by Freyhoff et al. (1998). She shows that providers of Easy-to-Read materials overall adhere to the guidelines. This is an important finding since not all self-declared ‘simple’ reading materials on the web actually are suitable for readers with lower reading skills. For example, Simple Wikipedia was found to not be systematically simpler than Wikipedia (see, for example, Štajner et al., 2012, Xu et al., 2015, and Yaneva et al., 2016b), though Vajjala and Meurers (2014) illustrate that an analysis at the sentence level can identify relative complexity differences. While such research on the adherence of web materials to guidelines is an important contribution to the evaluation of web accessibility, it is less suitable for our education purposes, as it does not differentiate degrees of readability within the range of low literacy. In Weiss et al. (2018), we propose a rule-based classification approach for German following the analysis of texts for low literate readers in terms of the so-called Alpha Readability Levels introduced in the next section. As far as we are aware, this currently is the only automatic readability classification approach that differentiates degrees of readability at such low literacy levels.

2.2 Characterizing Low Literacy Skills

According to recent large-scale studies, there is a high proportion of low literate readers in all age groups of the population in Germany (Schröter and Bar-Kochva, 2019). For the German working age population (18–64 years), three major studies further focused on investigating the parts of the working population with the lowest level of literacy skills. The lea. – Literalitätsentwicklung von Arbeitskräften [literacy development of workers] study carried out from 2008 to 2010, supported by the Federal Ministry of Education and Research, was the first national survey on reading and writing competencies in the German adult population.[2] In this context, a scale of six Alpha Levels was developed to allow a fine grained measure of the lowest levels of literacy. These levels were empirically tested in the first leo. – Level-One study (Grotlüschen and Riekmann, 2011), which was updated in 2018 (Grotlüschen et al., 2019).[3]

[2] https://blogs.epb.uni-hamburg.de/lea
[3] https://blogs.epb.uni-hamburg.de/leo

At Alpha Level 1, literacy is restricted to the level of individual letters and does not extend to the word level. At Alpha Level 2, literacy is restricted to individual words and does not extend to the sentence level. At Alpha Level 3, literacy is restricted to single sentences and does not extend to the text level. At Alpha Levels 4 to 6, literacy skills are sufficient to read and write increasingly longer and more complex texts. The descriptions of Alpha Levels in the lea. and leo. studies are ability-based, i.e., they focus on what someone at this literacy level can and cannot read or write. Weiss and Geppert (2018) used these descriptions to derive annotation guidelines for the assessment of texts rather than people, focusing on the Levels 3 to 6 relevant for characterizing texts. Based on those annotation guidelines, Weiss et al. (2018)

43developed a rule-based algorithm supporting the yond the educational domain, leveled web search

automatic classification of reading materials for engines also contribute to web accessibility by al-

low literacy contexts. They demonstrate that the lowing web users with low literacy skills to query

classifier successfully approximates human judg- web sites that are at a suitable reading level for

ments of Alpha Readability Levels as operational- their purposes. One example for such a system

ized in Weiss and Geppert (2018). for literacy training is the original KANSAS Suche

While Alpha Levels 4 and higher still describe (Weiss et al., 2018). The system analyses web

very low literacy skills, only Alpha Levels 1 to 3 search results and assigns reading levels to them

constitute what has previously been referred to as based on a rule-based algorithm which is specifi-

functional illiteracy in the German adult literacy cally designed for low literate readers. Linguistic

discourse: literacy skills at a level that only per- constructions can be (de-)prioritized to re-rank the

mits reading and writing below the text level. Lit- search results.

eracy at this level is not sufficient to fulfill standard The main drawback of such web-based ap-

social requirements regarding written communica- proaches, however, is the lack of control of the

tion in the different domains of working and living quality of the content. This may lead to results that

(Decroll, 1981). Grotlüschen et al. (2019) argue include incorrect or biased information or inappro-

that the term functional illiteracy is stigmatizing priate materials, such as propaganda, racist or dis-

and therefore ill-suited for use in adult education. criminating contents, or fake news. This issue may

Instead, they refer to adults with low literacy skills require the attentive eye of a teacher during the se-

and low literacy. In the following, we will use lection process. Query results may also include

the term low literacy in a broad sense to refer to picture or video captions, forum threads, or shop-

literacy skills up to and including Alpha Level 6. ping pages, which are unsuited as reading texts.

To discuss literacy below the text level, i.e., Alpha To avoid such issues, many applications rely on

Levels 1 to 3, we will make this explicit by refer- restricted web searches on pre-defined websites,

ring to low literacy in a narrow sense. as is the case for FERN (Walmsley, 2015) or net-

Trekker (Huff, 2008), which may also be crawled

2.3 Text Retrieval for Language Learning and analyzed beforehand, as in SyB (Chen and

Meurers, 2017a).

A growing body of research is dedicated to the de-

velopment of educational applications that provide Some systems extend their functionality beyond

reading materials for language and literacy acqui- a leveled search engine and incorporate tutoring

sition. Many of them are forms of leveled search system functions. For example, the FERN search

engines, i.e., information retrieval systems that engine for Spanish (Walmsley, 2015) provides an

perform some form of content-based web query enhanced reading support by allowing readers to

and analyze the readability of the retrieved materi- flag and look up unknown words and train new vo-

als on the fly, often by using readability formulas cabulary in automatically generated training ma-

as discussed in section 2.1. The readability level terial. This relates to another type of educa-

of the results is then displayed to the user as addi- tional application that provides reading materials

tional criterion for the text choice, the results are for language and literacy acquisition: reading tu-

ranked according to the level, or a readability filter tors. Such systems generally provide access to

allows exclusion of hits with undesired readability a collection of texts that have been collected and

levels. The majority of these systems are designed analyzed beforehand (Brown and Eskenazi, 2004;

for English (Miltsakaki and Troutt, 2007; Collins- Heilman et al., 2008; Johnson et al., 2017). The

Thompson et al., 2011; Chinkina et al., 2016), al- collections are usually a curated selection of high-

though there are some notable exceptions for a few quality texts that are tailored towards the specific

other languages (Nilsson and Borin, 2002; Walms- needs of the intended target group. To function as

ley, 2015; Weiss et al., 2018). One of the main ad- tutoring systems, the systems support interaction

vantages of leveled web search engines is that they for specific tasks, e.g., reading comprehension or

allow access to a broad bandwidth of texts that summarizing tasks. One example for such a sys-

are always up-to-date. These are important fea- tem in the domain of literacy and basic education

tures for the identification of interesting and rele- is iSTART-ALL, the Interactive Strategy Training

vant reading materials in educational contexts. Be- for Active Reading and Thinking for Adult Liter-

Proceedings of the 8th Workshop on Natural Language Processing for Computer Assisted Language Learning (NLP4CALL 2019)

44acy Learners (Johnson et al., 2017). It is an in- tent queries on web pages specifically designed for

telligent tutoring system for reading comprehen- low literacy and basic education purposes, and c)

sion with several practice modules, a text library, a corpus search mode to retrieve edited materials

and an interactive narrative. It contains a set of 60 that have been pre-compiled specifically for the

simplified news stories sampled from the Califor- purpose of literacy and basic education courses.

nia Distance Learning Project.4 They are specifi- Users may flexibly switch between search modes

cally designed to address the interests and needs of if they find that for a specific search term the cho-

adults with low literacy skills (technology, health, sen search mode does not yield results satisfy-

family). It offers summarizing and question ask- ing their needs. In all three search modes, the

ing training for these texts as well as an inter- new system allows users to re-rank search results

active narrative with integrated tasks and imme- based on the (de-)prioritization of linguistic con-

diate corrective feedback. The greater quality of structions, just as in the original KANSAS Suche.

curated reading materials in reading tutors comes The results are automatically leveled by readabil-

at the cost of drawing from a considerably more ity in terms of an Alpha Level-based readabil-

limited pool of reading materials, which may be- ity scale specifically tailored towards the needs of

come obsolete quickly. Thus, leveled web search low literacy contexts following Kretschmann and

engines as well as reading tutors have complemen- Wieken (2010) and Gausche et al. (2014), as de-

tary strengths and weaknesses. As we will demon- tailed in Weiss et al. (2018). This readability clas-

strate in the following, combining the two ap- sification may be used to further filter results, to

proaches can help obtain the best of both worlds. align them with the reading competencies of the

intended reader. Users can also upload their own

3 Our System corpora to re-rank the texts in them based on their

We present KANSAS Suche 2.0, a hybrid search linguistic characteristics and automatically com-

system for the retrieval of appropriate German pute their Alpha Readability Levels. We under-

reading materials for literacy and basic educa- stand this upload functionality as an additional

tion classes. While these classes are typically de- feature rather than a separate search mode because

signed for low literate native speakers, in prac- it does not provide a content search and does not

tice, they are comprised of native- and non-native differ from the corpus search mode in terms of

speakers of German.5 As the original KANSAS its strengths and weaknesses. Accordingly, it will

Suche (Weiss et al., 2018), which was inspired not receive a separate mention in the discussion of

by FLAIR (Chinkina and Meurers, 2016; Chink- search modes below.

ina et al., 2016), the updated system operates on

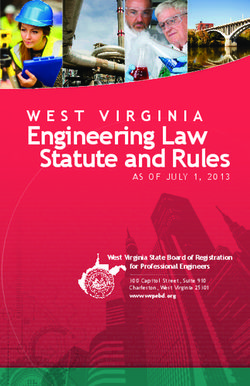

3.1 Workflow & Technical Implementation

the premise that users want to select reading ma-

terials in a way combining content queries with a KANSAS Suche 2.0 is a web-based application that

specification of the linguistic forms that should be is fully implemented in Java. Its workflow is il-

richly represented or not be included in the text. lustrated in Figure 1. The basic architecture re-

But KANSAS Suche 2.0 is a hybrid system in the mains similar to the original KANSAS Suche, see

sense that it combines different search modes in Weiss et al. (2018) for comparison, but has been

order to overcome the individual weaknesses of heavily extended in order to accommodate the ad-

web-based and corpus-based text retrieval outlined ditional search options offered by our system. The

in the previous section. user can enter a search term and start a search re-

More specifically, our system offers three differ- quest which is communicated from the client to

ent search modes: a) an unrestricted web search the server using Remote Procedure Calls.

option to perform large-scale content queries on The front end, based on the Google Web Toolkit

the web, b) a filtered web search to perform con- (GWT)6 and GWT Material Design7 , is shown in

4

Figure 2.8 It allows users to choose between the

http://www.cdlponline.org

5 three search modes: unrestricted web search, fil-

There also are literacy and basic education classes

specifically designed to prepare newly immigrated people tered web search on alpha sites, and corpus search

and refugees for integration courses. Our system is being de- 6

signed with a focus on traditional literacy and basic education http://www.gwtproject.org

7

classes. While our system is not specifically targeting Ger- https://github.com/GwtMaterialDesign

8

man as a second language learners, it is plausible to assume Note that the actual front end is in German. For illustra-

that our target readers include native and non-native speakers. tion purposes, we here translated it into English.

as well as the option to upload their own corpus. In the case of an unrestricted or filtered web search, the request is communicated to Microsoft Azure’s BING Web Search API (version 5.0)[9] and further processed at runtime. The text content of each web page is then retrieved using the Boilerpipe Java API (Kohlschlütter et al., 2010).[10] We remove links, meta information, and embedded advertisements. The NLP analysis is then performed using the Stanford CoreNLP API (Manning et al., 2014). We identify linguistic constructions with TregEx patterns (Levy and Andrew, 2006) we defined. The linguistic annotation is also used to extract all information for the readability classification. We use the algorithm developed for KANSAS Suche (Weiss et al., 2018), currently the only automatic approach we are aware of for determining readability levels for low literate readers in German. The resulting list of analyzed and readability-classified documents is then returned to the client side. The user can re-rank the results based on the (de-)prioritization of linguistic constructions, filter them by Alpha Readability Level, or use the system’s visualization to inspect the search results. For re-ranking we use the BM25 IR algorithm (Robertson and Walker, 1994).

The corpus search follows a separate workflow on the server side which will be elaborated on in more detail in section 3.3 after discussing the filtered web search in section 3.2.

[9] https://azure.microsoft.com/en-us/services/cognitive-services/bing-web-search-api
[10] https://boilerpipe-web.appspot.com

[Figure 1 diagram. Client (front end): enter search term; reranking, filtering by Alpha Readability Level, visualization. Server, either web or corpus search. Web search: Bing Search API, text extraction (Boilerpipe API), linguistic analysis (Stanford CoreNLP), Alpha Readability Classification (rule-based approach). Corpus search: Solr query, loading of preprocessed documents via deserialization of Java objects (text extraction, linguistic analysis, and Alpha Readability Level classification have already been done offline). Output: list of rankable documents.]

Figure 1: System workflow including web search and corpus search components

3.2 The Filtered Web Search

While the web provides access to a broad variety of up-to-date content, an unrestricted web search may also retrieve various types of inappropriate material. Not all search results are reading materials (sales pages, advertisement, videos, etc.), many reading materials on the web require high literacy skills, and some of the sufficiently easy reading materials contain incorrect or biased information. However, there are several web pages specialized in providing reading materials for language learners, children, or adults with low literacy skills in their native language. One option to improve web search results thus is to ensure that queries are processed so that they produce results from such web pages.

We provide the option to focus the web search on a pre-compiled list of alpha sites, i.e., web pages providing reading materials for readers with low literacy skills. For this, we use BING’s built-in search operator site, which restricts the search to the specified domain. The or operator can be used to broaden the restriction to multiple domains. The following example illustrates this by restricting the web search for Bundestag (“German parliament”) to the web pages of the German public international broadcaster Deutsche Welle (DW) and the web pages of the German Federal Agency for Civic Education Bundeszentrale für politische Bildung (BPB):

    (site:www.dw.com or site:www.bpb.de) Bundestag

The site operator is a standard operator of most major search engines and could be directly specified using exactly this syntax by the users. In KANSAS Suche 2.0 we integrate a special option to promote its use for a series of specific websites for three reasons. First, the site operator and the use of operators in search engine queries overall are relatively unknown to the majority of search engine users. Allowing users to specify a query with a site operator through a check box in our user interface makes this feature more accessible. Second, while specifying multiple sites is possible using the or operator, it becomes increasingly cumbersome the more domains are added. Having a shortcut for suitable web sites considerably increases ease of use. Third, there are a number of web pages that offer materials for low literacy classes, but many of them will not be known to the

Figure 2: KANSAS Suche 2.0 search mode options set to web query for Staat (“state”) on 30 alpha sites.
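The restricted query behind a filtered search such as the one in Figure 2 can be sketched in Java as follows. This is a minimal illustration under stated assumptions: the class SiteQueryBuilder and its build method are hypothetical names and are not taken from the KANSAS Suche 2.0 code base. The query syntax, including the lowercase or, simply mirrors the Bundestag example in section 3.2, and the way an empty domain list falls back to an unrestricted query is our own choice for the sketch.

```java
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical helper; not part of the published KANSAS Suche 2.0 code. */
final class SiteQueryBuilder {

    private SiteQueryBuilder() {}

    /**
     * Builds a query of the form "(site:a or site:b) term".
     * With an empty domain list, the plain search term is returned,
     * which corresponds to an unrestricted web search.
     */
    static String build(List<String> domains, String searchTerm) {
        String term = searchTerm.trim();
        if (domains.isEmpty()) {
            return term;
        }
        // Prefix each domain with the site operator and join with "or".
        String restriction = domains.stream()
                .map(domain -> "site:" + domain)
                .collect(Collectors.joining(" or ", "(", ")"));
        return restriction + " " + term;
    }

    public static void main(String[] args) {
        // The two domains from the Bundestag example in section 3.2.
        List<String> sites = List.of("www.dw.com", "www.bpb.de");
        System.out.println(build(sites, "Bundestag"));
    }
}
```

Keeping the domain list as a parameter means that a pre-compiled list of alpha sites could be extended without touching the query logic; how the actual system stores and passes that list is not described in the text.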

user and some cannot be directly accessed by a search engine, as discussed in more detail below. Our list of alpha sites makes it possible to quickly access a broad selection of relevant web sites that are compatible with the functionality of KANSAS Suche 2.0.

To compile our list of alpha sites, we surveyed 75 web sites that provide reading materials for low literacy contexts. Not all of them are well-suited for the envisioned use case. We excluded web pages that offer little content (fewer than three texts), require prior registration, or predominantly offer training exercises at or below the word level rather than texts. While the latter may in principle be interesting for teachers of literacy and basic education classes, they are ill-suited for the kind of service provided by our system. The linguistic constructions that we allow the teacher or user to (de-)prioritize often target the phrase or clause level and do not make sense for individual words. However, by far the biggest drop in the number of potentially relevant web sites resulted from the fact that many web sites are designed in such a way that the materials they offer are not crawled and indexed by search engines at all. Since the material on these web sites cannot be found by search engines, it makes no sense to include them as alpha sites in our system.

At the end of our survey, we were left with six domains that are both relevant and accessible. This includes lexicons, news, and magazine articles in simple German (lebenshilfe.de/de/leichte-sprache, hurraki.de/wiki, nachrichtenleicht.de), texts written for children (klexikon.zum.de, geo.de/geolino), and texts for German as a Second Language learners (deutsch-perfekt.com). While this is a relatively short list compared to the number of web sites in our initial survey, these sites provide access to 34,100 materials, as identified by entering a BING search using the relevant operator specification without a specific content search term. The fact that we found so few suitable domains also showcases that the search functionality with a pre-compiled list goes beyond what could readily be achieved by a user thinking about potentially interesting sites and manually spelling out a query using the site operator. We are continuously working on expanding the list of alpha sites used by the system and welcome any information about missing options.

3.3 The Corpus Search

While there are some web sites dedicated to the distribution of reading materials for low literate readers, high-quality open source materials for literacy and basic education classes are relatively scarce. Even where materials are available, the question under which conditions teachers may alter and distribute materials often remains unclear. We want to address this issue by providing the option to specifically query for high-quality materials that have been provided as open educational resources with a corresponding license. For this,

Proceedings of the 8th Workshop on Natural Language Processing for Computer Assisted Language Learning (NLP4CALL 2019)

we are currently assembling a collection of such materials in collaboration with institutions creating materials for literacy and basic education.

In our system, this collection may be accessed through the same interface as the unrestricted and the filtered web search. On the server side, however, a separate pipeline is involved, as illustrated in Figure 1. Unlike the web search, which processes the retrieved web data on the fly, the corpus search accesses already analyzed data. For this, we first perform the relevant linguistic analyses on the reading materials offline using the same NLP pipeline and readability classification as for the web search.

We use an Apache Solr index to make the corpus accessible to content queries.11 Solr is a query platform for full-text indexing and high-performance search. It is based on the Lucene search library and can be easily integrated into Java applications. When a query request for the corpus search is sent by the user, the search results are fetched from that local Apache Solr index. In order to load the preprocessed documents into a Solr index, we transform each document into an XML file. We add a metaPath element which contains the name of the project responsible for the creation of the material, the author’s name and the title. Additionally, we assign a text_de attribute to each text element, which ensures that Solr recognizes the text as German and applies the corresponding linguistic processing. The following tokenizer and filter factories, which are provided by Solr, have been set in the schema file of the index:

StandardTokenizerFactory: splits the text into tokens.

LowerCaseFilterFactory: converts all tokens into lowercase to allow case-insensitive matching.

StopFilterFactory: removes all the words given in a predefined list of German stop words provided by the Snowball Project.12

GermanNormalizationFilterFactory: normalizes the German special characters ä, ö, ü, and ß.

GermanLightStemFilterFactory: stems the tokens using the light stemming algorithm implemented by the University of Neuchâtel.13

11 http://lucene.apache.org/solr

12 http://snowball.tartarus.org

13 http://members.unine.ch/jacques.savoy/clef

This ensures that the texts are recognized, processed, and indexed as German, which will improve the query results. Given a query, Solr returns the relevant text ids and the system can then deserialize the documents given the returned ids. Just as for the web search, the list of documents is then passed to the front-end, where the user can rerank, filter, and visualize the results.

We are still in the process of compiling the collection of high-quality reading materials specifically designed for low literacy contexts. To be able to test our pipeline and evaluate the performance of the different search modes already at this stage, we use a test corpus of 10,012 texts crawled from web sites providing reading materials for low literate readers, compiled for the original KANSAS Suche (Weiss et al., 2018). We cleaned the corpus in a semi-automatic approach, in which we separated texts that had been extracted together and excluded non-reading materials. While we are confident that the degree of preprocessing is sufficient to demonstrate the benefits of our future corpus when compared to the web search options, it should be kept in mind that the current results are only a first approximation. The pilot corpus was only minimally cleaned so that it may still contain, for example, advertisements that would not be included in the high-quality corpus being built. The pilot corpus also lacks explicit copyright information and thus is unsuitable outside of scientific analysis and demonstration purposes.

4 Comparison of Search Modes

Our system combines three search modes that have complementary strengths and weaknesses. Unrestricted web search can access a vast quantity of material, yet most of it is not designed for low literate readers. Also, the lack of quality control may yield many unsuitable results for certain search terms, for example, those prone to elicit advertising. In contrast, restricted searches or corpus searches draw results from a considerably smaller pool of documents. Thus, although it stands to reason that the retrieved text results are of more consistent, higher quality and more likely to be at appropriate reading levels for our target users, there may be too few results.

To test these assumptions and see how the strengths and weaknesses play out across several queries, we compared the three search modes with regard to three criteria:

Coverage: Does the search mode return enough results to satisfy a query request?

Readability: Are the retrieved texts readable for low literate readers?

Suitability: Are the retrieved texts suitable as teaching materials?

While the first criterion addresses a question of general interest for text retrieval systems, the other two are more specifically tailored towards the needs of our system as a retrieval system for low literacy contexts. We expect all three search modes to show satisfactory performance in general, but to exhibit the strengths and weaknesses hypothesized above.

4.1 Set-Up

For each search mode, we queried ten search terms requesting 30 results per term. The ten search terms were obtained by randomly sampling from a list of candidate terms that was compiled from the basic vocabulary list for illiteracy teaching by Bockrath and Hubertus (2014). The selection criterion for candidate terms was to identify nouns that in a wider sense relate to topics of basic education such as finance, health, politics, and society. The final list consists of the intersection of candidate terms selected by two researchers. The final ten search terms used in our evaluation are: Alkohol (“alcohol”), Deutschkurs (“German course”), Erkältung (“common cold”), Heimat (“home(land)”), Internet (“internet”), Kirche (“church”), Liebe (“love”), Polizei (“police”), Radio (“radio”), and Staat (“state”). In the following, all search terms will be referred to by their English translation.

All texts were then automatically analyzed and rated by the readability classifier used in our system. We calculated their Alpha Readability Level both including and excluding the text length criterion of Weiss et al. (2018) based on Gausche et al. (2014); Kretschmann and Wieken (2010), since we found that many materials for low literate readers available on the web do not adhere to the text length criterion. Since texts may be relatively easily shortened by teachers before using them for literacy and basic education classes, we include both sets in our evaluation.

4.2 Coverage of Retrieved Text

Our first evaluation criterion concerns the coverage of retrieved material across search terms. While the unrestricted web search (referred to as “www”) draws from a broad pool of available data, the restricted web search (“filter”) and the corpus search (“corpus”) are based on a considerably more restricted set of texts. Therefore, we first investigated to which extent the different search modes are capable of providing the requested number of results across search terms. Overall, we obtained 817 texts for the requested 900 results. While the unrestricted web search returned the requested number of 30 results for each search term (i.e., overall 300 texts), the corpus search only retrieved 261 texts and the filtered web search 256 texts. The latter two search modes struggled to provide enough texts for the search terms Deutschkurs (“German course”) and Erkältung (“common cold”). As shown in Figure 3, the corpus search returns only nine results for the former and 12 for the latter term, while the filtered search identifies seven and nine results, respectively. For the other eight search terms, all three search modes retrieve the requested 30 results.

The results indicate that with regard to plain coverage, the web search outperforms the two restricted search modes. This is expected since neither the filtered web search nor the corpus search has access to the vast number of documents accessible to the unrestricted web search. However, they do provide the requested number of results for the majority of queries, illustrating that even the more restrictive search options may well provide sufficient coverage for many likely search terms.

[Figure 3: Results per term across search modes. One panel per search term; y-axis: Search Mode (www, filter, corpus); x-axis: Number of Results (0 to 30).]

4.3 Readability of Retrieved Texts

The second criterion that is essential for our comparison is the readability of the retrieved texts on a readability scale for low literacy. For this, we used the readability classifier integrated in our system to assess the Alpha Level of each text, once with and once without the text length criterion. Tables 1 and 2 show the overall representation of Alpha Readability Levels aggregated over search terms for each search mode including and ignoring text length as a rating criterion.

Alpha Level    WWW       Filter    Corpus
Alpha 3         0.00%     0.39%     4.98%
Alpha 4        19.00%    15.23%    35.25%
Alpha 5        14.33%     8.20%    14.56%
Alpha 6        10.00%     7.42%     8.43%
No Alpha       56.67%    68.75%    36.78%

Table 1: Distribution of Alpha Readability Levels including text length across search modes

Alpha Level    WWW       Filter    Corpus
Alpha 3         1.00%     4.30%    13.41%
Alpha 4        49.67%    53.91%    50.19%
Alpha 5        21.00%    22.66%    18.77%
Alpha 6         2.00%    10.16%     7.28%
No Alpha       26.33%     8.98%    10.34%

Table 2: Distribution of Alpha Readability Levels ignoring text length across search modes

As expected, the unrestricted web search elicits a high percentage of texts that are above the level of low literate readers. 56.67% of texts are rated as No Alpha and not a single text receives the rating Alpha 3. When ignoring text length, the rate of No Alpha texts drops to 26.33% but there are still only 1.00% Alpha 3 texts. It should be noted, though, that 49.67% of results are rated as Alpha 4 when ignoring text length, indicating that the unrestricted web search is not completely unsuitable for the retrieval of low literacy reading materials even though there is clear room for improvement.

The filtered web search does not seem to perform much better at first glance. On the contrary, with 68.75% it shows the overall highest rate of No Alpha labeled texts when including the text length criterion and it retrieves only 0.39% Alpha 3 texts. However, when ignoring text length, the rate of No Alpha texts drops to 8.98%, the lowest rate of No Alpha texts observed across all three search modes. It also retrieves 4.30% of Alpha 3 texts and 53.91% of Alpha 4 texts. This shows that while many of the texts found by the filtered web search seem to be too long, they are otherwise better suited for the needs of low literate readers than texts found with the unrestricted web search.

The corpus search exhibits the lowest rate of No Alpha texts (36.78%) and the highest rate of Alpha 3 and Alpha 4 texts (4.98% and 35.25%, respectively) when including the text length criterion. Without it, the rate of Alpha 3 texts even rises to 13.41%. Interestingly, though, it then has a slightly higher rate of No Alpha texts than the filtered web search (10.34% compared to 8.98%). That the corpus contains texts that are beyond the Alpha Levels may seem counter-intuitive at first. However, the test corpus also includes texts written for language learners, which may very well exceed the Alpha Levels. Considering that the majority of texts identified by this search mode are within the reach of low literate readers, this is not an issue for the test corpus. The selection of suitable materials does yield more fitting results in terms of readability.

Figure 4 shows the distribution of Alpha Readability Levels ignoring text length across search terms. It can be seen that for all search terms, Alpha Level 4 is systematically the most commonly retrieved level. A few patterns relating search terms and elicited Alpha Levels can be observed. Deutschkurs (“German course”) and Erkältung (“common cold”) elicit notably fewer Alpha 4 texts, which is due to the lack of coverage in the filtered web search and the corpus search. Other than that, Polizei (“police”) elicits by far the fewest No Alpha texts and among the most Alpha 3 and Alpha 4 texts, indicating that texts retrieved for this topic are overall better suited for low literacy levels. In contrast, Radio (“radio”) elicits the most No Alpha texts and among the fewest Alpha 3 texts. However, it also exhibits the highest rate of Alpha 4 texts. Thus, overall it seems that the distribution


of Alpha Readability Levels is comparable across search terms. This is in line with our expectations, given that all terms were drawn from a list of basic German vocabulary.

[Figure 4: Distribution of query results across search terms by Alpha Level (ignoring text length). One panel per level (Alpha 3, Alpha 4, Alpha 5, Alpha 6, no Alpha); y-axis: Search Term; x-axis: Number of Results; bars grouped by Search Mode (corpus, filter, www).]

4.4 Suitability of Retrieved Texts

Our final criterion concerns the suitability of texts for reading purposes. As mentioned, some materials from the web are ill-suited as reading materials for literacy and basic education classes. Search results may not be reading materials but rather sales pages, advertisements, or videos. Certain search terms, for instance those denoting purchasable items such as Radio (“radio”), are more likely to yield such results than others. Other terms, such as those relating to politics, may be prone to elicit biased materials or texts containing misinformation. The challenge of suitability has already been recognized in previous web-search based systems, such as FERN (Walmsley, 2015) or netTrekker (Huff, 2008), where it was addressed by restricting the web search to manually verified web pages.

We investigated to which extent suitability of contents is an issue for our search modes by manually labeling materials as suitable or unsuitable on a stratified sample of the full set of queries that samples across search modes, search terms, and Alpha Readability Levels (N=451). Note that since Alpha Readability Levels are not evenly distributed across search modes, the stratified sample does not contain the same number of hits for each search term. However, each search mode is represented approximately evenly with 159 results for the corpus search, 142 for the filtered web search, and 150 for the unrestricted web search.

On this sample, we let two human annotators flag search results as unsuitable if they were a) advertisements, b) brief captions of a video or figure, or c) a hub for other web pages on the topic. Such hub pages linking to relevant topics are not unsuitable per se, in the way advertisements or brief captions are. However, since the point of a search engine such as KANSAS Suche 2.0 is to analyze the web resource itself rather than the pages being linked to on the page, such pages are unsuitable. Since the reliable evaluation of bias and misinformation is beyond the scope of this paper, we excluded this aspect from our evaluation. We also discarded materials as not suitable if they contained neither the search term nor a synonym of the search term. Since the information retrieval algorithm used in our corpus search is less sophisticated than the one used by BING, stemming mistakes can lead to such unrelated and thus unsuitable results. Finally, we restricted texts to 1,500 words and flagged everything beyond that as unsuitable. This is based on the practical consideration that it would take teachers too much time to review such long texts for suitability. However, this rule only became relevant for six texts from the corpus, which contained full chapters from booklets on basic education matters written in simplified language.

Based on this definition of suitability that we specifically fitted for the needs of our system, our two annotators show a prevalence and bias corrected Cohen’s kappa of κ = 0.765. For the following evaluation of suitability, we only considered texts as not suitable if both annotators flagged them as such. Results that have been classified as unsuitable by only one annotator were treated as suitable materials. This resulted in overall 137 texts being flagged as not suitable, i.e., 30.38% of all search results. When splitting these results across search modes, we find that the unrestricted web search has the highest rate of unsuitable results: 52.70% of all retrieved materials were identified as not suitable. In contrast, only 8.80% of the corpus search results were labeled as unsuitable. For the filtered web search, the percentage of unsuitable materials lies between these two extremes, at 31.00%. Figure 5 shows the distribution


of suitable and not suitable materials across search modes split by search terms. As can be seen, some search terms elicit more unsuitable materials than others. Deutschkurs (“German course”), for example, yields far more unsuitable than suitable materials for both web searches. This puts into perspective our previous finding that the unrestricted web search has higher coverage for this term than the corpus search. At least for the sample analyzed here, the corpus search retrieves considerably more suitable texts than either web search, despite its lower overall coverage.14 The terms Heimat (“home(land)”), Internet (“internet”), and Radio (“radio”) also seem to be particularly prone to yield unsuitable materials in an unrestricted web search.

14 This does not hold for the other term for which low coverage for all but the unrestricted web search was reported. For the search term Erkältung (“common cold”), the unrestricted web search finds a high number of suitable results.

[Figure 5: Suitability of sample texts (N=451) across search modes by query term. One panel per search term; y-axis: Search Mode (www, filter, corpus); x-axis: Number of Results; bars split into suitable and not suitable.]

But not all search terms elicit high numbers of unsuitable results in the unrestricted web search, see for example Alkohol (“alcohol”), Erkältung (“common cold”), and Staat (“state”). With some exceptions, such as Erkältung (“common cold”) and Heimat (“home(land)”), the filtered web search behaves similarly to the unrestricted web search with regard to retrieving suitable materials. The corpus search clearly outperforms both web-based approaches in terms of suitability. The only term that elicits a notable quantity of unsuitable materials is Heimat (“home(land)”), for which the corpus includes some advertising texts written in plain language. For all other search terms, corpus materials flagged as unsuitable were flagged because of their length.

Figure 6 displays the distribution of suitable and unsuitable materials across search modes split by readability level ignoring text length. It shows that more than half of the Alpha Level 4 texts found in the unrestricted web search as well as approximately half of those found in the filtered web search are actually unsuitable. Similarly, a considerable number of Alpha Level 5 and nearly all Alpha Level 6 texts retrieved by the unrestricted web search in our sample are flagged as unsuitable. This puts the previous findings concerning the readability of search results into a new perspective. After excluding unsuitable results, both web searches yield considerably fewer results that are readable for low literacy levels as compared to the corpus search.

4.5 Discussion

The comparison of search modes confirmed our initial assumptions about the strengths and weaknesses of the different approaches. The unrestricted web search has the broadest plain coverage but elicits considerably more texts which require too high literacy skills or contain unsuitable materials. Although it retrieves a high proportion of Alpha 4 texts, the majority of these consist of unsuitable material. After correcting for this, it becomes apparent that users may struggle to obtain suitable reading materials at low literacy levels when solely relying on an unrestricted web search. However, the rate of unsuitable materials differs widely depending on the search term. Thus, it stands to reason that the unrestricted web search works well for some queries while others will be less fruitful for low literacy contexts. In these cases, the filtered web search or the corpus search can be of assistance. They both have been shown to retrieve more texts suitable for low literacy levels despite struggling with coverage


for some search terms. Interestingly, the corpus search was shown to exceed the web search in coverage after subtracting unsuitable results for one search term. This demonstrates that raw coverage may be misleading depending on the suitability of the retrieved results. All in all, the restricted web search showed fewer advantages than the other two search modes as it suffered from both low coverage and unsuitable materials. However, it does elicit a considerably lower ratio of unsuitable materials than the unrestricted web search, while keeping the benefit of providing up-to-date materials. Thus, we would still argue that it is a valuable contribution to the overall system.

[Figure 6: Suitability of sample texts (N=451) across search modes by Alpha Levels (ignoring text length). One panel per level (Alpha 3, Alpha 4, Alpha 5, Alpha 6, no Alpha); y-axis: Search Mode (www, filter, corpus); x-axis: Number of Results; bars split into suitable and not suitable.]

Overall, the results show that depending on the search term and targeted readability level, it makes sense to allow users to switch between search modes so that they can identify the ideal configuration for their specific needs, as there is no single search mode that is superior across contexts.

5 Summary and Outlook

We presented our ongoing work on KANSAS Suche 2.0, a hybrid text retrieval system for reading materials for low literate readers of German. Unlike previous systems, our approach makes it possible to combine the strengths of unrestricted web search (broad coverage of current materials) with those of more restricted searches in curated corpora (high-quality materials with clear copyright information). We demonstrated how, depending on the search term, the suitability and readability of results retrieved by an unrestricted web search can become problematic for users searching for materials at low literacy levels. Our study showed that a restricted web search and the search of materials in our corpus are valuable alternatives in these cases. Overall, there is no single best solution for all searches, so our hybrid solution allows users to choose for themselves which search mode suits their needs best for a given query.

While the system itself is fully implemented, we are still compiling the corpus of reading materials for low literacy contexts and working on expanding the list of domains for our restricted web search. We are also conducting usability studies with teachers of low literacy and basic education classes and with German language teachers in training. We plan to expand the functionality of the corpus search to also support access to the corpus solely based on linguistic properties and reading level characteristics, without a content query. This will make it possible to retrieve texts richly representing particular linguistic properties or constructions that are too infrequent when having to focus on a subset of the data using the content query. We are also considering development of a second readability classifier targeting CEFR levels to accommodate the fact that German adult literacy and basic education classes are not only attended by low literate native speakers but also by learners of German as a second language.

Acknowledgments

KANSAS is a research and development project funded by the Federal Ministry of Education and Research (BMBF) as part of the AlphaDekade15 (“Decade of Literacy”), grant number W143500.

15 https://www.alphadekade.de

References

Rebekah George Benjamin. 2012. Reconstructing readability: Recent developments and recommendations in the analysis of text difficulty. Educational Psychology Review, 24:63–88.

BMBF and KMK. 2016. General Agreement on the National Decade for Literacy and Basic Skills 2016-2026. Reducing functional illiteracy and raising the level of basic skills in Germany. Bundesministerium für Bildung und Forschung
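As a supplementary illustration of the indexing step in Section 3.3, the following is a minimal sketch of how the described analysis chain and a document upload message could be generated. The five factory class names and their order are taken from the text. The stop word file path, the `format` attribute, the `id` field, and the layout of the update message are assumptions modelled on common Solr configurations and are not confirmed by the paper. The helper names `build_field_type` and `build_document` are hypothetical.

```python
import xml.etree.ElementTree as ET

# Analysis chain in the order listed in Section 3.3.
# Attribute values on the stop filter are assumptions, not taken from the paper.
ANALYSIS_CHAIN = [
    ("tokenizer", "solr.StandardTokenizerFactory", {}),
    ("filter", "solr.LowerCaseFilterFactory", {}),
    ("filter", "solr.StopFilterFactory",
     {"ignoreCase": "true", "words": "lang/stopwords_de.txt", "format": "snowball"}),
    ("filter", "solr.GermanNormalizationFilterFactory", {}),
    ("filter", "solr.GermanLightStemFilterFactory", {}),
]


def build_field_type(name="text_de"):
    """Build a schema <fieldType> element holding the German analysis chain."""
    field_type = ET.Element("fieldType", {"name": name, "class": "solr.TextField"})
    analyzer = ET.SubElement(field_type, "analyzer")
    for tag, cls, attrs in ANALYSIS_CHAIN:
        ET.SubElement(analyzer, tag, {"class": cls, **attrs})
    return field_type


def build_document(doc_id, meta_path, text):
    """Build a hypothetical XML update message for one preprocessed text.

    The field names metaPath and text_de follow the paper's description;
    the surrounding <add><doc> layout is the generic Solr XML update format.
    """
    add = ET.Element("add")
    doc = ET.SubElement(add, "doc")
    for field_name, value in (("id", doc_id), ("metaPath", meta_path), ("text_de", text)):
        field = ET.SubElement(doc, "field", {"name": field_name})
        field.text = value
    return add


if __name__ == "__main__":
    print(ET.tostring(build_field_type(), encoding="unicode"))
    example = build_document("text-0001", "project/author/title", "Ein kurzer Beispieltext.")
    print(ET.tostring(example, encoding="unicode"))
```

The order of the chain matters: lowercasing precedes stop word removal so that the stop list can be matched case-insensitively, and stemming comes last so that it operates on normalized tokens.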

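The coverage totals reported in Section 4.2 can be re-derived from the per-term counts given in the text. The sketch below assumes, as the paper states, that every term and mode combination not explicitly mentioned returned the full 30 results. The variable and function names are illustrative only.

```python
REQUESTED_PER_TERM = 30
TERMS = ["Alkohol", "Deutschkurs", "Erkältung", "Heimat", "Internet",
         "Kirche", "Liebe", "Polizei", "Radio", "Staat"]

# Per-term counts that fell short of 30, as reported in Section 4.2.
REPORTED_SHORTFALLS = {
    "www": {},
    "corpus": {"Deutschkurs": 9, "Erkältung": 12},
    "filter": {"Deutschkurs": 7, "Erkältung": 9},
}


def results_per_mode(shortfalls):
    """Sum the results per search mode, defaulting to the full request size."""
    return {
        mode: sum(partial.get(term, REQUESTED_PER_TERM) for term in TERMS)
        for mode, partial in shortfalls.items()
    }


totals = results_per_mode(REPORTED_SHORTFALLS)
requested = REQUESTED_PER_TERM * len(TERMS) * len(REPORTED_SHORTFALLS)

if __name__ == "__main__":
    for mode, count in totals.items():
        print(f"{mode}: {count} of {REQUESTED_PER_TERM * len(TERMS)}")
    print(f"overall: {sum(totals.values())} of {requested}")
```

Under this assumption the per-mode sums come to 300, 261 and 256 texts, which add up to the 817 of 900 requested results stated in the paper.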

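The agreement statistic and the consensus rule from Section 4.4 can be sketched as follows. This assumes that the reported "prevalence and bias corrected Cohen's kappa" is PABAK in its usual two-category form, 2 * p_o - 1, where p_o is the observed agreement; the paper does not spell out the formula. The annotator labels below are invented toy data, not the study's annotations.

```python
def pabak(labels_a, labels_b):
    """Prevalence- and bias-adjusted kappa for two raters and two categories."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    agreements = sum(a == b for a, b in zip(labels_a, labels_b))
    observed_agreement = agreements / len(labels_a)
    return 2 * observed_agreement - 1


def consensus_unsuitable(labels_a, labels_b):
    """Flag a text as unsuitable only if both annotators flagged it."""
    return [a and b for a, b in zip(labels_a, labels_b)]


# Hypothetical labels: True means "flagged as unsuitable".
annotator_a = [True, True, False, False, True, False, False, False]
annotator_b = [True, False, False, False, True, False, True, False]

toy_kappa = pabak(annotator_a, annotator_b)
toy_flags = consensus_unsuitable(annotator_a, annotator_b)

# Figures from the paper: 137 of 451 sampled texts flagged by both annotators.
reported_share = round(100 * 137 / 451, 2)

# Observed agreement implied by kappa = 0.765 under the PABAK assumption.
implied_agreement = (0.765 + 1) / 2

if __name__ == "__main__":
    print(toy_kappa, sum(toy_flags), reported_share, implied_agreement)
```

Requiring both annotators to agree is the conservative choice here: a text dropped from the suitable set is one that neither annotator would defend, at the cost of possibly keeping some borderline results.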