Characterizing Fairness Over the Set of Good Models Under Selective Labels


Amanda Coston *1, Ashesh Rambachan *2, Alexandra Chouldechova 3

* Equal contribution. 1 Heinz College and Machine Learning Department, Carnegie Mellon University. 2 Department of Economics, Harvard University. 3 Heinz College, Carnegie Mellon University. Correspondence to: Amanda Coston, Ashesh Rambachan.

Proceedings of the 38th International Conference on Machine Learning, PMLR 139, 2021. Copyright 2021 by the author(s).

Abstract

Algorithmic risk assessments are used to inform decisions in a wide variety of high-stakes settings. Often multiple predictive models deliver similar overall performance but differ markedly in their predictions for individual cases, an empirical phenomenon known as the “Rashomon Effect.” These models may have different properties over various groups, and therefore have different predictive fairness properties. We develop a framework for characterizing predictive fairness properties over the set of models that deliver similar overall performance, or “the set of good models.” Our framework addresses the empirically relevant challenge of selectively labelled data in the setting where the selection decision and outcome are unconfounded given the observed data features. Our framework can be used to 1) audit for predictive bias; or 2) replace an existing model with one that has better fairness properties. We illustrate these use cases on a recidivism prediction task and a real-world credit-scoring task.

1. Introduction

Algorithmic risk assessments are used to inform decisions in high-stakes settings such as health care, child welfare, criminal justice, consumer lending and hiring (Caruana et al., 2015; Chouldechova et al., 2018; Kleinberg et al., 2018; Fuster et al., 2020; Raghavan et al., 2020). Unfettered use of such algorithms in these settings risks disproportionate harm to marginalized or protected groups (Barocas & Selbst, 2016; Dastin, 2018; Vigdor, 2019). As a result, there is widespread interest in measuring and limiting predictive disparities across groups.

The vast literature on algorithmic fairness offers numerous methods for learning anew the best performing model among those that satisfy a chosen notion of predictive fairness (e.g. Zemel et al. (2013), Agarwal et al. (2018), Agarwal et al. (2019)). However, for real-world settings where a risk assessment is already in use, practitioners and auditors may instead want to assess disparities with respect to the current model, which we term the benchmark model. For example, the benchmark model for a bank may be an existing credit score used to approve loans. The relevant question for practitioners is: Can we improve upon the benchmark model in terms of predictive fairness with minimal change in overall accuracy?

We explore this question through the lens of the “Rashomon Effect,” a common empirical phenomenon whereby multiple models perform similarly overall but differ markedly in their predictions for individual cases (Breiman, 2001). These models may perform quite differently over various groups, and therefore have different predictive fairness properties. We propose an algorithm, Fairness in the Rashomon Set (FaiRS), to characterize predictive fairness properties over the set of models that perform similarly to a chosen benchmark model. We refer to this set as the set of good models (Dong & Rudin, 2020). FaiRS is designed to efficiently answer the following questions: What is the range of predictive disparities that could be generated over the set of good models? What is the disparity minimizing model within the set of good models?

A key empirical challenge in domains such as credit lending is that outcomes are not observed for all cases (Lakkaraju et al., 2017; Kleinberg et al., 2018). This selective labels problem is particularly vexing in the context of assessing predictive fairness. Our framework addresses selectively labelled data in contexts where the selection decision and outcome are unconfounded given the observed data features.

Our methods are useful for legal audits of disparate impact. In various domains, decisions that generate disparate impact must be justified by “business necessity” (civ, 1964; ECO, 1974; Barocas & Selbst, 2016). For instance, financial regulators investigate whether credit lenders could have offered more loans to minority applicants without affecting default rates (Gillis, 2019). Employment regulators may investigate whether resume screening software screens out underrepresented applicants for reasons that cannot be attributed to

the job criteria (Raghavan et al., 2020). Our methods provide one possible formalization of the business necessity criteria. An auditor can use FaiRS to assess whether there exists an alternative model that reduces predictive disparities without compromising performance relative to the benchmark model. If such a model exists, then it is difficult to justify the benchmark model on the grounds of business necessity.

Our methods can also be a useful tool for decision makers who want to improve upon an existing model. A decision maker may use FaiRS to search for a prediction function that reduces predictive disparities without compromising performance relative to the benchmark model. We emphasize that the effective usage of our methods requires careful thought about the broader social context surrounding the setting of interest (Selbst et al., 2019; Holstein et al., 2019).

Contributions: We (1) develop an algorithmic framework, Fairness in the Rashomon Set (FaiRS), to investigate predictive disparities over the set of good models; (2) provide theoretical guarantees on the generalization error and predictive disparities of FaiRS [§ 4]; (3) propose a variant of FaiRS that addresses the selective labels problem and achieves the same guarantees under oracle access to the outcome regression function [§ 5]; (4) use FaiRS to audit the COMPAS risk assessment, finding that it generates larger predictive disparities between black and white defendants than any model in the set of good models [§ 6]; and (5) use FaiRS on a selectively labelled credit-scoring dataset to build a model with lower predictive disparities than a benchmark model [§ 7]. All proofs are given in the Supplement.

2. Background and Related Work

2.1. Rashomon Effect

In a seminal paper on statistical modeling, Breiman (2001) observed that often a multiplicity of good models achieve similar accuracy by relying on different features, which he termed the “Rashomon effect.” Even though they have similar accuracy, these models may differ along other key dimensions, and recent work considers the implications of the Rashomon effect for model simplicity, interpretability, and explainability (Fisher et al., 2019; Marx et al., 2020; Rudin, 2019; Dong & Rudin, 2020; Semenova et al., 2020). We introduce these ideas into research on algorithmic fairness by studying the range of predictive disparities that can be achieved over the set of good models. We provide computational techniques to directly and efficiently investigate the range of predictive disparities that may be generated over the set of good models. Our recidivism risk prediction and credit scoring applications demonstrate that the set of good models is a rich empirical object, and we illustrate how characterizing the range of achievable predictive fairness properties over this set can be used for model learning and evaluation.

2.2. Fair Classification and Fair Regression

An influential literature on fair classification and fair regression constructs prediction functions that minimize loss subject to a predictive fairness constraint chosen by the decision maker (Dwork et al., 2012; Zemel et al., 2013; Hardt et al., 2016; Menon & Williamson, 2018; Donini et al., 2018; Agarwal et al., 2018; 2019; Zafar et al., 2019). In contrast, we construct prediction functions that minimize a chosen measure of predictive disparities subject to a constraint on overall performance. This is useful when decision makers find it difficult to specify acceptable levels of predictive disparities, but instead know what performance loss is tolerable. It may be unclear, for instance, how a lending institution should specify acceptable differences in credit risk scores across groups, but the lending institution can easily specify an acceptable average default rate among approved loans. Our methods allow users to directly search for prediction functions that reduce disparities given such a specified loss tolerance. Similar in spirit to our work, Zafar et al. (2019) provide a method for selecting a classifier that minimizes a particular notion of predictive fairness, “decision boundary covariance,” subject to a performance constraint. Our method applies more generally to a large class of predictive disparities and covers both classification and regression tasks.

Although the “reductions approach” used in Agarwal et al. (2018; 2019) was originally developed to solve fair classification and fair regression problems, we show that it can be suitably adapted to solve general optimization problems over the set of good models. This provides a general computational approach that may be useful for investigating the implications of the Rashomon Effect for other model properties.

In constructing the set of good models with comparable performance to a benchmark model, our work bears resemblance to techniques that “post-process” existing models. Post-processing techniques typically modify the predictions from an existing model to achieve a target notion of fairness (Hardt et al., 2016; Pleiss et al., 2017; Kim et al., 2019). By contrast, our methods only use the existing model to calibrate the performance constraint, and the resulting models need not share any other properties with the benchmark model. While post-processing techniques often require access to individual predictions from the benchmark model, our approach only requires that we know its average loss.

2.3. Selective Labels and Missing Data

In settings such as criminal justice and credit lending, the training data only contain labeled outcomes for a selectively observed sample from the full population of interest. For example, banks use risk scores to assess all loan applicants,

yet the historical data only contain default/repayment outcomes for those applicants whose loans were approved. This is a missing data problem (Little & Rubin, 2019). Because the outcome label is missing based on a selection mechanism, this type of missing data is known as the selective labels problem (Lakkaraju et al., 2017; Kleinberg et al., 2018). One solution treats the selectively labelled population as if it were the population of interest, and proceeds with training and evaluation on the selectively labelled population only. This is also called the “known good-bad” (KGB) approach (Zeng & Zhao, 2014; Nguyen et al., 2016). However, evaluating a model on a population different from the one on which it will be used can be highly misleading, particularly with regard to predictive fairness measures (Kallus & Zhou, 2018; Coston et al., 2020). Unfortunately, most fair classification and fair regression methods do not offer modifications to address the selective labels problem. Our framework does [§ 5].

Popular in credit lending applications, “reject inference” procedures incorporate information from the selectively unobserved cases (i.e., rejected applicants) in model construction and evaluation by imputing missing outcomes using augmentation, reweighing or extrapolation-based approaches (Li et al., 2020; Mancisidor et al., 2020). These approaches are similar to domain adaptation techniques, and indeed the selective labels problem can be cast as domain adaptation since the labelled training data is not a random sample of the target distribution. Most relevant to our setting are covariate shift methods for domain adaptation. Reweighing procedures have been proposed for jointly addressing covariate shift and fairness (Coston et al., 2019; Singh et al., 2021). While FaiRS similarly uses iterative reweighing to solve our joint optimization problem, we explicitly use extrapolation to address covariate shift. Empirically we find extrapolation can achieve lower disparities than reweighing.

3. Setting and Problem Formulation

The population of interest is described by the random vector $(X_i, A_i, D_i, Y_i^*) \sim P$, where $X_i \in \mathcal{X}$ is a feature vector, $A_i \in \{0, 1\}$ is a protected or sensitive attribute, $D_i \in \mathcal{D}$ is the decision and $Y_i^* \in \mathcal{Y} \subseteq [0, 1]$ is a discrete or continuous outcome. The training data consist of $n$ i.i.d. draws from the joint distribution $P$ and may suffer from a selective labels problem: There exists $\mathcal{D}^* \subseteq \mathcal{D}$ such that the outcome is observed if and only if the decision satisfies $D_i \in \mathcal{D}^*$. Hence, the training data are $\{(X_i, A_i, D_i, Y_i)\}_{i=1}^n$, where $Y_i = Y_i^* 1\{D_i \in \mathcal{D}^*\}$ is the observed outcome and $1\{\cdot\}$ denotes the indicator function.

Given a specified set of prediction functions $\mathcal{F}$ with elements $f : \mathcal{X} \to [0, 1]$, we search for the prediction function $f \in \mathcal{F}$ that minimizes or maximizes a measure of predictive disparities with respect to the sensitive attribute subject to a constraint on predictive performance. We measure performance using average loss, where $l : \mathcal{Y} \times [0, 1] \to [0, 1]$ is the loss function and $\mathrm{loss}(f) := E[l(Y_i^*, f(X_i))]$. The loss function is assumed to be 1-Lipschitz under the $\ell_1$-norm following Agarwal et al. (2019). The constraint on performance takes the form $\mathrm{loss}(f) \le \epsilon$ for some specified loss tolerance $\epsilon \ge 0$. The set of prediction functions satisfying this constraint is the set of good models.

The loss tolerance may be chosen based on an existing benchmark model $\tilde{f}$ such as an existing risk score, e.g., by setting $\epsilon = (1 + \delta)\,\mathrm{loss}(\tilde{f})$ for some $\delta \in [0, 1]$. The set of good models now describes the set of models whose performance lies within a $\delta$-neighborhood of the benchmark model. When defined in this manner, the set of good models is also called the “Rashomon set” (Rudin, 2019; Fisher et al., 2019; Dong & Rudin, 2020; Semenova et al., 2020).

3.1. Measures of Predictive Disparities

We consider measures of predictive disparity of the form

$$\mathrm{disp}(f) := \beta_0 E[f(X_i) \mid E_{i,0}] + \beta_1 E[f(X_i) \mid E_{i,1}], \tag{1}$$

where $E_{i,a}$ is a group-specific conditioning event that depends on $(A_i, Y_i^*)$ and $\beta_a \in \mathbb{R}$ for $a \in \{0, 1\}$ are chosen parameters. Note that we measure predictive disparities over the full population (i.e., not conditional on $D_i$).

For different choices of the conditioning events $E_{i,0}, E_{i,1}$ and parameters $\beta_0, \beta_1$, our predictive disparity measure summarizes violations of common definitions of predictive fairness.

Definition 1. Statistical parity (SP) requires the prediction $f(X_i)$ to be independent of the attribute $A_i$ (Dwork et al., 2012; Zemel et al., 2013; Feldman et al., 2015). By setting $E_{i,a} = \{A_i = a\}$ for $a \in \{0, 1\}$ and $\beta_0 = -1$, $\beta_1 = 1$, $\mathrm{disp}(f)$ measures the difference in average predictions across values of the sensitive attribute.

Definition 2. Suppose $\mathcal{Y} = \{0, 1\}$. Balance for the positive class (BFPC) and balance for the negative class (BFNC) require the prediction $f(X_i)$ to be independent of the attribute $A_i$ conditional on $Y_i^* = 1$ and $Y_i^* = 0$ respectively (e.g., Chapter 2 of Barocas et al. (2019)). Defining $E_{i,a} = \{Y_i^* = 1, A_i = a\}$ for $a \in \{0, 1\}$ and $\beta_0 = -1$, $\beta_1 = 1$, $\mathrm{disp}(f)$ describes the difference in average predictions across values of the sensitive attribute given $Y_i^* = 1$. If instead $E_{i,a} = \{Y_i^* = 0, A_i = a\}$ for $a \in \{0, 1\}$, then $\mathrm{disp}(f)$ equals the difference in average predictions across values of the sensitive attribute given $Y_i^* = 0$.

Our focus on differences in average predictions across groups is a common relaxation of parity-based predictive fairness definitions (Corbett-Davies et al., 2017; Mitchell et al., 2019).

Our predictive disparity measure can also be used for fairness promoting interventions, which aim to increase opportunities for a particular group. For instance, the decision maker may wish to search for the prediction function among the set of good models that minimizes the average predicted risk score $f(X_i)$ for a historically disadvantaged group.

Definition 3. Defining $E_{i,1} = \{A_i = 1\}$ and $\beta_0 = 0$, $\beta_1 = 1$, $\mathrm{disp}(f)$ measures the average risk score for the group with $A_i = 1$. This is an affirmative action-based fairness promoting intervention. Further assuming $\mathcal{Y} = \{0, 1\}$ and defining $E_{i,1} = \{Y_i^* = 1, A_i = 1\}$, $\mathrm{disp}(f)$ measures the average risk score for the group with both $Y_i^* = 1$, $A_i = 1$. This is a qualified affirmative action-based fairness promoting intervention.

Our approach can accommodate other notions of predictive disparities. In Supplement § A.4, we show how to achieve bounded group loss, which requires that the average loss conditional on each value of the sensitive attribute reach some threshold (Agarwal et al., 2019).

3.2. Characterizing Predictive Disparities over the Set of Good Models

We develop the algorithmic framework, Fairness in the Rashomon Set (FaiRS), to solve two related problems over the set of good models. First, we characterize the range of predictive disparities by minimizing or maximizing the predictive disparity measure over the set of good models. We focus on the minimization problem

$$\min_{f \in \mathcal{F}} \mathrm{disp}(f) \quad \text{s.t.} \quad \mathrm{loss}(f) \le \epsilon. \tag{2}$$

Second, we search for the prediction function that minimizes the absolute predictive disparity over the set of good models

$$\min_{f \in \mathcal{F}} |\mathrm{disp}(f)| \quad \text{s.t.} \quad \mathrm{loss}(f) \le \epsilon. \tag{3}$$

For auditors, (2) traces out the range of predictive disparities that could be generated in a given setting, thereby identifying where the benchmark model lies on this frontier. This is crucially related to the legal notion of “business necessity” in assessing disparate impact: the regulator may audit whether there exist alternative prediction functions that achieve similar performance yet generate different predictive disparities (civ, 1964; ECO, 1974; Barocas & Selbst, 2016). For decision makers, (3) searches for prediction functions that reduce absolute predictive disparities without compromising predictive performance.

4. A Reductions Approach to Optimizing over the Set of Good Models

We characterize the range of predictive disparities (2) and find the absolute predictive disparity minimizing model (3) over the set of good models using techniques inspired by the reductions approach in Agarwal et al. (2018; 2019). Although the reductions approach was originally developed to solve fair classification and fair regression problems in the case without selective labels, we extend it to solve general optimization problems over the set of good models in the presence of selective labels. For exposition, we first focus on the case without selective labels, where $\mathcal{D}^* = \mathcal{D}$ and the outcome $Y_i^*$ is observed for all observations. We solve (2) in the main text and (3) in § A.3 of the Supplement. We cover selective labels in § 5.

4.1. Computing the Range of Predictive Disparities

We consider randomized prediction functions that select $f \in \mathcal{F}$ according to some distribution $Q \in \Delta(\mathcal{F})$, where $\Delta$ denotes the probability simplex. Let $\mathrm{loss}(Q) := \sum_{f \in \mathcal{F}} Q(f)\,\mathrm{loss}(f)$ and $\mathrm{disp}(Q) := \sum_{f \in \mathcal{F}} Q(f)\,\mathrm{disp}(f)$. We solve

$$\min_{Q \in \Delta(\mathcal{F})} \mathrm{disp}(Q) \quad \text{s.t.} \quad \mathrm{loss}(Q) \le \epsilon. \tag{4}$$

While it may be possible to solve this problem directly for certain parametric function classes, we develop an approach that can be applied to any generic function class.[1] A key object for doing so will be classifiers obtained by thresholding prediction functions. For cutoff $z \in [0, 1]$, define $h_f(x, z) = 1\{f(x) \ge z\}$ and let $\mathcal{H} := \{h_f : f \in \mathcal{F}\}$ be the set of all classifiers obtained by thresholding prediction functions $f \in \mathcal{F}$. We first reduce the optimization problem (4) to a constrained classification problem through a discretization argument, and then solve the resulting constrained classification problem through a further reduction to finding the saddle point of a min-max problem.

[1] Our error analysis only covers function classes whose Rademacher complexity can be bounded as in Assumption 1.

Following the notation in Agarwal et al. (2019), we define a discretization grid for $[0, 1]$ of size $N$ with $\alpha := 1/N$ and $\mathcal{Z}_\alpha := \{j\alpha : j = 1, \ldots, N\}$. Let $\tilde{\mathcal{Y}}_\alpha$ be an $\frac{\alpha}{2}$-cover of $\mathcal{Y}$. The piecewise approximation to the loss function is $l_\alpha(y, u) := l(\underline{y}, [u]_\alpha + \frac{\alpha}{2})$, where $\underline{y}$ is the smallest $\tilde{y} \in \tilde{\mathcal{Y}}_\alpha$ such that $|y - \tilde{y}| \le \frac{\alpha}{2}$ and $[u]_\alpha$ rounds $u$ down to the nearest integer multiple of $\alpha$. For a fine enough discretization grid, $\mathrm{loss}_\alpha(f) := E[l_\alpha(Y_i^*, f(X_i))]$ approximates $\mathrm{loss}(f)$.

Define $c(y, z) := N \times \left( l(y, z + \frac{\alpha}{2}) - l(y, z - \frac{\alpha}{2}) \right)$ and $Z_\alpha$ to be the random variable that uniformly samples $z_\alpha \in \mathcal{Z}_\alpha$ and is independent of the data $(X_i, A_i, Y_i^*)$. For $h_f \in \mathcal{H}$, define the cost-sensitive average loss function as $\mathrm{cost}(h_f) := E[c(\underline{Y}_i^*, Z_\alpha)\, h_f(X_i, Z_\alpha)]$. Lemma 1 in Agarwal et al. (2019) shows $\mathrm{cost}(h_f) + c_0 = \mathrm{loss}_\alpha(f)$ for any $f \in \mathcal{F}$, where $c_0 \ge 0$ is a constant that does not depend on $f$. Since $\mathrm{loss}_\alpha(f)$ approximates $\mathrm{loss}(f)$, $\mathrm{cost}(h_f)$ also approximates $\mathrm{loss}(f)$ up to the constant $c_0$. For $Q \in \Delta(\mathcal{F})$, define $Q_h \in \Delta(\mathcal{H})$

to be the induced distribution over threshold classifiers hf . disp(Q̃) + Õ(n−φ −φ

0 ) + Õ(n1 ) for any Q̃ that is feasible in

By the same argument,

P cost(Qh ) + c0 = lossα (Q), where (4); or 2) Q̂h = null and (4) is infeasible.2

cost(Qh ) := hf ∈H Qh (h) cost(hf ) and lossα (Q) is de-

fined analogously. Theorem 1 shows that the returned solution Q̂h is approxi-

mately feasible and achieves the lowest possible predictive

We next relate the predictive disparity measure defined disparity up to some error. Infeasibility is a concern if no

on prediction functions to a predictive disparity measure prediction function f ∈ F satisfies the average loss con-

defined on threshold classifiers. Define disp(hf ) := straint. Assumption 1 is satisfied for instance under LASSO

β0 E [hf (Xi , Zα ) | Ei,0 ] + β1 E [hf (Xi , Zα ) | Ei,1 ] . and ridge regression. If Assumption 1 does not hold, FaiRS

Lemma 1. Given any distribution over (Xi , Ai , Yi∗ ) and still delivers good solutions to the sample analogue of Eq. 5

f ∈ F, |disp(hf ) − disp(f )| ≤ (|β0 | + |β1 |) α. (see Supplement § C.1.2).

Lemma 1 combined with Jensen’s Inequality imply A practical challenge is that the solution returned by the

| disp(Qh ) − disp(Q)| ≤ (|β0 | + |β1 |) α. exponentiated gradient algorithm Q̂h is a stochastic pre-

diction function with possibly large support. Therefore it

Based on these results, we approximate (4) with its analogue may be difficult to describe, time-intensive to evaluate, and

over threshold classifiers memory-intensive to store. Results from Cotter et al. (2019)

min disp(Qh ) s.t. cost(Qh ) ≤ − c0 . (5) show that the support of the returned stochastic prediction

Qh ∈∆(H) function may be shrunk while maintaining the same guaran-

tees on its performance by solving a simple linear program.

We solve the sample analogue in which we minimize

The linear programming reduction reduces the stochastic

disp(Q d h ) ≤ ˆ, where ˆ := − ĉ0

d h ) subject to cost(Q

prediction function to have at most two support points and

plus additional slack, and ĉ0 , disp(Q

d h ), cost(Qd h ) are the we use this linear programming reduction in our empirical

associated sample analogues. We form the Lagrangian work (see § A.2 of the Supplement for details).

L(Qh , λ) := disp(Q

d h ) + λ(cost(Q d h ) − ˆ) with primal vari-

able Qh ∈ ∆(H) and dual variable λ ∈ R+ . Solving the

sample analogue is equivalent to finding the saddle point of

5. Optimizing Over the Set of Good Models

the min-max problem minQh ∈∆(H) max0≤λ≤Bλ L(Qh , λ), Under Selective Labels

where Bλ ≥ 0 bounds the Lagrange multiplier. We search We now modify the reductions approach to the empirically

for the saddle point by adapting the exponentiated gradi- relevant case in which the training data suffer from the selec-

ent algorithm used in Agarwal et al. (2018; 2019). The tive labels problem, whereby the outcome Yi∗ is observed

algorithm delivers a ν-approximate saddle point of the La- only if Di ∈ D∗ with D∗ ⊂ D. The main challenge con-

grangian, denoted (Q̂h , λ̂). Since it is standard, we provide cerns evaluating model properties over the target population

the details of and the pseudocode for the exponentiated when we only observe labels for a selective (i.e., biased)

gradient algorithm in § A.1 of the Supplement. sample. We propose a solution that uses outcome modeling,

also known as extrapolation, to estimate these properties.

4.2. Error Analysis

To motivate this approach, we observe that average loss

The suboptimality of the returned solution Q̂h can be con- and measures of predictive disparity (1) that condition on

trolled under conditions on the complexity of the model Yi∗ are not identified under selective labels without further

class F and how various parameters are set. assumptions. We introduce the following assumption on the

Assumption 1. Let Rn (H) be the Radermacher complexity nature of the selective labels problem for the binary decision

of H. There exists constants C, C 0 , C 00 > 0 and φ ≤ 1/2 setting with D = {0, 1} and D∗ = {1}.

such that Rn (H) ≤ Cn−φ and ˆ = − ĉ0 + C 0 n−φ − Assumption 2. The joint distribution (Xi , Ai , Di , Yi∗ ) ∼

C 00 n−1/2 . P satisfies 1) selection on observables: Di ⊥⊥ Yi∗ | Xi ,

0 and 2) positivity: P (Di = 1 | Xi = x) > 1 with probabil-

Theorem 1. Suppose Assumptionq 1 holds for C ≥ 2C +

p − log(δ/8) ity one.

2 + 2 ln(8N/δ) and C 00 ≥ 2 . Let n0 , n1 de-

note the number of samples satisfying the events Ei,0 , Ei,1 This assumption is common in causal inference and se-

respectively. lection bias settings (e.g., Chapter 12 of Imbens & Rubin

Then, the exponentiated gradient algorithm with ν ∝ n−φ , (2015) and Heckman (1990))3 and in covariate shift learn-

Bλ ∝ nφ and N ∝ nφ terminates in O(n4φ ) iterations and ing (Moreno-Torres et al., 2012). Under Assumption 2, the

returns Q̂h , which when viewed as a distribution over F, 2

The notation Õ(·) suppresses polynomial dependence on

satisfies with probability at least 1 − δ one of the following: ln(n) and ln(1/δ)

1) Q̂h 6= null, loss(Q̂h ) ≤ + Õ(n−φ ) and disp(Q̂h ) ≤ 3

Casting this into potential outcomes notation where Yid is theCharacterizing Fairness Over the Set of Good Models Under Selective Labels

regression function µ(x) := E[Yi∗ | Xi = x] is identified as where the nuisance function g(Xi , Yi ) is con-

E[Yi | Xi , Di = 1], and may be estimated by regressing the structed to identify the measure of interest.4 To

observed outcome Yi on the features Xi among observations illustrate, the qualified affirmative action fairness-

with Di = 1, yielding the outcome model µ̂(x). promoting intervention (Def. 3) is identified as

(Xi )µ(Xi )|Ai =1]

We can use the outcome model to estimate loss on the full E[f (Xi )|Yi∗ = 1, Ai = 1] = E[fE[µ(X i )|Ai =1]

population. One approach, Reject inference by extrapola- under Assumption 2 (See proof of Lemma 8 in the

tion (RIE), uses µ̂(x) as pseudo-outcomes for the unknown Supplement). This may be estimated by plugging in

observations (Crook & Banasik, 2004). We consider a sec- the outcome model estimate µ̂(x). Therefore, Eq. 6

ond approach, Interpolation & extrapolation (IE), which specifies the qualified affirmative action fairness-promoting

uses µ̂(x) as pseudo-outcomes for all applicants, replacing intervention by setting β0 = 0, β1 = 1, Ei,1 = 1 {Ai = 1},

the {0, 1} labels for known cases with smoothed estimates and g(Xi , Yi ) = µ̂(Xi ). This more general definition

of their underlying risks. Letting n0 , n1 be the number (Eq. 6) is only required for predictive disparity measures

of observations in the training data with Di = 0, Di = 1 that condition on events E depending on both Y ∗ and

respectively, Algorithms 1-2 summarize the RIE and IE A; it is straightforward to compute disparities based on

methods. If the outcome model could perfectly recover events E that only depend on A over the full population. To

µ(x), then the IE approach recovers an oracle setting for compute disparities based on events E that also depend on

which the FaiRS error analysis continues to hold (Theorem Y ∗ , we find the

h saddle

h point of the following Lagrangian:

ii

2 below). L(hf , λ) = Ê EZα cλ (µ̂i , Ai , Zα )hf (Xi , Zα ) − λˆ ,

where we now use case weights cλ (µ̂i , Ai , Zα ) :=

β0 β1

Algorithm 1: Reject inference by extrapolation p̂0 g(Xi , Yi )(1 − Ai ) + p̂1 g(Xi , Yi )Ai + λc(µ̂i , Zα ) with

(RIE) for the selective labels setting p̂a = Ê[g(Xi , Yi )1 {Ai = a}] for a ∈ {0, 1}. Finally, as

1

Input: {(Xi , Yi , Di = 1, Ai )}ni=1 , before, we find the saddle point using the exponentiated

0

{(Xi , Di = 0, Ai )}ni=1 gradient algorithm.

Estimate µ̂(x) by regressing Yi ∼ Xi | Di = 1.

Ŷ (Xi ) ← (1 − Di )µ̂(Xi ) + Di Yi 5.1. Error Analysis under Selective Labels

1

Output: {(Xi , Ŷi (Xi ), Di , Ai )}ni=1 , Define lossµ (f ) := E[l(µ(Xi ), f (Xi ))] for f ∈ F with

0

{(Xi , Ŷi (Xi ), Di , Ai )}ni=1 lossµ (Q) defined analogously for Q ∈ ∆(F). The error

analysis of the exponentiated gradient algorithm continues

to hold in the presence of selective labels under oracle access

to the true outcome regression function µ.

Theorem 2 (Selective Labels). Suppose Assumption 2 holds and the exponentiated gradient algorithm is given as input the modified training data $\{(X_i, A_i, \mu(X_i))\}_{i=1}^{n}$. Under the same conditions as Theorem 1, the exponentiated gradient algorithm terminates in $O(n^{4\phi})$ iterations and returns $\hat{Q}_h$, which when viewed as a distribution over $\mathcal{F}$, satisfies with probability at least $1 - \delta$ either one of the following: 1) $\hat{Q}_h \neq \text{null}$, $\mathrm{loss}_\mu(\hat{Q}_h) \leq \epsilon + \tilde{O}(n^{-\phi})$ and $\mathrm{disp}(\hat{Q}_h) \leq \mathrm{disp}(\tilde{Q}) + \tilde{O}(n_0^{-\phi}) + \tilde{O}(n_1^{-\phi})$ for any $\tilde{Q}$ that is feasible in (4); or 2) $\hat{Q}_h = \text{null}$ and (4) is infeasible.

In practice, estimation error in $\hat{\mu}$ will affect the bounds in Theorem 2. The empirical analysis in § 7 finds that our method nonetheless performs well when using $\hat{\mu}$.

Algorithm 2: Interpolation and extrapolation (IE) method for the selective labels setting

Input: $\{(X_i, Y_i, D_i = 1, A_i)\}_{i=1}^{n^1}$, $\{(X_i, D_i = 0, A_i)\}_{i=1}^{n^0}$

Estimate $\hat{\mu}(x)$ by regressing $Y_i \sim X_i \mid D_i = 1$.

$\hat{Y}(X_i) \leftarrow \hat{\mu}(X_i)$

Output: $\{(X_i, \hat{Y}_i(X_i), D_i, A_i)\}_{i=1}^{n^1}$, $\{(X_i, \hat{Y}_i(X_i), D_i, A_i)\}_{i=1}^{n^0}$

Estimating predictive disparity measures on the full population requires a more general definition of predictive disparity than previously given in Eq. 1. Define the modified predictive disparity measure over threshold classifiers as

$$\mathrm{disp}(h_f) = \beta_0 \frac{\mathbb{E}[g(X_i, Y_i)\, h_f(X_i, Z_\alpha) \mid \mathcal{E}_{i,0}]}{\mathbb{E}[g(X_i, Y_i) \mid \mathcal{E}_{i,0}]} + \beta_1 \frac{\mathbb{E}[g(X_i, Y_i)\, h_f(X_i, Z_\alpha) \mid \mathcal{E}_{i,1}]}{\mathbb{E}[g(X_i, Y_i) \mid \mathcal{E}_{i,1}]}, \tag{6}$$

counterfactual outcome if decision $d$ were assigned, we define $Y_i^0 = 0$ and $Y_i^1 = Y_i^*$ (e.g., a rejected loan application cannot default). The observed outcome $Y_i$ then equals $Y_i^1 D_i$.

4 Note that we state this general form of $g$ to allow $g$ to use $Y_i$ for e.g. doubly-robust style estimates.

6. Application: Recidivism Risk Prediction

We use FaiRS to empirically characterize the range of disparities over the set of good models in a recidivism risk prediction task applied to ProPublica’s COMPAS data (Angwin

et al., 2016). Our goal is to illustrate (i) how FaiRS may be used to tractably characterize the range of predictive disparities over the set of good models; (ii) that the range of predictive disparities over the set of good models can be quite large empirically; and (iii) how an auditor may use the set of good models to assess whether the COMPAS risk assessment generates larger disparities than other competing good models. Such an analysis is a crucial step to assessing legal claims of disparate impact.

COMPAS is a proprietary risk assessment developed by Northpointe (now Equivant) using up to 137 features (Rudin et al., 2020). As this data is not publicly available, our audit makes use of ProPublica’s COMPAS dataset, which contains demographic information and prior criminal history for criminal defendants in Broward County, Florida. Lacking access to the data used to train COMPAS, our set of good models may not include COMPAS itself (Angwin et al., 2016). Nonetheless, prior work has shown that simple models using age and criminal history perform on par with COMPAS (Angelino et al., 2018). These features will therefore suffice to perform our audit. A notable limitation of the ProPublica COMPAS dataset is that it does not contain information for defendants who remained incarcerated. Lacking both features and outcomes for this group, we proceed without addressing this source of selection bias. We also make no distinction between criminal defendants who had varying lengths of incarceration before release, effectively assuming a null treatment effect of incarceration on recidivism. This assumption is based on findings that a counterfactual audit of COMPAS yields equivalent conclusions (Mishler, 2019).

We analyze the range of predictive disparities with respect to race for three common notions of fairness (Definitions 1-2) among logistic regression models on a quadratic polynomial of the defendant’s age and number of prior offenses whose training loss is near-comparable to COMPAS (loss tolerance $\epsilon$ = 1% of COMPAS training loss).5 We split the data 50%-50% into a train and test set. Table 1 summarizes the range of predictive disparities on the test set. The disparity minimizing and disparity maximizing models over the set of good models achieve a test loss that is comparable to COMPAS (see § D.1 of the Supplement).

5 We use a quadratic form following the analysis in Rudin et al. (2020).

Table 1. COMPAS fails an audit of the “business necessity” defense for disparate impact by race. The set of good models (performing within 1% of COMPAS’s training loss) includes models that achieve significantly lower disparities than COMPAS. The first panel (SP) displays the disparity in average predictions for black versus white defendants (Def. 1). The second panel (BFPC) analyzes the disparity in average predictions for black versus white defendants in the positive class, and the third panel (BFNC) examines the disparity in average predictions for black versus white defendants in the negative class (Def. 2). Standard errors are reported in parentheses. See § 6 for details.

          MIN. DISP.        MAX. DISP.        COMPAS
SP        −0.060 (0.004)    0.120 (0.007)     0.194 (0.013)
BFPC       0.049 (0.005)    0.125 (0.012)     0.156 (0.016)
BFNC       0.044 (0.005)    0.117 (0.009)     0.174 (0.016)

For each predictive disparity measure, the set of good models includes models that achieve significantly lower disparities than COMPAS. In this sense, COMPAS generates “unjustified” disparate impact, as there exist competing models that would reduce disparities without compromising performance. Notably, COMPAS’ disparities are also larger than the maximum disparity over the set of good models. For example, the difference in COMPAS’ average predictions for black relative to white defendants is strictly larger than that of any model in the set of good models (Table 1, SP). Interestingly, the minimal balance for the positive class and balance for the negative class disparities between black and white defendants over the set of good models are strictly positive (Table 1, BFPC and BFNC). For example, any model whose performance lies in a neighborhood of COMPAS’ loss has a higher false positive rate for black defendants than white defendants. This suggests that while we can reduce predictive disparities between black and white defendants relative to COMPAS on all measures, we may be unable to eliminate balance for the positive class and balance for the negative class disparities without harming predictive performance.

In addition to the retrospective auditing considered in this section, characterizing the range of predictive disparities over the set of good models is also important for model development and selection. The next section shows how to construct a more equitable model that performs comparably to a benchmark.

7. Application: Consumer Lending

Suppose a financial institution wishes to replace an existing credit scoring model with one that has better fairness properties and comparable performance, if such a model exists. We show how to accomplish this task by using FaiRS to find the absolute predictive disparity-minimizing model over the set of good models. On a real world consumer lending dataset with selectively labeled outcomes, we find that this approach yields a model that reduces predictive disparities relative to the benchmark without compromising overall performance.
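To make the selection task concrete, the following sketch picks the absolute disparity-minimizing model from a small, explicitly enumerated candidate list, keeping only candidates whose loss is within a tolerance of the benchmark loss. This is an illustration under stated assumptions, not the FaiRS algorithm itself, which searches a model class via the exponentiated gradient reduction rather than by enumeration. The helper names, the squared loss, the toy data, and the reading of the tolerance as a 1% relative budget are all assumptions made for the example.

```python
def mean(values):
    values = list(values)
    return sum(values) / len(values)


def squared_loss(model, data):
    """Average squared error of model over rows (x, a, y)."""
    return mean((model(x) - y) ** 2 for x, _a, y in data)


def disparity(model, data):
    """Statistical-parity style disparity: E[f(X) | A = 1] - E[f(X) | A = 0]."""
    group1 = mean(model(x) for x, a, _y in data if a == 1)
    group0 = mean(model(x) for x, a, _y in data if a == 0)
    return group1 - group0


def good_models(candidates, benchmark, data, tolerance=0.01):
    """Candidates whose loss is at most (1 + tolerance) times the benchmark loss."""
    budget = (1 + tolerance) * squared_loss(benchmark, data)
    return [f for f in candidates if squared_loss(f, data) <= budget]


def min_abs_disparity_model(candidates, benchmark, data, tolerance=0.01):
    """Model in the good set with the smallest absolute disparity, or None."""
    good = good_models(candidates, benchmark, data, tolerance)
    if not good:
        return None
    return min(good, key=lambda f: abs(disparity(f, data)))


def linear(w0, w1):
    """Linear score on a two-dimensional feature x = (signal, group proxy)."""
    return lambda x: w0 * x[0] + w1 * x[1]


# Toy data: rows are (x, a, y); the second feature is a proxy for group membership.
data = [
    ((0.2, 0.0), 0, 0),
    ((0.8, 0.0), 0, 1),
    ((0.2, 1.0), 1, 0),
    ((0.8, 1.0), 1, 1),
]
benchmark = linear(1.0, 0.1)
candidates = [linear(1.0, w1) for w1 in (-0.2, -0.1, 0.0, 0.1, 0.2)]

good = good_models(candidates, benchmark, data)
best = min_abs_disparity_model(candidates, benchmark, data)
disparity_range = (min(disparity(f, data) for f in good),
                   max(disparity(f, data) for f in good))
```

On this toy data the benchmark loads on the group proxy, while the selected model ignores it and stays within the loss budget. The pair `disparity_range` mirrors the minimum and maximum disparity columns an audit would report over the set of good models.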

We use data from Commonwealth Bank of Australia, a large outcomes Yei∗ according to Yei∗ | Xi ∼ Bernoulli(µ(Xi )).

financial institution in Australia (henceforth, ”CommBank”), We train all models as if we only knew the synthetic out-

on a sample of 7,414 personal loan applications submitted come for the synthetically funded applications. We estimate

from July 2017 to July 2019 by customers that did not µ̂(x) := P̂ (Ỹi = 1|Xi = x, D̃i = 1) using random forests

have a prior financial relationship with CommBank. A and use µ̂(Xi ) to generate the pseudo-outcomes Ŷ (Xi ) for

personal loan is a credit product that is paid back with RIE and IE as described in Algorithms 1 and 2. As bench-

monthly installments and used for a variety of purposes such mark models, we use the loss-minimizing linear models

as purchasing a used car or refinancing existing debt. In our learned using KGB, RIE, and IE approaches, whose respec-

sample, the median personal loan size is AU$10,000 and tive training losses are used to select the corresponding loss

the median interest rate is 13.9% per annum. For each loan tolerances . We use the class of linear models for the FaiRS

application, we observe application-level information such algorithm for KGB, RIE, and IE approaches.

as the applicant’s credit score and reported income, whether

We compare against the fair reductions approach to classifi-

the application was approved by CommBank, the offered

cation (fairlearn) and the Target-Fair Covariate Shift (TFCS)

terms of the loan, and whether the applicant defaulted on

method. TFCS iteratively reweighs the training data via

the loan. There is a selective labels problem as we only

gradient descent on an objective function comprised of the

observe whether an applicant defaulted on the loan within 5

covariate shift-reweighed classification loss and a fairness

months (Yi ) if the application was funded, where “funded”

loss (Coston et al., 2019). Fairlearn searches for the loss-

denotes that the application is both approved by CommBank

minimizing model subject to a fairness parity constraint

and the offered terms were accepted by the applicant. In

(Agarwal et al., 2018). The fairlearn model is effectively a

our sample, 44.9% of applications were funded and 2.0% of

KGB model since the fairlearn package does not offer mod-

funded loans defaulted within 5 months.

ifications for selective labels.7 We use logistic regression

Motivated by a decision maker that wishes to reduce credit as the base model for both fairlearn and TFCS. Results are

access disparities across geographic regions, we focus on reported on all applicants in a held out test set, and perfor-

the task of predicting the likelihood of default Yi∗ = 1 based mance metrics are constructed with respect to the synthetic

on information in the loan application Xi while limiting pre- outcome Ỹi∗ .

dictive disparities across SA4 geographic regions within

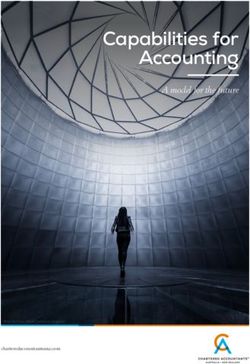

Figure 1 shows the AUC (y-axis) against disparity (x-axis)

Australia. SA4 regions are statistical geographic areas de-

for the KGB, RIE, IE benchmarks and their FaiRS variants

fined by the Australian Bureau of Statistics (ABS) and are

as well as the TFCS models and fairlearn models. Colors

analogous to counties in the United States. An SA4 region is

denote the adjustment strategy for selective labels, and the

classified as socioeconomically disadvantaged (Ai = 1) if it

shape specifies the optimization method. The first row eval-

falls in the top quartile of SA4 regions based on the ABS’ In-

uates the models on all applicants in the test set (i.e., the

dex of Relative Socioeconomic Disadvantage (IRSD), which

target population). On the target population, FaiRS with

is an index that aggregates census data related to socioeco-

reject extrapolation (RIE and IE) reduces disparities while

nomic disadvantage.6 Applicants from disadvantaged SA4

achieving performance comparable to the benchmarks and

regions are under-represented among funded applications,

to the reweighing approach (TFCS). It also achieves lower

comprising 21.7% of all loan applications, but only 19.7%

disparities than TFCS, likely because TFCS optimizes a

of all funded loan applications.

non-convex objective function and may therefore converge

Our experiment investigates the performance of FaiRS under to a local minimum. Reject extrapolation achieves better

our two proposed extrapolation-based solutions to selective AUC than all KGB models, and only one KGB model (fair-

labels, RIE and IE (See Algorithms 1-2), as well as the learn) achieves a lower disparity. The second row evalu-

Known-Good Bad (KGB) approach that uses only the se- ates the models on only the funded applicants. Evaluation

lectively labelled population. Because we do not observe on the funded cases underestimates disparities across the

default outcomes for all applications, we conduct a semi- methods and overestimates AUC for the TFCS and KGB

synthetic simulation experiment by generating synthetic models. This underscores the importance of accounting for

funding decisions and default outcomes. On a 20% sam- the selective labels problem in both model construction and

ple of applicants, we learn π(x) := P̂ (Di = 1|Xi = x) evaluation.

and µ(x) := P̂ (Yi = 1|Xi = x, Di = 1) using random

FaiRS is also applicable in the regression setting. On the

forests. We generate synthetic funding decisions D

e i accord-

7

ing to Di | Xi ∼ Bernoulli(π(Xi )) and synthetic default

e To accommodate reject inference, a method must support

real-valued outcomes. The fairlearn package does not, but the

6

Complete details on the IRSD may be found in Australian Bu- related fair regressions method does (Agarwal et al., 2019). This

reau of Statistics (2016) and additional details on the definition of is sufficient for statistical parity (Def. 1), but other parities such

socioeconomic disadvantage are given in § D.2 of the Supplement. as BFPC and BFNC (Def. 2) require further modifications as

discussed in § 5Characterizing Fairness Over the Set of Good Models Under Selective Labels

Communities & Crime dataset, FaiRS improves on statistical parity without compromising performance relative to a benchmark loss-minimizing least squares regression model (see Supplement § D.5).

[Figure 1: two panels, “All applicants” (top) and “Funded applicants” (bottom). y-axis: AUC. x-axis: Disparity, E[f(X)|A = 1] − E[f(X)|A = 0]. Legend, adjustment strategy: Known Good-Bad (KGB), Reject extrapolation (RIE), Inter & extrapolation (IE), Reweighed. Legend, optimization method: benchmark, Fairlearn, TFCS, FaiRS (ours).]

Figure 1. Area under the ROC curve (AUC) with respect to the synthetic outcome against disparity in the average risk prediction for the disadvantaged ($A_i = 1$) vs advantaged ($A_i = 0$) groups. FaiRS reduces disparities for the RIE and IE approaches while maintaining AUCs comparable to the benchmark models (first row). Evaluation on only funded applicants (second row) overestimates the performance of TFCS and KGB models and underestimates disparities for all models. Error bars show the 95% confidence intervals. See § 7 for details.

8. Conclusion

We develop a framework, Fairness in the Rashomon Set (FaiRS), to characterize the range of predictive disparities and find the absolute disparity minimizing model over the set of good models. FaiRS is suitable for a variety of applications including settings with selectively labelled outcomes where the selection decision and outcome are unconfounded given the observed features. The method is generic, applying to both a large class of prediction functions and a large class of predictive disparities.

In many settings, the set of good models is a rich class, in which models differ substantially in terms of their fairness properties. Exploring the range of predictive fairness properties over the set of good models opens new perspectives on how we learn, select, and evaluate machine learning models. A model designer may use FaiRS to select the model with the best fairness properties among the set of good models. FaiRS can be used during evaluation to compare the predictive disparities of a benchmark model against other models in the set of good models. When this evaluation illuminates unjustified disparities in the benchmark model, FaiRS can be used to find a more equitable model with performance comparable to the benchmark. Characterizing the properties of the set of good models is a relevant enterprise for both model designers and auditors alike. This exercise opens new perspectives on algorithmic fairness that may provide exciting opportunities for future research.

Acknowledgements

We are grateful to our data partners at Commonwealth Bank of Australia, and in particular to Nathan Damaj, Bojana Manojlovic and Nikhil Ravichandar for their generous support and assistance throughout this research. We also thank Riccardo Fogliato, Sendhil Mullainathan, Cynthia Rudin and anonymous reviewers for valuable comments and feedback. Rambachan gratefully acknowledges financial support from the NSF Graduate Research Fellowship under Grant No. DGE1745303. Coston gratefully acknowledges financial support from the K&L Gates Presidential Fellowship and the National Science Foundation Graduate Research Fellowship Program under Grant No. DGE1745016. Any opinions, findings, and conclusions or recommendations expressed in this material are solely those of the authors.

References

Civil Rights Act, 1964. 42 U.S.C. § 2000e.

Equal Credit Opportunity Act, 1974. 15 U.S.C. § 1691.

Agarwal, A., Beygelzimer, A., Dudík, M., Langford, J., and Wallach, H. A reductions approach to fair classification. In Proceedings of the 35th International Conference on Machine Learning, pp. 60–69, 2018.

Agarwal, A., Dudík, M., and Wu, Z. S. Fair regression: Quantitative definitions and reduction-based algorithms. In Proceedings of the 36th International Conference on Machine Learning, ICML, 2019.

Angelino, E., Larus-Stone, N., Alabi, D., Seltzer, M., and Rudin, C. Learning certifiably optimal rule lists for categorical data. Journal of Machine Learning Research, 18:1–78, 2018.

Angwin, J., Larson, J., Mattu, S., and Kirchner, L. Machine bias. There’s software used across the country to predict future criminals. And it’s biased against blacks. ProPublica, 2016.

Australian Bureau of Statistics. Socio-economic indexes for areas (SEIFA) technical paper. Technical report, 2016.

Barocas, S. and Selbst, A. Big data’s disparate impact. California Law Review, 104:671–732, 2016.

Barocas, S., Hardt, M., and Narayanan, A. Fairness and Machine Learning. fairmlbook.org, 2019. http://www.fairmlbook.org.

Breiman, L. Statistical modeling: The two cultures. Statistical Science, 16(3):199–215, 2001.

Caruana, R., Lou, Y., Gehrke, J., Koch, P., Sturm, M., and Elhadad, N. Intelligible models for healthcare: Predicting pneumonia risk and hospital 30-day readmission. In Proceedings of the 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 1721–1730, 2015.

Chouldechova, A., Benavides-Prado, D., Fialko, O., and Vaithianathan, R. A case study of algorithm-assisted decision making in child maltreatment hotline screening decisions. Volume 81 of Proceedings of Machine Learning Research, pp. 134–148, 2018.

Corbett-Davies, S., Pierson, E., Feller, A., Goel, S., and Huq, A. Algorithmic decision making and the cost of fairness. In KDD ’17: Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 797–806, 2017.

Coston, A., Ramamurthy, K. N., Wei, D., Varshney, K. R., Speakman, S., Mustahsan, Z., and Chakraborty, S. Fair transfer learning with missing protected attributes. In Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society, pp. 91–98, 2019.

Coston, A., Mishler, A., Kennedy, E. H., and Chouldechova, A. Counterfactual risk assessments, evaluation and fairness. In FAT* ’20: Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, pp. 582–593, 2020.

Cotter, A., Jiang, H., and Sridharan, K. Two-player games for efficient non-convex constrained optimization. In Proceedings of the 30th International Conference on Algorithmic Learning Theory, pp. 300–332, 2019.

Crook, J. and Banasik, J. Does reject inference really improve the performance of application scoring models? Journal of Banking & Finance, 28(4):857–874, 2004.

Dastin, J. Amazon scraps secret AI recruiting tool that showed bias against women, Oct 2018. URL https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G.

Dong, J. and Rudin, C. Exploring the cloud of variable importance for the set of all good models. Nature Machine Intelligence, 2(12):810–824, 2020.

Donini, M., Oneto, L., Ben-David, S., Shawe-Taylor, J. R., and Pontil, M. A. Empirical risk minimization under fairness constraints. In NIPS’18: Proceedings of the 32nd International Conference on Neural Information Processing Systems, pp. 2796–2806, 2018.

Dua, D. and Graff, C. UCI machine learning repository, 2017. URL http://archive.ics.uci.edu/ml.

Dwork, C., Hardt, M., Pitassi, T., Reingold, O., and Zemel, R. Fairness through awareness. In ITCS ’12: Proceedings of the 3rd Innovations in Theoretical Computer Science Conference, pp. 214–226, 2012.

Feldman, M., Friedler, S. A., Moeller, J., Scheidegger, C., and Venkatasubramanian, S. Certifying and removing disparate impact. In KDD ’15: Proceedings of the 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 259–268, 2015.

Fisher, A., Rudin, C., and Dominici, F. All models are wrong, but many are useful: Learning a variable’s importance by studying an entire class of prediction models simultaneously. Journal of Machine Learning Research, 20(177):1–81, 2019. URL http://jmlr.org/papers/v20/18-760.html.

Fuster, A., Goldsmith-Pinkham, P., Ramadorai, T., and Walther, A. Predictably unequal? The effects of machine learning on credit markets. Technical report, 2020.

Gillis, T. False dreams of algorithmic fairness: The case of credit pricing. 2019.

Hardt, M., Price, E., and Srebro, N. Equality of opportunity in supervised learning. In NIPS’16: Proceedings of the 30th International Conference on Neural Information Processing Systems, pp. 3323–3331, 2016.

Heckman, J. J. Varieties of selection bias. The American Economic Review, 80(2):313–318, 1990.

Holstein, K., Wortman Vaughan, J., Daumé, H., Dudík, M., and Wallach, H. Improving fairness in machine learning systems: What do industry practitioners need? In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, CHI ’19, pp. 1–16, 2019.

Imbens, G. W. and Rubin, D. B. Causal Inference for Statistics, Social and Biomedical Sciences: An Introduction. Cambridge University Press, Cambridge, United Kingdom, 2015.

Kallus, N. and Zhou, A. Residual unfairness in fair machine learning from prejudiced data. In International Conference on Machine Learning, pp. 2439–2448, 2018.

Kim, M. P., Ghorbani, A., and Zou, J. Multiaccuracy: Black-box post-processing for fairness in classification. In Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society, pp. 247–254, 2019.

Kleinberg, J., Lakkaraju, H., Leskovec, J., Ludwig, J., and Mullainathan, S. Human decisions and machine predictions. The Quarterly Journal of Economics, 133(1):237–293, 2018.

Lakkaraju, H., Kleinberg, J., Leskovec, J., Ludwig, J., and Mullainathan, S. The selective labels problem: Evaluating algorithmic predictions in the presence of unobservables. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 275–284, 2017.

Li, Z., Hu, X., Li, K., Zhou, F., and Shen, F. Inferring the outcomes of rejected loans: An application of semisupervised clustering. Journal of the Royal Statistical Society: Series A, 183(2):631–654, 2020.

Little, R. J. and Rubin, D. B. Statistical analysis with missing data, volume 793. John Wiley & Sons, 2019.

Mancisidor, R. A., Kampffmeyer, M., Aas, K., and Jenssen, R. Deep generative models for reject inference in credit scoring. Knowledge-Based Systems, pp. 105758, 2020.

Marx, C., Calmon, F., and Ustun, B. Predictive multiplicity in classification. In Proceedings of the 37th International Conference on Machine Learning, pp. 6765–6774, 2020.

Menon, A. K. and Williamson, R. C. The cost of fairness in binary classification. In Proceedings of the 1st Conference on Fairness, Accountability and Transparency, pp. 107–118, 2018.

Mishler, A. Modeling risk and achieving algorithmic fairness using potential outcomes. In Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society, pp. 555–556, 2019.

Mitchell, S., Potash, E., Barocas, S., D’Amour, A., and Lum, K. Prediction-based decisions and fairness: A catalogue of choices, assumptions, and definitions. Technical report, arXiv Working Paper, arXiv:1811.07867, 2019.

Moreno-Torres, J. G., Raeder, T., Alaiz-Rodríguez, R., Chawla, N. V., and Herrera, F. A unifying view on dataset shift in classification. Pattern Recognition, 45(1):521–530, 2012.

Nguyen, H.-T. et al. Reject inference in application scorecards: evidence from France. Technical report, University of Paris Nanterre, EconomiX, 2016.

Pleiss, G., Raghavan, M., Wu, F., Kleinberg, J., and Weinberger, K. Q. On fairness and calibration. In Advances in Neural Information Processing Systems 30 (NIPS 2017), pp. 5680–5689, 2017.

Raghavan, M., Barocas, S., Kleinberg, J., and Levy, K. Mitigating bias in algorithmic hiring: Evaluating claims and practices. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, pp. 469–481, 2020.

Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature Machine Intelligence, 1:206–215, 2019.

Rudin, C., Wang, C., and Coker, B. The age of secrecy and unfairness in recidivism prediction. Harvard Data Science Review, 2(1), 2020.

Selbst, A. D., Boyd, D., Friedler, S. A., Venkatasubramanian, S., and Vertesi, J. Fairness and abstraction in sociotechnical systems. In Proceedings of the Conference on Fairness, Accountability, and Transparency, FAT* ’19, pp. 59–68, 2019.

Semenova, L., Rudin, C., and Parr, R. A study in Rashomon curves and volumes: A new perspective on generalization and model simplicity in machine learning. Technical report, arXiv preprint arXiv:1908.01755, 2020.

Singh, H., Singh, R., Mhasawade, V., and Chunara, R. Fairness violations and mitigation under covariate shift. In FAccT’21: Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 2021.

Vigdor, N. Apple card investigated after gender discrimination complaints, Nov 2019. URL https://www.nytimes.com/2019/11/10/business/Apple-credit-card-investigation.html.

Zafar, M. B., Valera, I., Gomez-Rodriguez, M., and Gummadi, K. P. Fairness constraints: A flexible approach for fair classification. Journal of Machine Learning Research, 20(75):1–42, 2019.

Zemel, R., Wu, Y., Swersky, K., Pitassi, T., and Dwork, C. Learning fair representations. In Proceedings of the 30th International Conference on Machine Learning, pp. 325–333, 2013.

Zeng, G. and Zhao, Q. A rule of thumb for reject inference in credit scoring. Math. Finance Lett., 2014:Article–ID, 2014.
