Image Classifiers Leak Sensitive Attributes About Their Classes

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Image Classifiers Leak Sensitive Attributes About Their Classes

Lukas Struppek* 1 , Dominik Hintersdorf1 , Felix Friedrich1 , Manuel Brack1 ,

Patrick Schramowski1,3,4,5 , and Kristian Kersting1,2,3,4

1

Department of Computer Science, Technical University of Darmstadt, Germany

2

Centre for Cognitive Science, Technical University of Darmstadt

3

arXiv:2303.09289v1 [cs.LG] 16 Mar 2023

German Research Center for Artificial Intelligence (DFKI)

4

Hessian Center for AI (hessian.AI), Germany

5

LAION

Abstract the same time to not reveal information about their appearance

to third parties. However, this assumption does not necessar-

Neural network-based image classifiers are powerful tools ily hold true, and malicious parties could extract sensitive fea-

for computer vision tasks, but they inadvertently reveal sensi- tures about users by simply interacting with the model in a

tive attribute information about their classes, raising concerns black-box fashion.

about their privacy. To investigate this privacy leakage, we

We investigate the privacy leakage of image classifiers and

introduce the first Class Attribute Inference Attack (C AIA),

demonstrate that models indeed leak sensitive class informa-

which leverages recent advances in text-to-image synthesis to

tion even without any specific information about the classes,

infer sensitive attributes of individual classes in a black-box

training samples, or attribute distributions available. We fo-

setting, while remaining competitive with related white-box

cus on face recognition models and show that these models

attacks. Our extensive experiments in the face recognition do-

reveal sensitive details within their outputs, such as gender,

main show that C AIA can accurately infer undisclosed sensi-

hair color, and racial appearance, for the different identities

tive attributes, such as an individual’s hair color, gender, and

they have been trained to recognize.

racial appearance, which are not part of the training labels.

Interestingly, we demonstrate that adversarial robust models Within our analysis, we introduce a Class Attribute

are even more vulnerable to such privacy leakage than stan- Inference Attack (C AIA), which enables an adversary to in-

dard models, indicating that a trade-off between robustness fer sensitive attributes of a class with high accuracy. Phrased

and privacy exists. differently, C AIA allows creating a profile of the individual

classes by interacting with the trained classifier through ex-

traction of class attributes that have not been explicitly part

1 Introduction of the training objective. We utilize recent advances in text-

to-image synthesis to craft images that only differ in one at-

tribute by editing real images with textual guidance. We then

Classifying images with neural networks is widely adopted exploit that image classifiers, as we show, assign higher log-

in various domains [6, 16, 2, 1]. Face recognition systems, its to inputs that share the sensitive attribute with the training

for example, take facial images as input and attempt to predict samples of a class, which allows us to infer class informa-

the depicted person’s identity. In the pursuit of enhancing a tion by only observing the input-output relation of the model.

model’s predictive performance, privacy concerns of the ac- Compared to related inference attacks [8, 7, 47, 38], C AIA is

quired knowledge are often disregarded and moved into the model-agnostic and requires only black-box access and basic

background. However, correctly assessing and mitigating the domain knowledge. Once the attack images are crafted, at-

risk of compromising private information is crucial in privacy- tribute inference requires only a single model forward pass of

sensitive domains, as neglect can lead to the disclosure of the generated samples.

private information [37, 38, 14]. For example, smart home

In our extensive evaluation, we demonstrate the success of

devices contain face recognition models for access control.

C AIA and show that robust models trained with adversarial

Users expect these models to recognize them reliably, but at

training [10, 28] are even more susceptible to these attacks.

* Contact: lukas.struppek@cs.tu-darmstadt.de They leak more information about their individual classes thannon-robust models, even if their prediction accuracy is sub- imputation in settings with limited data and skewed distribu-

stantially lower. This indicates a trade-off for model design- tions.

ers: making a model robust to adversarial examples comes at Model Inversion Attacks. All presented AIAs are limited

the expense of higher privacy leakage. to tabular data and are not applicable to image classification

Disclaimer: This paper investigates the extraction of sensi- since the variation of single image attributes, e.g., changing

tive identity information, including gender and racial appear- the hair color in a facial image, is not trivially possible. More-

ance of people. The groups used (Asian, Black, Indian, White) over, the AIA setting itself is not transferable to the vision

are in line with current research and follow the taxonomy of domain, since it is unclear how an adversary can have access

Karkkainen and Joo [21], which itself is adopted from the to incomplete images with only one attribute missing. How-

US Census Bureau [3]. Importantly, we emphasize that this ever, model inversion attacks (MIAs) fill this gap for image

work’s goal is to investigate the leakage of sensitive attributes classification. We note that the notion of MIAs is not consis-

in image classifiers. We do not intend to discriminate against tent in the literature, and the term is sometimes also used for

identity groups or cultures in any way. AIAs. Generally, given a classification model, an adversary

attempts to create synthetic input samples that either recon-

2 Background and Related Work struct samples from the model’s training data [7, 47, 4, 19]

or craft synthetic samples that reflect the characteristics of a

Attribute Inference Attacks. In recent years, various specific class [42, 38]. While most MIAs require access to

types of inference attacks have been proposed. Those include samples from the target training distribution to train a custom

membership inference attacks [37, 45, 5, 13, 14] and property generative adversarial network (GAN), Struppek et al. [38] re-

inference attacks [9, 33, 48, 43]. Most related to our work are cently proposed Plug & Play (PPA) MIAs, which make the

attribute inference attacks (AIAs) [8], which aim to infer sen- attacks agnostic to the target model, increase their flexibil-

sitive attribute values of an incomplete data record in the con- ity, and enhance their inference speed by utilizing pre-trained

text of classification and regression models. More specifically, GANs. We will use PPA as a baseline for comparing with our

the adversary has access to a target model Mtarget , which has C AIA.

been trained on a dataset Strain , sampled from the distribution Novelty of C AIA. In contrast to previous work on AIAs,

D. Each training sample is a triplet (xs , xn , y) and consists of we move the scope of inference attacks from the sample level

some sensitive attributes xs and non-sensitive attributes xn to- to a class level in the vision domain, with the goal of infer-

gether with a ground-truth label y. The adversary has access ring sensitive information about the distinct classes learned by

to a set of candidates (xn , y) ⊆ Strain with the sensitive at- a model. To achieve this, we use the latest advancements in

tribute values missing. AIAs try to infer the sensitive values text-to-image synthesis to manipulate single attributes of input

xs by exploiting Mtarget and its learned information about images, resulting in consistent images that differ only in the

distribution D. targeted attribute. Our approach is more efficient than MIAs

Fredrikson et al. [8, 7] proposed maximum-a-posterior as it requires only black-box access to the target model and no

AIAs that, assuming all attributes are independent, predict extensive knowledge of the training data distribution. Further-

the sensitive attribute value that minimizes the adversary’s ex- more, the inference step is done in seconds, since C AIA does

pected misclassification rate. Yeom et al. [45] extended the not require any further sample optimization after constructing

approach and combined attribute inference with membership the initial attack dataset. This makes C AIA more flexible and

inference attacks. Mehnaz et al. [29] introduced an attack target-independent than previous AIAs and MIAs. Through-

based on the softmax scores of the target model, assuming out this work, we focus on the privacy-sensitive domain of

that the model’s prediction is more likely to be correct and face recognition systems. The goal is to infer sensitive infor-

confident if the input sample contains the true sensitive at- mation about identities, e.g., their gender or racial appearance,

tribute value. Common AIAs make strong assumptions re- without any specific information about the individual identity

garding the adversary’s knowledge that is generally hard to or underlying training set distributions available.

gain under realistic assumptions, e.g., the adversary knows Adversarial Robust Training. Besides privacy attacks,

the marginal prior of sample attributes [8, 7, 45] or the target neural networks are known to be susceptible to adversarial ex-

model’s confusion matrix on its training data [7, 29]. Jayara- amples [40, 10]. Formally, adversarial examples are crafted

man and Evans [18] questioned previous black-box AIAs and by adding an optimized perturbation δ with kδk ≤ to a

empirically showed that those attacks could not reveal more model input x to maximize the model’s loss L(M(x + δ), y)

private information than a comparable adversary without ac- for the true label y. One of the most reliable and commonly

cess to the target model. They conclude that black-box AIAs used defenses is adversarial training [10, 28], which updates a

perform similarly well as data imputation techniques to fill model’s weights θ on adversarial examples. During each train-

the missing attribute values. To improve AIAs over data im- ing step, a sample-wise worst-case perturbation is computed

putation, they proposed a white-box attack that outperforms in an -environment around the clean samples to maximize the

2Black Blond Brown Gray

Attribute Attribute

Variations Filter

{ “a photo of a person,

” }

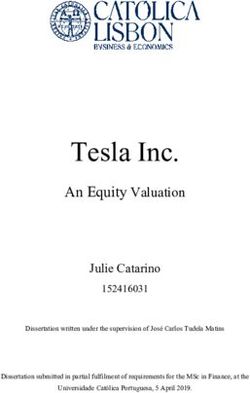

Figure 1: Overview of our attack dataset crafting process for the sensitive attribute hair color, which has four possible values. Real

images are used to generate image variations by modifying characteristics associated with the sensitive attribute. The resulting

candidate images are then filtered to ensure that each sample accurately reflects the intended attribute values. The final output of

this process is the set of attack samples.

model’s confusion. These perturbations are then added to the Adversary’s Capabilities. The adversary has only black-

clean samples to train the model and make it robust against box access to the target model, i.e., the adversary can query

such manipulations. Formally, this comes down to a min-max the target model and observe its output logits. Furthermore,

optimization: the adversary knows the domain of the target model’s training

data, e.g., facial images, but not the exact training data distri-

X

min max L(M(x + δ), y) . (1) bution. For the sensitive attributes, the adversary defines an

θ kδk≤ individual set of possible values to infer. We emphasize that

(x,y)∈Strain

C AIA is model- and task-agnostic and does not require white-

Since the inner maximization problem cannot be solved box access to the target model. Information about the prior

numerically in tractable time, local search algorithms are ap- distribution of the sensitive attributes is neither available nor

plied to craft adversarial examples, e.g., FGSM [10, 44] or required.

PGD [28]. By training on adversarial examples, the model

becomes more robust against adversarial perturbations. In our

3.1 Crafting Attack Samples

experiments, we also investigate the influence of model ro-

bustness on its privacy leakage.

While it is easy for tabular data to vary the values of at-

tributes in an inference sample, this is a non-trivial task on im-

3 Class Attributes Inference Attacks ages since attributes are encoded in pixels, and multiple pixels

define not only a single attribute but a set of possibly entan-

We now introduce the novel Class Attribute Inference gled attributes. For example, human faces contain information

Attack (C AIA), which consists of two stages. First, the ad- such as age and skin color, among many more. Furthermore,

versary generates a set of attack samples by creating different changing the value for any of these attributes requires seman-

versions of images through a generative approach that alters tically consistent changes in multiple pixels.

the sensitive attribute values. In the second stage, these attack To enable meaningful image manipulations, we utilize re-

samples are used to infer the sensitive attribute values for the cent advances in text-to-image synthesis. Systems like Stable

identities in a face recognition model. Before delving into the Diffusion [35] are able to generate high-quality images fol-

details, we outline our general threat model. lowing a user-provided text description p. Mokady et al. [30]

Adversary’s Goal. Let M : X → R|Y | denote the trained recently proposed Null-text Inversion to encode real images

target image classifier, which takes input images x ∈ X and into the domain of diffusion models and enable text-based

computes prediction scores for each class label y ∈ Y . The editing while keeping the overall image composition and con-

model’s training data Strain consisted of labeled data samples tent fixed. In combination with Prompt-to-Prompt [12], it al-

(x, y) ∼ D. The underlying attack assumption is that sam- lows a user to instruct image edits xedit = E(x, p) on real

ples of a certain class share a constant sensitive attribute value images x conditioned on a description p. We use Null-text In-

z ∈ Z, which is not part of the class label but is implicitly en- version to generate variations of existing images by changing

coded in the image features. For example, a face recognition only the sensitive attribute values, while aiming to leave other

model is trained to predict the identity y of each facial image image aspects unchanged.

x. A sensitive attribute z in this context might be the gender Fig. 1 illustrates the crafting process for the attack sam-

appearance or hair color of a specific identity. The attack goal ples. Formally, the adversary has access to a data distri-

is to infer the value of this sensitive attribute for each individ- bution D̂ from which to sample images x. Note that the

ual class. attack does not require the attack distribution D̂ to be the

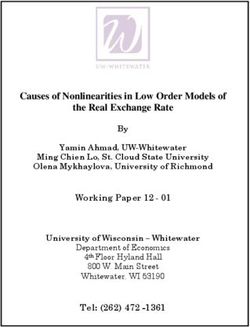

3Output Logits Relative Attribute

Target Model Black Blond Brown Gray Advantage Prediction

1.07 0.95 1.24 1.01 0.0 “Identity 0 has

-0.11 -0.20 -0.05 -0.35 0.0

0.17 Brown hair”

...

...

...

...

0.33 0.27 0.13 0.22 0.0

Figure 2: Overview of the class attribute inference step. Each image tuple from the attack set is fed sequentially into the target

model to compute the logits for the target class. The relative advantage is then computed by subtracting the second highest

logit value from the maximum value, and this difference is added to a running sum for each sensitive attribute value. The final

prediction for the sensitive attribute is the value with the highest relative advantage computed across all attack samples.

same as the training data distribution D but only that both ample, changing a person’s hair color to gray might also influ-

data distributions are from the same domain, e.g., facial im- ence the depicted age. To mitigate such influences, we predict

ages. As we will show in our experimental evaluation, even the sensitive attribute with a variety of different attack samples

if the style, size, and quality of images between both distri- to, in turn, reduce the influence of these confounding factors

butions vary significantly, the attack is still highly success- over a larger number of samples.

ful. The adversary defines the target attribute with a set of k Be M(x)y : X → R the pre-softmax logits computed by

possible attribute values Z = {z1 , . . . , zk } and correspond- the target model M on image x for class y. To infer the sen-

ing edit prompts pz that describe the general domain and ex- sitive attribute value z of class y, we query the target model

plicitly state an attribute value z ∈ Z. For example, pz = consecutively with multiple sample tuples, x ∈ Sattack . For

”A photo of a person, hgenderi”, where hgenderi is replaced each xz ∈ x, we compute its relative advantage A(x)z by

by z ∈ {female appearance, male appearance}. The candi-

date dataset Scandidate is then constructed as Scandidate = A(x)z = max 0, M(xz )y − max M(x̃)y . (2)

x̃∈x,x̃6=xz

{E(x, pz )|z ∈ Z, x ∼ D̂}, consisting of image tuples x =

(x1 , . . . , xk ), each containing k images with different sensi- The relative advantage computes the difference between

tive attribute values. the highest and the second-highest logit values and assigns

However, the attribute manipulation might not always suc- this difference to the attribute sample xz ∈ x with the high-

ceed in changing an image attribute to the desired value. This est logit. For all other samples x ∈ x, the relative ad-

can be due to interfering concepts already present in the image vantage is set to zero. Fig. 2 illustrates the relative advan-

that are strongly entangled with the target attribute. For exam- tage computation for a single x. The final attribute predic-

ple, changing the hair color of a person with a dark skin tone tion ẑ is then done by taking the attribute with the high-

to blonde often fails because such attribute combinations are est relative advantage

P summed up over all attack samples:

rather rare in the actual training data, which is also reflected in ẑ = argmaxz∈Z x∈Sattack A(x)z . We emphasize that only

the underlying diffusion model. We, therefore, employ a fil- a single forward pass for all attack samples is sufficient to

tering approach, i.e., filtering out all sample tuples x that are compute the relative advantage for all classes, which makes

not correctly depicting the various attribute values. For this, the inferring step computationally very cheap, e.g., the gen-

we use a trained attribute classifier Fτ : X → Z to create a der inference for a ResNet-101 model and 500 identities took

subset Sattack = {x ∈ Scandidate |Fτ (xz ) = z, ∀z ∈ Z} of roughly 2 seconds in our experiments.

sample tuples x = (x1 , . . . , xk ). We also add a threshold τ

on the softmax scores of the attribute classifier and classify 4 Experimental Evaluation

only predictions with a softmax score ≥ τ as correct. This

removes images for which the attribute classifier has only low Next, we experimentally investigate the information leak-

confidence in its prediction. age of face recognition models with C AIA. We provide our

source code with all hyperparameters to reproduce the exper-

3.2 Revealing Sensitive Attributes iments and facilitate future research. More experimental de-

tails are provided in Appx. A.

With the attack samples, we can now begin with inferring

the sensitive class attributes learned by the target classifier. 4.1 Experimental Setup

Fig. 2 illustrates the basic concept of the inference. While

our filtering approach ensures that the variations of an image Datasets. We used the CelebA facial attributes dataset [27]

depict the different values of the sensitive attribute, other con- to train our target face recognition systems. CelebA con-

founding behavior might still remain unintentionally. For ex- tains images of 10,177 individuals and 40 binary attribute

4Attack Accuracy 100.0% ResNet-18 ResNet-101 ResNet-152 DenseNet-169 ResNeSt-101

90.0%

80.0%

70.0%

FaceScrub CelebA CelebA CelebA CelebA

Gender Gender Eyeglasses Hair Color Racial Appearance

Figure 3: Attack accuracy for different target model architectures and CelebA attribute datasets. Results are averaged over three

models and three attack datasets. Except for ResNet-18, the attacks are comparably successful on the different models.

annotations per image. We selected the attributes gender = images depicting different racial appearances, we relied on

{female, male}, eyeglasses = {no glasses, glasses}, and hair the pre-trained FairFace classifier. The attack datasets were

color = {black, blond, brown, gray} as sensitive attributes, generated with Stable Diffusion v1.5 [35].

and additionally applied a pretrained FairFace [21] classifier To investigate effects of model robustness on information

to add racial appearance = {Asian, Black, Indian, White} la- leakage, we trained adversarial robust models on FaceScrub

bels to each image. Since the provided attributes and labels are with standard adversarial training [10]. We perturbed training

often inconsistent for samples of one identity, e.g., people do samples with Projected Gradient Descent [28] with 7 steps,

not always wear eyeglasses or might dye their hair, we created = 4/255, and step size α = 1/2551 .

custom subsets for each sensitive attribute group by selecting Metrics. We computed the precision, recall, and F1 score

an equal number of identities for each attribute value and re- for each attribute value, together with the overall prediction

moved samples with inconsistent labels. This is important for accuracy for all attributes. All experimental results are av-

evaluation since otherwise the training samples of an identity eraged over three models and three disjoint subsets of attack

depict various values for the sensitive attribute, and no clear samples, resulting in nine runs per configuration.

ground-truth value can be defined. Baselines. We took random guessing as a naive baseline.

We trained various target models on these datasets to pre- Since the sensitive attributes are equally distributed among

dict a person’s identity. Since not every attribute is present the different identities, random guessing corresponds to the

with every identity, we selected the 100 identities for each at- underlying prior attribute distribution. Since existing AIAs

tribute value of hair color, eyeglasses, and racial appearance, are not applicable to our use case, we instead performed the

respectively, with the most training samples available. For state-of-the-art Plug & Play model inversion attack (PPA) [38]

gender, we selected 250 identities per attribute value. We also to synthesize characteristic samples for each class. We then

created datasets with 1,000 identities to see if more classes used our filter models to predict the sensitive attribute value

influence the attack success. Additional models were trained for each sample and take the majority vote for each targeted

on the FaceScrub [32] facial image dataset, which contains identity as the attribute prediction. We emphasize that, un-

images of 530 identities with equal gender split. We split all like C AIA, PPA requires white-box access to the target model

datasets into 90% for training and 10% for measuring the pre- and a pre-trained GAN, for which we used the official Style-

diction accuracy. Details on the different dataset statistics are GAN2 [24] FFHQ model. Due to the high computational ef-

provided in Appx. A.2. fort, we limit the PPA comparison to individual ResNet-101

To craft the attack datasets, we used the Flickr-Faces-HQ models for each setting.

(FFHQ) [23] and CelebAHQ [22] datasets. We generated and

filtered images with attribute manipulations to collect 300 at- 4.2 Extracting Sensitive Class Information

tack image tuples for each attribute group. We set the filter

threshold τ = 0.6 in all experiments. We visualize randomly In the main part of the paper, we focus on results on

selected training and attack samples in Appx. D. ResNet-101 models using FFHQ attack samples. We pro-

Models. We trained ResNet-18, ResNet-101, ResNet- vide more detailed results for the other architectures, Cele-

152 [11], ResNeSt-101 [46], and DenseNet169 [15] tar- bAHQ as attack dataset, and experiments with 1,000 identities

get models. For each dataset-architecture combination, we in Appx. B. We note that the attack success for an increased

trained three models with different seeds. We further trained number of identities did not change substantially. The attack

ResNet-50 filter models on the CelebA attributes to classify accuracy for different models and target attributes in Fig. 3

gender, glasses, and hair color. To mitigate the overconfi- demonstrates that C AIA performed comparably well on dif-

dence of neural networks, we trained all filter models with la- ferent architectures and predicted the sensitive attribute values

bel smoothing [41, 31] to calibrate the models and make them

more suitable for the confidence threshold approach. To filter 1 Corresponds to images with pixel values in range [0, 1].

5Accuracy F1 Precision Recall PPA Random Guessing

Overall Overall

Female Glasses

Male No

Glasses

40% 60% 80% 100% 40% 60% 80% 100%

(a) Gender (CelebA) (b) Eyeglasses (CelebA)

Overall Overall

Black Asian

Blond Black

Brown Indian

Gray White

20% 40% 60% 80% 100% 20% 40% 60% 80% 100%

(c) Hair Color (CelebA) (d) Racial Appearance (CelebA)

Figure 4: Evaluation results for C AIA performed on ResNet-101 CelebA models to infer four different target attributes. The black

horizontal lines denote the standard deviation over nine runs. We further state random guessing (dashed line) and Plug and Play

Attacks (PPA, green dots) for comparison. While C AIA outperforms random guessing by a large margin, it extracts information

on racial appearance and if someone is wearing eyeglasses even more reliably than the white-box PPA attack.

correctly in over 90% of the cases for the attributes gender and wearing eyeglasses, PPA fell significantly behind C AIA. Re-

eyeglasses, and about 80% for the hair color and racial ap- garding racial appearance, PPA’s attack accuracy was com-

pearance. Only the attack results of ResNet-18 stood out and parable to C AIA but less consistent between different attribute

were a few percentage points lower than those of the other ar- values. We suspect the reason to be the uneven distribution

chitectures, which we attribute to the small number of model of the racial appearance and eyeglasses attribute values in the

parameters (only about a quarter of ResNet-101). Still, all at- underlying StyleGAN2’s training data [20]. Since PPA starts

tacks reliably inferred the sensitive attributes in most cases. from randomly sampled latent vectors, the biased attribute dis-

Next, we investigate the attribute leakage more closely. tributions also influence the attack success in terms of gener-

Therefore, we performed a more detailed analysis of the at- ating and inferring sensitive attributes. Nevertheless, C AIA

tribute leakage of ResNet-101 models, for which the results shows competitive and impressive performance given that it

are depicted in Fig. 4. For all four attributes, C AIA signifi- accesses the target model only in a black-box fashion and has

cantly beat the random guessing baseline by a large margin. no access to internal gradient information.

Whereas gender and eyeglasses were predicted correctly in

about 94% of the cases, racial appearance could be inferred 4.3 Robustness Increases Privacy Leakage

correctly in 84% of the cases. The attack accuracy for hair

color was also about 82% on average, but the attack suc- Since adversarial examples are a common weakness of

cess varied substantially between the different attribute values. deep learning models, adversarial training is widely adopted

Blond hair seems to be the hardest value to predict, which to make models robust against this security threat. To establish

is not unexpected since hair colors have different shades of a connection between privacy and security, we now extend our

which blond might be the broadest one. Corresponding con- investigation to robustly trained models and show that robust-

fusion matrices are visualized in Appx. B.1. ness even increases privacy leakage. However, training robust

In contrast, we also investigated gradient-based model models requires larger datasets due to increased sample com-

inversion attacks, here PPA, to compare to state-of-the-art plexity [36]. The limited number of CelebA samples, which

white-box methods that reconstruct characteristic class inputs. provides approx. 30 samples per identity, makes it difficult to

On the ResNet-101 models, PPA achieved perfect attack re- train stable and robust models. Therefore, this section focuses

sults for inferring an identity’s gender. It also precisely re- on models trained on FaceScrub, which provides an average of

vealed hair color and outperformed the black-box C AIA for 70 samples per identity and facilitates stable adversarial train-

both settings. However, for inferring whether an identity is ing. We trained ResNet-101 models on both cropped images

6Identity Prediction Accuracy Gender Attack Accuracy Robust Models Random Guessing

Model Model

Training Training

Attack Attack

40% 60% 80% 100% 40% 60% 80% 100%

(a) Gender (FaceScrub Cropped) (b) Gender (FaceScrub Uncropped)

Figure 5: Gender appearance inference results for C AIA performed on ResNet-101 models trained on cropped (a) and uncropped

(b) FaceScrub samples. We compared standard models to robust models trained with adversarial training (hatched bars). The

results demonstrate that robust models indeed increase the information leakage (blue bars), even if the underlying models’ pre-

diction accuracy (orange bars) is significantly below that of non-robust models.

containing only faces and uncropped images showing a large tures remain predictive even under adversarial

δ

perturbations

amount of content unrelated to an identity. For a visual com- with kδk ≤ , satisfying E(x,y)∼D ŷ · M(x̃irobust + δ)y ≥

parison of the datasets, we refer the reader to Appx. D.1. ρ, while non-robust predictive features lose their predictive

The attack results for ResNet-101 models are shown in power under adversarial perturbations. Neural networks rely

Fig. 5, while Appx. C presents results for other model archi- not only on salient features, such as hair color and other fa-

tectures. Comparing the attack accuracy against non-robust cial characteristics, for image processing, but also on subtle,

models (93.08%) with that of robust models (96.62%) trained non-robust image features that can still be highly predictive.

on cropped images suggests that robust models tend to leak Adversarial perturbations can significantly disrupt non-

more gender information about their learned identities than robust models’ predictions by manipulating x̃non-robust in an

non-robust models, even if the models’ clean prediction accu- -environment that is imperceptible to humans. Adversarial

racy is roughly five percentage points lower. A similar pattern training can help reduce the impact of adversarial examples

holds for models trained on uncropped images, with robust by focusing the model’s prediction on x̃robust . However, we

models still exhibiting higher information leakage. However, stress that adversarial training’s potential to enhance model

it is important to note that the standard model’s prediction robustness comes at the cost of increased privacy leakage. To

accuracy (78.75%) is significantly higher than that of robust explain why adversarial training increases a model’s privacy

models (55.23%). This suggests that a model’s prediction ac- leakage, we hypothesize that human-recognizable, sensitive

curacy is not necessarily indicative of its privacy leakage. We attributes are part of x̃robust . These attributes are highly pre-

hypothesize that robust models tend to exhibit higher privacy dictive because they enable discrimination between samples

leakage due to their concentration on more robust features for of different classes based on the assumption that they remain

their predictions. We formalize this hypothesis in the remain- constant within samples of a specific class. We also argue that

ing section. these attributes are robust features since they are easily identi-

Our formal analysis builds upon the robust feature model fiable by humans, and small adversarial perturbations are un-

for binary classifiers of Ilyas et al. [17], which we extend to likely to affect their value.

the privacy leakage setting. Let M : X → Y be a model In the context of face recognition, gender is a sensitive at-

trained to predict a label y ∈ Y for each input x ∈ X. tribute that is highly predictive, enabling us to distinguish in-

We divide a model’s inputs x = (x̃, x̄) into predictive fea- dividuals from people with other gender appearances. Fur-

tures x̃ and non-predictive features x̄. The i-th predictive thermore, it is a robust feature, as altering a person’s gender

feature x̃iis positively correlated with a sample’s true label: appearance in an image would require significant changes to

E(x,y)∼D ŷ · M(x̃i )y ≥ ρ for a sufficiently large ρ. Here, the pixels. To showcase the importance of robust features in

we deviate slightly from our previous notation of y and define robust models, we utilized the axiomatic attribution method

ŷ ∈ {−1, 1} for each class, with ŷ = 1 indicating the ground- Integrated Gradients [39] on two ResNet-101 models, one

truth label. We further assume that all model outputs My are trained using standard training, and the other with adversar-

centered around zero. Predictive features are (ideally) learned ial training. Fig. 6a visualizes the computed gradients for

by a model to predict the label. Conversely, a non-predictive four input images from our attack datasets. While the stan-

feature x̄j has no correlation with the true label andshould be dard model relies on noisy, non-robust features, such as im-

ignored during inference if E(x,y)∼D ŷ · M(x̄j )y < ρ. age background, the robust model concentrates more on facial

We further explore the nature of the predictive features attributes, including hair, eyes, and facial structure.

by categorizing them into two groups: robust features x̃robust To also provide quantitative support for our analysis, we

and non-robust features x̃non-robust . Robust predictive fea- used Integrated Gradients to measure a model’s relative at-

7Standard Model

Input

40% Robust Model

Relative Attribution

30%

1.0

Standard

Model

0.8

20%

0.6

0.4 10%

Robust

Model

0.2

0%

0.0 Eyeglasses Eyes Hair Mouth Hat Cloth Skin

(a) Visualized Attribution with Integrated Gradients. (b) Relative Attribution for facial features.

Figure 6: The comparison of absolute attribution based on integrated gradients between a robust and a standard ResNet-101

model in (a) shows that robust models assign perceptually more attuned attribution to facial features. A quantitative comparison

of relative attribution in (b) further highlights that the robust model assigns more attribution to unique facial features, such as eyes

and hair, while the standard model assigns most attribution to non-specific parts of the skin.

tribution to specific image parts. For this, we applied a pre- sensitive class information, with significant implications for

trained face segmentation model [26] to locate various at- the secure application of machine learning models. While

tributes in facial images. Be HZ (x) ∈ {0, 1}H×W the binary black-box models have traditionally been practically secure

segmentation mask for image x and attribute class Z, such as against MIAs and AIAs, our research shows that models in

a person’s hair. Be further A(M, x) ∈ RH×W the absolute the vision domain are not immune to attribute inference. By

pixel-wise attribution by Integrated Gradients for model M leveraging recent advances in text-to-image synthesis, C AIA

and image x ∈ RH×W . Let also k · k1 denote the sum norm infers sensitive information from image classifiers with high

and the Hadamard product. The relative attribution for a accuracy, comparable to white-box MIAs. Furthermore, our

dataset X is then computed by: findings suggest a trade-off between adversarial training and a

model’s information leakage, and future adversarial defenses

should account for the privacy leakage of models to avoid cre-

1 X kA(M, x) HZ (x)k1

Arel (M, X) = . (3) ating new vulnerabilities.

|X| kA(M, x)k1

x∈X

By analyzing the relative attribution of seven attributes in

Although C AIA offers reliable attribute inference capa-

100 FFHQ samples and over 530 target identities of ResNet-

bilities, it still faces some challenges and limitations. Ac-

101 models, Fig. 6b demonstrates that robust models assign

curately evaluating class information leakage requires high-

more importance to distinct features like eyes or hair, while

quality data and consistent labeling, which heavily relies on

standard models prioritize general skin areas. This highlights

the underlying dataset. We note that the sample quality of

the greater importance placed by robustly trained models on

CelebA and FaceScrub images varies widely in terms of res-

sensitive attributes encoded in images and reflected in their

olution, sharpness, and coloring, and the labeling is not al-

outputs. As C AIA exploits the differences in logits, robust

ways consistent within an identity or attribute class, with also

models appear to be more susceptible to such privacy attacks

falsely labeled samples contained in the datasets. For in-

and leak more sensitive attribute information. Thus, there ex-

stance, the boundaries between gray and blond hair are not

ists a trade-off between model robustness and sensitive class

well-defined, which can lead to reduced inference accuracy

information leakage.

for these attributes. However, C AIA is generally successful in

inferring sensitive attribute values in most cases. The attack

5 Discussion and Challenges metrics might even underestimate its effectiveness since false

attribute predictions might still provide some identity infor-

In this paper, we introduced C AIA, a novel privacy attack mation, e.g., black skin tone predictions make white as ground

that enables the inference of sensitive attribute information truth rather unlikely. Although, the attack may be more pow-

about classes learned by standard image classifiers. Our ex- erful for more consistently labeled datasets with higher image

tensive experiments demonstrate that classifiers indeed leak quality.

86 Conclusion References

To summarize, our research provides novel insights into the [1] Prabal Datta Barua, Nadia Fareeda Muhammad Gowdh,

privacy of image classifiers and shows that models leak more Kartini Rahmat, Norlisah Ramli, Wei Lin Ng, Wai Yee

sensitive information than previously assumed. We hope it Chan, Mutlu Kuluozturk, Sengul Dogan, Mehmet Bay-

motivates future security research and defense endeavors in gin, Orhan Yaman, Turker Tuncer, Tao Wen, Kang Hao

building secure and private models. Cheong, and U. Rajendra Acharya. Automatic covid-

Expanding on the mentioned challenges, we expect that 19 detection using exemplar hybrid deep features with

with the upcoming developments in text-guided image ma- x-ray images. International Journal of Environmental

nipulation, we propose to enhance C AIA and extend its use Research and Public Health, 18(15), 2021.

to continuous features like age or more detailed skin tone

grading. For example, the attack samples could be gener- [2] Ulrich Baumann, Yuan-Yao Huang, Claudius Gläser,

ated to reflect various shades of dark skin color or fine-grained Michael Herman, Holger Banzhaf, and J. Marius

age representations. Moreover, in its current implementation, Zöllner. Classifying road intersections using transfer-

C AIA infers each attribute independently. However, in the real learning on a deep neural network. In International Con-

world, attributes often correlate with each other. For example, ference on Intelligent Transportation Systems (ITSC),

information inferred on racial appearance influences the prob- pages 683–690, 2018.

ability distribution of hair colors. To this end, C AIA could [3] U.S. Census Bureau. About the topic of race, 2022.

leverage already inferred attributes as a prior for inferring ad- URL https://www.census.gov/topics/

ditional attributes. We also envision the use of C AIA beyond population/race/about.html. Accessed:

privacy analyses. For example, it is exciting to explore it in the 22-February-2023.

context of explainable AI to determine the importance of fea-

tures by analyzing prediction scores for different feature char- [4] Si Chen, Mostafa Kahla, Ruoxi Jia, and Guo-Jun

acteristics. Similarly, it could be employed to assess the fair- Qi. Knowledge-Enriched Distributional Model Inver-

ness of models and identify potential biases associated with sion Attacks. In International Conference on Computer

certain classes. Vision (ICCV), pages 16178–16187, 2021.

[5] Christopher A. Choquette-Choo, Florian Tramèr,

Reproducibility Statement. Our source code is publicly

Nicholas Carlini, and Nicolas Papernot. Label-only

at https://github.com/LukasStruppek/Class_

membership inference attacks. In International Confer-

Attribute_Inference_Attacks to reproduce the ex-

ence on Machine Learning (ICML), pages 1964–1974,

periments and facilitate further analysis.

2021.

Acknowledgments This research has benefited from the [6] Andre Esteva, Kat Chou, Serena Yeung, Nikhil Naik,

Federal Ministry of Education and Research (BMBF) project Ali Madani, Ali Mottaghi, Yun Liu, Eric Topol, Jeff

KISTRA (reference no. 13N15343), the Hessian Ministry of Dean, and Richard Socher. Deep learning-enabled med-

Higher Education, Research, Science and the Arts (HMWK) ical computer vision. npj Digital Medicine, 2021.

cluster projects “The Third Wave of AI” and hessian.AI, from

the German Center for Artificial Intelligence (DFKI) project [7] Matt Fredrikson, Somesh Jha, and Thomas Ristenpart.

“SAINT”, as well as from the joint ATHENE project of the Model Inversion Attacks that Exploit Confidence Infor-

HMWK and the BMBF “AVSV”. mation and Basic Countermeasures. In Conference on

Computer and Communications Security (CCS), pages

1322–1333, 2015.

[8] Matthew Fredrikson, Eric Lantz, Somesh Jha, Simon M.

Lin, David Page, and Thomas Ristenpart. Privacy in

pharmacogenetics: An end-to-end case study of person-

alized warfarin dosing. In USENIX Security Symposium,

pages 17–32, 2014.

[9] Karan Ganju, Qi Wang, Wei Yang, Carl A. Gunter, and

Nikita Borisov. Property inference attacks on fully con-

nected neural networks using permutation invariant rep-

resentations. In Conference on Computer and Commu-

nications Security (CCS), pages 619–633, 2018.

9[10] Ian J. Goodfellow, Jonathon Shlens, and Christian [21] Kimmo Karkkainen and Jungseock Joo. Fairface: Face

Szegedy. Explaining and harnessing adversarial exam- attribute dataset for balanced race, gender, and age for

ples. In International Conference on Learning Repre- bias measurement and mitigation. In Winter Confer-

sentations (ICLR), 2015. ence on Applications of Computer Vision (WACV), pages

1548–1558, 2021. Pretrained classifier available at

[11] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian https://github.com/dchen236/FairFace.

Sun. Deep residual learning for image recognition. In

Conference on Computer Vision and Pattern Recognition [22] Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehti-

(CVPR), pages 770–778, 2016. nen. Progressive growing of gans for improved quality,

stability, and variation. In International Conference on

[12] Amir Hertz, Ron Mokady, Jay Tenenbaum, Kfir Aber- Learning Representations (ICLR), 2018.

man, Yael Pritch, and Daniel Cohen-Or. Prompt-to-

prompt image editing with cross attention control. arXiv [23] Tero Karras, Samuli Laine, and Timo Aila. A Style-

preprint, arXiv:2208.01626, 2022. Based Generator Architecture for Generative Adversar-

ial Networks. In Conference on Computer Vision and

[13] Dominik Hintersdorf, Lukas Struppek, and Kristian Ker-

Pattern Recognition (CVPR), pages 4401–4410, 2019.

sting. To trust or not to trust prediction scores for mem-

bership inference attacks. In International Joint Con- [24] Tero Karras, Samuli Laine, Miika Aittala, Janne

ference on Artificial Intelligence (IJCAI), pages 3043– Hellsten, Jaakko Lehtinen, and Timo Aila. Ana-

3049, 2022. lyzing and Improving the Image Quality of Style-

GAN. In Conference on Computer Vision and

[14] Dominik Hintersdorf, Lukas Struppek, and Kristian Ker-

Pattern Recognition (CVPR), 2020. Pretrained

sting. Clipping privacy: Identity inference attacks on

FFHQ model available at https://github.com/

multi-modal machine learning models. arXiv preprint,

NVlabs/stylegan2-ada-pytorch.

arXiv:2209.07341, 2022.

[15] Gao Huang, Zhuang Liu, Laurens van der Maaten, and [25] Diederik P. Kingma and Jimmy Ba. Adam: Method for

Kilian Q. Weinberger. Densely Connected Convolu- Stochastic Optimization. In International Conference on

tional Networks. In Conference on Computer Vision and Learning Representations (ICLR), 2015.

Pattern Recognition (CVPR), pages 2261–2269, 2017. [26] Cheng-Han Lee, Ziwei Liu, Lingyun Wu, and Ping Luo.

[16] Ahmad Ibrahim, Hoda K. Mohamed, Ali Maher, and Maskgan: Towards diverse and interactive facial im-

Baochang Zhang. A survey on human cancer catego- age manipulation. In IEEE Conference on Computer

rization based on deep learning. Frontiers in Artificial Vision and Pattern Recognition (CVPR), 2020. Pre-

Intelligence, 5, 2022. trained model available at https://github.com/

switchablenorms/CelebAMask-HQ.

[17] Andrew Ilyas, Shibani Santurkar, Dimitris Tsipras, Lo-

gan Engstrom, Brandon Tran, and Aleksander Madry. [27] Ziwei Liu, Ping Luo, Xiaogang Wang, and Xiaoou Tang.

Adversarial examples are not bugs, they are features. In Deep Learning Face Attributes in the Wild. In Interna-

Conference on Neural Information Processing Systems tional Conference on Computer Vision (ICCV), 2015.

(NeurIPS), volume 32, 2019.

[28] Aleksander Madry, Aleksandar Makelov, Ludwig

[18] Bargav Jayaraman and David Evans. Are attribute in- Schmidt, Dimitris Tsipras, and Adrian Vladu. Towards

ference attacks just imputation? In Conference on Com- deep learning models resistant to adversarial attacks. In

puter and Communications Security (CCS), pages 1569– International Conference on Learning Representations

1582, 2022. (ICLR), 2018.

[19] Mostafa Kahla, Si Chen, Hoang Anh Just, and Ruoxi [29] Shagufta Mehnaz, Sayanton V. Dibbo, Ehsanul Kabir,

Jia. Label-only model inversion attacks via boundary re- Ninghui Li, and Elisa Bertino. Are your sensitive at-

pulsion. In Conference on Computer Vision and Pattern tributes private? novel model inversion attribute infer-

Recognition (CVPR), pages 15025–15033, 2022. ence attacks on classification models. In USENIX Secu-

rity Symposium, pages 4579–4596, 2022.

[20] Cemre Karakas, Alara Dirik, Eylul Yalcinkaya, and

Pinar Yanardag. Fairstyle: Debiasing stylegan2 with [30] Ron Mokady, Amir Hertz, Kfir Aberman, Yael Pritch,

style channel manipulations. In European Conference and Daniel Cohen-Or. Null-text inversion for editing real

on Computer Vision (ECCV), volume 13673, pages 570– images using guided diffusion models. arXiv preprint,

586, 2022. arXiv:2211.09794, 2022.

10[31] Rafael Müller, Simon Kornblith, and Geoffrey E. Hin- [40] Christian Szegedy, Wojciech Zaremba, Ilya Sutskever,

ton. When does label smoothing help? In Conference Joan Bruna, Dumitru Erhan, Ian J. Goodfellow, and Rob

on Neural Information Processing Systems (NeurIPS), Fergus. Intriguing properties of neural networks. In

pages 4696–4705, 2019. International Conference on Learning Representations

(ICLR), 2014.

[32] Hongwei Ng and Stefan Winkler. A data-driven ap-

proach to cleaning large face datasets. In IEEE Inter- [41] Christian Szegedy, Vincent Vanhoucke, Sergey Ioffe,

national Conference on Image Processing (ICIP), pages Jonathon Shlens, and Zbigniew Wojna. Rethinking the

343–347, 2014. inception architecture for computer vision. In Con-

ference on Computer Vision and Pattern Recognition

[33] Mathias P. M. Parisot, Balázs Pejó, and Dayana Spag- (CVPR), pages 2818–2826, 2016.

nuelo. Property inference attacks on convolutional

neural networks: Influence and implications of target [42] Kuan-Chieh Wang, Yan Fu, Ke Liand Ashish Khisti,

model’s complexity. In International Conference on Se- Richard Zemel, and Alireza Makhzani. Variational

curity and Cryptography (SECRYPT), pages 715–721, Model Inversion Attacks. In Conference on Neural In-

2021. formation Processing Systems (NeurIPS), 2021.

[34] Adam Paszke, Sam Gross, Francisco Massa, Adam [43] Xiuling Wang and Wendy Hui Wang. Group prop-

Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, erty inference attacks against graph neural networks. In

Zeming Lin, Natalia Gimelshein, Luca Antiga, Al- Conference on Computer and Communications Security

ban Desmaison, Andreas Köpf, Edward Yang, Zachary (CCS), pages 2871–2884, 2022.

DeVito, Martin Raison, Alykhan Tejani, Sasank Chil-

[44] Eric Wong, Leslie Rice, and J. Zico Kolter. Fast is better

amkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and

than free: Revisiting adversarial training. In Interna-

Soumith Chintala. PyTorch: An Imperative Style, High-

tional Conference on Learning Representations (ICLR),

Performance Deep Learning Library. In Conference

2020.

on Neural Information Processing Systems (NeurIPS),

pages 8024–8035, 2019. [45] Samuel Yeom, Irene Giacomelli, Matt Fredrikson, and

Somesh Jha. Privacy risk in machine learning: Analyz-

[35] Robin Rombach, Andreas Blattmann, Dominik Lorenz,

ing the connection to overfitting. In Computer Security

Patrick Esser, and Björn Ommer. High-resolution image

Foundations Symposium (CSF), pages 268–282, 2018.

synthesis with latent diffusion models. In Conference

on Computer Vision and Pattern Recognition (CVPR), [46] Hang Zhang, Chongruo Wu, Zhongyue Zhang, Yi Zhu,

pages 10684–10695, 2022. Haibin Lin, Zhi Zhang, Yue Sun, Tong He, Jonas

Mueller, R. Manmatha, Mu Li, and Alexander

[36] Ludwig Schmidt, Shibani Santurkar, Dimitris Tsipras,

Smola. Resnest: Split-attention networks. CoRR,

Kunal Talwar, and Aleksander Madry. Adversarially ro-

abs/2004.08955, 2020.

bust generalization requires more data. In Conference

on Neural Information Processing Systems (NeurIPS), [47] Yuheng Zhang, Ruoxi Jia, Hengzhi Pei, Wenxiao Wang,

pages 5019–5031, 2018. Bo Li, and Dawn Song. The Secret Revealer: Genera-

tive Model-Inversion Attacks Against Deep Neural Net-

[37] Reza Shokri, Marco Stronati, Congzheng Song, and Vi-

works. In Conference on Computer Vision and Pattern

taly Shmatikov. Membership inference attacks against

Recognition (CVPR), pages 250–258, 2020.

machine learning models. In Symposium on Security and

Privacy (S&P), pages 3–18, 2017. [48] Junhao Zhou, Yufei Chen, Chao Shen, and Yang Zhang.

Property inference attacks against gans. In Annual

[38] Lukas Struppek, Dominik Hintersdorf, Antonio

Network and Distributed System Security Symposium

De Almeida Correia, Antonia Adler, and Kristian

(NDSS), 2022.

Kersting. Plug & play attacks: Towards robust and

flexible model inversion attacks. In International

Conference on Machine Learning (ICML), volume 162,

pages 20522–20545, 2022.

[39] Mukund Sundararajan, Ankur Taly, and Qiqi Yan. Ax-

iomatic attribution for deep networks. In International

Conference on Machine Learning (ICML), volume 70,

pages 3319–3328, 2017.

11A Experimental Details

A.1 Hard- and Software Details

We performed our experiments on NVIDIA DGX machines running NVIDIA DGX Server Version 5.1.0 and Ubuntu 20.04.4

LTS. The machines have 2TB of RAM and contain NVIDIA A100-SXM4-40GB GPUs and AMD EPYC 7742 CPUs. We further

relied on CUDA 11.4, Python 3.10.0, and PyTorch 1.12.1 with Torchvision 0.13.1 [34] for our experiments. If not stated otherwise,

we used the model architecture implementations and pre-trained ImageNet weights provided by Torchvision. We further provide

a Dockerfile together with our code to make the reproduction of our results easier. In addition, all training and attack configuration

files are available to reproduce the results stated in this paper.

To perform Plug & Play Attacks (PPA), we used a NVIDIA DGX machine running NVIDIA DGX Server Version 5.2.0 and

Ubuntu 20.04.4 LTS. The machines has 2TB of RAM and contain NVIDIA A100-SXM4-80GB GPUs and AMD EPYC 7742

CPUs. The experiments were performed with CUDA 11.4, Python 3.8.10, and PyTorch 1.10.0 with Torchvision 0.11.0. We

further used PPA in combination with the pre-trained FFHQ StyleGAN-2 and relied on the standard CelebA attack parameters,

as stated in Struppek et al. [38] and https://github.com/LukasStruppek/Plug-and-Play-Attacks. We only

changed the number of candidates to 50 and the final samples per target to 25, to speed up the attack process.

A.2 Dataset Details

We state the number of identities and samples for each custom CelebA subset in Appx. A.2. We further state the lowest,

median and maximum number of samples of a single identity per attribute and for the total dataset. For the filter models, the

datasets contained 155,304 samples (gender and eyeglasses) and 93,942 (hair color), respectively.

Total Min Median Max Avg

Attribute Value Identities

Samples Samples Samples Samples Samples

Female 250 7,639 30 30 35 30.56

Gender

CelebA

Male 250 7,652 30 30 36 30.61

Total 500 15,291 30 30 36 30.58

No Glasses 100 3186 31 32 36 31.86

CelebA

Glasses

Glasses 100 2,346 20 22 31 23.46

Total 200 5532 20 31 36 27.66

Asian 100 2944 28 30 31 29.44

Black 100 2876 26 29 34 28.76

CelebA

Race

Indian 100 2281 19 22 30 22.81

White 100 3164 31 31 35 31.64

Total 400 11,265 19 29 35 28.16

Black Hair 100 2,840 23 28 32 28.40

Blond Hair 100 2,867 25 29 31 28.67

CelebA

Color

Hair

Brown Hair 100 2,564 17 26 30 25.64

Gray Hair 100 2,117 14 20 31 21.17

Total 400 10,388 14 27 32 25.97

FaceScrub

Female 265 17,743 9 65 140 66.95

Gender

Male 265 20,136 25 77 128 75.98

Total 530 37,879 9 72 140 71.47

Table 1: Dataset identity statistics

12A.3 Training Hyperparameters

We emphasize that we did not aim for achieving state-of-the-art performances but rather tried to train models with generally

good prediction performance. All non-robust models were initialized with pre-trained ImageNet weights and trained for 80 epochs

with an initial learning rate of 0.1 and a batch size of 128. We multiplied the learning rate by factor 0.1 after 60 and 70 epochs. For

the models trained with adversarial training, set the number of epochs to 100 and reduced the learning rate after 80 and 90 epochs.

Optimization was done with SGD and a momentum of 0.9. Images were resized to 224 × 224 and normalized with µ = σ = 0.5

to set the pixel value range to [−1, 1]. As augmentation, we applied random horizontal flipping with p = 0.5 flipping probability

and random resized cropping with scale = [0.8, 1.0] and ratio = [0.8, 1.25]. Cropped images were resized to size 224 × 224. Due

to the limited number of training samples available for each identity, we did not use a validation set and early stopping. For each

architecture and dataset, we trained three models with different seeds. Performance evaluation was done on a holdout test set.

We further trained ResNet-50 models on the CelebA attributes for gender, eyeglasses, and hair color for filtering the candidate

images. All models were initialized with pre-trained ImageNet weights and trained for 10 epochs with an initial learning rate of

1e − 3 and a batch size of 128. We multiplied the learning rate by factor 0.1 after 9 epochs. We used the Adam optimizer [25]

with β = (0.9, 0.999) and no weight decay. Normalization and augmentation were identical to training the target models, except

the scale and ratio parameters of the random resized cropping, which we set to [0.9, 1.0] and [1.0, 1.0] to prevent cutting out the

attributes from the training samples. We again used no validation set for early stopping. To calibrate the models, we applied label

smoothing with smoothing factor α = 0.1 during training. Since some attributes are more frequent in the CelebA dataset, we draw

the same number of samples for each attribute by oversampling / undersampling from the attribute images. Details on the training

of FairFace for filtering the racial appearance images can be found at https://github.com/dchen236/FairFace.

Dataset Num Classes Architecture Accuracy Dataset Num Classes Architecture Accuracy

ResNet-18 85.99% ± 0.21 ResNet-18 93.92% ± 0.17

FaceScrub

ResNet-101 86.78% ± 0.66 ResNet-101 94.93% ± 0.43

Cropped

Gender

500 ResNet-152 86.62% ± 0.62 530 ResNet-152 95.32% ± 0.22

DenseNet-169 75.93% ± 5.54 DenseNet-169 91.93% ± 0.65

ResNeSt-101 81.53% ± 4.40 ResNeSt-101 93.50% ± 0.70

ResNet-18 93.08% ± 0.58 ResNet-18 83.79% ± 0.21

FaceScrub

Eyeglasses

ResNet-101 90.97% ± 1.41 ResNet-101 88.12% ± 0.24

Cropped

Robust

200 ResNet-152 91.10% ± 0.85 530 ResNet-152 87.11% ± 0.28

DenseNet-169 93.56% ± 0.38 DenseNet-169 88.53% ± 0.76

ResNeSt-101 90.73% ± 0.21 ResNeSt-101 90.04% ± 0.48

ResNet-18 84.65% ± 0.44 ResNet-18 70.29% ± 2.71

Uncropped

FaceScrub

ResNet-101 82.99% ± 0.40 ResNet-101 78.75% ± 0.84

Race

400 ResNet-152 84.06% ± 1.19 530 ResNet-152 78.52% ± 0.58

DenseNet-169 85.00% ± 0.96 DenseNet-169 69.11% ± 12.73

ResNeSt-101 81.13% ± 1.04 ResNeSt-101 77.32% ± 0.54

ResNet-18 87.94% ± 0.56 ResNet-18 41.58% ± 0.63

Uncropped

Hair Color

FaceScrub

ResNet-101 87.65% ± 0.70 ResNet-101 55.23% ± 0.60

Robust

400 ResNet-152 87.20% ± 0.67 530 ResNet-152 55.69% ± 1.24

DenseNet-169 88.45% ± 1.68 DenseNet-169 54.65% ± 2.98

ResNeSt-101 85.53% ± 0.53 ResNeSt-101 58.74% ± 0.84

(a) CelebA (b) FaceScrub

Table 2: Prediction accuracy of the target models on the individual test sets.

13You can also read