A Computational Acquisition Model for Multimodal Word Categorization

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

A Computational Acquisition Model for Multimodal Word Categorization

Uri Berger1,2 , Gabriel Stanovsky1 , Omri Abend1 , and Lea Frermann2

1

School of Computer Science and Engineering, The Hebrew University of Jerusalem

2

School of Computing and Information Systems, University of Melbourne

{uri.berger2, gabriel.stanovsky, omri.abend}@mail.huji.ac.il

lea.frermann@unimelb.edu.au

Abstract

Recent advances in self-supervised modeling

of text and images open new opportunities for

computational models of child language acqui-

sition, which is believed to rely heavily on

cross-modal signals. However, prior studies

have been limited by their reliance on vision

models trained on large image datasets anno-

tated with a pre-defined set of depicted object

categories. This is (a) not faithful to the infor-

mation children receive and (b) prohibits the

evaluation of such models with respect to cat-

egory learning tasks, due to the pre-imposed

category structure. We address this gap, and

present a cognitively-inspired, multimodal ac-

quisition model, trained from image-caption

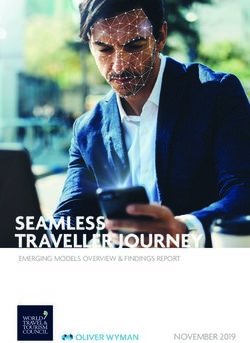

pairs on naturalistic data using cross-modal Figure 1: Model overview. Given an image-caption

self-supervision. We show that the model pair (left), both the visual (top) and textual encoder

learns word categories and object recognition (bottom) generate a binary vector indicative of the clus-

abilities, and presents trends reminiscent of ters associated with the current input. The text/visual

those reported in the developmental literature. model predicts a probability vector over clusters per

We make our code and trained models public word/image. Vectors are mapped to a binary space

for future reference and use1 . using thresholding. The modality-specific vectors pro-

vide mutual supervision during training (right).

1 Introduction

To date, the mechanisms underlying the efficiency on large labeled data bases such as ImageNet (Deng

with which infants learn to speak and understand et al., 2009) or Visual Genome (Krishna et al.,

natural language remain an open research question. 2017). This has two drawbacks: first, systematic

Research suggests that children leverage contex- access to labeled data is a cognitively implausible

tual, inter-personal and non-linguistic information. assumption in a language acquisition setting; sec-

Visual input is a case in point: when spoken to, ond, imposing a pre-defined categorization system

infants visually perceive their environment, and precludes studying categories that emerge when

paired with the input speech, the visual environ- learning from unlabeled multimodal data. This

ment could help bootstrap linguistic knowledge type of setting more closely resembles the data un-

(Tomasello et al., 1996). Unlike social cues, visual derlying early language learning at a time when the

input has a natural physical representation, in the child has only acquired little conceptual informa-

form of pixel maps or videos. tion. Although the subject of much psycholinguis-

Previous multimodal language acquisition stud- tic work, the computational study of multimodal

ies either considered toy scenarios with small vo- word categories, formed without recourse to man-

cabularies (Roy and Pentland, 2002; Frank et al., ual supervision has been scarcely addressed in pre-

2007), or used visual encoders that were pretrained vious work.

1

github.com/SLAB-NLP/multimodal_clustering We present a model that learns categories as clus-

3819

Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics:

Human Language Technologies, pages 3819 - 3835

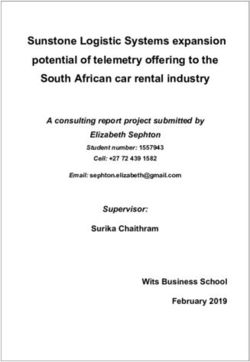

July 10-15, 2022 ©2022 Association for Computational LinguisticsModel parameters token (img, capt) train time 2 Background and Related Work

BERT 110M 3300M – 64 TPU days

word2vec 1B 100B – 60 CPU days We briefly review previous studies of unimodal

CLIP 63M – 400M 10K GPU days learning without pretraining (for both text and vi-

Ours 3M 3M 280K 4 GPU days

Children – 13K/day – –

sion) and multimodal learning (studied mainly in

acquisition implausible settings) to highlight the

Table 1: Comparing the size, training data and gap that this work addresses.

training time of popular self-supervised models

Unimodal Learning. Self-supervised learning

(BERTbase , word2vec (300d, google-news) and CLIP

with ResNet50X64) against our model (Ours, bold) and without pre-training has been extensively studied,

typical child input (Children; Gilkerson et al., 2017). but predominantly in a unimodal scenario or un-

For the multimodal models (CLIP and Ours) we only der cognitively implausible assumptions. For the

mention the size of the text encoder. text modality, large language models have been de-

veloped in recent years (e.g., BERT; Devlin et al.,

2019), trained on large unlabeled text corpora (Ta-

ble 1). A more cognitively motivated model is

ters of words from large-scale, naturalistic multi- BabyBERTa (Huebner et al., 2021), a smaller ver-

modal data without any pre-training. Given (im- sion of RoBERTa (Liu et al., 2019) (also) trained

age, caption)-pairs as input, the model is trained to on transcribed child directed speech. In the visual

cluster words and images in a shared, latent space, domain, self-supervision is typically implemented

where each cluster pertains to a semantic category. as contrastive learning, training the model to align

In a self-supervised setup, a neural image classifier corrupted images with their original counterparts

and a co-occurrence based word clustering module (Chen et al., 2020a), with subsequent fine-tuning.

provide mutual supervision through joint training

Multimodal Language Learning. Early language

with an expectation-maximization (EM) style algo-

acquisition studies (Roy and Pentland, 2002) con-

rithm. Figure 1 illustrates our model.

sidered toy scenarios with small vocabularies and

Our input representation is cognitively plausible used heuristics for image processing. Silberer and

in that we use raw image data (pixels), and train Lapata (2014) model multi-modal human catego-

our model on a comparatively small data set (Ta- rization using human-annotated feature vectors as

ble 1). However, we follow previous work (Kádár input for a multimodal self-supervised autoencoder,

et al., 2015; Nikolaus and Fourtassi, 2021) in us- while we learn the features from raw images.

ing written and word-segmented text input. Young Unlike our work, recent work on cross-modal

infants do not have access to such structure, and language learning (Kádár et al., 2015; Chrupała

this assumption therefore deviates from cognitive et al., 2017; Ororbia et al., 2019; Nikolaus and Four-

plausibility (but opens avenues for future work). tassi, 2021) typically use Convolutional Neural Net-

works, pre-trained on large labeled data bases like

We show that semantic and visual knowledge ImageNet (Deng et al., 2009), or alternatively (e.g.,

emerges when training on the self-supervised cate- Lu et al., 2019; Chen et al., 2020b) use object detec-

gorization task. In a zero-shot setup, we evaluate tors pre-trained on Visual Genome (Krishna et al.,

our model on (1) word concreteness prediction as a 2017) as the visual model.

proxy for noun identification, one of the first cues Few studies assume no prior knowledge of the

for syntax in infant language acquisition (Fisher grounded modality. Most related to our study is

et al., 1994); (2) visual classification and object CLIP (Radford et al., 2021), a pre-trained off-the-

segmentation. We also study the emerging latent shelf model trained to project matching images and

word clusters and show that words are clustered captions to similar vectors. CLIP assumes a multi-

syntagmatically (De Saussure, 1916): words repre- modal joint space which is continuous, unlike our

senting entities that are likely to occur together are binary space. Liu et al. (2021) use CLIP pretrained

more likely clustered together (e.g., dog-bark), than encoders to learn cross-modal representations with

words that share taxonomic categories (e.g., dog- a similar training objective as ours. They discretize

cat). This concurs with findings that young children the output of the encoders by mapping it to the clos-

acquire syntagmatic categories more readily than est vector from a finite set of learned vectors, which

taxonomic categories (Sloutsky et al., 2017). can be viewed as a form of categorization. CLIP-

3820based works are trained to match entire sentences Formally, given a sentence s=(w1 , w2 , ..., wn )

to images and have no explicit representation of of words wi , and an assignment of the words

words and phrases. We therefore view it as a cogni- to clusters f :{w1 , ..., wn }→{1, ..., N } ∪ {∅}, the

tively less plausible setting than is presented in the clusters to which the sentence is assigned are:

N

current study. Nevertheless, we include CLIP as a c|if ∃wi s.t. f (wi )=c c=1 . When assigning

point of comparison when applicable. words to clusters, we make two simplifying as-

sumptions: (1) the probability that a word is as-

3 Model signed to a specific cluster is independent of the

linguistic context, meaning that we assign to clus-

Our goal is to learn the meaning of words and

ters on the type- rather than the token level (a rea-

raw images through mutual supervision by map-

sonable assumption given that children learn single

ping both modalities to a joint representation. In-

words first); (2) a single word cannot be assigned

tuitively, given a visual input paired with relevant

to more than one cluster, but it might be assigned

text (approximating a typical learning scenario),

to no cluster at all if it does not have a visual corre-

the output for each of the modalities is a binary

spondent in the image (e.g., function words). Under

vector in {0, 1}N , with non-zero dimensions indi-

these assumptions, the encoder estimates P (c|w)

cating the clusters2 to which the input is assigned

for each c ∈ {1, . . . , N } and for each w ∈ V ,

and N is the total number of clusters (a predefined

where V is the vocabulary. If the probability of

hyper-parameter). The clusters are unknown a pri-

assigning a given word in a sentence to any of the

ori and are formed during training. The goal is to

clusters exceeds a hyper-parameter threshold θt , it

assign matching text and image to the same clus-

is assigned to the cluster with the highest proba-

ters. In order to minimize assumptions on innate

bility, otherwise it is not assigned to any cluster.

knowledge or pre-imposed categories available to

Formally:

the language learner, and enable the study of emerg-

ing categories from large-scale multi-modal input

data, we deliberately avoid any pre-training of our

argmax P (c|w) if max P (c|w) ≥ θt

models. f (w) = c∈[N ] c∈[N ]

∅ else

3.1 Visual Encoder

The visual encoder (Figure 1, top) is a randomly In the next step, we define the word-cluster associa-

initialized ResNet50 (He et al., 2016), without pre- tions P (c|w). We estimate these using Bayes Rule,

training. We set the output size of the network to N

(the number of clusters) and add an element-wise P (w|c)P (c)

P (c|w) = (1)

sigmoid layer.3 To produce the binary output and P (w)

predict the clusters given an input image, we apply P (w|c) is defined as the fraction of all predictions

a hyper-parameter threshold θv to the output of the of cluster c from the visual encoder, in which w oc-

sigmoid layer. curred in the corresponding caption. We instantiate

3.2 Text Encoder the prior cluster probability P (c) as uniform over

all clusters.4

The text encoder (Figure 1, bottom) is a sim- Finally,

ple probabilistic model based on word-cluster co- PNfor a given word w, we estimate

P (w)= i=1 P (ci )P (w|ci ). Intuitively, we ex-

occurrence, which is intuitively interpretable and pect that a concrete word would repeatedly occur

makes minimal structural assumptions. Given a with similar visual features (of the object described

sentence, the model assigns each word to at most by that word), therefore repeatedly co-occurring

one cluster. The sentence is assigned to the union with the same cluster and receiving a high assign-

of the clusters to which the words in it are assigned. ment probability with that cluster, whereas abstract

2

We use the term clusters for the output of models and words would co-occur with multiple clusters, there-

the term categories for ground truth classification of elements fore not being assigned to any cluster.

(e.g., human defined categories).

3 4

This is a multi-label categorization task (i.e., an image We also tried instantiating P (c) as the empirical cluster

can be assigned to multiple clusters): Cluster assignments do distribution as predicted by the visual encoder. However,

not compete with one another, which is why we chose sigmoid the noisy initial predictions lead to a positive feedback loop

over softmax. leading to most clusters being unused.

38214 Training 5.1 Semantic Word Categorization

At each training step, the model observes a batch of Background. Semantic word categorization is the

(image, caption)-pairs. We first perform inference clustering of words based on their semantic fea-

with both encoders, and then use the results of each tures. Psycholinguistic studies have shown that

encoder’s inference to supervise the other encoder. children use semantic word categories to solve lin-

guistic tasks by the end of the second year of life

Text Encoder. Given the list of clusters predicted (e.g., Styles and Plunkett, 2009). There is a long

by the visual encoder and a tokenized caption established fundamental distinction between syn-

s={w1 , ..., wn }, for each wi ∈s and for each clus- tagmatic (words that are likely to co-occur in the

ter cj predicted by the visual encoder, we increment same context) and paradigmatic relations (words

count(cj ) and count(wi , cj ). These are needed to that can substitute one another in a context with-

compute the probabilities in equation (1). out affecting its grammaticality or acceptability)

Visual Encoder. For each input image and corre- (De Saussure, 1916). Each relation type invokes a

sponding cluster vector predicted by the text en- different type of word categories (syntagmatic rela-

coder, we use binary cross entropy loss comparing tions invoke syntagmatic categories, or associative

the output of the sigmoid layer with the predicted categories; paradigmatic relation invoke taxonomic

cluster vector and use Backpropagation to update categories). Despite an acknowledgement that in-

the parameters of the ResNet model. fants, unlike adults, categorize based on syntag-

matic criteria more readily than on paradigmatic cri-

teria (“The Syntagmatic-Paradigmatic shift”; Ervin,

5 Experiments 1961), and empirical evidence that syntagmatic cat-

egories might be more important for word learning

We trained our model on the 2014 split of than taxonomic categories (Sloutsky et al., 2017),

MSCOCO (Lin et al., 2014), a dataset of natu- computational categorization studies and datasets

ralistic images with one or more corresponding predominantly focused only on taxonomic hierar-

captions, where each image is labeled with a list chies (Silberer and Lapata, 2014; Frermann and

of object classes it depicts. MSCOCO has 80 ob- Lapata, 2016).

ject classes, 123K images and 616K captions (split

into 67% train, 33% test). We filtered out images Setting. Our model’s induced clusters are created

that did not contain any labeled objects, and images by using the text encoder to predict, for each word,

that contained objects with a multi-token label (e.g., the most likely cluster.

“fire hydrant”).5 After filtering, we are left with 65 We evaluated induced clusters against a taxo-

ground-truth classes. The filtered training (test) nomic and a syntagmatic reference data set. First,

set contains 56K (27K) images and 279K (137K) we followed Silberer and Lapata (2014), used the

captions. We set apart 20% of the training set for categorization dataset from Fountain and Lapata

hyper-parameter tuning. (2010), and transformed the dataset into hard cat-

egories by assigning each noun to its most typical

We trained our model with a batch size of 50

category as extrapolated from human typicality rat-

until we observed no improvement in the F-score

ings. The resulting dataset contains 516 words

measure from Section 5.1 (40 epochs). Training

grouped into 41 taxononmic categories. We fil-

took 4 days on a single GM204GL GPU. We used

tered the dataset to contain only words that occur

N =150 clusters, θt =0.08, and θv =0.5. The visual

in the MSCOCO training set and in the word2vec

threshold θv was first set heuristically to 0.5 to

(Mikolov et al., 2013) dictionary, obtaining the final

avoid degenerate solutions (images being assigned

dataset with 444 words grouped into 41 categories.

to all or no clusters initially). Then, N and θt

were determined in a grid search, optimizing the In order to quantify the syntagmatic nature of the

F-score measure from Section 5.1. We used spaCy induced clusters, we used a large dataset of human

(Honnibal and Montani, 2017) for tokenization. word associations, the "Small World of Words"

(SWOW, De Deyne et al., 2019). SWOW was com-

5

piled by presenting a cue word to human partici-

This was enforced because multi-token labels do not nec-

essarily map to a single cluster, rendering the evaluation more pants and requesting them to respond with the first

difficult. We plan to address this gap in future work. three words that came to mind. The association

3822strength of a pair of words (w1 , w2 ) is determined Model F-Score

by the number of participants who responded with

w2 to cue word w1 . Prior work has shown that Random 0.15 ± 0.0032

word associations are to a large extent driven by Text-only 0.26 ± 0.0098

syntagmatic relations (Santos et al., 2011). Word2vec 0.40 ± 0.0172

BERTBASE 0.33 ± 0.011

Comparison with other models. We compare CLIP 0.38 ± 0.0142

against several word embedding models,6 where Ours 0.33 ± 0.0109

for each model we first induce embeddings, which

we then cluster into K=41 clusters (the number of Table 2: Taxonomic categorization results, comparing

taxonomic gold classes) using K-Means. We com- a random baseline (top) against text-only (center) and

pare against a text-only variant of our model7 by multi-modal models (bottom), as mean F-score and std

creating a co-occurrence matrix C where Ci,j is the over 5 runs. Word2vec (bold) achieves the highest

score.

number of captions in which tokens i, j in the vo-

cabulary co-occur. The normalized rows of C are

the vector embeddings of words in the vocabulary. Model MAS

We compare against off-the-shelf word2vec and

Taxonomic 5.72

BERTBASE embeddings. For BERT, given a word

Random 4.23 ± 1.88

w, we feed an artificial context (“this is a w”) and

Text-only 5.47 ± 0.25

take the embedding of the first subword of w. We

Word2Vec 6.65 ± 0.16

also include the multi-modal CLIP, using prompts

BERTBASE 5.75 ± 0.23

as suggested in the original paper (“a photo of a

CLIP 7.08 ± 0.41

w”).8 Finally, we include a randomized baseline,

Ours 7.45 ± 0.33

which assigns each word at random to one of 41

clusters. Implementation details can be found in Table 3: Sytagmatic categorization results on the same

Appendix A.1. models as in Table 2, reporting mean association

Taxonomic categorization. We use the F-score strength (MAS) of pairs of clustered concepts in the

SWOW dataset, over 5 random initializations. Taxo-

metric following Silberer and Lapata (2014). The F-

nomic refers to the taxonomic gold categories. Our

value of a (gold class, cluster)-pair is the harmonic model (bold) achieves the highest score.

mean of precision and recall defined as the size

of intersection divided by the number of items in

the cluster and the number of items in the class, Syntagmatic categorization. We quantify the syn-

respectively. The F-score of a class is the maximum tagmatic nature of a clustering by the mean asso-

F-value attained at any cluster, and the F-score of ciation strength (MAS) of pairs of words in the

the entire clustering is the size-weighted sum of SWOW dataset, where association strength of a

F-scores of all classes. We report performance over pair of words (w1 , w2 ) is again number of partic-

five random restarts for all models. ipants who responded with w2 to cue word w1.

Results are presented in Table 2. The text-only MAS is computed across all word pairs from the

baseline improves results over a random categoriza- taxonomic dataset in which both words were as-

tion algorithm. Our multi-modal model grounded signed the same cluster by this clustering solution.

in visual input improves over its unimodal vari- Results are presented in Table 3. The multimodal

ant. Our model is competitive with BERT and is models (ours and CLIP) outperform all unimodal

surpassed by word2vec and CLIP. However, con- models, an indication of the impact of multimodal-

sidering the small model and training data we used ity on category learning: multimodal word learn-

(see Table 1), our results are competitive. ing shifts the learner towards syntagmatic relations

6

We omit Silberer and Lapata (2014) since we were unable more significantly than unimodal word learning.

to obtain their model. To our knowledge, this is the first computational

7

It was not clear how to design co-occurrence based vision- result to support this hypothesis, shown empiri-

only baselines, as images do not naturally factorize into con-

cepts/regions, unlike text which is divided into words. cally in human studies with infants (Elbers and van

8

We also tried BERT and CLIP feeding as input the target Loon-Vervoorn, 1999; Mikolajczak-Matyja, 2015).

word only. Results for CLIP slightly decreased, while results

for BERT decreased significantly. Qualitative analysis. Table 4 shows four of the

3823Ours 1 skis; axe; sled; parka; sleigh; pants; Setting. We evaluate concreteness estimation us-

gloves ing the dataset by Brysbaert et al. (2013), which

contains concreteness ratings for 40K English

Ours 2 sailboat; canoe; swan; raft; boat; yacht; words averaged over multiple human annotated

duck; willow; ship; drum ratings on a scale of 1 to 5. We estimate the con-

Ours 3 train; bullet; subway; tack; bridge; trol- creteness of a word as the maximum probability

ley with which it was assigned to any cluster. For eval-

uation, we follow Charbonnier and Wartena (2019)

Ours 4 bedroom; rocker; drapes; bed; dresser;

and compute the Pearson correlation coefficient of

sofa; couch; piano; curtains; cushion; lamp;

our predictions with the ground-truth values. In

chair; fan; bureau; stool; cabin; book

addition, we investigate the impact of word fre-

W2V cluster avocado, walnut, pineapple, quency on our model’s predictions by evaluating

grapefruit, coconut, olive, lime, lemon the model on subsets of words in the Brysbaert data

of increasing minimum frequency in MSCOCO.

Table 4: Four clusters induced by our model (Ours 1,

2, 3, 4), sorted by P (c|w), and one cluster induced

by word2vec. Our clusters are syntagmatic, while the

Comparison with other models. First, we com-

W2V cluster is taxonomic. Words highlighted by the pare against supervised SVM regression mod-

same color belong to the same taxonomic category. els, which have shown strong performance on the

Brysbaert data in prior work (Charbonnier and

Wartena, 2019). Following their work, we use two

clusters created by our model and one cluster cre- feature configurations: (1) POS tags + suffixes,

ated by word2vec for the taxonomic categorization (2) POS tags + suffixes + pre-trained FastText em-

dataset.9 The clusters formed by our algorithm beddings (Joulin et al., 2017). We train the SVMs

are syntagmatic, associating words frequently ob- on the full Brysbaert data.

served together (e.g., tokens in cluster 1 are related

to snow activity, while cluster 2 broadly relates to Second, we compare with a minimally super-

water). The cluster formed by word2vec embed- vised text-only model. As in Sec 5.1, we create

dings is taxonomic (all tokens are food products). word vector representations from co-occurrence

Our results provide initial evidence that syntag- counts. Next, following prior work (Turney et al.,

matic clusters emerge from an unsupervised train- 2011), we select concrete (abstract) representative

ing algorithm drawing on simple joint clustering of words by taking the 20 words with the highest (low-

words and images. est) concreteness value in the Brysbaert data that

occur more than 10 times in the MSCOCO training

5.2 Concreteness Estimation set. We predict a word’s concreteness by comput-

ing its average cosine similarity to the concrete

Background. Fisher et al. (1994) suggest that the representative words minus the average of its co-

number of nouns in a sentence is among the ear- sine similarity to the abstract representative words.

liest syntactic cues that children pick up. Conse-

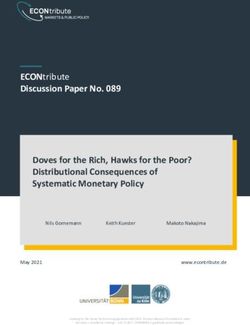

quently, noun identification is assumed to be one Results. Figure 2 presents the results in terms of

of the first syntactic tasks learned by infants. We Pearson correlation when evaluated on words of

approximate noun identification as concreteness varying minimum frequency in MSCOCO. When

estimation, since words representing concrete enti- considering frequent tokens only, our model pre-

ties are mostly nouns.10 Chang and Bergen (2021) dicts word concreteness with an accuracy higher

show that while children acquire concrete words than the SVM with POS and suffix features, al-

first, neural text-based models show no such effect, though additional embedding features improve

suggesting that multimodality impacts the learning SVM performance further. Note that the super-

process. vised baseline was trained on the full data set, and

9

See appendix for the full list of clusters of all clustering hence evaluated on a subset of its training set. Our

algorithms. multimodal model performs better than its text-

10

For example, in the concreteness dataset built by Brys- only variant for tokens that occur at least 100 times,

baert et al. (2013), in which human annotators rated the con-

creteness of words on a scale of 1 to 5, 85.6% of the words even though the text-only model has received some

with an average concreteness rating above 4 are nouns. supervision (by selecting the representative words).

3824Model Precision Recall F-Score

Ours 0.43 ± 0.04 0.21 ± 0.02 0.28 ± 0.01

CLIP 0.52 0.39 0.45

Table 5: Visual multi-label classification results on the

MSCOCO data for our model and CLIP in terms of pre-

cision, recall and F1 score. We report mean (std) over

5 random initializations for our model. CLIP experi-

ments are deterministic (we use the pretrained model

directly, unlike Sec 5.1 where we used KMeans on top

of CLIP).

Figure 2: Pearson correlation between predicted word with highest cosine similarity to its encoding. We

concreteness and gold standard human ratings, evalu- adapt CLIP to a multi-label setting as follows: In-

ated over test sets with increasing minimum frequency stead of assigning the image to the class with the

in the MSCOCO data. Results are averaged across 5 highest cosine similarity, we take into account the

random initializations (std was consistently < 0.03).

cosine similarity with all classes for each image.

We consider a class as predicted if its cosine simi-

5.3 Visual Multi-Label Classification larity exceeds a threshold, tuned on the MSCOCO

training split.

In addition to linguistic knowledge, infants acquire

visual semantic knowledge with little explicit super- Results. Table 5 presents the results. As expected,

vision, i.e., they learn to segment and classify ob- CLIP outperforms our model. However, our model

jects. To test whether our model also acquires such achieves impressive results considering its simplic-

knowledge we evaluated it on the multi-label clas- ity, its size, and that CLIP is the current state-of

sification task: For each image in the MSCOCO the-art in self-supervised vision and language learn-

test set, predict the classes of objects in the image. ing. Training a CLIP model of comparable size and

In a zero-shot setting, we mapped the induced exposed to similar training data as our model is be-

clusters to predicted lists of MSCOCO classes as yond the scope of this paper, but an interesting

follows. We first provided the name of each class to direction for future work.

our model as text input and retrieved the assigned

5.4 Object Localization

cluster, thus obtaining a (one-to-many) cluster-to-

classes mapping. Now, for each test image, we used Another important task performed by infants is vi-

the visual encoder to predict the assigned cluster(s). sual object localization. To test our model’s ability

The predicted set of MSCOCO classes is the union to reliably localize objects in images we use Class

of the lists of classes to which the predicted clusters Activation Maps (CAM) described by Zhou et al.

are mapped. (2016). Each CAM indicates how important each

pixel was during classification for a specific cluster.

Comparison with CLIP. We compare our results

against CLIP. To ensure comparability with our Quantitative analysis. Most previous studies

model we use CLIP with ResNet50. We use CLIP of zero-shot segmentation (Bucher et al., 2019)

as a point of comparison to provide perspective on trained on a subset of “seen” classes, and evalu-

the capabilities of our model despite differences in ated on both seen and unseen classes. We use a

modeling and assumptions. However, we note two more challenging setup previously referred to as

caveats regarding this comparison. First, CLIP was annotation-free segmentation (Zhou et al., 2021),

trained on a much larger training set and has more where we evaluate our model without any train-

parameters than our model (see Table 1). Second, ing for the segmentation task. We use MSCOCO’s

CLIP has only been used for single- (not multi-) ground-truth bounding boxes, which are human

label classification, by inferring encodings of both annotated and mark objects in the image, for evalu-

input images and prompts representing the ground- ation. Following the original CAM paper, we use a

truth classes (e.g., “a photo of a bus” for the ground heuristic method to predict bounding boxes: Given

truth class bus) and assigning the image to the class a CAM, we segment the pixels of which the value is

3825Model Precision Recall F-Score

Ours 0.178 ± 0.01 0.025 ± 0.004 0.044 ± 0.006

Rand 0.027 ± 0.001 0.027 ± 0.001 0.027 ± 0.001

Table 6: Mean F-score and standard deviation across

5 random initializations on bounding box prediction.

Our model (Ours) improves precision (bold) over ran-

dom (Rand) significantly while achieving similar recall

(might improve by tuning the visual threshold).

above 50% of the max value of the CAM and take

the bounding box that covers the largest connected

component in the segmentation map.

We use precision and recall for evaluation. A

pair of bounding boxes is considered a match if

the intersection over union (IoU) of the pair ex-

ceeds 0.5. Given lists of predicted and ground-truth

bounding boxes, we consider each matched pair as

a true positive and a prediction (ground-truth) for

which no matching ground-truth (prediction) was

found as a false positive (negative). We compare

our model to a random baseline: Sample k random

bounding boxes (where k is the number of ground-

truth bounding boxes in the current image). This

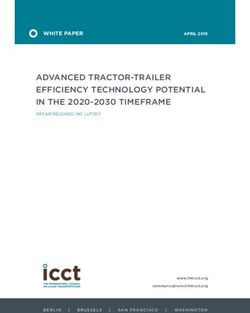

baseline uses the number of ground-truth bounding Figure 3: Examples of heatmaps of CAM values.

boxes in each image (our model is not exposed to Above each image we print the class predicted by the

this information). model.

The results are presented in Table 6. Our

model is significantly more precise than the ran-

dom baseline, but achieves similar recall: the entire multiple modalities; learning word-level seman-

MSCOCO test split contains a total of 164,750 tics (e.g., Fisher et al., 1994, suggest that children

bounding boxes, while our model predicted 38,237 first learn to identify nouns and use this informa-

bounding boxes. This problem could be addressed tion to learn sentence-level semantics); and cross-

by lowering the visual threshold. We leave this situational learning (counting how many times each

direction for future research. word co-occurred with each cluster, see Gleitman,

1990). After training, our model demonstrates capa-

Qualitative analysis. Fig. 3 shows a selection of bilities typical of infant language acquisition: Word

CAMs, plotted as heatmaps and associated with concreteness prediction and identification and seg-

class predictions (see Sec. 5.3). The heatmaps ex- mentation of objects in a visual scene.

tracted by the model were better when the model However, we do not stipulate that infants begin

predicted a correct class in the visual classification their acquisition of language by clustering words.

task (top six images and bottom left image in Fig It would be interesting to design experiments to

3). In the bottom two images two clusters were pre- test this hypothesis, e.g., by connecting our work

dicted for the same original image, one correct and with laboratory work on joint word and category

one incorrect (with an, unsurprisigly, meaningless learning (Borovsky and Elman, 2006), or work on

heatmap). the emergence of syntagmatic vs. taxonomic cate-

gories in young children (Sloutsky et al., 2017).

6 Discussion and Conclusion

While our model is cognitively more plausible

We proposed a model for unsupervised multimodal compared to previous studies, the gap from a realis-

lagnguage acquisition, trained to jointly cluster text tic setting of language acquisition is still large: (1)

and images. Many of our design choices were we assume the language input is segmented into

guided by findings from cognitive studies of in- words; (2) the input data, while naturalistic, is not

fant language acquisition: The joint learning of typical of infants at the stage of language acquisi-

3826tion; (3) the input only includes the visual and tex- Foundation (grant no. 2424/21), by a research gift

tual modality, but not, e.g., pragmatic cues like ges- from the Allen Institute for AI and by the HUJI-

ture; and (4) the model learns in a non-interactive UoM joint PhD program.

setting, whereas physical and social interactions

are considered crucial for language learning, and Ethical Considerations

learns (and is evaluated) in a batch fashion while

human learning is typically incremental (Frermann We used publicly available resources to train our

and Lapata, 2016). model (Lin et al., 2014). As with other statistical

methods for word representations, our approach

In the semantic word categorization and con-

may capture social biases which manifest in its

creteness prediction experiments, we compared our

training data (e.g., MSCOCO was shown to be bi-

multimodal model to unimodal text-only baselines,

ased with respect to gender, Zhao et al., 2017). Our

which we chose to be as similar as possible to our

code includes a model card (Mitchell et al., 2019)

model. The results suggest that multimodality im-

which reports standard information regarding the

proves performance on both text tasks. However,

training used to produce our models and its word

it is unclear which specific information is encoded

clusters.

in the visual modality that benefits these text tasks.

We leave this question for future research.

Syntagmatic categories, although highly intu- References

itive in the context of human memory, were not the

subject of many previous computational studies. Lawrence W. Barsalou. 1983. Ad hoc categories. Mem-

ory&Cognition, 11(3):211–227.

We propose to further investigate this type of cat-

egories and its use. One interesting direction is to Arielle Borovsky and Jeff Elman. 2006. Language in-

combine syntagmatic categories with interactivity: put and semantic categories: A relation between cog-

Given a relevant signal from the environment the nition and early word learning. Journal of child lan-

model can cycle through concepts in the syntag- guage, 33(4):759–790.

matic category triggered by the signal, speeding up

Marc Brysbaert, Amy Beth Warriner, and Victor Ku-

the extraction of relevant concepts in real time. One perman. 2013. Concreteness ratings for 40 thousand

possible application of this direction is modelling generally known english word lemmas. Behavior

the construction of ad-hoc categories, described by Research Methods, 46(3):904–911.

Barsalou (1983).

Maxime Bucher, Tuan-Hung Vu, Matthieu Cord, and

While all experiments are conducted in English, Patrick Pérez. 2019. Zero-shot semantic segmenta-

our setting supports future work on other languages. tion. In Advances in Neural Information Processing

The small training set and the nature of the data Systems 32: Annual Conference on Neural Informa-

(image-sentence pairs that might be, to some extent, tion Processing Systems 2019, NeurIPS 2019, De-

cember 8-14, 2019, Vancouver, BC, Canada, pages

collected from the Internet) allow our model to 466–477.

be extended to low-resource languages, while the

minimal structural assumptions of the text encoder Tyler A Chang and Benjamin K Bergen. 2021. Word

may imply some degree of language-agnosticity. acquisition in neural language models. ArXiv

preprint, abs/2110.02406.

In future work, we plan to improve the cognitive

plausibility of our model by (1) incorporating typ- Jean Charbonnier and Christian Wartena. 2019. Pre-

ical input observed by children (by using videos dicting word concreteness and imagery. In Pro-

taken in real scenes of child language acquisition, ceedings of the 13th International Conference on

see Sullivan et al., 2021); and (2) changing the Computational Semantics - Long Papers, pages 176–

187, Gothenburg, Sweden. Association for Computa-

setting to an interactive one, where the model is tional Linguistics.

transformed into a goal-driven agent that uses in-

teractivity to learn and produce language. Ting Chen, Simon Kornblith, Mohammad Norouzi,

and Geoffrey E. Hinton. 2020a. A simple frame-

Acknowledgements work for contrastive learning of visual representa-

tions. In Proceedings of the 37th International Con-

ference on Machine Learning, ICML 2020, 13-18

We would like to thank the anonymous reviewers July 2020, Virtual Event, volume 119 of Proceedings

for their helpful comments and feedback. This of Machine Learning Research, pages 1597–1607.

work was supported in part by the Israel Science PMLR.

3827Yen-Chun Chen, Linjie Li, Licheng Yu, Ahmed Columbia, Canada, December 3-6, 2007, pages

El Kholy, Faisal Ahmed, Zhe Gan, Yu Cheng, and 457–464. Curran Associates, Inc.

Jingjing Liu. 2020b. Uniter: Universal image-text

representation learning. In European conference on Lea Frermann and Mirella Lapata. 2016. Incremen-

computer vision, pages 104–120. Springer. tal bayesian category learning from natural language.

Cognitive Science, 40(6):1333–1381.

Grzegorz Chrupała, Lieke Gelderloos, and Afra Al-

ishahi. 2017. Representations of language in a Jill Gilkerson, Jeffrey A Richards, Steven F Warren, Ju-

model of visually grounded speech signal. In Pro- dith K Montgomery, Charles R Greenwood, D Kim-

ceedings of the 55th Annual Meeting of the Associa- brough Oller, John HL Hansen, and Terrance D

tion for Computational Linguistics (Volume 1: Long Paul. 2017. Mapping the early language environ-

Papers), pages 613–622, Vancouver, Canada. Asso- ment using all-day recordings and automated anal-

ciation for Computational Linguistics. ysis. American journal of speech-language pathol-

ogy, 26(2):248–265.

Simon De Deyne, Danielle J Navarro, Amy Perfors,

Lila Gleitman. 1990. The structural sources of verb

Marc Brysbaert, and Gert Storms. 2019. The “small

meanings. Language Acquisition, 1(1):3–55.

world of words” english word association norms for

over 12,000 cue words. Behavior research methods, Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian

51(3):987–1006. Sun. 2016. Deep residual learning for image recog-

nition. In 2016 IEEE Conference on Computer Vi-

Ferdinand De Saussure. 1916. Cours de linguistique

sion and Pattern Recognition, CVPR 2016, Las Ve-

générale. Payot, Paris.

gas, NV, USA, June 27-30, 2016, pages 770–778.

Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, IEEE Computer Society.

and Fei-Fei Li. 2009. Imagenet: A large-scale hier- Matthew Honnibal and Ines Montani. 2017. spacy 2:

archical image database. In 2009 IEEE Computer Natural language understanding with bloom embed-

Society Conference on Computer Vision and Pattern dings, convolutional neural networks and incremen-

Recognition (CVPR 2009), 20-25 June 2009, Miami, tal parsing. To appear, 7(1):411–420.

Florida, USA, pages 248–255. IEEE Computer Soci-

ety. Philip A. Huebner, Elior Sulem, Fisher Cynthia, and

Dan Roth. 2021. BabyBERTa: Learning more gram-

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and mar with small-scale child-directed language. In

Kristina Toutanova. 2019. BERT: Pre-training of Proceedings of the 25th Conference on Computa-

deep bidirectional transformers for language under- tional Natural Language Learning, pages 624–646,

standing. In Proceedings of the 2019 Conference Online. Association for Computational Linguistics.

of the North American Chapter of the Association

for Computational Linguistics: Human Language Armand Joulin, Edouard Grave, Piotr Bojanowski, and

Technologies, Volume 1 (Long and Short Papers), Tomas Mikolov. 2017. Bag of tricks for efficient

pages 4171–4186, Minneapolis, Minnesota. Associ- text classification. In Proceedings of the 15th Con-

ation for Computational Linguistics. ference of the European Chapter of the Association

for Computational Linguistics: Volume 2, Short Pa-

Loekie Elbers and Anita van Loon-Vervoorn. 1999. pers, pages 427–431, Valencia, Spain. Association

Lexical relationships in children who are blind. for Computational Linguistics.

Journal of Visual Impairment & Blindness,

93(7):419–421. Ákos Kádár, Afra Alishahi, and Grzegorz Chrupała.

2015. Learning word meanings from images of nat-

Susan M Ervin. 1961. Changes with age in the ver- ural scenes. Traitement Automatique des Langues.

bal determinants of word-association. The American

journal of psychology, 74(3):361–372. Ranjay Krishna, Yuke Zhu, Oliver Groth, Justin John-

son, Kenji Hata, Joshua Kravitz, Stephanie Chen,

Cynthia Fisher, D.geoffrey Hall, Susan Rakowitz, and Yannis Kalantidis, Li-Jia Li, David A. Shamma,

Lila Gleitman. 1994. When it is better to receive Michael S. Bernstein, and Li Fei-Fei. 2017. Vi-

than to give: Syntactic and conceptual constraints sual genome: Connecting language and vision us-

on vocabulary growth. Lingua, 92:333–375. ing crowdsourced dense image annotations. Interna-

tional Journal of Computer Vision, 123(1):32–73.

Trevor Fountain and Mirella Lapata. 2010. Meaning

representation in natural language categorization. In Tsung-Yi Lin, Michael Maire, Serge Belongie, James

Proceedings of the 32nd annual conference of the Hays, Pietro Perona, Deva Ramanan, Piotr Dollár,

Cognitive Science Society, pages 1916–1921. and C. Lawrence Zitnick. 2014. Microsoft coco:

Common objects in context. Computer Vision –

Michael C. Frank, Noah D. Goodman, and Joshua B. ECCV 2014 Lecture Notes in Computer Science,

Tenenbaum. 2007. A bayesian framework for cross- page 740–755.

situational word-learning. In Advances in Neural

Information Processing Systems 20, Proceedings of Alexander H. Liu, SouYoung Jin, Cheng-I Jeff Lai, An-

the Twenty-First Annual Conference on Neural In- drew Rouditchenko, Aude Oliva, and James Glass.

formation Processing Systems, Vancouver, British 2021. Cross-modal discrete representation learning.

3828Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Man- Carina Silberer and Mirella Lapata. 2014. Learn-

dar Joshi, Danqi Chen, Omer Levy, Mike Lewis, ing grounded meaning representations with autoen-

Luke Zettlemoyer, and Veselin Stoyanov. 2019. coders. In Proceedings of the 52nd Annual Meet-

Roberta: A robustly optimized bert pretraining ap- ing of the Association for Computational Linguis-

proach. ArXiv preprint, abs/1907.11692. tics (Volume 1: Long Papers), pages 721–732, Balti-

more, Maryland. Association for Computational Lin-

Jiasen Lu, Dhruv Batra, Devi Parikh, and Stefan guistics.

Lee. 2019. Vilbert: Pretraining task-agnostic visi-

olinguistic representations for vision-and-language Vladimir M Sloutsky, Hyungwook Yim, Xin Yao, and

tasks. In Advances in Neural Information Process- Simon Dennis. 2017. An associative account of the

ing Systems 32: Annual Conference on Neural Infor- development of word learning. Cognitive Psychol-

mation Processing Systems 2019, NeurIPS 2019, De- ogy, 97:1–30.

cember 8-14, 2019, Vancouver, BC, Canada, pages

13–23. Suzy J. Styles and Kim Plunkett. 2009. How do infants

build a semantic system? Language and Cognition,

Nawoja Mikolajczak-Matyja. 2015. The associative 1(1):1–24.

structure of the mental lexicon: hierarchical seman-

tic relations in the minds of blind and sighted lan- Jessica Sullivan, Michelle Mei, Andrew Perfors, Er-

guage users. Psychology of Language and Commu- ica Wojcik, and Michael C. Frank. 2021. Saycam:

nication, 19(1):1. A large, longitudinal audiovisual dataset recorded

from the infant’s perspective. Open Mind : Discov-

Tomas Mikolov, Kai Chen, Greg Corrado, and Jef- eries in Cognitive Science, 5:20 – 29.

frey Dean. 2013. Efficient estimation of word

representations in vector space. arXiv preprint Michael Tomasello, Randi Strosberg, and Nameera

arXiv:1301.3781. Akhtar. 1996. Eighteen-month-old children learn

Margaret Mitchell, Simone Wu, Andrew Zaldivar, words in non-ostensive contexts. Journal of child

Parker Barnes, Lucy Vasserman, Ben Hutchinson, language, 23(1):157–176.

Elena Spitzer, Inioluwa Deborah Raji, and Timnit Peter Turney, Yair Neuman, Dan Assaf, and Yohai Co-

Gebru. 2019. Model cards for model reporting. In hen. 2011. Literal and metaphorical sense identifica-

Proceedings of the conference on fairness, account- tion through concrete and abstract context. In Pro-

ability, and transparency, pages 220–229. ceedings of the 2011 Conference on Empirical Meth-

Mitja Nikolaus and Abdellah Fourtassi. 2021. Evalu- ods in Natural Language Processing, pages 680–

ating the acquisition of semantic knowledge from 690, Edinburgh, Scotland, UK. Association for Com-

cross-situational learning in artificial neural net- putational Linguistics.

works. In Proceedings of the Workshop on Cogni-

Jieyu Zhao, Tianlu Wang, Mark Yatskar, Vicente Or-

tive Modeling and Computational Linguistics, pages

donez, and Kai-Wei Chang. 2017. Men also like

200–210, Online. Association for Computational

shopping: Reducing gender bias amplification using

Linguistics.

corpus-level constraints. In Proceedings of the 2017

Alexander Ororbia, Ankur Mali, Matthew Kelly, and Conference on Empirical Methods in Natural Lan-

David Reitter. 2019. Like a baby: Visually situated guage Processing, pages 2979–2989, Copenhagen,

neural language acquisition. In Proceedings of the Denmark. Association for Computational Linguis-

57th Annual Meeting of the Association for Com- tics.

putational Linguistics, pages 5127–5136, Florence,

Italy. Association for Computational Linguistics. Bolei Zhou, Aditya Khosla, Àgata Lapedriza, Aude

Oliva, and Antonio Torralba. 2016. Learning deep

Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya features for discriminative localization. In 2016

Ramesh, Gabriel Goh, Sandhini Agarwal, Girish IEEE Conference on Computer Vision and Pattern

Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Recognition, CVPR 2016, Las Vegas, NV, USA, June

Gretchen Krueger, and Ilya Sutskever. 2021. Learn- 27-30, 2016, pages 2921–2929. IEEE Computer So-

ing transferable visual models from natural lan- ciety.

guage supervision. In Proceedings of the 38th In-

ternational Conference on Machine Learning, ICML Chong Zhou, Chen Change Loy, and Bo Dai. 2021.

2021, 18-24 July 2021, Virtual Event, volume 139 of Denseclip: Extract free dense labels from clip.

Proceedings of Machine Learning Research, pages ArXiv preprint, abs/2112.01071.

8748–8763. PMLR.

A Appendix

Deb K Roy and Alex P Pentland. 2002. Learning words

from sights and sounds: A computational model. A.1 Implementation details

Cognitive science, 26(1):113–146.

A.1.1 Training

Ava Santos, Sergio E. Chaigneau, W. Kyle Simmons,

and Lawrence W. Barsalou. 2011. Property gener-

For the visual encoder, we used the ResNet50 im-

ation reflects word association and situated simula- plementation from the torchvision package with

tion. Language and Cognition, 3(1):83–119. ADAM optimizer and a learning rate of 10−4 .

3829A.1.2 Semantic Word Categorization Cluster 2: trombone, leotards, trumpet, projector,

For clustering of word embeddings, we use the K- cello, harmonica, guitar

Means implementation in scikit-learn. We use the Cluster 3: train, bullet, subway, tack, bridge, trol-

word2vec google-news-300 model from the gensim ley

package, the BERTBASE model from the transform- Cluster 4: bus, ambulance, inn, taxi, level

ers library and CLIP’s official implementation. Cluster 5: elephant, bear, giraffe, paintbrush, rock,

fence, chain

A.1.3 Concreteness Estimation Cluster 6: machete, porcupine, hornet, banana,

Supervised model. For the implementation of the gorilla, apple, turtle, turnip, peach, stick

supervised model described by (Charbonnier and Cluster 7: veil, shawl

Wartena, 2019) we used 3 feature types. For POS Cluster 8: ashtray, mushroom, cheese, spinach,

tag, we used the WordNet module from the NLTK olive, tomato, shrimp, rice, pie, chicken, potato,

package to find, for each word, the number of broccoli, plate, pan, pepper, asparagus, skillet, peas,

synsets in each of the 4 possible POS tags (NOUN, onions, tuna, salmon, cranberry, lettuce, beans,

VERB, ADJ, ADV), and the induced feature vector spatula, ladle, dish, crab, corn, cucumber, tray, wal-

was the normalized count vector. In case no synsets nut, plum, box, lobster, cherry, table, shell

were found the induced feature vector was all zeros. Cluster 9: church, clock, skyscraper, chapel, build-

For suffixes, we collected the 200 most frequent ing, brick, stone, flea

suffixes of length 1 to 4 characters in the training Cluster 10: airplane, helicopter, pier, gate

set, and the induced feature vector was a 200-d Cluster 11: bedroom, rocker, drapes, bed, dresser,

binary vector indicating, for each suffix, if it oc- sofa, couch, piano, curtains, cushion, lamp, chair,

curs in the current word. For word embeddings, we fan, bureau, stool, cabin, book

used the fastText wiki-news-300d-1M model. The Cluster 12: skis, axe, sled, parka, sleigh, pants,

final feature vector is the concatenation of all the gloves

selected feature vectors: POS+suffix for the first Cluster 13: dishwasher, kettle, toaster, freezer,

model (resulting a 204-d feature vector for each stove, microscope, microwave, oven, fridge, cup-

word), and POS+suffix+embedding for the second board, mixer, blender, plug, mittens, grater, pot,

model (resulting a 504-d feature vector for each apron, cabinet, tape, apartment

word). Cluster 14: missile, jet, bomb, rocket, drill

Cluster 15: bouquet, thimble, umbrella, accordion,

Text-only baseline. The 20 concrete representa-

cake, scissors, wrench, jar, pliers, candle, penguin,

tive words were sand, seagull, snake, snowsuit,

frog, doll, bottle, shield, pig, card

spaghetti, stairs, strawberry, tiger, tomato, tooth-

Cluster 16: zucchini, beets, cabbage, celery,

brush, tractor, tree, turtle, umbrella, vase, water,

cauliflower, wheelbarrow, parsley, tongs, shelves

comb, tire, firetruck, tv.

Cluster 17: grapefruit, tangerine, colander, clamp,

The 20 abstract representative words were would,

snail, cantaloupe, pineapple, grape, pear, lemon,

if, though, because, somewhat, enough, as, could,

eggplant, mandarin, garlic, nectarine, basket,

how, yet, normal, ago, so, very, the, really, then,

corkscrew, pyramid, pumpkin, bin, sack, lime, cork,

abstract, a, an.

orange

A.1.4 Object Localization Cluster 18: octopus, kite, crocodile, squid, bal-

To extract Class Activation Mappings, we used the loon, butterfly, whale

CAM module from the torchcam package. Cluster 19: surfboard, swimsuit, board, rope

Cluster 20: hose, hut

A.2 Cluster lists Cluster 21: skateboard, pipe, saxophone, helmet,

Following is a list of clusters created by different escalator, barrel, broom

clustering algorithm, in a specific execution. Cluster 22: shotgun, seal, dolphin, car, hoe, ham-

ster, wheel, house

A.2.1 Our model Cluster 23: sailboat, canoe, swan, raft, boat, yacht,

Words are sorted by P (c|w). duck, willow, ship, drum

Cluster 24: tortoise, dog, cat, tiger, cheetah

Cluster 1: doorknob, canary Cluster 25: hyena

3830Cluster 26: buckle, mug, ruler, envelope, bag, belt, Cluster 4: lantern, chandelier, candle, tripod, pro-

cup, camel, pencil, spider, cart, saucer, closet, tri- jector, lamp

pod, carpet Cluster 5: sailboat, submarine, raft, yacht, canoe,

Cluster 27: crowbar, bathtub, toilet, drain, sink, boat, pier, ship

faucet, marble, mirror, basement, tank, bucket, Cluster 6: avocado, walnut, pineapple, grapefruit,

door, razor, mat coconut, olive, lime, lemon

Cluster 28: toad, mouse, keyboard, key, desk, type- Cluster 7: mittens, doll, slippers, pajamas, neck-

writer, stereo, rat, bookcase, telephone, anchor, ra- lace, socks

dio Cluster 8: rock, cottage, tent, gate, house,

Cluster 29: buzzard, chickadee, finch, wood- brick, pyramid, rocker, door, bluejay, shed, bench,

pecker, grasshopper, worm, sparrow, blackbird, vul- skyscraper, bolts, hut, mirror, key, building, bar-

ture, parakeet, bluejay, hawk, robin, dagger, perch, rel, tape, inn, apartment, cabinet, book, marble,

falcon, stork, peacock, pelican, owl, crow, pigeon, drum, shack, umbrella, crane, bureau, garage, shell,

seagull, flamingo, eagle, vine, birch, beaver, pheas- basement, fan, cathedral, fence, chapel, stone, drill,

ant, raven, goose, squirrel, seaweed, ant, emu, dove, telephone, comb, radio, shield, church, anchor, mi-

cage, crown, shovel croscope, clock, level, board, football, chain, cabin,

Cluster 30: horse, racquet, saddle, pony, buggy, wall, barn, bridge

bat, football, wagon, sword, donkey, ball, fox Cluster 9: elk, bison, pheasant, beaver, deer,

Cluster 31: beetle moose, goose

Cluster 32: zebra, ostrich, elk, deer, lion, pen, pin, Cluster 10: pig, cow, sheep, goat, ostrich, emu,

rifle, bolts calf, buffalo, bull

Cluster 33: bracelet, fawn, slippers, socks, shoes, Cluster 11: elevator, train, whistle, limousine, es-

tap, boots, strainer, jeans, ring calator, subway, bus, taxi, trolley

Cluster 34: whistle, cathedral, wand, thermometer, Cluster 12: groundhog, parakeet, fawn, tortoise,

peg, hook, goldfish, lantern, wall, urn, caterpillar, goldfish, porcupine, fox, cheetah, gorilla, flea, rab-

chandelier, robe, leopard bit, mink, peacock, rooster, mouse, duck, turtle,

Cluster 35: motorcycle, bike, tractor, truck, trailer, squirrel, dog, bear, alligator, rat, raccoon, cat,

tricycle, scooter, jeep, limousine, garage, van, tent, flamingo, tiger, hamster, penguin

crane Cluster 13: razor, pliers, scissors, crowbar, knife,

Cluster 36: baton, revolver, violin, tie, bow, cock- screwdriver, machete

roach, elevator, mink, necklace, blouse, trousers, Cluster 14: keyboard, violin, trumpet, piano, saxo-

vest, scarf, skirt, gun, gown, dress, shirt, sweater, phone, guitar, cello, accordion, trombone, harmon-

bra, cap, jacket, coat, cape ica

Cluster 37: bench, cannon Cluster 15: rocket, helicopter, jet, bomb, missile,

Cluster 38: unicycle, groundhog ambulance, airplane

Cluster 39: pistol, buffalo Cluster 16: jeans, leotards, boots, blouse, skirt,

Cluster 40: clam, pickle, raisin, raspberry, napkin, bracelet, shirt, swimsuit, shoes, trousers, dress,

submarine, fork, coconut, strawberry, bread, spoon, pants, sweater, bra

blueberry, radish, knife, biscuit, cloak, spear, whip, Cluster 17: cauliflower, spinach, cabbage, broc-

avocado, carrot, cottage, turkey, bowl coli, peas, garlic, radish, lettuce, eggplant, cucum-

Cluster 41: lamb, sheep, raccoon, cow, goat, ber, onions, zucchini, parsley, celery, beans, aspara-

rooster, calf, ox, hatchet, bull, moose, bison, barn, gus, beets

rabbit, shed, shack Cluster 18: sofa, drapes, typewriter, napkin, toi-

Cluster 42: screwdriver, pajamas, comb, hammer, let, chair, bathtub, bedroom, bed, doorknob, stool,

brush, alligator desk, carpet, table, dresser, couch, stereo, curtains

Cluster 19: strainer, colander

A.2.2 word2vec Cluster 20: kite, balloon, willow

Cluster 1: leopard, hyena, crocodile, canary, lion Cluster 21: corn, pickle, bread, turkey, biscuit,

Cluster 2: lobster, tuna, clam, octopus, whale, dish, cheese, cake, lamb, pepper, pie, rice, chicken

squid, shrimp, seaweed, salmon, crab, dolphin Cluster 22: frog, spider, toad, ant, worm, cock-

Cluster 3: mat, cage roach, snail, butterfly, beetle, hornet, grasshopper,

3831caterpillar Cluster 3: pheasant, woodpecker, parakeet, ostrich,

Cluster 23: cannon, bullet, gun, pistol, rifle, re- caterpillar

volver, shotgun Cluster 4: stork, fawn, hatchet, hyena, raccoon,

Cluster 24: bag, kettle, mug, envelope, sack, urn, grasshopper

basket, cup, pot, box, card, plate, jar, bucket, bou- Cluster 5: pliers, toaster, mittens, strainer, blender,

quet, bin, ashtray, tray, bottle freezer, saucer

Cluster 25: bowl, spatula, spoon, blender, ladle, Cluster 6: crow, eagle, raven, hawk, dove, pig,

grater, tongs, pan, mixer, saucer sheep, fox, dog, cat, peacock, camel, bear, ele-

Cluster 26: sleigh, trailer, buggy, wheelbarrow, phant, deer, buffalo, rabbit, dolphin, frog, cow, elk,

van, wagon, tractor, truck, cart, jeep lion, moose, donkey, beaver, squirrel, rat, mouse,

Cluster 27: plum, cherry, birch, cork salmon, goat, calf, whale, leopard, bison, horse,

Cluster 28: finch, falcon, pigeon, hawk, pelican, bull, crocodile

raven, seagull, stork, buzzard, vulture, chickadee, Cluster 7: hook, tape, pipe, pyramid, mat, chain,

sparrow, robin, crow, owl, woodpecker, blackbird, drill, balloon, ball, kite, cap, ring, belt, umbrella,

eagle, swan bin, bucket, barrel, basket, bench, gate, wheel, plug,

Cluster 29: racquet, bike, skateboard, skis, unicy- key, stereo, mixer, baton, envelope

cle, sled, scooter, motorcycle, helmet, surfboard, Cluster 8: scooter, sleigh, shawl, sled

wheel, saddle, tricycle, car Cluster 9: raisin, raspberry, beets

Cluster 30: sword, ruler, dagger, spear, baton Cluster 10: birch, grape, pear, plum, apple, cherry,

Cluster 31: bookcase, shelves, closet, fridge, cup- orange, tomato, peach, lemon, peas, beans, pepper,

board carrot, lime, rice, potato, olive, garlic, corn, walnut,

Cluster 32: thermometer, microwave, dishwasher, strawberry, cheese, coconut, mandarin, cabbage,

toaster, skillet, stove, oven, freezer banana, vine, willow, onions

Cluster 33: vest, coat, jacket, parka, gloves Cluster 11: pelican, chickadee, porcupine, cucum-

Cluster 34: plug, thimble, tap, seal, dove, sink, ber, cockroach, tortoise

drain Cluster 12: trailer, hose, saddle, tractor, ambu-

Cluster 35: shawl, scarf, cap, cloak, veil, gown, lance, wagon, taxi, bus, submarine, subway, train,

cape, robe, wand, apron elevator, limousine, bike, trolley, motorcycle, jeep,

Cluster 36: hammer, broom, shovel, pencil, truck, yacht, tank, sofa, rocket, missile, cart, helmet

hatchet, brush, paintbrush, hoe, wrench, bat, pen Cluster 13: skillet, ladle

Cluster 37: clamp

Cluster 14: level, building, bridge, pier, house,

Cluster 38: pumpkin, vine, grape, raspberry, car-

cabin, shield, lantern, marble, sink, apartment, hut,

rot, mandarin, strawberry, pear, banana, apple,

basement, wall, cottage, box, rock, door, table,

turnip, nectarine, cantaloupe, orange, mushroom,

cage, fence, brick, lamp, telephone, drain, shed,

peach, cranberry, tomato, tangerine, raisin, blue-

garage, stone, skyscraper, barn, church, cathedral,

berry, potato

chapel

Cluster 39: faucet, tank, pipe, hose

Cluster 15: buggy

Cluster 40: donkey, ox, pony, horse, camel, ele-

Cluster 16: revolver, rifle, pistol, shotgun, cannon,

phant, zebra, giraffe

gun, bullet

Cluster 41: bow, belt, tie, stick, buckle, cushion,

Cluster 17: seagull, sailboat, seaweed

hook, peg, perch, ring, tack, pin, ball, corkscrew,

Cluster 18: urn

fork, whip, rope, crown

Cluster 19: wheelbarrow, doorknob

A.2.3 BERT Cluster 20: ruler, shovel, stove, keyboard, micro-

Cluster 1: crane, vulture, finch, pigeon, owl, spar- scope, colander, cupboard, bowl, dish, skis, tie,

row, snail, octopus, bat, lobster, crab, mushroom, pie, bread, cake, toilet, stool, cushion, mirror, tap,

shrimp, shell, squid, perch, hornet, spider, worm, cabinet, carpet, fork, comb, apron

butterfly, turtle, toad Cluster 21: skateboard, surfboard, swimsuit

Cluster 2: anchor, tack, bow, raft, doll, tray, knife, Cluster 22: bluejay, blackbird, nectarine, grape-

jet, airplane, canoe, car, helicopter, boat, ship, nap- fruit, tangerine, eggplant, asparagus, cauliflower,

kin, book, board, card, desk, chair, bed, sword, pineapple, cranberry, blueberry, goldfish, ground-

bomb, dagger, spear, rope, bag, pencil hog, mink, broccoli

3832You can also read